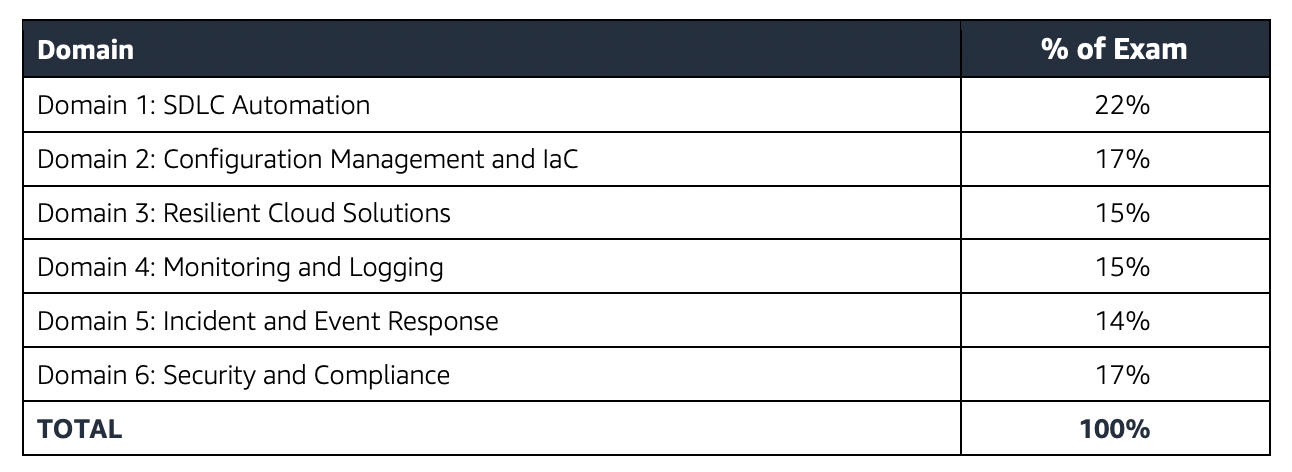

AWS Certified DevOps Engineer – Professional (DOP-C02) Exam Learning Path

- The AWS Certified DevOps Engineer – Professional (DOP-C02) exam, released in March 2023, is the updated version of the DevOps Engineer – Professional (DOP-C01) exam.

- I recently took the new exam, and DOP-C02 is quite similar to DOP-C01, with the addition of new services and features.

AWS Certified DevOps Engineer – Professional (DOP-C02) Exam Content

- AWS Certified DevOps Engineer – Professional (DOP-C02) exam is intended for individuals who perform a DevOps engineer role and focuses on provisioning, operating, and managing distributed systems and services on AWS.

- DOP-C02 validates a candidate's ability to:

- Implement and manage continuous delivery systems and methodologies on AWS

- Implement and automate security controls, governance processes, and compliance validation

- Define and deploy monitoring, metrics, and logging systems on AWS

- Implement systems that are highly available, scalable, and self-healing on the AWS platform

- Design, manage, and maintain tools to automate operational processes

Refer to AWS Certified DevOps Engineer – Professional Exam Guide

AWS Certified DevOps Engineer – Professional (DOP-C02) Exam Resources

- Online Courses

- Stephane Maarek – AWS Certified DevOps Engineer Professional

- Adrian Cantrill – AWS Certified DevOps Engineer – Professional

- Adrian Cantrill – AWS Professional Bundle

- Whizlabs – AWS Certified DevOps Engineer Professional Course

- Coursera – DevOps on AWS Specialization

- Practice tests

AWS Certified DevOps Engineer – Professional (DOP-C02) Exam Summary

- Professional exams are tough, lengthy, and tiresome. Most questions and answer options involve a lot of prose and a lot of reading, so be sure you are prepared and manage your time well.

- Each solution involves multiple AWS services.

- The DOP-C02 exam has 75 questions to be answered in 170 minutes. Only 65 affect your score; the other 10 are unscored questions that AWS uses to evaluate them for future use.

- DOP-C02 exam includes two types of questions, multiple-choice and multiple-response.

- DOP-C02 has a scaled score between 100 and 1,000. The scaled score needed to pass the exam is 750.

- Most questions touch on multiple AWS services.

- Professional exams currently cost $300 plus tax.

- You can get an additional 30 minutes if English is your second language by requesting Exam Accommodations. It might not be needed for Associate exams but is helpful for Professional and Specialty ones.

- As always, mark questions for review, move on, and come back to them after you have gone through all the others.

- As always, having a rough architecture or mental picture of the setup helps you focus on what the question is really asking. Trust me, you will be able to eliminate 2 answers for sure and then need to focus on only the other two. Compare those 2 answers to find where they differ; that should help you reach the right answer or at least give you a 50% chance of getting it right.

- AWS exams can be taken either at a test center or online. I prefer to take them online as it provides a lot of flexibility. Just make sure you have a proper place to take the exam, with no disturbances and nothing around you.

- Also, if you are taking the AWS Online exam for the first time try to join at least 30 minutes before the actual time as I have had issues with both PSI and Pearson with long wait times.

AWS Certified DevOps Engineer – Professional (DOP-C02) Exam Topics

- AWS Certified DevOps Engineer – Professional exam covers a lot of concepts and services related to Automation, Deployments, Disaster Recovery, HA, Monitoring, Logging, and Troubleshooting. It also covers security and compliance related topics.

Management & Governance tools

- CloudFormation

- provides an easy way to create and manage a collection of related AWS resources and to provision and update them in an orderly and predictable fashion.

- Make sure you have gone through and executed a CloudFormation template to provision AWS resources.

- CloudFormation Concepts cover

- Templates act as a blueprint for provisioning of AWS resources

- Stacks are collections of resources managed as a single unit; the resources are created, updated, and deleted by creating, updating, and deleting the stack.

- Change Sets present a summary or preview of the proposed changes that CloudFormation will make when a stack is updated.

- Nested stacks are stacks created as part of other stacks.

- CloudFormation template anatomy includes sections such as Resources (the only required section), Parameters, Mappings, Conditions, and Outputs.

- CloudFormation supports multiple features

- Drift detection enables you to detect whether a stack’s actual configuration differs, or has drifted, from its expected configuration.

- Termination protection helps prevent a stack from being accidentally deleted.

- Stack policy can prevent stack resources from being unintentionally updated or deleted during a stack update.

- StackSets help create, update, or delete stacks across multiple accounts and Regions with a single operation.

- Helper scripts (e.g., cfn-init and cfn-signal) combined with creation policies let CloudFormation wait for a success signal, for example after software has been installed on an instance, before marking a resource as complete.

- Update policies support rolling (AutoScalingRollingUpdate) and replacing (AutoScalingReplacingUpdate) updates for Auto Scaling groups.

- Deletion policies help retain resources, or back them up with a snapshot, during stack deletion.

- Custom resources can be configured for use cases CloudFormation does not support natively, e.g., retrieving AMI IDs or interacting with external services.

- Understand CloudFormation Best Practices esp. Nested Stacks and logical grouping
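As a minimal sketch of the template anatomy and stack protections described above, the Python snippet below builds a template with Parameters, Resources, and Outputs, sets a `DeletionPolicy` of `Retain` on a bucket, and writes a stack policy that allows updates but denies replacing that bucket. The logical ID `LogBucket`, the parameter `BucketSuffix`, and the bucket naming scheme are hypothetical; the snippet only prints JSON and does not call CloudFormation.

```python
import json

# Hypothetical template: one S3 bucket that survives stack deletion.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "BucketSuffix": {"Type": "String", "Description": "Suffix for the bucket name"}
    },
    "Resources": {  # the only required section
        "LogBucket": {
            "Type": "AWS::S3::Bucket",
            "DeletionPolicy": "Retain",  # keep the bucket when the stack is deleted
            "Properties": {"BucketName": {"Fn::Sub": "example-logs-${BucketSuffix}"}},
        }
    },
    "Outputs": {
        "LogBucketArn": {"Value": {"Fn::GetAtt": ["LogBucket", "Arn"]}}
    },
}

# Stack policy: allow all updates, but an explicit Deny blocks replacing LogBucket.
stack_policy = {
    "Statement": [
        {"Effect": "Allow", "Action": "Update:*", "Principal": "*", "Resource": "*"},
        {
            "Effect": "Deny",
            "Action": "Update:Replace",
            "Principal": "*",
            "Resource": "LogicalResourceId/LogBucket",
        },
    ]
}

if __name__ == "__main__":
    print(json.dumps(template, indent=2))
    print(json.dumps(stack_policy, indent=2))
```

The explicit Deny wins over the broad Allow, which is why this pattern protects a single critical resource without blocking other stack updates.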

- Elastic Beanstalk

- helps to quickly deploy and manage applications in the AWS Cloud without having to worry about the infrastructure that runs those applications.

- Understand Elastic Beanstalk overall – Applications, Versions, and Environments

- Deployment strategies with their advantages and disadvantages

- OpsWorks

- is a configuration management service that helps to configure and operate applications in a cloud enterprise by using Chef.

- Understand OpsWorks overall – stacks, layers, recipes

- Understand OpsWorks Lifecycle events esp. the Configure event and how it can be used.

- Understand OpsWorks Deployment Strategies

- Know OpsWorks auto-healing and how to be notified for it.

- Understand CloudFormation vs Elastic Beanstalk vs OpsWorks

- AWS Organizations

- Difference between Service Control Policies and IAM Policies

- An SCP sets the maximum permissions that users and roles in an account can have, but it does not grant any permissions itself; the user still needs an IAM policy that explicitly allows the action.
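The SCP-versus-IAM rule can be sketched as an intersection check. This is a simplified model, assuming only action names matter: it ignores resources, conditions, permissions boundaries, and resource-based policies, and it matches case-sensitively even though real IAM action matching is case-insensitive. The policy contents are hypothetical.

```python
from fnmatch import fnmatchcase


def _matches(patterns, action):
    """True if the action matches any wildcard pattern such as 's3:*' or 'ec2:Describe*'."""
    return any(fnmatchcase(action, pattern) for pattern in patterns)


def is_allowed(action, scp_allows, iam_allows, explicit_denies=()):
    """Simplified model: an explicit deny anywhere wins; otherwise the action must be
    allowed by BOTH the SCP (the permission ceiling) and an identity-based IAM policy."""
    if _matches(explicit_denies, action):
        return False
    return _matches(scp_allows, action) and _matches(iam_allows, action)


scp = ["s3:*", "ec2:Describe*"]  # hypothetical SCP allow list
iam = ["s3:GetObject", "ec2:*"]  # hypothetical IAM policy for a user

for action in ["s3:GetObject", "s3:PutObject", "ec2:DescribeInstances", "ec2:TerminateInstances"]:
    print(action, is_allowed(action, scp, iam))
```

`ec2:TerminateInstances` is denied even though the IAM policy allows `ec2:*`, because the SCP ceiling does not include it.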

- Systems Manager

- AWS Systems Manager and its various capabilities, such as Parameter Store and Patch Manager

- Parameter Store provides secure, scalable, centralized, hierarchical storage for configuration data and secrets. It does not support automatic secret rotation; use Secrets Manager for that.

- Session Manager provides secure and auditable instance management without the need to open inbound ports, maintain bastion hosts, or manage SSH keys.

- Patch Manager helps automate the process of patching managed instances with both security-related and other types of updates.

- CloudWatch

- supports monitoring, logging, and alerting.

- CloudWatch logs can be used to monitor, store, and access log files from EC2 instances, CloudTrail, Route 53, and other sources. You can create metric filters over the logs.

- CloudWatch Subscription Filters can be used to send logs to Kinesis Data Streams, Lambda, or Kinesis Data Firehose.

- EventBridge (CloudWatch Events) is a serverless event bus service that makes it easy to connect applications with data from a variety of sources.

- EventBridge (CloudWatch Events) rules can run on a schedule, using cron or rate expressions, to trigger targets periodically.

- CloudWatch unified agent helps collect metrics and logs from EC2 instances and on-premises servers and push them to CloudWatch.

- CloudWatch Synthetics helps create canaries, configurable scripts that run on a schedule, to monitor your endpoints and APIs
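To illustrate how a metric filter turns log lines into a metric, here is a minimal sketch of a count-style filter using a term pattern, where every space-separated term must appear in the event and matching is case-sensitive. It is a simplified model that uses plain substring matching and ignores quoted terms, OR/exclusion operators, and JSON or space-delimited patterns; the log lines are made up.

```python
def matches_term_pattern(pattern, message):
    """Simplified term pattern: every space-separated term must appear (AND), case-sensitive."""
    return all(term in message for term in pattern.split())


def metric_filter_value(pattern, log_events, value=1):
    """Emit `value` for each matching event and sum, like a count-style metric filter."""
    return sum(value for event in log_events if matches_term_pattern(pattern, event))


# Hypothetical log events.
events = [
    "2024-01-01T10:00:00Z ERROR Timeout calling payment API",
    "2024-01-01T10:00:05Z INFO request served",
    "2024-01-01T10:00:09Z ERROR Timeout calling inventory API",
    "2024-01-01T10:00:12Z error timeout (lower case, not matched)",
]

print(metric_filter_value("ERROR Timeout", events))  # 2
```

An alarm on the resulting metric is the usual next step for turning log patterns into notifications.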

- CloudTrail

- for audit and governance

- With AWS Organizations, an organization trail can log events from all member accounts to a central account.

- Config is a fully managed service that provides AWS resource inventory, configuration history, and configuration change notifications to enable security, compliance, and governance.

- supports AWS managed as well as custom rules, which are evaluated periodically or when a configuration change occurs, to check compliance and trigger automatic remediation

- Conformance pack is a collection of AWS Config rules and remediation actions that can be easily deployed as a single entity in an account and a Region or across an organization in AWS Organizations.
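The core of a custom Config rule is a function that maps a configuration item to a compliance result. The sketch below checks that EBS volumes are encrypted; it is pure logic only. The `configuration` → `encrypted` field path is an assumption about the configuration item shape, and a real custom rule (typically a Lambda function) would also report its results back to Config, which is not shown here.

```python
def evaluate_volume(configuration_item):
    """Hypothetical custom-rule logic: EBS volumes must be encrypted.
    The 'configuration' -> 'encrypted' path is an assumed item shape."""
    if configuration_item.get("resourceType") != "AWS::EC2::Volume":
        return "NOT_APPLICABLE"
    encrypted = configuration_item.get("configuration", {}).get("encrypted", False)
    return "COMPLIANT" if encrypted else "NON_COMPLIANT"


# Hypothetical configuration items.
items = [
    {"resourceType": "AWS::EC2::Volume", "resourceId": "vol-aaa", "configuration": {"encrypted": True}},
    {"resourceType": "AWS::EC2::Volume", "resourceId": "vol-bbb", "configuration": {"encrypted": False}},
    {"resourceType": "AWS::S3::Bucket", "resourceId": "example-bucket", "configuration": {}},
]

for item in items:
    print(item["resourceId"], evaluate_volume(item))
```

For common checks like this one, an AWS managed rule is usually preferable to writing a custom rule.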

- Control Tower

- to set up, govern, and secure a multi-account environment

- strongly recommended guardrails cover EBS encryption

- Service Catalog

- allows organizations to create and manage catalogs of IT services that are approved for use on AWS, which end users can launch with minimal permissions.

- Trusted Advisor

- helps with cost optimization and service limits in addition to security, performance, and fault tolerance.

- AWS Health Dashboard is the single place to learn about the availability and operations of AWS services.

Developer Tools

- Know AWS Developer tools

- CodeCommit is a secure, scalable, fully-managed source control service that helps to host secure and highly scalable private Git repositories.

- can help handle deployments of code to different environments using the same repository and different branches.

- CodeBuild is a fully managed build service that compiles source code, runs tests, and produces software packages that are ready to deploy.

- CodeDeploy helps automate code deployments to EC2 instances, on-premises instances, Lambda functions, and ECS services.

- Understand CodeDeploy lifecycle event hooks

- Understand CodeDeploy deployment configurations (hint: canary and linear deployments are supported for Lambda and ECS)

- Understand CodeDeploy redeploy and rollbacks
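To make canary versus linear concrete, the sketch below computes when traffic shifts for configurations shaped like CodeDeploy's predefined Lambda ones, such as `CodeDeployDefault.LambdaCanary10Percent5Minutes` and `CodeDeployDefault.LambdaLinear10PercentEvery1Minute`. It is a model, not CodeDeploy's scheduler, and it assumes the first increment shifts at minute 0.

```python
def canary_schedule(percent, interval_minutes):
    """Canary: shift `percent` first, then all remaining traffic after one interval."""
    return [(0, percent), (interval_minutes, 100)]


def linear_schedule(percent, interval_minutes):
    """Linear: shift `percent` more at every interval until 100% is reached."""
    if percent <= 0:
        raise ValueError("percent must be positive")
    schedule, shifted, minute = [], 0, 0
    while shifted < 100:
        shifted = min(shifted + percent, 100)
        schedule.append((minute, shifted))
        minute += interval_minutes
    return schedule


print(canary_schedule(10, 5))       # [(0, 10), (5, 100)]
print(linear_schedule(10, 1)[-1])   # (9, 100)
```

Canary exposes a small slice and then jumps to 100%, while linear moves in equal steps; in both cases a CloudWatch alarm during the shift can trigger an automatic rollback.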

- CodePipeline is a fully managed continuous delivery service that helps automate the release pipelines for fast and reliable application and infrastructure updates.

- CodePipeline pipeline structure (hint: actions in a stage with the same runOrder run in parallel)

- Understand how to configure notifications on events and failures

- CodePipeline supports Manual Approval
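The runOrder hint can be sketched as grouping a stage's actions into batches: actions sharing a runOrder run in parallel, and lower values run first. This assumes a missing runOrder defaults to 1; the action names are hypothetical.

```python
from itertools import groupby


def execution_batches(actions):
    """Group a stage's actions by runOrder: same value -> parallel, lower value -> earlier."""
    ordered = sorted(actions, key=lambda a: a.get("runOrder", 1))
    return [
        [a["name"] for a in group]
        for _, group in groupby(ordered, key=lambda a: a.get("runOrder", 1))
    ]


test_stage = [
    {"name": "UnitTests", "runOrder": 1},
    {"name": "Lint", "runOrder": 1},
    {"name": "IntegrationTests", "runOrder": 2},
    {"name": "SecurityScan"},  # no runOrder, treated as 1
]

print(execution_batches(test_stage))
```

Here the unit tests, lint, and security scan run together, and the integration tests start only after all three finish.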

- CodeArtifact is a fully managed artifact repository service that makes it easy for organizations of any size to securely store, publish, and share software packages used in their software development process.

- CodeGuru provides intelligent recommendations to improve code quality and identify an application’s most expensive lines of code. CodeGuru Reviewer helps improve code quality, and CodeGuru Profiler helps optimize application performance.

- EC2 Image Builder helps to automate the creation, management, and deployment of customized, secure, and up-to-date server images that are pre-installed and pre-configured with software and settings to meet specific IT standards.

Disaster Recovery

- Disaster recovery is mainly covered as a part of Resilient Cloud Solutions.

- The Disaster Recovery whitepaper is outdated, but make sure you understand the differences between the strategies and how each is implemented, especially pilot light and warm standby, with respect to RTO and RPO.

- Compute

- Make components available in an alternate region:

- Backup and Restore using either snapshots or AMIs that can be restored.

- Keep minimal, low-scale capacity running that can be scaled up once failover happens.

- Use fully running compute in an active-active configuration with health checks.

- Use CloudFormation to create and scale infrastructure as needed.

- Storage

- S3 and EFS support cross-region replication

- DynamoDB supports Global tables for multi-master, active-active inter-region storage needs.

- Aurora Global Database provides cross-region read replicas and failover capabilities.

- RDS supports cross-region read replicas, which can be promoted to a standalone primary in case of a disaster. The failover can be automated using Route 53, CloudWatch, and Lambda functions.

- Network

- Route 53 failover routing with health checks to failover across regions.

- CloudFront Origin Groups support primary and secondary endpoints with failover.
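The cost-versus-RTO trade-off between DR strategies can be sketched as "pick the cheapest strategy that meets the RTO". The strategies are ordered from cheapest to most expensive, but the RTO ceilings in minutes are illustrative placeholders standing in for "hours", "tens of minutes", "minutes", and "near real time"; they are assumptions, not published AWS numbers.

```python
# Ordered cheapest -> most expensive. RTO ceilings (minutes) are ILLUSTRATIVE
# placeholders for the usual order-of-magnitude tiers, not AWS figures.
STRATEGIES = [
    ("backup and restore", 24 * 60),
    ("pilot light", 60),
    ("warm standby", 10),
    ("multi-site active-active", 1),
]


def cheapest_strategy(required_rto_minutes):
    """Return the cheapest strategy whose assumed RTO ceiling meets the requirement."""
    for name, rto_ceiling in STRATEGIES:
        if rto_ceiling <= required_rto_minutes:
            return name
    return None  # nothing in the table is fast enough


for rto in (48 * 60, 90, 30, 1):
    print(rto, cheapest_strategy(rto))
```

The same reasoning applies to RPO: a tighter requirement pushes you toward continuous replication and more always-on capacity, which costs more.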

Networking & Content Delivery

- Networking is covered very lightly.

- VPC – Virtual Private Cloud

- Security Groups, NACLs

- NACLs are stateless and need to open ephemeral ports for response traffic.

- VPC Gateway Endpoints to provide access to S3 and DynamoDB

- VPC Interface Endpoints or PrivateLink provide access to a variety of services like SQS, Kinesis, or Private APIs exposed through NLB.

- VPC Peering to enable communication between VPCs within the same or different regions.

- VPC Peering does not support overlapping CIDRs while PrivateLink does as only the endpoint is exposed.

- VPC Flow Logs to track network traffic and can be published to CloudWatch Logs, S3, or Kinesis Data Firehose.

- NAT Gateway is a managed NAT service that, compared to a NAT instance, provides better availability and higher bandwidth and requires less administrative effort.
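Two of the VPC points above, stateless NACLs needing ephemeral ports and peering rejecting overlapping CIDRs, can be checked with the standard `ipaddress` module. This is a simplified model: NACL rules are evaluated in ascending rule number, the first match decides, and anything unmatched is denied; protocol is ignored. The rules, addresses, and CIDRs are hypothetical.

```python
import ipaddress


def nacl_decision(rules, ip, port):
    """Simplified NACL: lowest rule number first, first match wins, default deny.
    Each rule is (rule_number, cidr, port_from, port_to, action); protocol is ignored."""
    address = ipaddress.ip_address(ip)
    for _rule_number, cidr, port_from, port_to, action in sorted(rules):
        if address in ipaddress.ip_network(cidr) and port_from <= port <= port_to:
            return action
    return "deny"  # the implicit '*' rule


outbound_443_only = [(100, "0.0.0.0/0", 443, 443, "allow")]
outbound_with_ephemeral = outbound_443_only + [(110, "0.0.0.0/0", 1024, 65535, "allow")]

# Response from a web server back to a client's ephemeral port.
print(nacl_decision(outbound_443_only, "203.0.113.10", 50000))        # deny
print(nacl_decision(outbound_with_ephemeral, "203.0.113.10", 50000))  # allow


def can_peer(cidr_a, cidr_b):
    """VPC peering requires non-overlapping CIDR blocks."""
    return not ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


print(can_peer("10.0.0.0/16", "10.1.0.0/16"))    # True
print(can_peer("10.0.0.0/16", "10.0.128.0/17"))  # False
```

A security group would let the response out automatically because it is stateful; the NACL needs the explicit ephemeral-port rule.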


- Route 53

- Routing Policies

- focus on Weighted, Latency, and failover routing policies

- failover routing provides active-passive configuration for disaster recovery while the others are active-active configurations.
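Weighted and failover routing can be sketched in a few lines: with weighted routing each record receives weight / sum of weights of the responses (and if every weight is 0, records are treated equally), while failover routing answers with the primary only while its health check passes. The record names and hostnames are hypothetical, and latency-based routing is not modeled.

```python
from fractions import Fraction


def weighted_shares(weights):
    """Weighted routing: each record gets weight / sum(weights) of responses."""
    total = sum(weights.values())
    if total == 0:
        # All weights 0: records are returned with equal probability.
        return {name: Fraction(1, len(weights)) for name in weights}
    return {name: Fraction(w, total) for name, w in weights.items()}


def failover_answer(primary_healthy, primary="primary.example.com", secondary="secondary.example.com"):
    """Failover routing (active-passive): primary while healthy, otherwise secondary."""
    return primary if primary_healthy else secondary


print(weighted_shares({"blue": 90, "green": 10}))
print(failover_answer(primary_healthy=False))  # secondary.example.com
```

A 90/10 weighting like this is a common way to shift a slice of traffic to a new (green) environment during a blue/green deployment.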


- CloudFront

- fully managed, fast CDN service that speeds up the distribution of static, dynamic web or streaming content to end-users.

- Load Balancer – ELB, ALB and NLB

- ELB with Auto Scaling to provide scalable and highly available applications

- Understand ALB vs NLB and their use cases.

- Access logs must be explicitly enabled and are delivered only to S3.

- Direct Connect & VPN

- provide on-premises to AWS connectivity

- Understand Direct Connect vs VPN

- VPN can provide a cost-effective, quick failover for Direct Connect.

- VPN over Direct Connect provides a secure dedicated connection and requires a public virtual interface.

Security, Identity & Compliance

- AWS Identity and Access Management

- IAM Roles and use cases. Understand the process for provisioning cross-account access.

- IAM Web Identity & Federation

- IAM Best Practices
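Cross-account access comes down to two policies, which the sketch below builds as JSON. In the target account, the role's trust policy names the trusted account; in the trusted account, an identity policy lets specific users or roles call `sts:AssumeRole` on that role. The role also needs a permissions policy for what it may do in the target account, which is omitted here. The account IDs and role name are hypothetical placeholders.

```python
import json

# Hypothetical placeholders.
TRUSTED_ACCOUNT = "111122223333"  # account whose users need access
TARGET_ACCOUNT = "444455556666"   # account that owns the resources
ROLE_NAME = "CrossAccountDeployRole"

# 1. In the target account: trust policy attached to the role.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"AWS": f"arn:aws:iam::{TRUSTED_ACCOUNT}:root"},
        "Action": "sts:AssumeRole",
    }],
}

# 2. In the trusted account: identity policy for the users/roles allowed to assume it.
assume_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": "sts:AssumeRole",
        "Resource": f"arn:aws:iam::{TARGET_ACCOUNT}:role/{ROLE_NAME}",
    }],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(assume_policy, indent=2))
```

The caller then assumes the role through STS and works with the temporary credentials it returns.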

- AWS WAF

- protects against common attack techniques such as SQL injection and cross-site scripting (XSS); rule conditions can be based on IP addresses, HTTP headers, HTTP body, and URI strings.

- integrates with CloudFront, ALB, and API Gateway.

- AWS KMS – Key Management Service

- managed encryption service that allows the creation and control of encryption keys to enable data encryption.

- Secrets Manager

- helps protect secrets needed to access applications, services, and IT resources.

- Amazon GuardDuty

- is a threat detection service that continuously monitors the AWS accounts and workloads for malicious activity and delivers detailed security findings for visibility and remediation.

- AWS Security Hub is a cloud security posture management service that performs security best practice checks, aggregates alerts, and enables automated remediation.

- Firewall Manager helps centrally configure and manage firewall rules across the accounts and applications in AWS Organizations which includes a variety of protections, including WAF, Shield Advanced, VPC security groups, Network Firewall, and Route 53 Resolver DNS Firewall.

Storage

- Simple Storage Service – S3

- S3 Permissions & S3 Data Protection

- S3 bucket policies to control access to VPC Endpoints and provide cross-account access.

- S3 Storage Classes & Lifecycle policies

- covers S3 Standard, Standard-Infrequent Access, Intelligent-Tiering, and Glacier for archival, as well as lifecycle transitions and deletions for cost management.

- S3 supports cross-region replication. Understand how the process works in terms of permissions.

- S3 can be used for static website hosting and integrates with CloudFront to improve performance and latency.

- S3 Security

- S3 supports encryption using KMS

- S3 supports Object Lock and Glacier supports Vault Lock to prevent objects from being deleted, which compliance requirements often demand.

- S3 supports Same-Region and Cross-Region Replication for disaster recovery.

- S3 Access Logs enable tracking access requests to an S3 bucket.

- S3 Event Notifications trigger notifications when certain events happen in the bucket and support SNS, SQS, and Lambda as destinations. S3 needs permission, granted through the destination's resource policy, to publish to these services.
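Two of the S3 topics above are just JSON documents: a bucket policy that restricts access to a VPC endpoint via the `aws:SourceVpce` condition key, and a lifecycle configuration that transitions and expires objects. The sketch below builds both. The bucket name, endpoint ID, prefix, and day counts are hypothetical; note that such a Deny also blocks access from outside the VPC, including from the console.

```python
import json

BUCKET = "example-app-bucket"      # hypothetical bucket name
VPC_ENDPOINT_ID = "vpce-1a2b3c4d"  # hypothetical gateway endpoint ID

# Bucket policy: deny any request that does not arrive through the VPC endpoint.
bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "DenyAccessOutsideVpce",
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
        "Condition": {"StringNotEquals": {"aws:SourceVpce": VPC_ENDPOINT_ID}},
    }],
}

# Lifecycle configuration: tier objects under logs/ down over time, then expire them.
lifecycle_configuration = {
    "Rules": [{
        "ID": "tier-and-expire-logs",
        "Filter": {"Prefix": "logs/"},
        "Status": "Enabled",
        "Transitions": [
            {"Days": 30, "StorageClass": "STANDARD_IA"},
            {"Days": 90, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": 365},
    }]
}

print(json.dumps(bucket_policy, indent=2))
print(json.dumps(lifecycle_configuration, indent=2))
```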


- Elastic Block Store

- EBS Backup using snapshots for HA and Disaster recovery

- Data Lifecycle Manager can be used to automate the creation, retention, and deletion of snapshots taken to back up the EBS volumes.

- Elastic File System – EFS

- provides fully managed, scalable, serverless, shared, and cost-optimized file storage for use with AWS and on-premises resources.

- supports cross-region replication for disaster recovery

- supports storage classes and lifecycle management, similar to S3

- supports only Linux-based instances

Database

- DynamoDB

- provides a fully managed NoSQL database service with fast and predictable performance with seamless scalability.

- DynamoDB Auto Scaling can be used to handle peaks or bursts.

- DynamoDB Streams for tracking changes in real time.

- Global tables for multi-master, active-active inter-region storage needs.

- Global tables do not support strong global consistency

- DynamoDB Accelerator – DAX for seamless caching to reduce the load on DynamoDB for read-heavy requirements.

- RDS

- supports cross-region read replicas ideal for disaster recovery with low RTO and RPO.

- provides RDS Proxy for efficient database connection pooling

- RDS Multi-AZ vs Read Replicas

- Aurora

- fully managed, MySQL- and PostgreSQL-compatible, relational database engine

- Aurora Serverless provides on-demand, autoscaling configuration.

- Aurora Global Database consists of one primary AWS Region where the data is mastered, and up to five read-only, secondary AWS Regions.

- Aurora endpoints include the cluster (writer) endpoint and the reader endpoint.

- Understand DynamoDB Global Tables vs Aurora Global Databases

Compute

- EC2

- Auto Scaling provides the ability to ensure a correct number of EC2 instances are always running to handle the load of the application

- Auto Scaling lifecycle hooks enable performing custom actions by pausing instances as an ASG launches or terminates them.

- Blue/green deployments with Auto Scaling – With new launch configurations, new auto-scaling groups, or CloudFormation update policies.

- Lambda

- offers Serverless computing

- supports reserved concurrency limits to reduce a function's impact on other functions and downstream resources

- Lambda aliases support weighted traffic shifting between two versions, enabling canary deployments

- Reserved concurrency guarantees a number of concurrent instances for the function and also caps the function at that maximum

- Provisioned Concurrency

- provides greater control over the performance of serverless applications and helps keep functions initialized and hyper-ready to respond in double-digit milliseconds.

- supports Application Auto Scaling.
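Sizing concurrency is simple arithmetic: the concurrency a function needs is roughly its request rate times its average duration. The sketch below applies that estimate and shows how much of the load would exceed a reserved-concurrency cap and be throttled. The traffic numbers are hypothetical, and real traffic is burstier than a steady average.

```python
import math


def required_concurrency(requests_per_second, avg_duration_seconds):
    """Concurrency needed ~= request rate x average duration, rounded up."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def throttled_concurrency(requests_per_second, avg_duration_seconds, reserved):
    """Concurrent executions that would exceed a reserved-concurrency cap."""
    return max(0, required_concurrency(requests_per_second, avg_duration_seconds) - reserved)


print(required_concurrency(100, 0.5))       # 50
print(throttled_concurrency(100, 0.5, 40))  # 10
```

If those 50 concurrent executions must also start without cold starts, the same estimate is a reasonable starting point for provisioned concurrency.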

- Step Functions helps developers use AWS services to build distributed applications, automate processes, orchestrate microservices, and create data and machine learning (ML) pipelines.

- ECS – Elastic Container Service

- container management service that supports Docker containers

- supports two launch types

- EC2 and

- Fargate which provides the serverless capability

- ECR provides a fully managed, secure, scalable, reliable container image registry service. It supports lifecycle policies for images.
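An ECR lifecycle policy is a JSON document of prioritized rules. The sketch below writes a rule that keeps only the newest images and simulates which images an `imageCountMoreThan` rule would expire. The simulation is a simplified model that only sorts by push time, and the digests and dates are hypothetical placeholders.

```python
from datetime import datetime

# Lifecycle policy: expire everything beyond the 3 most recently pushed images.
lifecycle_policy = {
    "rules": [{
        "rulePriority": 1,
        "description": "Keep only the 3 most recent images",
        "selection": {"tagStatus": "any", "countType": "imageCountMoreThan", "countNumber": 3},
        "action": {"type": "expire"},
    }]
}


def images_to_expire(images, keep):
    """Simulate imageCountMoreThan: newest first, expire everything after `keep`."""
    newest_first = sorted(images, key=lambda img: img["pushedAt"], reverse=True)
    return [img["digest"] for img in newest_first[keep:]]


# Hypothetical images with placeholder digests.
images = [
    {"digest": "sha256:a1", "pushedAt": datetime(2024, 1, 1)},
    {"digest": "sha256:b2", "pushedAt": datetime(2024, 1, 2)},
    {"digest": "sha256:c3", "pushedAt": datetime(2024, 1, 3)},
    {"digest": "sha256:d4", "pushedAt": datetime(2024, 1, 4)},
    {"digest": "sha256:e5", "pushedAt": datetime(2024, 1, 5)},
]

keep = lifecycle_policy["rules"][0]["selection"]["countNumber"]
print(images_to_expire(images, keep))
```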

Integration Tools

- SQS in terms of loose coupling and scaling.

- Difference between SQS Standard and FIFO esp. with throughput and order

- SQS supports dead letter queues and redrive policy which specifies the source queue, the dead-letter queue, and the conditions under which SQS moves messages from the former to the latter if the consumer of the source queue fails to process a message a specified number of times.

- CloudWatch integration with SNS and Lambda for notifications.
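The redrive behavior described above can be sketched as a receive counter per message: each failed processing attempt leaves the message in the queue, and once its receive count reaches `maxReceiveCount` without a successful delete, SQS moves it to the dead-letter queue instead of redelivering it. The DLQ ARN and account ID are hypothetical, and the consumer loop is a model, not the SQS API.

```python
import json

# RedrivePolicy queue attribute (a JSON string); the DLQ ARN is hypothetical.
redrive_policy = json.dumps({
    "deadLetterTargetArn": "arn:aws:sqs:us-east-1:111122223333:orders-dlq",
    "maxReceiveCount": "3",
})


def consume(handler, max_receive_count):
    """Model one message: deliver it until the handler succeeds or the receive
    count reaches max_receive_count, after which it goes to the DLQ."""
    receive_count = 0
    while receive_count < max_receive_count:
        receive_count += 1
        if handler(receive_count):
            return "processed", receive_count
    return "moved to DLQ", receive_count


max_receives = int(json.loads(redrive_policy)["maxReceiveCount"])
print(consume(lambda attempt: False, max_receives))         # ('moved to DLQ', 3)
print(consume(lambda attempt: attempt == 2, max_receives))  # ('processed', 2)
```

A CloudWatch alarm on the DLQ's message count, notifying through SNS, is a common way to surface these poison messages.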

Analytics

- Kinesis

- for real-time data ingestion and analytics.

- Difference between Kinesis Data Streams and Kinesis Firehose

- Kinesis Data Firehose integrates with S3, Redshift, and OpenSearch.

- OpenSearch (Elasticsearch) provides a managed search solution.

Whitepapers

- Blue Green Deployments

- Disaster Recovery

- Architecting for the Cloud: Best Practices

- Building Fault-Tolerant Applications on AWS

- DevOps on AWS

- Disaster Recovery of On-Premises Application to AWS

- Disaster Recovery of Workloads on AWS

- Development and Test on AWS

- Deployment Options on AWS

AWS Certified DevOps Engineer – Professional (DOP-C02) Exam Day

- Make sure you are relaxed and get a good night’s sleep. The exam is not tough if you are well-prepared.

- If you are taking the AWS Online exam

- Try to join at least 30 minutes before the actual time as I have had issues with both PSI and Pearson with long wait times.

- The online verification process takes some time, and there are often glitches.

- Remember, you will not be allowed to take the exam if you are more than 30 minutes late.

- Make sure your desk is clear, with no wristwatches or external monitors; keep your phone away and make sure nobody can enter the room.

Finally, All the Best 🙂