Amazon Athena

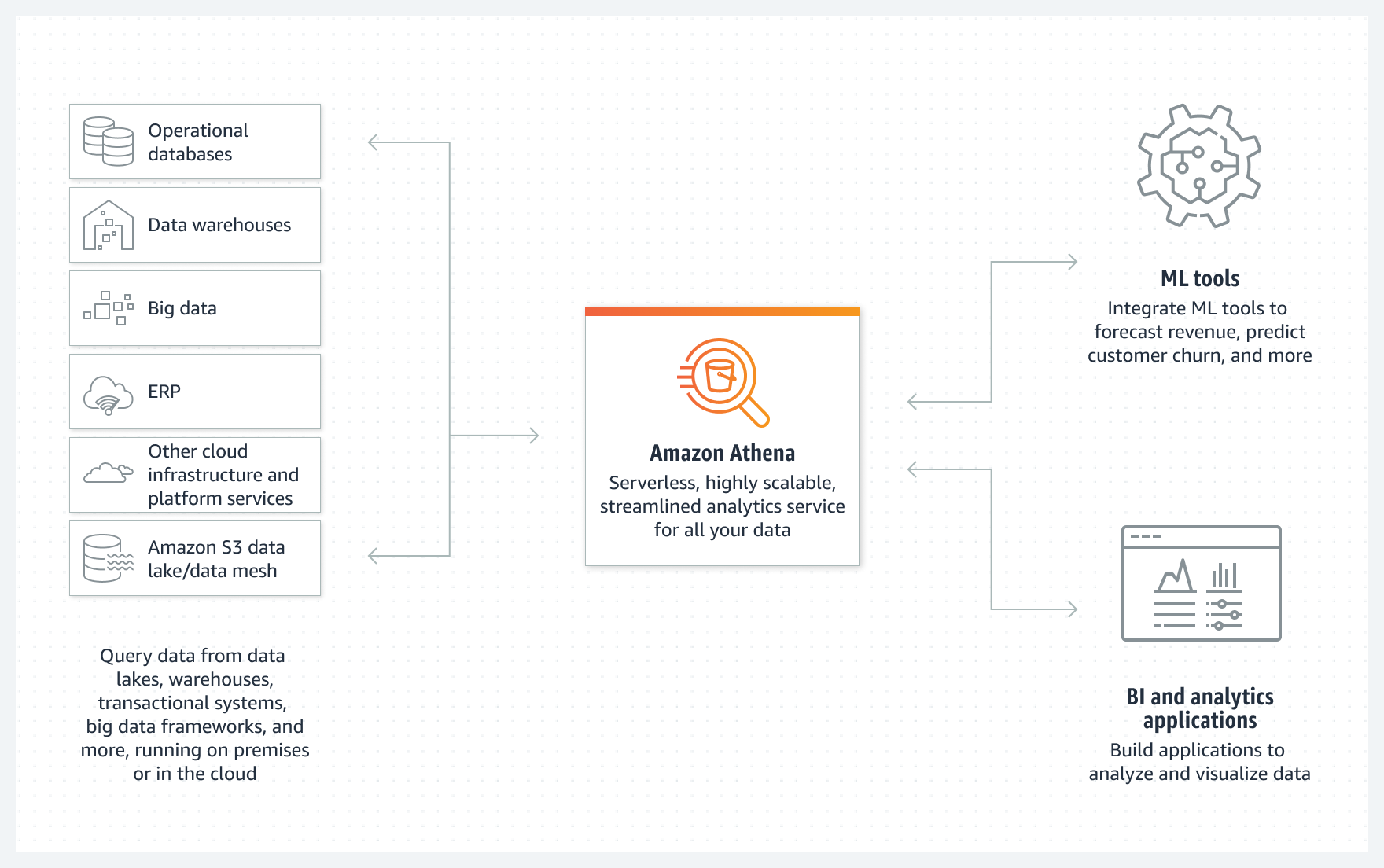

- Amazon Athena is a serverless, interactive analytics service built on open-source frameworks, supporting open-table and file formats.

- provides a simplified, flexible way to analyze petabytes of data in an S3 data lake and 30 data sources, including on-premises data sources or other cloud systems, using SQL or Python, without loading the data.

- is built on open-source Trino and Presto engines and Apache Spark frameworks, with no provisioning or configuration effort required.

- is highly available and runs queries using compute resources across multiple facilities, automatically rerouting queries if a particular facility is unreachable.

- can process unstructured, semi-structured, and structured datasets.

- integrates with QuickSight for visualizing the data or creating dashboards.

- supports various standard data formats, including CSV, TSV, JSON, ORC, Avro, and Parquet.

- supports compressed data in Snappy, Zlib, LZO, and GZIP formats. You can improve performance and reduce costs by compressing, partitioning, and using columnar formats.

- can handle complex analysis, including large joins, window functions, and arrays.

- uses a managed Glue Data Catalog to store information and schemas about the databases and tables that you create for the data stored in S3.

- uses schema-on-read technology, which means that table definitions are applied to the data in S3 when queries are executed. There’s no data loading or transformation required. Table definitions and schemas can be deleted without impacting the underlying data stored in S3.

- supports fine-grained access control with AWS Lake Formation which allows for centrally managing permissions and access control for data catalog resources in the S3 data lake.
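
As a rough illustration of the schema-on-read point above, the sketch below builds the DDL that would register a table over files already sitting in S3, and the statement that would later remove only the table definition. The helper names, database, table, columns, and bucket path are all hypothetical; the statements are only built as strings here, not sent to Athena.

```python
def create_table_ddl(database: str, table: str, columns: dict, location: str) -> str:
    """Build a CREATE EXTERNAL TABLE statement for Parquet files already in S3.

    The table is only metadata in the Data Catalog; no data is loaded or moved.
    """
    column_list = ",\n  ".join(
        f"`{name}` {sql_type}" for name, sql_type in columns.items()
    )
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS `{database}`.`{table}` (\n"
        f"  {column_list}\n"
        f")\n"
        f"STORED AS PARQUET\n"
        f"LOCATION '{location}'"
    )


def drop_table_ddl(database: str, table: str) -> str:
    """Build a DROP TABLE statement; for an external table this removes
    the definition only, and the files in S3 stay where they are."""
    return f"DROP TABLE IF EXISTS `{database}`.`{table}`"


# Hypothetical names, used only for illustration.
ddl = create_table_ddl(
    "logs_db",
    "access_logs",
    {"request_ip": "string", "status": "int"},
    "s3://example-bucket/access-logs/",
)
print(ddl)
print(drop_table_ddl("logs_db", "access_logs"))
```

Either statement could then be submitted through the Athena console or an SDK; the point of the sketch is that the schema lives apart from the data it describes.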

Athena Workgroups

- Athena workgroups can be used to separate users, teams, applications, or workloads, to set limits on the amount of data each query or the entire workgroup can process, and to track costs.

- Resource-level identity-based policies can be used to control access to a specific workgroup.

- Workgroups help view query-related metrics in CloudWatch, control costs by configuring limits on the amount of data scanned, create thresholds, and trigger actions, such as SNS notifications, when these thresholds are breached.

- Workgroups integrate with IAM, CloudWatch, Simple Notification Service, and AWS Cost and Usage Reports as follows:

  - IAM identity-based policies with resource-level permissions control who can run queries in a workgroup.

  - Athena publishes the workgroup query metrics to CloudWatch if you enable query metrics.

  - SNS topics can be created that issue alarms to specified workgroup users when data usage controls for queries in a workgroup exceed the established thresholds.

  - A workgroup tag can be configured as a cost allocation tag in the Billing and Cost Management console, and the costs associated with running queries in that workgroup appear in the Cost and Usage Reports with that cost allocation tag.
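
To make the workgroup controls above more concrete, here is a minimal sketch that builds two plain data structures: a workgroup configuration with a per-query scan limit and CloudWatch metrics enabled, and an identity-based IAM policy scoped to a single workgroup. The helper names, region, account ID, workgroup name, bucket, and the 1 GB limit are assumptions chosen for illustration. The configuration keys follow the shape accepted by the Athena `CreateWorkGroup` API, but nothing is sent to AWS here.

```python
import json


def workgroup_configuration(output_location: str, per_query_limit_bytes: int) -> dict:
    """Configuration dict in the shape used by Athena's CreateWorkGroup API,
    e.g. boto3.client("athena").create_work_group(Name=..., Configuration=...)."""
    return {
        "ResultConfiguration": {"OutputLocation": output_location},
        "EnforceWorkGroupConfiguration": True,      # workgroup settings override client settings
        "PublishCloudWatchMetricsEnabled": True,    # publish query metrics to CloudWatch
        "BytesScannedCutoffPerQuery": per_query_limit_bytes,  # cancel queries that scan more
    }


def workgroup_policy(region: str, account_id: str, workgroup: str) -> dict:
    """Identity-based policy allowing queries only in one workgroup."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "athena:StartQueryExecution",
                    "athena:StopQueryExecution",
                    "athena:GetQueryExecution",
                    "athena:GetQueryResults",
                    "athena:GetWorkGroup",
                ],
                "Resource": f"arn:aws:athena:{region}:{account_id}:workgroup/{workgroup}",
            }
        ],
    }


# Hypothetical values, for illustration only.
config = workgroup_configuration("s3://example-bucket/athena-results/", 1_000_000_000)
policy = workgroup_policy("us-east-1", "123456789012", "analytics-team")
print(json.dumps(policy, indent=2))
```

Keeping the limit in the workgroup rather than in each client means every query run in that workgroup is subject to the same cap.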

Athena Best Practices

- Partition the data

  - Partitioning helps keep related data together based on column values such as date, country, and region.

  - Athena supports Hive partitioning.

  - Pick partition keys that will support the queries.

  - Partition projection is an Athena feature that stores partition information not in the Glue Data Catalog but as rules in the properties of the table in AWS Glue.

- Compression

  - Compressing the data can speed up queries significantly, as long as the files are either of an optimal size or splittable.

  - Smaller data sizes reduce the data scanned from S3, resulting in lower query costs and reduced network traffic.

- Optimize file sizes

  - Queries run more efficiently when data scanning can be parallelized and when blocks of data can be read sequentially.

- Columnar file formats

  - Columnar storage formats like ORC and Parquet are optimized for fast retrieval of data as they allow compression and are splittable.

  - A splittable file can be read in parallel by the execution engine in Athena, whereas an unsplittable file can’t be read in parallel.

- Optimize queries
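
The partitioning and query practices above can be sketched together. The example below, under stated assumptions, shows a Hive-style S3 prefix, a set of partition projection table properties for a date column, and a query that names only the columns it needs and filters on the partition key, so that fewer partitions and columns are read. The function names, bucket, table, and the `dt` column are hypothetical, and the query builder interpolates trusted constants only; it is not meant for untrusted input.

```python
def hive_partition_prefix(base: str, **partitions: str) -> str:
    """Return a Hive-style S3 prefix such as .../year=2024/month=01/."""
    parts = "/".join(f"{key}={value}" for key, value in partitions.items())
    return f"{base.rstrip('/')}/{parts}/"


def date_projection_properties(column: str, base: str, start_date: str) -> dict:
    """Table properties that describe date partitions as rules, so they
    do not have to be stored one by one in the Glue Data Catalog."""
    return {
        "projection.enabled": "true",
        f"projection.{column}.type": "date",
        f"projection.{column}.format": "yyyy-MM-dd",
        f"projection.{column}.range": f"{start_date},NOW",
        # ${column} is a placeholder that Athena fills in per partition value.
        "storage.location.template": f"{base.rstrip('/')}/{column}=${{{column}}}/",
    }


def pruned_query(table: str, columns: list, partition_column: str, value: str) -> str:
    """Select only the needed columns and filter on the partition key."""
    return (
        f"SELECT {', '.join(columns)} "
        f"FROM {table} "
        f"WHERE {partition_column} = '{value}'"
    )


# Hypothetical bucket, table, and column names.
print(hive_partition_prefix("s3://example-bucket/logs/", year="2024", month="01"))
print(date_projection_properties("dt", "s3://example-bucket/logs", "2024-01-01"))
print(pruned_query("access_logs", ["request_ip", "status"], "dt", "2024-01-15"))
```

With a columnar format such as Parquet, listing explicit columns instead of `SELECT *` lets the engine skip the columns that are not referenced, and the partition filter limits which prefixes are scanned at all.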

AWS Certification Exam Practice Questions

- Questions are collected from the Internet, and the answers are marked as per my knowledge and understanding (which might differ from yours).

- AWS services are updated every day, and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep pace with AWS updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion, and correction.

- A SysOps administrator is storing access logs in Amazon S3 and wants to use standard SQL to query data and generate a report without having to manage infrastructure. Which AWS service will allow the SysOps administrator to accomplish this task?

  - Amazon Inspector

  - Amazon CloudWatch

  - Amazon Athena

  - Amazon RDS

- A Solutions Architect must design a storage solution for incoming billing reports in CSV format. The data does not need to be scanned frequently and is discarded after 30 days. Which service will be MOST cost-effective in meeting these requirements?

  - Import the logs into an RDS MySQL instance

  - Use AWS Data Pipeline to import the logs into a DynamoDB table

  - Write the files to an S3 bucket and use Amazon Athena to query the data

  - Import the logs to an Amazon Redshift cluster
