TerramEarth manufactures heavy equipment for the mining and agricultural industries. They currently have over 500 dealers and service centers in 100 countries. Their mission is to build products that make their customers more productive.

Key points: over 500 dealers and service centers are spread across 100 countries, and the company wants to make its customers more productive.

Solution Concept

There are 2 million TerramEarth vehicles in operation currently, and we see 20% yearly growth. Vehicles collect telemetry data from many sensors during operation. A small subset of critical data is transmitted from the vehicles in real time to facilitate fleet management. The rest of the sensor data is collected, compressed, and uploaded daily when the vehicles return to home base. Each vehicle usually generates 200 to 500 megabytes of data per day.

Key points: TerramEarth has 2 million vehicles in operation, growing 20% per year. Only critical data is transmitted in real time; the rest is uploaded in bulk once a day.
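The figures above add up to a large daily ingest volume. The sketch below just does the arithmetic, assuming decimal units (1 TB = 1,000,000 MB) and that every vehicle uploads every day; the function names are illustrative.

```python
# Rough daily-volume estimate from the case-study figures.
# Assumes decimal units (1 TB = 1,000,000 MB) and one upload per vehicle per day.

VEHICLES = 2_000_000             # vehicles in operation today
YEARLY_GROWTH = 0.20             # 20% fleet growth per year
MB_PER_VEHICLE_DAY = (200, 500)  # typical low/high daily data per vehicle


def daily_volume_tb(vehicles: int, mb_per_vehicle: float) -> float:
    """Total data generated by the fleet in one day, in terabytes."""
    return vehicles * mb_per_vehicle / 1_000_000


def fleet_size(years: int, vehicles: int = VEHICLES, growth: float = YEARLY_GROWTH) -> int:
    """Fleet size after `years` of compound growth, rounded to whole vehicles."""
    return round(vehicles * (1 + growth) ** years)


if __name__ == "__main__":
    for years in (0, 5):
        fleet = fleet_size(years)
        low, high = (daily_volume_tb(fleet, mb) for mb in MB_PER_VEHICLE_DAY)
        print(f"Year {years}: {fleet:,} vehicles, {low:,.0f} to {high:,.0f} TB/day")
```

With today's fleet this comes to 400 to 1,000 TB (roughly 0.4 to 1 PB) per day. After five years of 20% growth the fleet is close to 5 million vehicles and the upper bound approaches 2.5 PB per day, which is why the bulk upload path has to scale independently of the small real-time stream.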

Executive Statement

Our competitive advantage has always been our focus on the customer, with our ability to provide excellent customer service and minimize vehicle downtimes. After moving multiple systems into Google Cloud, we are seeking new ways to provide best-in-class online fleet management services to our customers and improve operations of our dealerships. Our 5-year strategic plan is to create a partner ecosystem of new products by enabling access to our data, increasing autonomous operation capabilities of our vehicles, and creating a path to move the remaining legacy systems to the cloud.

Key point: the company wants to further improve operations and customer experience, and to build a partner ecosystem by giving partners access to its data.

Existing Technical Environment

TerramEarth’s vehicle data aggregation and analysis infrastructure resides in Google Cloud and serves clients from all around the world. A growing amount of sensor data is captured from their two main manufacturing plants and sent to private data centers that contain their legacy inventory and logistics management systems. The private data centers have multiple network interconnects configured to Google Cloud.

The web frontend for dealers and customers is running in Google Cloud and allows access to stock management and analytics.

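Key points: the company hosts its infrastructure in both Google Cloud and private data centers. Google Cloud runs the web frontend and the vehicle data aggregation and analysis. Sensor data from the two manufacturing plants is sent to the private data centers, which run the legacy inventory and logistics systems and connect to Google Cloud through multiple network interconnects.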

Business Requirements

Predict and detect vehicle malfunction and rapidly ship parts to dealerships for just-in-time repair where possible.

- Cloud IoT Core can provide a fully managed service to securely connect, manage, and ingest data from globally dispersed devices. Note that Google retired Cloud IoT Core in August 2023.

- Existing legacy inventory and logistics management systems running in the private data centers can be migrated to Google Cloud.

- Existing data can be migrated in a one-time bulk transfer using Transfer Appliance.
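Whether a shipped Transfer Appliance beats an online transfer depends mostly on data size and sustained bandwidth. A rough sketch of that estimate follows; the dataset sizes, link speeds, and the default 80% link utilization are illustrative assumptions, not TerramEarth figures.

```python
# Rough comparison: how long would an online transfer take?
# Sizes and link speeds are illustrative assumptions, not TerramEarth figures.

SECONDS_PER_DAY = 86_400


def transfer_days(size_tb: float, bandwidth_gbps: float, utilization: float = 0.8) -> float:
    """Days to move `size_tb` terabytes (decimal) over a `bandwidth_gbps` link.

    `utilization` is the fraction of the link you can sustain for the transfer.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    bits = size_tb * 1e12 * 8
    seconds = bits / (bandwidth_gbps * 1e9 * utilization)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    for size_tb in (10, 100, 1000):
        for gbps in (1, 10):
            print(f"{size_tb:>5} TB over {gbps:>2} Gbps: {transfer_days(size_tb, gbps):6.1f} days")
```

Even at full utilization, 1,000 TB over a 1 Gbps link takes about 93 days, which is the kind of result that makes an appliance attractive for a one-time bulk migration while ongoing daily uploads stay on the network.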

Decrease cloud operational costs and adapt to seasonality.

- Google Cloud resources can be configured to scale elastically with demand (for example, autoscaling managed instance groups and GKE clusters), so capacity and cost follow seasonal load.
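Elastic scaling usually comes down to a target-tracking rule: change the replica count in proportion to how far observed utilization is from the target. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler (used on GKE), including its default 0.1 tolerance; the Compute Engine autoscaler for managed instance groups also works from a target utilization, but its exact algorithm differs, so treat this as an illustration only.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 100,
                     tolerance: float = 0.1) -> int:
    """Target-tracking scale decision, following the Kubernetes HPA formula:

        desired = ceil(current * current_util / target_util)

    Utilization values are percentages (e.g. 60 for 60% CPU). Ratios within
    `tolerance` of 1.0 are ignored to avoid flapping.
    """
    if current < 1 or target_util <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current                      # close enough: no change
    else:
        desired = math.ceil(current * ratio)   # scale proportionally
    return max(min_replicas, min(max_replicas, desired))  # respect bounds


if __name__ == "__main__":
    print(desired_replicas(10, 90, 60))  # peak season, 90% CPU vs 60% target -> 15
    print(desired_replicas(10, 30, 60))  # off season, 30% CPU vs 60% target -> 5
```

The min/max bounds are where seasonality and cost meet: a low minimum lets capacity shrink off season, and the maximum caps spend during peaks.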

Increase speed and reliability of development workflow.

- Google Cloud CI/CD tools like Cloud Build and open-source tools like Spinnaker can be used to increase the speed and reliability of the deployments.

Allow remote developers to be productive without compromising code or data security.

- Cloud Functions function-to-function authentication, which relies on IAM so that only authorized identities can invoke a function.
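Function-to-function authentication works by having the calling function fetch a Google-signed ID token from the metadata server, with the receiving function's URL as the audience, and send it as a bearer token. The caller's service account also needs the Cloud Functions Invoker role (`roles/cloudfunctions.invoker`, or Cloud Run Invoker for 2nd gen functions) on the receiver. A minimal stdlib sketch, assuming it runs inside Google Cloud where the metadata server is reachable; the URL in the usage note is hypothetical.

```python
import urllib.parse
import urllib.request
from typing import Optional

# Metadata server endpoint that issues Google-signed ID tokens. It is only
# reachable from inside Google Cloud (Cloud Functions, Cloud Run, GCE, GKE).
METADATA_IDENTITY_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/identity"
)


def identity_token_request(audience: str) -> urllib.request.Request:
    """Build the metadata-server request for an ID token scoped to `audience`."""
    query = urllib.parse.urlencode({"audience": audience})
    return urllib.request.Request(
        f"{METADATA_IDENTITY_URL}?{query}",
        headers={"Metadata-Flavor": "Google"},  # required by the metadata server
    )


def authenticated_request(url: str, token: str,
                          data: Optional[bytes] = None) -> urllib.request.Request:
    """Build a request to the receiving function carrying the ID token."""
    return urllib.request.Request(url, data=data,
                                  headers={"Authorization": f"Bearer {token}"})


def call_function(url: str, data: Optional[bytes] = None, timeout: float = 10.0) -> bytes:
    """Fetch an ID token for `url`, then invoke the receiving function with it."""
    with urllib.request.urlopen(identity_token_request(url), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(authenticated_request(url, token, data), timeout=timeout) as resp:
        return resp.read()
```

From inside a calling function, `call_function("https://us-central1-example-project.cloudfunctions.net/receiver")` would fetch a token and invoke the (hypothetical) receiver. No long-lived keys are stored in code, which is what keeps this identity-based.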

Create a flexible and scalable platform for developers to create custom API services for dealers and partners.

- Google Cloud provides multiple fully managed, serverless, scalable application hosting options, such as Cloud Run and Cloud Functions.

- Managed instance groups of Compute Engine VMs and autoscaling GKE clusters can also provide scalable, highly available compute.
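As an illustration of a custom API service for dealers, here is a minimal, dependency-free HTTP service that could be containerized for Cloud Run or GKE. Cloud Run tells the container which port to listen on through the `PORT` environment variable (8080 by default). The `/v1/parts/` route and the in-memory stock data are hypothetical stand-ins for a real inventory backend.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Tuple

# Hypothetical in-memory stock levels standing in for a real inventory backend.
PARTS_STOCK: Dict[str, int] = {"hydraulic-pump": 12, "drive-belt": 0}


def handle_path(path: str) -> Tuple[int, dict]:
    """Route a GET path to (status code, JSON-serializable body)."""
    prefix = "/v1/parts/"
    if path.startswith(prefix):
        part_id = path[len(prefix):]
        if part_id in PARTS_STOCK:
            return 200, {"part": part_id, "in_stock": PARTS_STOCK[part_id]}
    return 404, {"error": "not found"}


class ApiHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = handle_path(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


if __name__ == "__main__":
    # Cloud Run passes the port to listen on via $PORT.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), ApiHandler).serve_forever()
```

Run locally, `curl localhost:8080/v1/parts/hydraulic-pump` returns `{"part": "hydraulic-pump", "in_stock": 12}`. Keeping routing in a pure function makes it testable without a server; a production service would more likely use a web framework.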

Technical Requirements

Create a new abstraction layer for HTTP API access to their legacy systems to enable a gradual move into the cloud without disrupting operations.

- Google Cloud API Gateway and Cloud Endpoints can provide an abstraction layer that exposes the legacy systems through HTTP APIs and routes each call to one of a variety of backends.
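With API Gateway, the abstraction layer is defined in an OpenAPI 2.0 spec, and each operation's `x-google-backend` extension says where the call is forwarded. Pointing some paths at the legacy system and others at new cloud services lets routes move one at a time (a strangler-fig style migration) while clients keep calling the same gateway URL. A minimal sketch that generates such a spec, assuming the legacy endpoint is reachable from the gateway; backend URLs, paths, and operation IDs are hypothetical.

```python
import json

# Hypothetical backends: the existing legacy API and a new cloud service.
LEGACY_BACKEND = "https://legacy-api.example.com"
CLOUD_BACKEND = "https://inventory-cloud.example.com"

# Hypothetical public paths and their operation IDs.
ROUTES = {
    "/v1/inventory": "getInventory",
    "/v1/shipments": "getShipments",
}

# Paths already migrated to the cloud; everything else still hits legacy.
MIGRATED = {"/v1/inventory"}


def build_spec(routes: dict, migrated: set) -> dict:
    """Build an OpenAPI 2.0 spec for API Gateway, one backend per operation."""
    paths = {}
    for path, operation_id in routes.items():
        backend = CLOUD_BACKEND if path in migrated else LEGACY_BACKEND
        paths[path] = {
            "get": {
                "operationId": operation_id,
                # Operation-level backends default to CONSTANT_ADDRESS path
                # translation, so the full target URL is given here.
                "x-google-backend": {"address": backend + path},
                "responses": {"200": {"description": "OK"}},
            }
        }
    return {
        "swagger": "2.0",
        "info": {"title": "terramearth-legacy-facade", "version": "1.0.0"},
        "schemes": ["https"],
        "produces": ["application/json"],
        "paths": paths,
    }


if __name__ == "__main__":
    print(json.dumps(build_spec(ROUTES, MIGRATED), indent=2))
```

API configs are usually written in YAML; since JSON is valid YAML, the printed output can be saved as a spec file. Migrating another route is then a change to `MIGRATED` plus deploying a new API config, with no change for dealers and partners.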

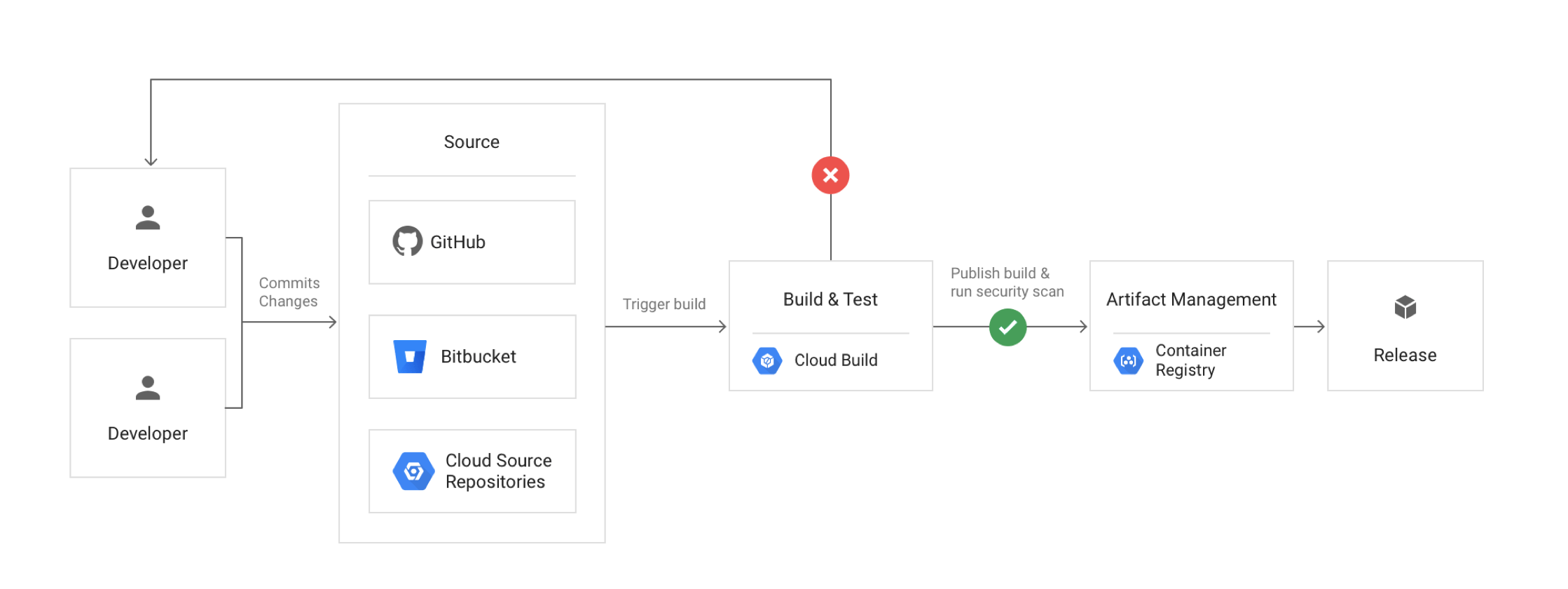

Modernize all CI/CD pipelines to allow developers to deploy container-based workloads in highly scalable environments.

- Google Cloud provides DevOps tools like Cloud Build and supports open-source tools like Spinnaker to provide CI/CD features.

- Cloud Source Repositories are fully featured, private Git repositories hosted on Google Cloud.

- Cloud Build is a fully managed, serverless service that executes builds on Google Cloud infrastructure.

- Container Registry is a private container image registry that supports Docker Image Manifest V2 and OCI image formats. It has been deprecated in favor of Artifact Registry.

- Artifact Registry is a fully managed service that supports both container images and non-container artifacts; it extends the capabilities of Container Registry.
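A container CI/CD pipeline on Cloud Build is described by a build config (YAML or JSON). The sketch below generates a JSON config that builds an image, pushes it to Artifact Registry, and deploys it to Cloud Run. The region, repository, image, and service names are hypothetical; `$PROJECT_ID` and `$BUILD_ID` are built-in Cloud Build substitutions left for Cloud Build to fill in.

```python
import json


def cloudbuild_config(region: str, repo: str, image: str, service: str) -> dict:
    """Build, push, and deploy one container image (illustrative pipeline)."""
    tag = f"{region}-docker.pkg.dev/$PROJECT_ID/{repo}/{image}:$BUILD_ID"
    return {
        "steps": [
            # Build the image from the Dockerfile in the source root.
            {"name": "gcr.io/cloud-builders/docker", "args": ["build", "-t", tag, "."]},
            # Push explicitly: images listed under "images" are only pushed
            # after all steps finish, which would be too late for the deploy step.
            {"name": "gcr.io/cloud-builders/docker", "args": ["push", tag]},
            # Deploy the pushed image to Cloud Run.
            {
                "name": "gcr.io/google.com/cloudsdktool/cloud-sdk",
                "entrypoint": "gcloud",
                "args": ["run", "deploy", service, "--image", tag, "--region", region],
            },
        ],
        # Listing the image also records it in the build results.
        "images": [tag],
    }


if __name__ == "__main__":
    config = cloudbuild_config("us-central1", "fleet-apps", "dealer-api", "dealer-api")
    with open("cloudbuild.json", "w") as f:
        json.dump(config, f, indent=2)
```

The file can be run with `gcloud builds submit --config cloudbuild.json .` or attached to a trigger. The Cloud Build service account needs permission to deploy to Cloud Run (for example, the Cloud Run Admin and Service Account User roles).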

Allow developers to run experiments without compromising security and governance requirements.

- Google Cloud deployments can be configured for canary releases or A/B testing to allow experimentation.
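Platform features such as Cloud Run traffic splitting can send a percentage of requests to a new revision. When an experiment needs each dealer or user to see the same variant consistently, a common application-side approach is deterministic hash bucketing. A minimal sketch of that technique; the experiment and user IDs are hypothetical.

```python
import hashlib


def bucket(user_id: str, experiment: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) for a given experiment."""
    # Salting with the experiment name gives each experiment an independent split.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def assign_variant(user_id: str, experiment: str, canary_percent: int) -> str:
    """Send roughly `canary_percent`% of users to the canary, the rest to stable."""
    return "canary" if bucket(user_id, experiment) < canary_percent else "stable"


if __name__ == "__main__":
    for dealer in ("dealer-17", "dealer-42", "dealer-99"):
        print(dealer, assign_variant(dealer, "new-parts-ui", canary_percent=10))
```

Because assignment depends only on the IDs, raising `canary_percent` from 10 to 50 keeps existing canary users in the canary group, and no assignment table needs to be stored.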

Create a self-service portal for internal and partner developers to create new projects, request resources for data analytics jobs, and centrally manage access to the API endpoints.

Use cloud-native solutions for keys and secrets management and optimize for identity-based access.

- Google Cloud provides Cloud Key Management Service (Cloud KMS) for key management and Secret Manager for storing secrets.
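Identity-based access means granting narrowly scoped roles to identities rather than distributing keys. For example, you grant the Secret Manager Secret Accessor role on a single secret to only the service account of the workload that reads it. The sketch below edits an IAM policy in the JSON shape used by `setIamPolicy`; the service account is hypothetical. When applying a policy, start from the current one (which carries an `etag`) so concurrent changes are not overwritten.

```python
import copy
import json

SECRET_ACCESSOR = "roles/secretmanager.secretAccessor"


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of an IAM policy with `member` granted `role`.

    Idempotent: granting an existing role/member pair leaves the policy unchanged.
    Conditional bindings are left untouched.
    """
    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        if binding["role"] == role and "condition" not in binding:
            if member not in binding["members"]:
                binding["members"].append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated


if __name__ == "__main__":
    # Hypothetical service account for a dealer-facing API.
    member = "serviceAccount:dealer-api@example-project.iam.gserviceaccount.com"
    print(json.dumps(add_binding({}, SECRET_ACCESSOR, member), indent=2))
```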

Improve and standardize tools necessary for application and network monitoring and troubleshooting.

- Google Cloud provides the Cloud Operations Suite, which includes Cloud Monitoring and Cloud Logging and covers both on-premises and cloud resources.

- Cloud Monitoring collects metrics on key aspects of your services and of the Google Cloud resources they use.

- A Cloud Monitoring uptime check sends requests to a publicly accessible URL or IP address to verify that the resource responds.

- Cloud Logging is a service for storing, viewing, and interacting with logs.

- Error Reporting aggregates and displays errors produced by running cloud services.

- Cloud Profiler continuously profiles CPU, heap, and other resources to help improve performance and reduce costs.

- Cloud Trace is a distributed tracing system that collects latency data from applications and displays it in the Google Cloud console.

- Cloud Debugger helps inspect the state of an application at any code location without stopping or slowing it down. Note that Cloud Debugger has since been shut down (May 2023).
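Standardizing on structured logs makes the tools above far more useful. On Cloud Run and GKE, a JSON object written as a single line to stdout or stderr is ingested by Cloud Logging as a structured entry. Special fields such as `severity` and `message` are mapped onto the log entry, and remaining keys land in `jsonPayload`. A minimal sketch; the helper names and example fields are hypothetical.

```python
import json
import sys

# Severity names defined by Cloud Logging's LogSeverity.
SEVERITIES = {"DEFAULT", "DEBUG", "INFO", "NOTICE", "WARNING",
              "ERROR", "CRITICAL", "ALERT", "EMERGENCY"}


def format_entry(severity: str, message: str, **fields) -> str:
    """Serialize one log entry as a single JSON line."""
    severity = severity.upper()
    if severity not in SEVERITIES:
        raise ValueError(f"unknown severity: {severity}")
    return json.dumps({"severity": severity, "message": message, **fields})


def log(severity: str, message: str, **fields) -> None:
    """Write a structured entry to stdout, one JSON object per line."""
    print(format_entry(severity, message, **fields), file=sys.stdout, flush=True)


if __name__ == "__main__":
    # vehicle_id and coolant_temp_c are hypothetical example fields.
    log("warning", "Coolant temperature above threshold",
        vehicle_id="TE-000123", coolant_temp_c=108)
```

Extra fields such as `vehicle_id` can then be filtered on in the Logs Explorer and used in log-based metrics, instead of parsing free-text messages.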

Reference Cellular Upload Architecture

Batch Upload Replacement Architecture

Reference

- Google Cloud – TerramEarth case study

