AWS Certified Developer – Associate DVA-C02 Exam Learning Path

- AWS Certified Developer – Associate DVA-C02 exam is the latest AWS exam released on 27th February 2023 and has replaced the previous AWS Developer – Associate DVA-C01 certification exam.

- I passed the AWS Developer – Associate DVA-C02 exam with a score of 835/1000.

AWS Certified Developer – Associate DVA-C02 Exam Content

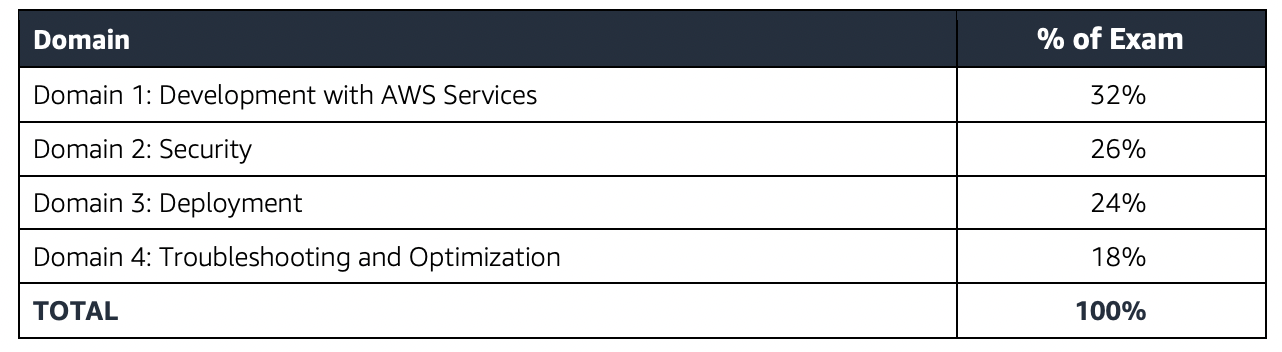

- DVA-C02 validates a candidate’s ability to demonstrate proficiency in developing, testing, deploying, and debugging AWS cloud-based applications.

- DVA-C02 also validates a candidate’s ability to complete the following tasks:

- Develop and optimize applications on AWS

- Package and deploy by using continuous integration and continuous delivery (CI/CD) workflows

- Secure application code and data

- Identify and resolve application issues

Refer to the AWS Certified Developer – Associate Exam Blueprint

AWS Certified Developer – Associate DVA-C02 Summary

- DVA-C02 exam consists of 65 questions in 130 minutes, and the time is more than sufficient if you are well-prepared.

- DVA-C02 exam includes two types of questions, multiple-choice and multiple-response.

- DVA-C02 has a scaled score between 100 and 1,000. The scaled score needed to pass the exam is 720.

- Associate exams currently cost $150 + tax.

- You can get an additional 30 minutes if English is your second language by requesting Exam Accommodations. It might not be needed for Associate exams but is helpful for Professional and Specialty ones.

- AWS exams can be taken either at a test center or online. I prefer to take them online as it provides a lot of flexibility. Just make sure you have a proper place to take the exam with no disturbance and nothing around you.

- Also, if you are taking the AWS Online exam for the first time try to join at least 30 minutes before the actual time as I have had issues with both PSI and Pearson with long wait times.

AWS Certified Developer – Associate DVA-C02 Exam Resources

- Online courses

- Stephane Maarek – Ultimate AWS Certified Developer Associate DVA-C02

- Adrian Cantrill – AWS Certified Developer – Associate

- Adrian Cantrill – All Associate Bundle

- DolfinEd – AWS Certified Developer Associate

- Whizlabs – AWS Certified Developer Associate Course

- Practice tests

- Braincert AWS Certified Developer – Associate DVA-C02 Practice Exams

- Stephane Maarek – AWS Certified Developer – Associate Practice Exams

- Whizlabs – AWS Certified Developer Associate Practice Tests

- Sign up with AWS for a Free Tier account, which lets you try many services for free within certain limits that are more than enough to get things going. Be sure to decommission anything you use beyond the free limits to avoid surprises 🙂

- Read the FAQs at least for the important topics, as they cover important points and are good for quick review

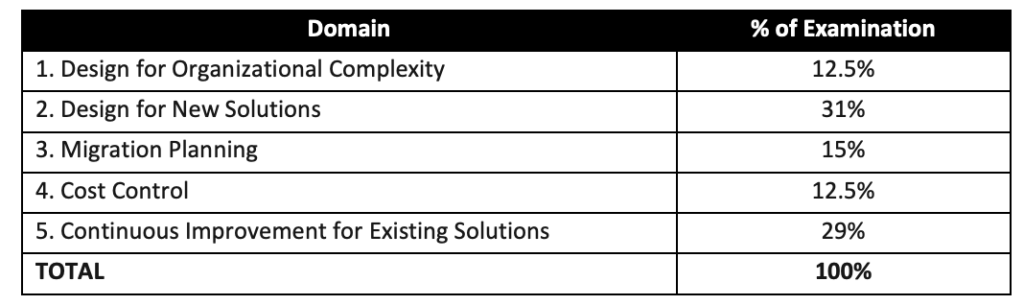

AWS Certified Developer – Associate DVA-C02 Exam Topics

- AWS DVA-C02 exam concepts cover solutions that fall within the AWS Well-Architected Framework, spanning scalable, highly available, cost-effective, performant, and resilient designs.

- AWS Certified Developer – Associate DVA-C02 exam covers a lot of the latest AWS services like Amplify and X-Ray, while focusing mainly on other services like Lambda, DynamoDB, Elastic Beanstalk, S3, and EC2.

- AWS Certified Developer – Associate DVA-C02 exam is similar to DVA-C01 with more focus on the hands-on development and deployment concepts rather than just the architectural concepts.

- If you had been preparing for the DVA-C01, DVA-C02 is pretty much similar except for the addition of some new services covering Amplify, X-Ray, etc.

Compute

- Elastic Compute Cloud – EC2

- Auto Scaling and ELB

- Auto Scaling provides the ability to ensure a correct number of EC2 instances are always running to handle the load of the application

- Elastic Load Balancer allows the incoming traffic to be distributed automatically across multiple healthy EC2 instances

- Auto Scaling & ELB

- work together to provide High Availability and Scalability.

- Span both ELB and Auto Scaling across Multi-AZs to provide High Availability

- Do not span across regions. Use Route 53 or Global Accelerator to route traffic across regions.

- Lambda and serverless architecture, its features, and use cases.

- Lambda integrated with API Gateway to provide a serverless, highly scalable, cost-effective architecture.

- Lambda execution role needs the required permissions to integrate with other AWS services.

- Environment variables to keep functions configurable.

- Lambda Layers provide a convenient way to package libraries and other dependencies that you can use with your Lambda functions.

- Function versions can be used to manage the deployment of the functions.

- Function aliases are mutable pointers that can be created for each function version.

- provides `/tmp` ephemeral scratch storage.

- Integrates with X-Ray for distributed tracing.

- Use RDS proxy for connection pooling.

- Elastic Container Service – ECS with its ability to deploy containers and microservices architecture.

- ECS role for tasks can be provided through taskRoleArn

- ALB provides dynamic port mapping to allow multiple copies of the same task to run on the same container instance.

- Elastic Kubernetes Service – EKS

- managed Kubernetes service to run Kubernetes in the AWS cloud and on-premises data centers

- ideal for migration of an existing workload on Kubernetes

- Elastic Beanstalk

- at a high level, what it provides, and its ability to get an application running quickly.

- Deployment types with their advantages and disadvantages

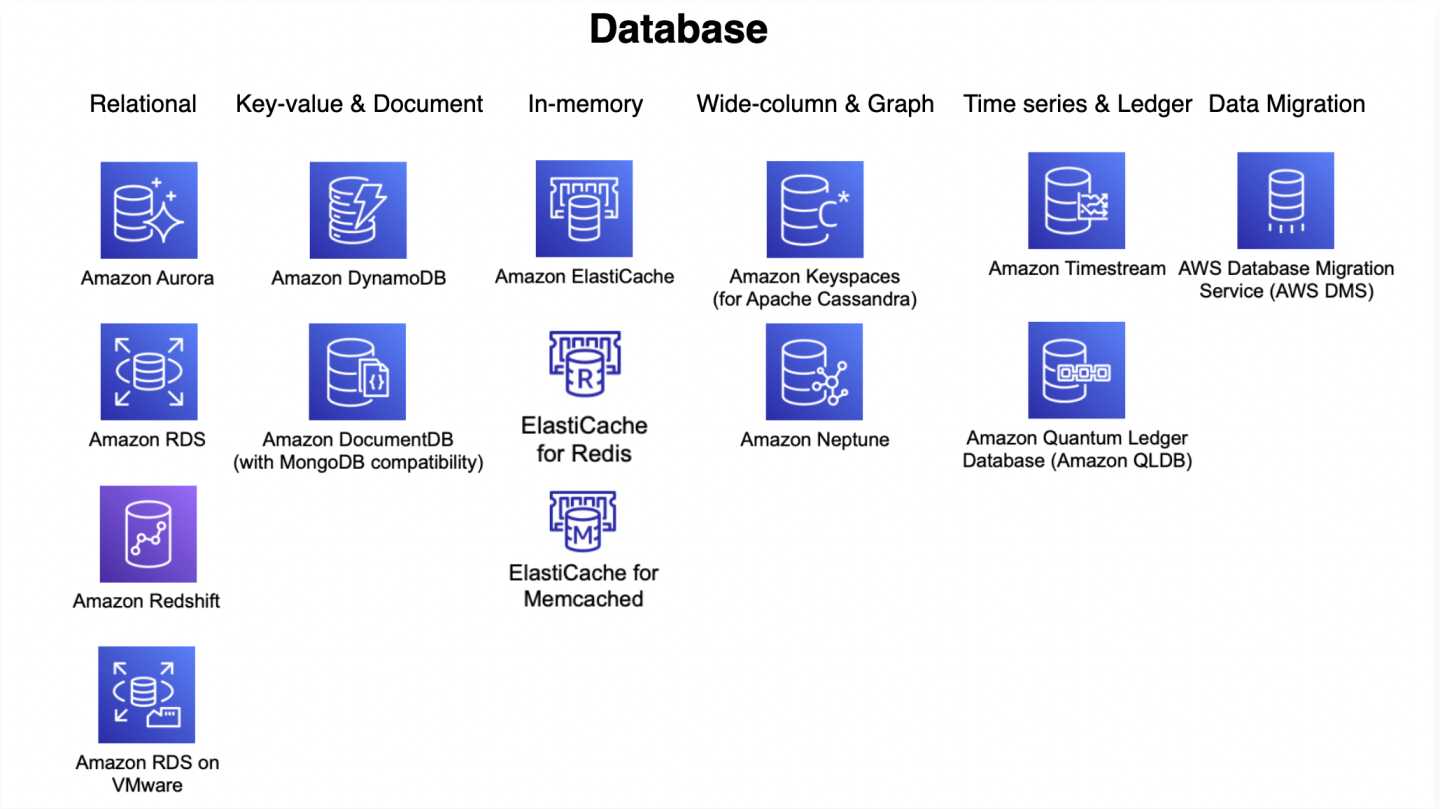
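To make the Lambda points above a bit more concrete (environment variables for configuration and `/tmp` scratch storage), here is a minimal handler sketch. The `TABLE_NAME` variable, the scratch file name, and the response shape are assumptions chosen for illustration, not something the exam guide prescribes.

```python
import json
import os
import tempfile


def handler(event, context):
    """Hypothetical Lambda handler: reads its configuration from an
    environment variable and uses ephemeral scratch storage."""
    # TABLE_NAME is an illustrative variable name (assumption).
    table_name = os.environ.get("TABLE_NAME", "demo-table")

    # Inside Lambda the temp directory is /tmp; using tempfile keeps
    # the sketch runnable on a local machine as well.
    scratch_path = os.path.join(tempfile.gettempdir(), "payload.json")
    with open(scratch_path, "w", encoding="utf-8") as fh:
        json.dump(event, fh)

    # Response shaped like an API Gateway proxy integration response.
    return {
        "statusCode": 200,
        "body": json.dumps(
            {"table": table_name, "bytes_written": os.path.getsize(scratch_path)}
        ),
    }
```

Keeping the table name in an environment variable means the same deployment package can be pointed at different resources per stage without a code change.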

Databases

- Understand relational and NoSQL data storage options which include RDS, DynamoDB, and Aurora with their use cases

- Relational Database Service – RDS

- Read Replicas vs Multi-AZ

- Read Replicas for scalability, Multi-AZ for High Availability

- Multi-AZ is regional only

- Read Replicas can span across regions and can be used for disaster recovery

- RDS Proxy

- fully managed, highly available database proxy for RDS that makes applications more secure, more scalable, and more resilient to database failures.

- allows apps to pool and share DB connections established with the database

- DynamoDB

- provides low latency performance, a key-value store

- is not a relational database

- Secondary indexes on a table allow efficient access to data with attributes other than the primary key.

- Know Local Secondary Indexes vs Global Secondary Indexes

- DynamoDB DAX provides caching for DynamoDB

- DynamoDB TTL helps expire data in DynamoDB at no extra cost and without consuming any write throughput.

- DynamoDB Streams provides a time-ordered sequence of item-level changes made to data in a table and integrates with Lambda.

- DynamoDB Best Practices around designing partition keys and secondary indexes.

- ElastiCache use cases, mainly for caching performance
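As a small illustration of the DynamoDB TTL point above: TTL works off a Number attribute holding an expiry time in Unix epoch seconds. The sketch below builds an item in the low-level DynamoDB attribute-value format; the attribute names `session_id` and `expires_at` are hypothetical, and the item is only constructed here, not written to a table.

```python
import time


def build_session_item(session_id, ttl_seconds, now=None):
    """Build a DynamoDB-style item whose expires_at attribute can be
    configured as the table's TTL attribute (names are illustrative)."""
    now = int(time.time()) if now is None else int(now)
    return {
        "session_id": {"S": session_id},
        # Numbers are sent as strings in the low-level attribute format.
        "expires_at": {"N": str(now + ttl_seconds)},
    }


if __name__ == "__main__":
    print(build_session_item("abc123", 3600))
```

The optional `now` parameter exists only so the expiry calculation can be tested deterministically.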

Storage

- Simple Storage Service – S3

- S3 storage classes with lifecycle policies

- Understand the difference between S3 Standard vs S3 Standard-IA vs S3 One Zone-IA in terms of cost, availability, and durability

- S3 Data Protection

- S3 Client-side encryption encrypts data before storing it in S3

- S3 encryption in transit can be enforced with S3 bucket policies using the `aws:SecureTransport` condition key.

- S3 encryption at rest can be enforced with S3 bucket policies using the `x-amz-server-side-encryption` attribute.

- S3 features including

- S3 provides cost-effective static website hosting. However, the website endpoint does not support HTTPS. It can be integrated with CloudFront for HTTPS, caching, performance, and low-latency access.

- S3 versioning provides protection against accidental overwrites and deletions. It can be combined with the MFA Delete feature for additional protection.

- S3 Pre-Signed URLs for both upload and download provide access without needing AWS credentials.

- S3 CORS allows cross-domain calls

- S3 Transfer Acceleration enables fast, easy, and secure transfers of files over long distances between your client and an S3 bucket.

- S3 Event Notifications trigger actions on S3 events such as objects being added or deleted. Supports SQS, SNS, and Lambda functions as destinations.

- Integrates with Amazon Macie to detect PII data

- Replication supports both same-region and cross-region replication and requires versioning to be enabled.

- Integrates with Athena to analyze data in S3 using standard SQL.

- Instance Store

- is physically attached to the EC2 instance and provides the lowest latency and highest IOPS

- Elastic Block Store – EBS

- EBS volume types and their use cases in terms of IOPS and throughput. SSD for IOPS and HDD for throughput

- Elastic File System – EFS

- simple, fully managed, scalable, serverless, and cost-optimized file storage for use with AWS Cloud and on-premises resources.

- provides a shared volume across multiple EC2 instances, while an EBS volume can be attached to a single instance within the same AZ or, with EBS Multi-Attach, to multiple instances within the same AZ

- can be mounted with Lambda functions

- supports the NFS protocol, and is compatible with Linux-based AMIs

- supports cross-region replication and storage classes for cost management.

- Difference between EBS vs S3 vs EFS

- Difference between EBS vs Instance Store

- I would recommend referring to the Storage Options whitepaper. Although it is a bit dated, 90% of it still holds true.
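The S3 data protection bullets in this section can be sketched as a bucket policy. The example below only builds the policy document as a Python dictionary; the bucket name is a placeholder, and the two `Deny` statements follow the commonly used pattern of denying requests where `aws:SecureTransport` is false and denying uploads that lack the `x-amz-server-side-encryption` header. Treat it as a starting point rather than a complete policy.

```python
import json


def build_bucket_policy(bucket):
    """Return a bucket policy (as a dict) that denies non-HTTPS access
    and denies uploads without a server-side encryption header."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # Matches requests that did not arrive over TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                "Sid": "DenyUnencryptedUploads",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/*",
                # Matches PutObject calls with no encryption header set.
                "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
            },
        ],
    }


if __name__ == "__main__":
    # "my-example-bucket" is a placeholder name.
    print(json.dumps(build_bucket_policy("my-example-bucket"), indent=2))
```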

Security & Identity

- Identity and Access Management – IAM

- IAM role

- provides permissions that are not associated with a particular user, group, or service and is intended to be assumable by anyone who needs it.

- can be used for EC2 application access and Cross-account access

- IAM Best Practices

- Cognito

- provides authentication, authorization, and user management for the web and mobile apps.

- User pools are user directories that provide sign-up and sign-in options for the app users.

- Identity pools enable you to grant the users access to other AWS services.

- Key Management Service – KMS encryption service

- for key management and envelope encryption

- provides encryption at rest and does not handle encryption in transit.

- AWS Certificate Manager – ACM

- helps easily provision, manage, and deploy public and private SSL/TLS certificates for use with AWS services and internally connected resources.

- AWS Secrets Manager

- helps protect secrets needed to access applications, services, and IT resources.

- supports automatic rotations of secrets

- Secrets Manager vs Systems Manager Parameter Store for secrets management

- Secrets Manager supports automatic credentials rotation and is integrated with Lambda and other services like RDS, Redshift, and DocumentDB.

- Systems Manager Parameter Store provides free standard parameters and is cost-effective as compared to Secrets Manager.
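For the IAM role use cases mentioned in this section (EC2 application access and cross-account access), what makes a role assumable is its trust policy. The sketch below builds the two variants as plain dictionaries; the function name and the account ID in the usage line are made up for illustration.

```python
import json


def build_trust_policy(trusted_account_id=None):
    """Return a role trust policy: for the EC2 service by default, or
    for another AWS account when trusted_account_id is given."""
    if trusted_account_id is None:
        # Lets EC2 assume the role on behalf of an instance.
        principal = {"Service": "ec2.amazonaws.com"}
    else:
        # Lets principals in the other account assume the role.
        principal = {"AWS": f"arn:aws:iam::{trusted_account_id}:root"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": principal,
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # 111122223333 is a placeholder account ID.
    print(json.dumps(build_trust_policy("111122223333"), indent=2))
```

The trust policy only controls who can assume the role; what the role can do is defined separately by its permissions policies.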

Front-end Web and Mobile

- API Gateway

- is a fully managed service that makes it easy for developers to publish, maintain, monitor, and secure APIs at any scale.

- Powerful, flexible authentication mechanisms, such as AWS IAM policies, Lambda authorizer functions, and Amazon Cognito user pools.

- supports Canary release deployments for safely rolling out changes.

- define usage plans to meter, restrict third-party developer access, configure throttling, and quota limits on a per API key basis

- integrates with AWS X-Ray for understanding and triaging performance latencies.

- API Gateway CORS allows cross-domain calls

- Amplify

- is a complete solution that lets frontend web and mobile developers easily build, ship, and host full-stack applications on AWS, with the flexibility to leverage the breadth of AWS services as use cases evolve.
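On the API Gateway CORS point in this section: when a Lambda proxy integration is used, the function itself is expected to return the CORS headers in its response. Here is a minimal sketch of that idea; the helper name `with_cors`, the allowed origin, and the header values are assumptions and would need to match your own front end.

```python
import json


def with_cors(status_code, payload, allowed_origin="https://example.com"):
    """Wrap a payload in a proxy-integration style response that
    carries CORS headers (origin and header list are placeholders)."""
    return {
        "statusCode": status_code,
        "headers": {
            "Access-Control-Allow-Origin": allowed_origin,
            "Access-Control-Allow-Headers": "Content-Type,Authorization",
            "Access-Control-Allow-Methods": "GET,POST,OPTIONS",
        },
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Hypothetical Lambda handler behind an API Gateway proxy route."""
    return with_cors(200, {"message": "ok"})
```

Without the `Access-Control-Allow-Origin` header in the response, a browser on a different origin will block the call even though API Gateway returned it successfully.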

Management Tools

- CloudWatch

- monitoring to provide operational transparency

- is extendable with custom metrics

- does not capture memory metrics by default; these can be collected using the CloudWatch agent.

- EventBridge

- is a serverless event bus service that makes it easy to connect applications with data from a variety of sources.

- enables building loosely coupled and distributed event-driven architectures.

- CloudTrail

- helps enable governance, compliance, and operational and risk auditing of the AWS account.

- helps to get a history of AWS API calls and related events for the AWS account.

- CloudFormation

- easy way to create and manage a collection of related AWS resources, and provision and update them in an orderly and predictable fashion.

- Supports Serverless Application Model – SAM for the deployment of serverless applications including Lambda.

- CloudFormation StackSets extends the functionality of stacks by enabling you to create, update, or delete stacks across multiple accounts and Regions with a single operation.

Integration Tools

- Simple Queue Service – SQS

- is a message queuing service, while SNS is a pub/sub notification service

- acts as a decoupling service and provides resiliency

- SQS features like visibility timeout, and long polling vs short polling

- queue size can be used as a metric to scale an Auto Scaling group.

- SQS Standard vs SQS FIFO difference

- FIFO provides exactly-once processing but with lower throughput

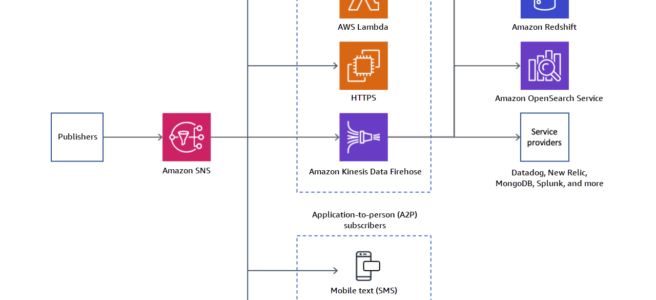

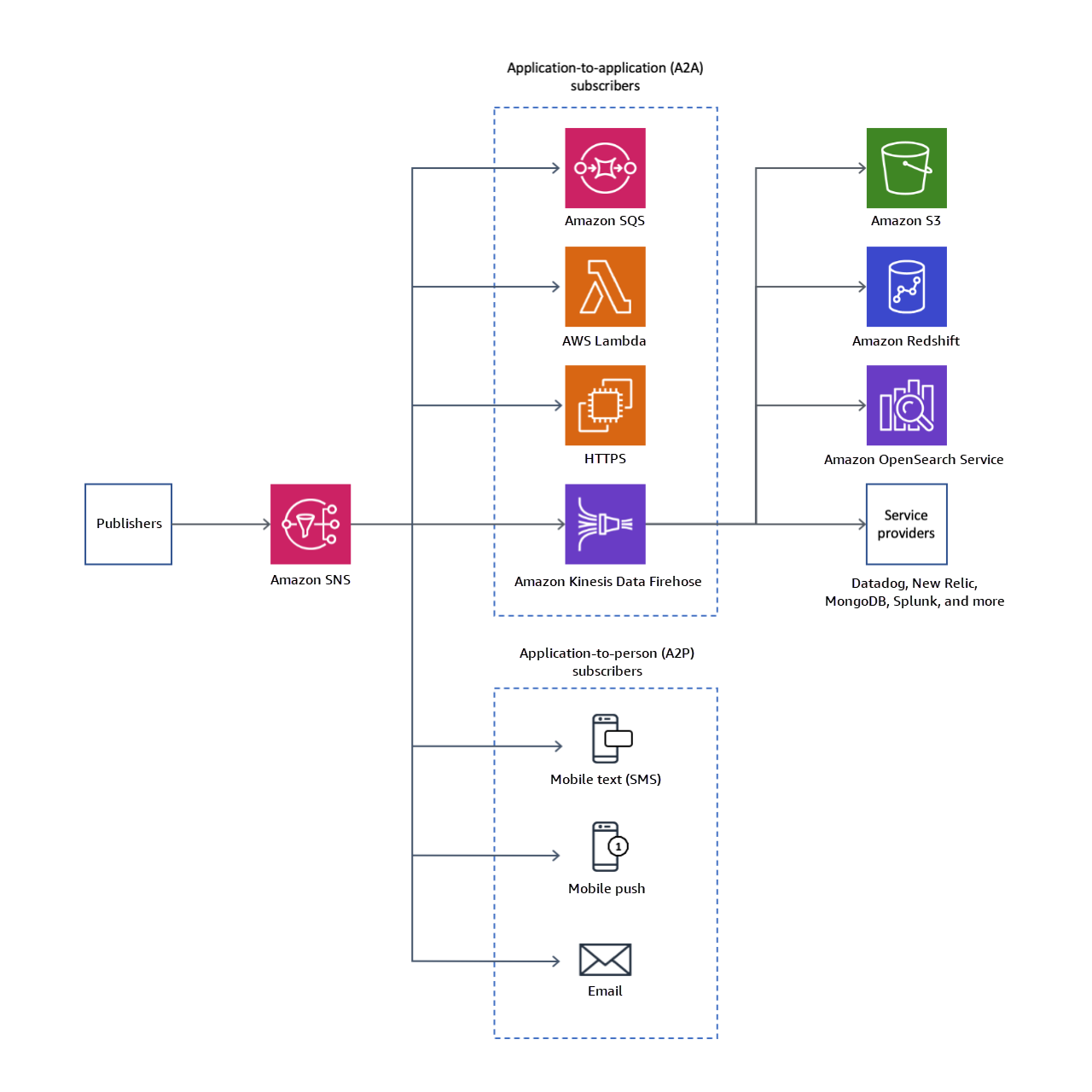

- Simple Notification Service – SNS

- is a web service that coordinates and manages the delivery or sending of messages to subscribing endpoints or clients

- Fanout pattern can be used to push messages to multiple subscribers.

- Understand SQS as a message queuing service and SNS as a pub/sub notification service.

- Know AWS Developer tools

- CodeCommit is a secure, scalable, fully-managed source control service that helps to host secure and highly scalable private Git repositories.

- CodeBuild is a fully managed build service that compiles source code, runs tests, and produces software packages that are ready to deploy.

- CodeDeploy helps automate code deployments to any instance, including EC2 instances and instances running on-premises.

- CodePipeline is a fully managed continuous delivery service that helps automate the release pipelines for fast and reliable application and infrastructure updates.

- CodeArtifact is a fully managed artifact repository service that makes it easy for organizations of any size to securely store, publish, and share software packages used in their software development process.

- X-Ray

- helps developers analyze and debug production, distributed applications, e.g. those built using a microservices or Lambda architecture
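Going back to the SQS point in this section about scaling an Auto Scaling group on queue size, one common way to reason about it is a "backlog per instance" target: how many messages each instance may have waiting while still meeting a latency goal. The sketch below is a plain calculation under that assumption; the function and parameter names are illustrative and it makes no AWS calls.

```python
import math


def desired_instances(queue_depth, avg_processing_seconds, max_latency_seconds):
    """Estimate how many consumers are needed so that the backlog per
    instance stays within the acceptable latency."""
    # Messages one instance can have queued and still meet the target.
    acceptable_backlog = max_latency_seconds / avg_processing_seconds
    # Always keep at least one instance, even for an empty queue.
    return max(1, math.ceil(queue_depth / acceptable_backlog))


if __name__ == "__main__":
    # Example inputs: 1500 queued messages, 0.5 s per message,
    # 50 s acceptable latency.
    print(desired_instances(1500, 0.5, 50))
```

In practice the queue depth would come from the queue's approximate message count metric, and the result would feed a scaling policy rather than being applied directly.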

Analytics

- Redshift as a data warehouse for business intelligence

- Kinesis

- for real-time data capture and analytics.

- Integrates with Lambda functions to perform transformations

- AWS Glue

- fully managed ETL service that automates the time-consuming steps of data preparation for analytics

Networking

- Does not cover much networking or designing networks, but be sure you understand VPC, Subnets, Routes, Security Groups, etc.

AWS Cloud Computing Whitepapers

- Architecting for the AWS Cloud: Best Practices

- AWS Well-Architected Framework whitepaper (This is a theoretical paper with loads of theory and is tiresome. If you cover the above topics, you can skip this one)

- AWS Security Best Practices whitepaper, August 2016

- Practicing Continuous Integration and Continuous Delivery on AWS: Accelerating Software Delivery with DevOps whitepaper, June 2017

- Microservices on AWS whitepaper, September 2017

- Serverless Architectures with AWS Lambda whitepaper, November 2017

- Optimizing Enterprise Economics with Serverless Architectures whitepaper, October 2017

- Running Containerized Microservices on AWS whitepaper, November 2017

- Blue/Green Deployments on AWS whitepaper, August 2016

On the Exam Day

- Make sure you are relaxed and get a good night’s sleep. The exam is not tough if you are well-prepared.

- If you are taking the AWS Online exam

- Try to join at least 30 minutes before the actual time as I have had issues with both PSI and Pearson with long wait times.

- The online verification process does take some time, and there are usually glitches.

- Remember, you will not be allowed to take the exam if you are late by more than 30 minutes.

- Make sure your desk is clear, with no wristwatches or external monitors, keep your phone away, and make sure nobody can enter the room.

Finally, All the Best 🙂