AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Learning Path

- I recently recertified for the AWS Certified SysOps Administrator – Associate (SOA-C02) exam.

- SOA-C02 is the updated version of the SOA-C01 exam and was the first AWS exam to include hands-on labs.

NOTE: As of March 28, 2023, the AWS Certified SysOps Administrator – Associate exam will not include exam labs until further notice. This removal of exam labs is temporary while we evaluate the exam labs and make improvements to provide an optimal candidate experience.

AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Content

- AWS SysOps Administrator – Associate SOA-C02 is intended for system administrators in a cloud operations role.

- SOA-C02 validates a candidate’s ability to:

- Deploy, manage, and operate workloads on AWS

- Support and maintain AWS workloads according to the AWS Well-Architected Framework

- Perform operations by using the AWS Management Console and the AWS CLI

- Implement security controls to meet compliance requirements

- Monitor, log, and troubleshoot systems

- Apply networking concepts (for example, DNS, TCP/IP, firewalls)

- Implement architectural requirements (for example, high availability, performance, capacity)

- Perform business continuity and disaster recovery procedures

- Identify, classify, and remediate incidents

Refer to the AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Guide

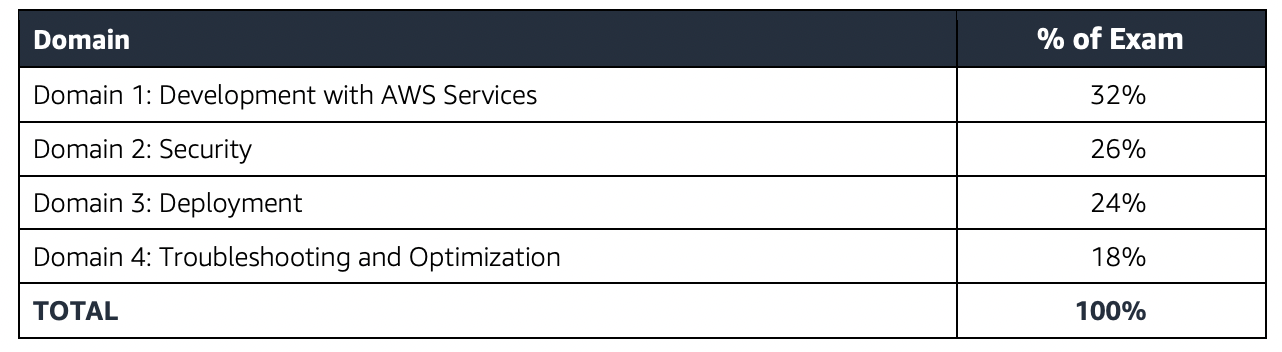

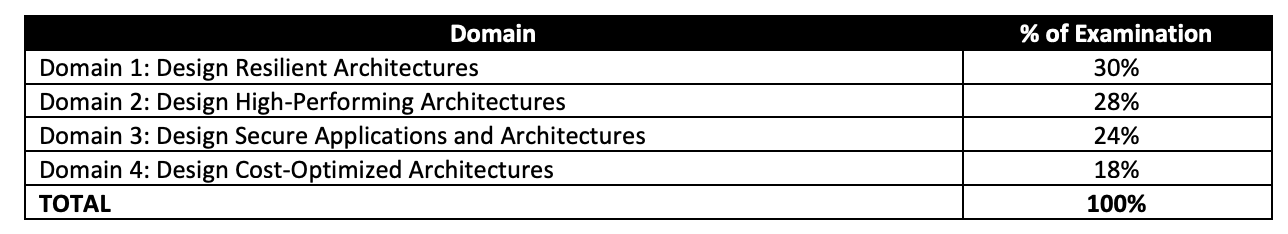

AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Summary

- SOA-C02 was the first AWS exam to include two sections:

- Objective questions

- Hands-on labs

- With Labs

- The SOA-C02 exam consists of around 50 objective-type questions and 3 hands-on labs, to be answered in 190 minutes.

- Labs are performed in a separate instance. Copy-paste works, so make sure you copy the exact resource names when creating resources.

- Labs are pretty easy if you have worked on AWS.

- Plan to reserve about 20 minutes to complete each exam lab.

- NOTE: Once you complete a section and click Next, you cannot go back to that section. The same applies to the labs: once a lab is completed, you cannot return to it.

- Practice the Sample Lab provided when you book the exam, which gives you a feel for how the hands-on exam actually works.

- Without Labs

- SOA-C02 exam consists of 65 questions in 130 minutes, and the time is more than sufficient if you are well-prepared.

- SOA-C02 exam includes two types of questions, multiple-choice and multiple-response.

- SOA-C02 has a scaled score between 100 and 1,000. The scaled score needed to pass the exam is 720.

- Associate exams currently cost $150 + tax.

- You can get an additional 30 minutes if English is your second language by requesting Exam Accommodations. It might not be needed for Associate exams but is helpful for Professional and Specialty ones.

- AWS exams can be taken either at a test center or online. I prefer to take them online as it provides a lot of flexibility. Just make sure you have a proper place to take the exam, with no disturbance and nothing around you.

- Also, if you are taking the AWS online exam for the first time, try to join at least 30 minutes before the scheduled time, as I have had issues with long wait times with both PSI and Pearson.

AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Resources

- Online Courses

- Stephane Maarek – AWS Certified SysOps Administrator Associate

- Adrian Cantrill – AWS Certified SysOps Administrator – Associate

- Adrian Cantrill – All Associate Bundle

- DolfinEd Udemy AWS Certified Solutions Architect Associate – SAA-C02 (Self-Paced)

- Whizlabs – AWS Certified SysOps Administrator – Associate Course

- Exam Readiness: AWS Certified SysOps Administrator – Associate

- Practice Tests

- Sign up for an AWS Free Tier account, which lets you try many services for free within certain limits that are more than enough to get things going. Be sure to decommission anything you use beyond the free limits to prevent any surprises 🙂

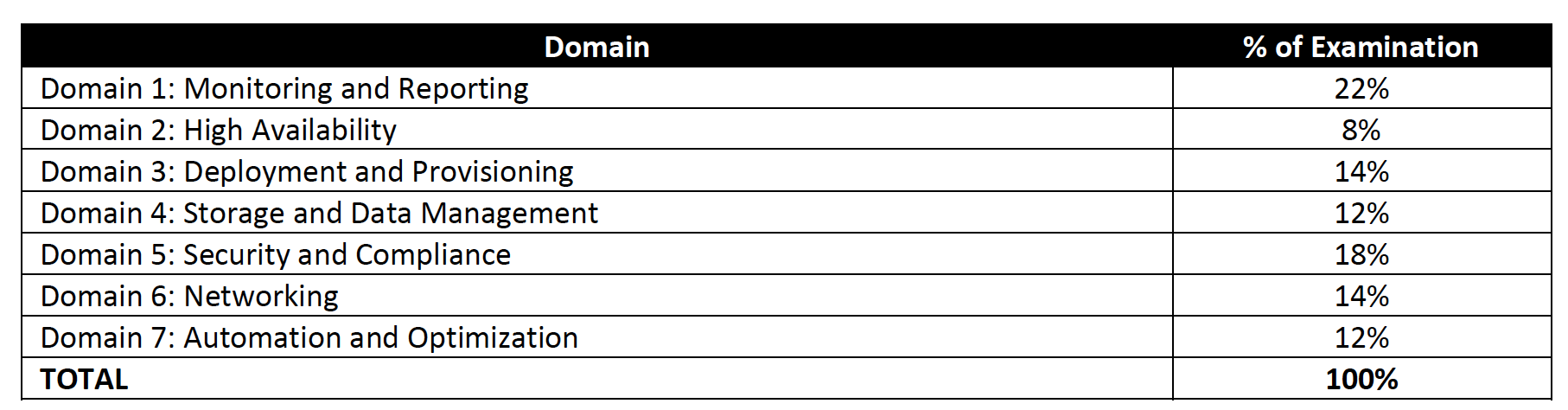

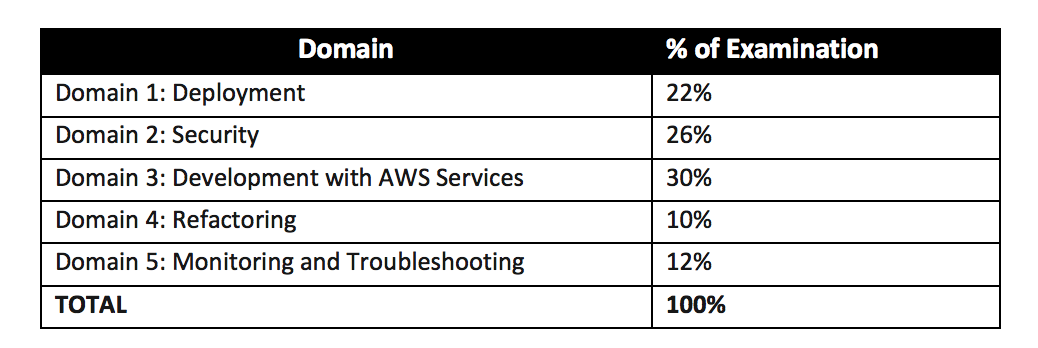

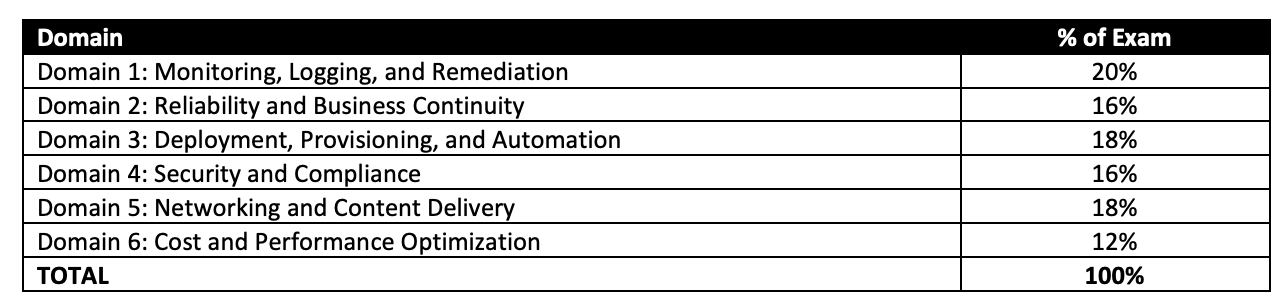

AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Topics

SOA-C02 mainly focuses on SysOps and DevOps tools in AWS and the ability to deploy, manage, operate, and automate workloads on AWS.

Management & Governance Tools

- CloudFormation

- provides an easy way to create and manage a collection of related AWS resources, and to provision and update them in an orderly and predictable fashion.

- CloudFormation concepts include

- Templates act as a blueprint for provisioning AWS resources.

- A stack is a collection of resources managed as a single unit; the resources are created, updated, and deleted by creating, updating, and deleting the stack.

- Change Sets present a summary or preview of the proposed changes that CloudFormation will make when a stack is updated.

- Nested stacks are stacks created as part of other stacks.

- A CloudFormation template includes sections such as Resources (the only required section), Parameters, Mappings, and Outputs.

- CloudFormation supports multiple features

- Drift detection enables you to detect whether a stack’s actual configuration differs, or has drifted, from its expected configuration.

- Termination protection helps prevent a stack from being accidentally deleted.

- Stack policy can prevent stack resources from being unintentionally updated or deleted during a stack update.

- StackSets help create, update, or delete stacks across multiple accounts and Regions with a single operation.

- Helper scripts (e.g. cfn-signal) with creation policies can make CloudFormation wait for a signal, such as the completion of software installation, before marking a resource as complete.

- The DependsOn attribute can specify the resource creation order, ensuring that a specific resource is created only after another.

- Update policy supports rolling and replacing updates for Auto Scaling groups.

- Deletion policies help retain or back up (snapshot) resources during stack deletion.

- Custom resources can be configured for use cases not natively supported, e.g. retrieving AMI IDs or interacting with external services.

- Understand CloudFormation best practices, especially nested stacks and logical grouping of resources.

- Elastic Beanstalk helps to quickly deploy and manage applications in the AWS Cloud without having to worry about the infrastructure that runs those applications.

- Understand Elastic Beanstalk overall – Applications, Versions, and Environments

- Deployment strategies with their advantages and disadvantages

- OpsWorks is a configuration management service that helps configure and operate applications in a cloud enterprise by using Chef and Puppet.

- Understand CloudFormation vs Elastic Beanstalk vs OpsWorks

- AWS Organizations

- Difference between Service Control Policies and IAM Policies

- An SCP defines the maximum permissions available in an account; however, a user still needs to be explicitly granted permissions through an IAM policy.

- Consolidated billing enables consolidating payments from multiple AWS accounts; it combines usage for volume discounts and allows Reserved Instance discounts to be shared across accounts.

- Systems Manager is the operations hub and provides various capabilities such as Parameter Store, Session Manager, and Patch Manager.

- Parameter Store provides secure, scalable, centralized, hierarchical storage for configuration data and secrets management. It does not support automatic secrets rotation; use Secrets Manager instead.

- Session Manager provides secure and auditable instance management without the need to open inbound ports, maintain bastion hosts, or manage SSH keys.

- Patch Manager helps automate the process of patching managed instances with both security-related and other types of updates.

- CloudWatch

- collects monitoring and operational data in the form of logs, metrics, and events, and visualizes it.

- Default EC2 metrics track disk I/O, network, CPU, and status checks, but do not capture metrics like memory, disk swap, disk space utilization, etc.

- CloudWatch unified agent can be used to gather custom metrics like memory, disk swap, disk storage, etc.

- CloudWatch alarm actions can be configured to act on various metrics, e.g. when CPU utilization is below 5%.

- A CloudWatch alarm can monitor the StatusCheckFailed_System metric on an EC2 instance and automatically recover the instance if it becomes impaired due to an underlying hardware failure or a problem that requires AWS involvement to repair.

- Know ELB monitoring

- Classic Load Balancer metrics SurgeQueueLength and SpilloverCount

- HealthyHostCount and UnHealthyHostCount report the number of healthy and unhealthy instances registered with the load balancer.

- Reasons for 4XX and 5XX errors

- CloudWatch logs can be used to monitor, store, and access log files from EC2 instances, CloudTrail, Route 53, and other sources. You can create metric filters over the logs.

- CloudWatch Subscription Filters can be used to send logs to Kinesis Data Streams, Lambda, or Kinesis Data Firehose.

- EventBridge (CloudWatch Events) is a serverless event bus service that makes it easy to connect applications with data from a variety of sources.

- EventBridge (CloudWatch Events) rules can trigger targets on a periodic schedule.

- CloudWatch unified agent helps collect metrics and logs from EC2 instances and on-premises servers and push them to CloudWatch.

- CloudTrail for audit and governance

- With Organizations, an organization trail can be configured to log events from all accounts to a central account.

- CloudTrail log file integrity validation can be used to check whether a log file was modified, deleted, or unchanged after being delivered.

- AWS Config is a fully managed service that provides AWS resource inventory, configuration history, and configuration change notifications to enable security, compliance, and governance.

- supports managed as well as custom rules that can be evaluated periodically or when a configuration change occurs, to check compliance and trigger automatic remediation

- Conformance pack is a collection of AWS Config rules and remediation actions that can be easily deployed as a single entity in an account and a Region or across an organization in AWS Organizations.

- Control Tower

- to set up, govern, and secure a multi-account environment

- strongly recommended guardrails cover EBS encryption

- Service Catalog

- allows organizations to create and manage catalogues of IT services that are approved for use on AWS with minimal permissions.

- Trusted Advisor provides recommendations that help follow AWS best practices covering security, performance, cost, fault tolerance & service limits.

- AWS Health Dashboard is the single place to learn about the availability and operations of AWS services.

- Cost allocation tags can be used to differentiate resource costs, which can then be analyzed using Cost Explorer or a cost allocation report.

- Understand how to set up billing alerts using CloudWatch
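To make the CloudFormation template anatomy, the DependsOn attribute, and deletion policies described above more concrete, here is a minimal sketch of a template built as a Python dict and serialized to JSON. The logical IDs (LogBucket, AppInstance), the RegionMap mapping, and the ami-EXAMPLE IDs are hypothetical placeholders; the template illustrates structure and is not meant to be deployed unchanged.

```python
import json

# Hypothetical template: logical IDs, mapping, and AMI IDs are placeholders.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        # Value supplied when the stack is created or updated
        "InstanceType": {"Type": "String", "Default": "t3.micro"},
    },
    "Mappings": {
        # Region-specific lookup table; replace the placeholder AMI IDs
        "RegionMap": {
            "us-east-1": {"AMI": "ami-EXAMPLE1"},
            "eu-west-1": {"AMI": "ami-EXAMPLE2"},
        }
    },
    "Resources": {
        "LogBucket": {
            "Type": "AWS::S3::Bucket",
            # Keep the bucket (and its objects) when the stack is deleted
            "DeletionPolicy": "Retain",
        },
        "AppInstance": {
            "Type": "AWS::EC2::Instance",
            # Explicit ordering: create the instance only after LogBucket
            "DependsOn": "LogBucket",
            "Properties": {
                "InstanceType": {"Ref": "InstanceType"},
                "ImageId": {"Fn::FindInMap": ["RegionMap", {"Ref": "AWS::Region"}, "AMI"]},
            },
        },
    },
    "Outputs": {
        # Ref on an S3 bucket returns the bucket name
        "BucketName": {"Value": {"Ref": "LogBucket"}},
    },
}

template_body = json.dumps(template, indent=2)
print(template_body)
```

Saved to a file, the JSON could then be used to create a stack, e.g. `aws cloudformation create-stack --stack-name <name> --template-body file://template.json`.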

Networking & Content Delivery

- VPC – Virtual Private Cloud is a virtual network in AWS

- Understand Public Subnet (has a route to an Internet Gateway) vs Private Subnet (no direct route to the Internet)

- A route table contains rules, called routes, which determine where network traffic from the subnet is directed

- Internet Gateway enables access to the internet

- Bastion host – allows access to instances in the private subnet without directly exposing them to the internet.

- NAT helps route traffic from private subnets to the internet

- NAT instance vs NAT Gateway

- Virtual Private Gateway – Connectivity between on-premises and VPC

- Egress-Only Internet Gateway – relevant to IPv6 only; allows outbound traffic from instances (e.g. in a private subnet) to the internet while preventing the internet from initiating inbound connections

- VPC Flow Logs enables you to capture information about the IP traffic going to and from network interfaces in the VPC and can help in monitoring the traffic or troubleshooting any connectivity issues

- Security Groups vs NACLs esp. Security Groups are stateful and NACLs are stateless.

- VPC Peering provides a connection between two VPCs that enables routing of traffic between them using private IP addresses.

- VPC Endpoints enable the creation of a private connection between a VPC and supported AWS services and VPC endpoint services powered by PrivateLink, using private IP addresses

- Be able to debug networking issues, e.g. an EC2 instance that is not accessible or not reachable, or that cannot communicate with other instances or the Internet.

- Route 53 provides a scalable DNS system

- supports the ALIAS record type, which helps map zone apex records to ELB, CloudFront, and S3 website endpoints.

- Understand Routing Policies and their use cases

- Failover routing policy helps to configure active-passive failover.

- Geolocation routing policy helps route traffic based on the location of the users.

- Geoproximity routing policy helps route traffic based on the location of the resources and, optionally, shift traffic from resources in one location to resources in another.

- Latency routing policy is used when resources are in multiple AWS Regions and you want to route traffic to the Region that provides the best latency (lowest round-trip time).

- Weighted routing policy helps route traffic to multiple resources in specified proportions.

- Focus on Weighted, Latency routing policies

- Understand the Classic Load Balancer (ELB), ALB, and NLB and the features they provide

- Understand the key differences: ELB vs ALB vs NLB

- ALB provides content and path routing

- NLB provides the ability to give static IPs to the load balancer esp. if there is a requirement to whitelist IPs.

- Load balancer access logs capture the client’s source IP address

- supports Sticky sessions to enable the load balancer to bind a user’s session to a specific target.

- Understand CloudFront and use cases

- CloudFront can be used with S3 to serve static content and websites

- Know VPN and Direct Connect to provide AWS to on-premises connectivity. Not covered in detail.
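As a worked example of planning a VPC with public and private subnets, the sketch below carves one public and one private /24 per AZ out of a /16 using Python’s standard ipaddress module, and accounts for the 5 addresses AWS reserves in every subnet (network address, VPC router, DNS, future use, broadcast). The CIDR and AZ names are hypothetical. Note that the labels are only a plan: what actually makes a subnet public is its route table having a route to an Internet Gateway.

```python
import ipaddress

# AWS reserves the first four and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5

def plan_subnets(vpc_cidr, azs, prefix=24):
    """Carve one public and one private subnet per AZ out of the VPC CIDR."""
    pool = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=prefix)
    plan = {}
    for az in azs:
        plan[(az, "public")] = next(pool)
        plan[(az, "private")] = next(pool)
    return plan

def usable_hosts(subnet):
    """Addresses available for ENIs after the AWS-reserved ones."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET

# Hypothetical VPC CIDR and AZs
plan = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])
for (az, tier), net in plan.items():
    print(az, tier, net, usable_hosts(net))
```

With these inputs, us-east-1a gets 10.0.0.0/24 (public) and 10.0.1.0/24 (private), and each /24 leaves 251 usable addresses.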

Compute

- Understand EC2 in depth

- Understand EC2 instance types and use cases.

- Understand EC2 purchase options esp. spot instances and improved reserved instances options.

- Understand EC2 Metadata & Userdata.

- Understand EC2 Security.

- Use IAM roles with EC2 instances to access other AWS services

- An IAM role can now be attached to stopped and running instances

- AMIs provide the information required to launch an instance, which is a virtual server in the cloud.

- AMIs are regional and can be shared publicly or with other accounts

- Only AMIs with unencrypted volumes or volumes encrypted with a customer managed key (CMK) can be shared.

- The best practice is to use prebaked or golden images to reduce startup time for the applications. Leverage EC2 Image Builder.

- Troubleshooting EC2 issues

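- RequestLimitExceeded – the rate of EC2 API requests has exceeded the allowed limit (throttling). Retry with exponential backoff.

- InstanceLimitExceeded – the limit on concurrently running instances in the Region has been reached (historically 20 by default; On-Demand limits are now vCPU-based). Request a limit increase.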

- InsufficientInstanceCapacity – AWS does not currently have enough available capacity to service the request. Change AZ or Instance Type.

- Monitoring EC2 instances

- System status checks failure – Stop and Start

- Instance status checks failure – Reboot

- EC2 supports Instance Recovery where the recovered instance is identical to the original instance, including the instance ID, private IP addresses, Elastic IP addresses, and all instance metadata.

- EC2 Image Builder can be used to create pre-baked images with software installed to speed up boot and launch time.

- Understand Placement groups

- Cluster placement groups provide low latency and high network throughput for High-Performance Computing by logically grouping instances within a single AZ

- Spread placement groups are groups of instances that are each placed on distinct underlying hardware, i.e. each instance on a distinct rack; they can span multiple AZs

- Partition placement groups spread instances across logical partitions, each with its own set of racks; they can span multiple AZs in a Region

- Understand Auto Scaling

- Auto Scaling can be configured with multiple AZs for high availability to launch instances across multiple AZs

- Auto Scaling attempts to distribute instances evenly between the AZs that are enabled for the Auto Scaling group

- Auto Scaling supports

- Dynamic scaling, which allows you to scale automatically in response to the changing demand

- Scheduled scaling, which allows you to scale the application in response to predictable load changes

- Manual scaling can be performed by changing the desired capacity or adding and removing instances

- Auto Scaling lifecycle hooks can be used to perform custom actions as instances launch or before they terminate.

- Understand Lambda and its use cases

- Lambda functions can be connected to a VPC; for such functions to reach the internet, outbound traffic must be routed through a NAT gateway or NAT instance.

- RDS Proxy acts as an intermediary between the application and an RDS database. RDS Proxy establishes and manages the necessary connection pools to the database so that the application creates fewer database connections.
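The point that Auto Scaling attempts to distribute instances evenly across the enabled AZs can be illustrated with a small toy model: new instances go to the AZ with the fewest instances, and scale-in removes one from the AZ with the most, which loosely mirrors the first step of the default termination policy. This is a simplified sketch, not the service’s algorithm; it ignores capacity shortages, the remaining termination criteria, and rebalancing. AZ names and the tie-breaking rule (list order) are assumptions.

```python
from collections import Counter

def launch_instance(counts, azs):
    """Place a new instance in the AZ with the fewest instances (ties: list order)."""
    az = min(azs, key=lambda a: counts[a])
    counts[az] += 1
    return az

def scale_in(counts, azs):
    """Remove one instance from the AZ with the most instances (ties: list order)."""
    az = max(azs, key=lambda a: counts[a])
    counts[az] -= 1
    return az

azs = ["us-east-1a", "us-east-1b", "us-east-1c"]  # hypothetical AZs
counts = Counter()
placed = [launch_instance(counts, azs) for _ in range(5)]
print(placed)
terminated = scale_in(counts, azs)
print(terminated, dict(counts))
```

Launching 5 instances lands 2, 2, and 1 in the three AZs, and the first scale-in then takes an instance from one of the two fuller AZs, keeping the group balanced.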

Storage

- S3 provides an object storage service

- Understand storage classes with lifecycle policies

- S3 data protection provides encryption at rest and encryption in transit

- S3 default encryption can be used to encrypt the data, and S3 bucket policies can prevent or reject unencrypted object uploads.

- Multipart upload for fault-tolerant and performant uploads of large files

- static website hosting, CORS

- S3 Versioning can help recover from accidental deletes and overwrites.

- Pre-Signed URLs for both upload and download

- S3 Transfer Acceleration enables fast, easy, and secure transfers of files over long distances between the client and an S3 bucket using globally distributed edge locations in CloudFront.

- Understand Glacier as archival storage. Glacier does not provide immediate access to the data, even with expedited retrievals.

- Understand EBS storage options

- EBS vs Instance store volumes

- EBS volume types and their use cases, limitations esp. IOPS

- Storage Gateway allows storage of data in the AWS cloud for scalable and cost-effective storage while maintaining data security.

- Gateway-cached volumes store data in S3 and retain a copy of recently read data locally for low-latency access to frequently accessed data

- Gateway-stored volumes maintain the entire data set locally to provide low latency access

- EFS is a cost-optimized, serverless, scalable, and fully managed file storage for use with AWS Cloud and on-premises resources.

- supports encryption at rest only when the file system is created. An existing file system cannot be encrypted after creation; its data must be copied to a new encrypted file system.

- supports General Purpose and Max I/O performance modes.

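- If you are hitting the PercentIOLimit issue, move to Max I/O performance mode. The performance mode is chosen at creation, so this means creating a new file system and copying the data.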

- FSx makes it easy and cost-effective to launch, run, and scale feature-rich, high-performance file systems in the cloud

- FSx for Windows File Server supports the SMB protocol and Multi-AZ file systems to provide high availability across multiple AZs.

- AWS Backup can be used to automate backup for EC2 instances and EFS file systems

- Data Lifecycle Manager can automate the creation, retention, and deletion of EBS snapshots and EBS-backed AMIs.

- AWS DataSync automates moving data between on-premises storage and S3 or Elastic File System (EFS).
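To illustrate using an S3 bucket policy to reject unencrypted object uploads, here is a sketch of a commonly used policy, built as a Python dict: it denies s3:PutObject whenever the request carries no x-amz-server-side-encryption header. The bucket name is a hypothetical placeholder.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name

deny_unencrypted_uploads = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyUnencryptedObjectUploads",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            # "Null": "true" matches requests where the encryption
            # header is absent, so those uploads are denied.
            "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
        }
    ],
}

policy_json = json.dumps(deny_unencrypted_uploads, indent=2)
print(policy_json)
```

The policy could be applied with `aws s3api put-bucket-policy --bucket example-bucket --policy file://policy.json`. Because the condition inspects the request header, it also rejects uploads that omit the header and would otherwise rely on default encryption, so decide deliberately how the two settings should work together.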

Databases

- RDS provides cost-efficient, resizable capacity for an industry-standard relational database and manages common database administration tasks.

- Understand RDS Multi-AZ vs Read Replicas and use cases

- Multi-AZ deployment provides high availability, durability, and failover support

- Read replicas enable increased scalability and database availability in the case of an AZ failure.

- Automated backups and database change logs enable point-in-time recovery of the database during the backup retention period, up to the last five minutes of database usage.

- Aurora is a fully managed, MySQL- and PostgreSQL-compatible, relational database engine

- Backtracking “rewinds” the DB cluster to the specified time and performs in-place restore and does not create a new instance.

- Automated backups help restore the database as a new DB cluster

- Know ElastiCache use cases, mainly for caching performance

- Understand ElastiCache Redis vs Memcached

- Redis provides Multi-AZ support for high availability across AZs and online resharding to scale dynamically.

- ElastiCache can be used as a caching layer for RDS.

- Know DynamoDB. Not covered in detail
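The RDS point-in-time recovery rule above (anywhere within the backup retention period, up to roughly the last five minutes) can be checked with simple date arithmetic. This is an approximation under stated assumptions: the earliest bound here is simply "now minus retention days", and the five-minute lag is fixed, whereas RDS itself reports the actual LatestRestorableTime for each instance. The timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the latest restorable time trails "now" by about five minutes.
RESTORE_LAG = timedelta(minutes=5)

def restorable_window(now, retention_days):
    """Approximate (earliest, latest) point-in-time restore bounds."""
    earliest = now - timedelta(days=retention_days)
    latest = now - RESTORE_LAG
    return earliest, latest

def can_restore_to(target, now, retention_days):
    """True if the target time falls inside the approximate window."""
    earliest, latest = restorable_window(now, retention_days)
    return earliest <= target <= latest

now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)  # hypothetical "now"
print(can_restore_to(now - timedelta(days=3), now, retention_days=7))     # inside retention
print(can_restore_to(now - timedelta(minutes=2), now, retention_days=7))  # too recent
print(can_restore_to(now - timedelta(days=8), now, retention_days=7))     # beyond retention
```

With a 7-day retention period, a restore to 3 days ago is possible, while 2 minutes ago (too recent) and 8 days ago (outside retention) are not.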

Security

- IAM provides Identity and Access Management services.

- Focus on IAM role and its use case, especially with the EC2 instance

- Understand IAM identity providers and federation and use cases

- Understand the process to configure cross-account access

- S3 Encryption supports data at rest and in transit encryption

- Understand S3 encryption with SSE-S3, SSE-C, and SSE-KMS

- S3 default encryption can help encrypt new objects; however, it does not encrypt objects that existed before the setting was enabled. You can use S3 Inventory to list those objects and S3 Batch Operations to encrypt them.

- Understand KMS for key management and envelope encryption

- KMS keys with imported key material do not support automatic key rotation; rotation has to be done manually.

- AWS WAF – Web Application Firewall helps protect the applications against common web exploits like XSS or SQL Injection and bots that may affect availability, compromise security, or consume excessive resources

- Amazon GuardDuty is a threat detection service that continuously monitors the AWS accounts and workloads for malicious activity and delivers detailed security findings for visibility and remediation.

- AWS Secrets Manager can help securely store and retrieve credentials as well as rotate them.

- Secrets Manager integrates with Lambda and supports credentials rotation

- AWS Shield is a managed Distributed Denial of Service (DDoS) protection service that safeguards applications running on AWS

- Amazon Inspector

- is an automated security assessment service that helps improve the security and compliance of applications deployed on AWS.

- automatically assesses applications for exposure, vulnerabilities, and deviations from best practices.

- AWS Certificate Manager (ACM) handles the complexity of creating, storing, and renewing public and private SSL/TLS X.509 certificates and keys that protect the AWS websites and applications.

- Know AWS Artifact as on-demand access to compliance reports
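For the process to configure cross-account access with IAM roles, the sketch below shows the two policy documents involved, built as Python dicts: a trust policy on the role in the account that owns the resources, allowing principals from the trusted account to call sts:AssumeRole, and an identity policy in the trusted account that lets its users assume that specific role. The account IDs and role name are hypothetical placeholders; the role also needs a separate permissions policy (e.g. read-only access) defining what it can do once assumed.

```python
import json

# Hypothetical account IDs and role name.
TRUSTED_ACCOUNT = "111122223333"  # account whose users will assume the role
TARGET_ACCOUNT = "444455556666"   # account that owns the role
ROLE_NAME = "CrossAccountReadOnly"

# Trust policy attached to the role in the target account.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"AWS": f"arn:aws:iam::{TRUSTED_ACCOUNT}:root"},
        "Action": "sts:AssumeRole",
    }],
}

# Identity policy attached to users or groups in the trusted account.
assume_role_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": "sts:AssumeRole",
        "Resource": f"arn:aws:iam::{TARGET_ACCOUNT}:role/{ROLE_NAME}",
    }],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(assume_role_policy, indent=2))
```

A user in the trusted account would then obtain temporary credentials with `aws sts assume-role --role-arn arn:aws:iam::444455556666:role/CrossAccountReadOnly --role-session-name <name>`.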

Analytics

- Amazon Athena can be used to query S3 data in place using SQL, without duplicating the data

- OpenSearch (Elasticsearch) service is a distributed search and analytics engine built on Apache Lucene.

- A recommended OpenSearch production setup uses 3 AZs, 3 dedicated master nodes, and 6 data nodes (two in each AZ), with two replicas.

Integration Tools

- Understand SQS as a message queuing service and SNS as pub/sub notification service

- Focus on SQS as a decoupling service

- Understand SQS FIFO, make sure you know the differences between standard and FIFO

- Understand CloudWatch integration with SNS for notification
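One key difference between standard and FIFO queues is that FIFO queues preserve order within a message group and drop messages whose deduplication ID was already seen within the 5-minute deduplication interval. The toy in-memory model below illustrates just those two behaviors. It is not the SQS API and does not model visibility timeouts, in-flight locking of a message group, or the at-least-once, best-effort-ordering delivery of standard queues. The class and method names are hypothetical.

```python
from collections import defaultdict, deque

# SQS FIFO deduplication interval: 5 minutes.
DEDUP_INTERVAL_SECONDS = 300

class FifoQueueModel:
    """Toy in-memory model of SQS FIFO ordering and deduplication."""

    def __init__(self):
        self._groups = defaultdict(deque)  # message group ID -> ordered bodies
        self._first_seen = {}              # deduplication ID -> time accepted

    def send(self, group_id, dedup_id, body, now):
        """Return True if enqueued, False if dropped as a duplicate."""
        seen = self._first_seen.get(dedup_id)
        if seen is not None and now - seen < DEDUP_INTERVAL_SECONDS:
            return False
        self._first_seen[dedup_id] = now
        self._groups[group_id].append(body)
        return True

    def receive(self, group_id):
        """Messages within a group come back strictly in send order."""
        queue = self._groups[group_id]
        return queue.popleft() if queue else None

q = FifoQueueModel()
q.send("orders", "m1", "create", now=0)
q.send("orders", "m1", "create", now=60)  # duplicate within 5 minutes, dropped
q.send("orders", "m2", "pay", now=61)
print(q.receive("orders"), q.receive("orders"), q.receive("orders"))
```

The retried "create" is dropped, so the consumer sees "create" then "pay" exactly once and in order, and the third receive returns None.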

Practice Labs

- Create IAM users and IAM roles with specific, limited policies.

- Create a private S3 bucket

- enable versioning

- enable default encryption

- enable lifecycle policies to transition and expire the objects

- enable same region replication

- Create a public S3 bucket with static website hosting

- Set up a VPC with public and private subnets with Routes, SGs, NACLs.

- Set up a VPC with public and private subnets and enable communication from private subnets to the Internet using NAT gateway

- Create an EC2 instance, create a snapshot, and restore it as a new instance.

- Set up Security Groups for ALB and Target Groups, and create ALB, Launch Template, Auto Scaling Group, and target groups with sample applications. Test the flow.

- Create a Multi-AZ RDS instance and force a failover (e.g. by rebooting with failover).

- Set up an SNS topic. Use CloudWatch metrics to create a CloudWatch alarm on specific thresholds and send notifications to the SNS topic.

- Set up an SNS topic. Use CloudWatch Logs metric filters to create a CloudWatch alarm on log patterns and send notifications to the SNS topic.

- Update a CloudFormation template and re-run the stack and check the impact.

- Use AWS Data Lifecycle Manager to define snapshot lifecycle.

- Use AWS Backup to define EFS backup with hourly and daily backup rules.
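For the CloudWatch alarm labs, it helps to understand how an alarm evaluates thresholds over several periods. CloudWatch alarms have EvaluationPeriods (N) and DatapointsToAlarm (M) settings: the alarm goes to ALARM when at least M of the last N datapoints breach the threshold. The function below is a simplified model of that rule. It does not model the TreatMissingData options, and returning INSUFFICIENT_DATA whenever fewer than N datapoints exist is a simplifying assumption.

```python
import operator

def evaluate_alarm(datapoints, threshold, evaluation_periods,
                   datapoints_to_alarm, compare=operator.gt):
    """Simplified M-out-of-N alarm evaluation over the most recent datapoints."""
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in recent if compare(value, threshold))
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"

# CPU above 80% in at least 2 of the last 3 periods
print(evaluate_alarm([50, 85, 90, 40, 95], 80, 3, 2))
# CPU below 5% in all of the last 3 periods (e.g. an idle instance)
print(evaluate_alarm([3, 2, 4], 5, 3, 3, compare=operator.lt))
# Recent datapoints are all healthy
print(evaluate_alarm([85, 90, 40, 50, 60], 80, 3, 2))
```

The first two calls evaluate to ALARM and the last to OK; in the lab, the ALARM transition is what triggers the notification to the SNS topic.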

AWS Certified SysOps Administrator – Associate (SOA-C02) Exam Day

- Make sure you are relaxed and get a good night’s sleep. The exam is not tough if you are well-prepared.

- If you are taking the AWS Online exam

- Try to join at least 30 minutes before the actual time as I have had issues with both PSI and Pearson with long wait times.

- The online verification process does take some time, and there are often glitches.

- Remember, you will not be allowed to take the exam if you are more than 30 minutes late.

- Make sure your desk is clear: no watches or external monitors, keep your phone away, and ensure nobody can enter the room.

Finally, All the Best 🙂