AWS Certified Solutions Architect – Professional Exam Learning Path

NOTE: Refer to the Solutions Architect – Professional (SAP-C01) learning path for the latest exam.

I recently cleared the AWS Certified Solutions Architect – Professional exam with 93% after almost 2 months of preparation.

Topic Level Scoring:

1.0 High Availability and Business Continuity: 100%

2.0 Costing: 75%

3.0 Deployment Management: 100%

4.0 Network Design: 85%

5.0 Data Storage: 90%

6.0 Security: 92%

7.0 Scalability & Elasticity: 100%

8.0 Cloud Migration & Hybrid Architecture: 85%

The AWS Solutions Architect – Professional exam is exhaustive: 77 questions in 180 minutes, covering a wide range of AWS services and how they work and integrate together. However, the questions are a bit old and have not kept pace with the fast-changing AWS enhancements.

If you are looking for an Associate preparation guide, please refer to:

- AWS Solutions Architect – Associate (SAA-C01)

- AWS SysOps Administrator – Associate (SOA-C01)

- AWS Developer – Associate (DVA-C01)

Refer to the AWS Solutions Architect – Professional Exam Blueprint.

The AWS Solutions Architect – Professional exam validates the ability to:

- Identify and gather requirements in order to define a solution to be built on AWS

- Evolve systems by introducing new services and features

- Assess the tradeoffs and implications of architectural decisions and choices for applications deployed in AWS

- Design an optimal system by meeting project requirements while maximizing characteristics such as scalability, security, reliability, durability, and cost effectiveness

- Evaluate project requirements and make recommendations for implementation, deployment, and provisioning applications on AWS

- Provide best practice and architectural guidance over the lifecycle of a project

AWS Cloud Computing Whitepapers

- Overview of Security Processes

- Storage Options in the Cloud – without which you cannot clear the exam

- Defining Fault Tolerant Applications in the AWS Cloud

- Overview of Amazon Web Services

- AWS Risk & Compliance Whitepaper

- Architecting for the AWS Cloud: Best Practices

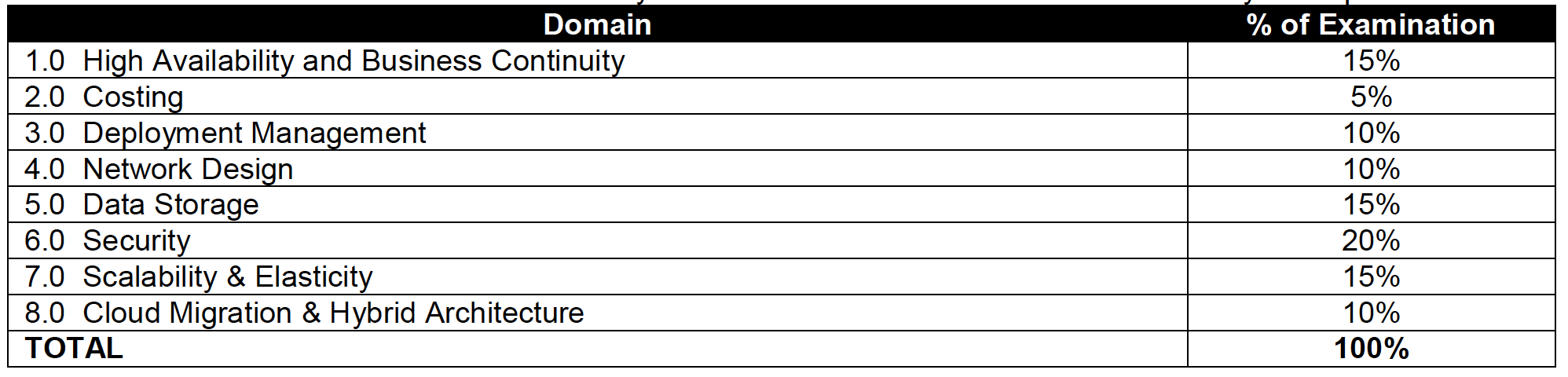

AWS Certified Solutions Architect – Professional Exam Contents

Domain 1.0: High Availability and Business Continuity

- 1.1 Demonstrate ability to architect the appropriate level of availability based on stakeholder requirements

- 1.2 Demonstrate ability to implement DR for systems based on RPO and RTO

- includes the Disaster Recovery whitepaper

- 1.3 Determine appropriate use of multi-Availability Zones vs. multi-Region architectures

- includes service boundaries

- 1.4 Demonstrate ability to implement self-healing capabilities

- 1.5 High Availability vs. Fault Tolerance

- includes High Availability vs Fault Tolerance

Domain 2.0: Costing

- 2.1 Demonstrate ability to make architectural decisions that minimize and optimize infrastructure cost

- 2.2 Apply the appropriate AWS account and billing set-up options based on scenario

- 2.3 Ability to compare and contrast the cost implications of different architectures

- includes S3 storage classes (Standard vs RRS), EC2 purchasing options (RI vs On-Demand vs Spot), S3 vs EBS, DynamoDB vs RDS
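To make the RI vs On-Demand comparison concrete, here is a minimal Python sketch of the break-even arithmetic for a 1-year reservation with an upfront fee plus a discounted hourly rate. The prices are hypothetical placeholders, not real AWS rates, and the model ignores Spot, Savings Plans and term discounts beyond one year.

```python
# Hypothetical prices for illustration only; these are NOT actual AWS rates.
HOURS_PER_YEAR = 8760


def on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """On-Demand: pay only for the hours the instance actually runs."""
    return hourly_rate * hours_used


def reserved_cost(upfront: float, hourly_rate: float) -> float:
    """1-year reservation: upfront fee plus every hour of the term, used or not."""
    return upfront + hourly_rate * HOURS_PER_YEAR


def break_even_utilization(od_rate: float, ri_upfront: float, ri_rate: float) -> float:
    """Fraction of the year an instance must run before the reservation is cheaper."""
    return reserved_cost(ri_upfront, ri_rate) / on_demand_cost(od_rate, HOURS_PER_YEAR)


if __name__ == "__main__":
    util = break_even_utilization(od_rate=0.10, ri_upfront=300.0, ri_rate=0.03)
    print(f"Reservation pays off above {util:.0%} utilization")
```

With these made-up numbers the reservation wins once the instance runs more than about 64% of the year, which is why steady-state workloads point to RIs and spiky or short-lived ones to On-Demand or Spot.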

Domain 3.0: Deployment Management

- 3.1 Ability to manage the lifecycle of an application on AWS

- 3.2 Demonstrate ability to implement the right architecture for development, testing, and staging environments

- 3.3 Position and select most appropriate AWS deployment mechanism based on scenario

- includes Elastic Beanstalk: it works with Docker; don't use it to create RDS instances, as the database is tied to the environment's lifecycle

- includes CloudFormation and OpsWorks, in brief

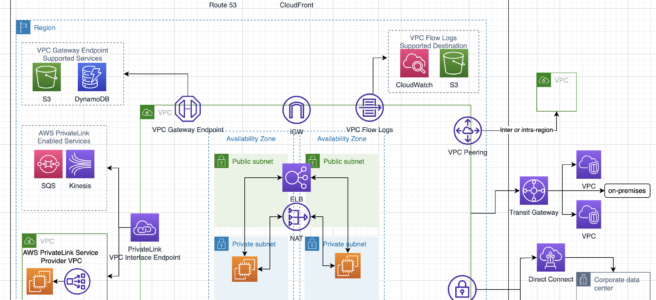

Domain 4.0: Network Design for a complex large scale deployment

- 4.1 Demonstrate ability to design and implement networking features of AWS

- 4.2 Demonstrate ability to design and implement connectivity features of AWS

- includes VPN, Direct Connect

Domain 5.0: Data Storage for a complex large scale deployment

- 5.1 Demonstrate ability to make architectural trade off decisions involving storage options

- includes Storage Options patterns and anti-patterns for S3, EBS, and Instance Store

- 5.2 Demonstrate ability to make architectural trade off decisions involving database options

- includes Storage Options patterns and anti-patterns for RDS, DynamoDB, and databases on EC2

- 5.3 Demonstrate ability to implement the most appropriate data storage architecture

- includes RDS, DynamoDB, S3, ElastiCache, and EBS

- includes Storage Gateway cached vs stored volumes

- 5.4 Determine use of synchronous versus asynchronous replication

- includes RDS Multi-AZ (synchronous replication) vs Read Replicas (asynchronous replication)
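The difference between synchronous and asynchronous replication can be illustrated with a toy in-memory Python model. This is only a sketch of the concept, not how RDS is implemented: the `Primary` and `Replica` classes and the `ship_replication_log` method are hypothetical names. The standby (Multi-AZ style) is updated before a write is acknowledged, while the read replica only catches up when the queued changes are shipped, so it can lag.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    data: dict = field(default_factory=dict)


@dataclass
class Primary:
    sync_standby: Replica    # models a Multi-AZ standby (synchronous)
    async_replica: Replica   # models a read replica (asynchronous)
    pending: list = field(default_factory=list)  # changes not yet shipped to the replica
    data: dict = field(default_factory=dict)

    def write(self, key, value):
        self.data[key] = value
        # Synchronous: the standby holds the change before the write is acknowledged,
        # so a failover to it loses no committed data.
        self.sync_standby.data[key] = value
        # Asynchronous: the change is only queued; the replica may serve stale reads.
        self.pending.append((key, value))
        return "ack"

    def ship_replication_log(self):
        """Apply queued changes to the read replica, closing the replication lag."""
        for key, value in self.pending:
            self.async_replica.data[key] = value
        self.pending.clear()
```

Right after `write("a", 1)` the standby already has `"a"` but the replica does not; only after `ship_replication_log()` do both match. That lag is why read replicas suit read scaling, and the synchronous standby suits high availability.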

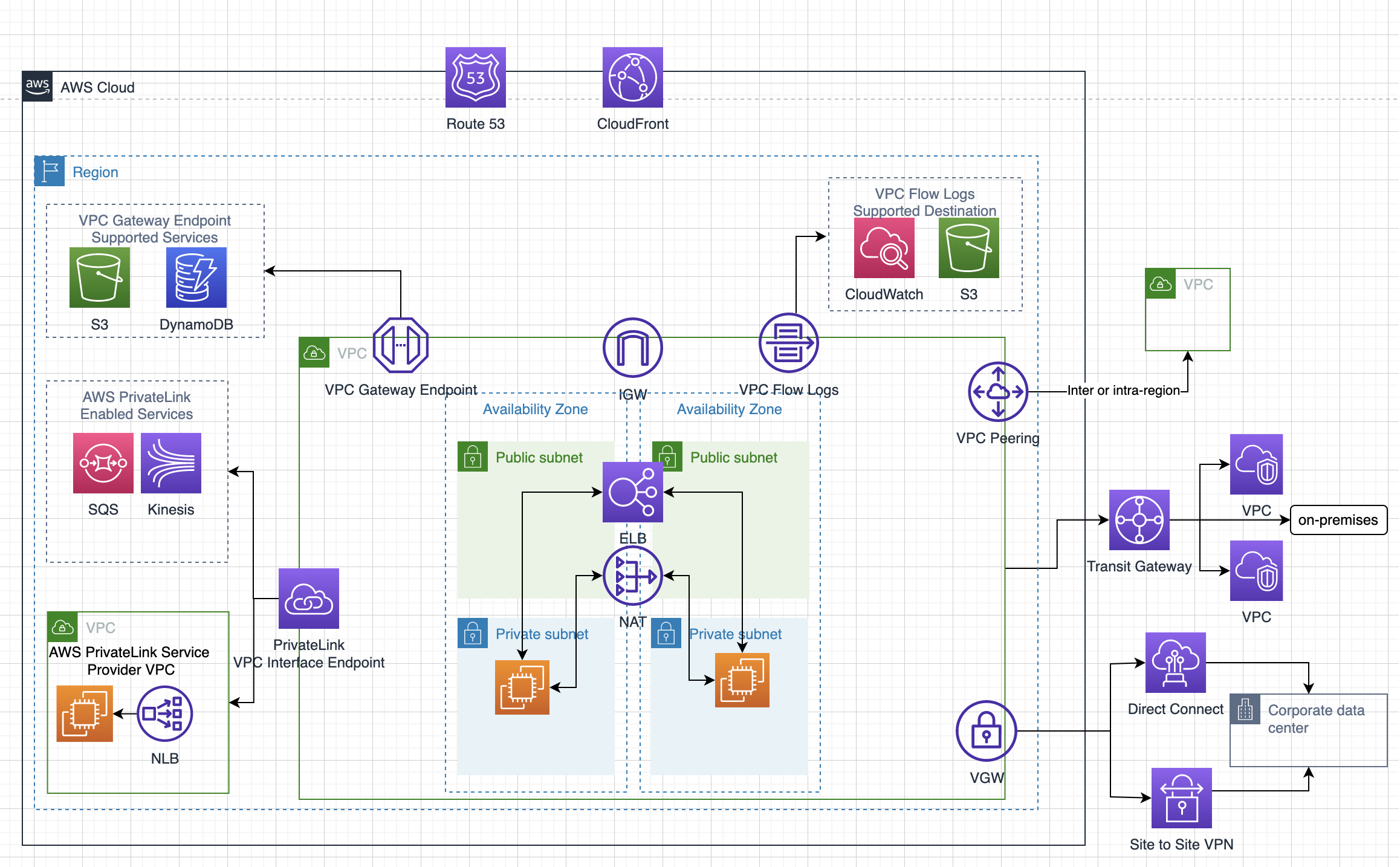

Domain 6.0: Security

- 6.1 Design information security management systems and compliance controls

- includes DDoS attack mitigation steps, WAF, IDS/IPS

- includes IAM Best Practices, CloudTrail

- 6.2 Design security controls with the AWS shared responsibility model and global infrastructure

- includes the Security whitepaper

- 6.3 Design identity and access management controls

- 6.4 Design protection of Data at Rest controls

- includes the Data Encryption at Rest whitepaper

- includes S3 Data Protection

- 6.5 Design protection of Data in Flight and Network Perimeter controls

- includes HTTPS, SSL, Security Groups vs NACLs

- includes CloudFront, ELB with Certificates, Proxy Protocol

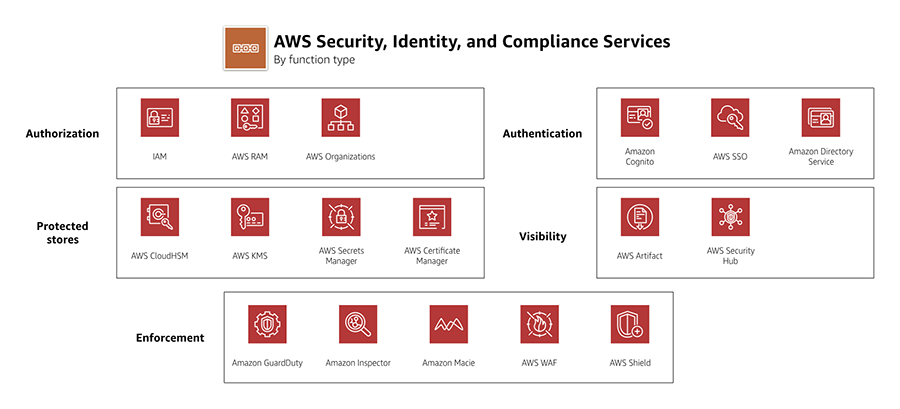

Domain 7.0: Scalability and Elasticity

- 7.1 Demonstrate the ability to design a loosely coupled system

- includes Route 53 health checks and routing policies

- includes SQS to decouple architectures, and the Job Observer pattern for scaling workers based on queue depth

- includes Kinesis for real-time streaming and analytics, parallel outputs, and its default 24-hour data retention

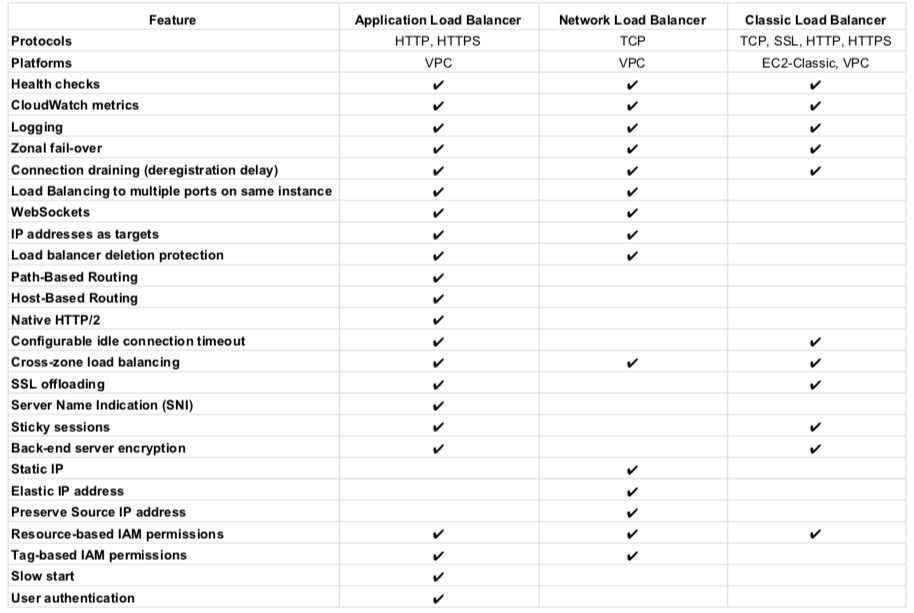

- 7.2 Demonstrate ability to implement the most appropriate front-end scaling architecture

- includes ELB, Auto Scaling, ELB with Auto Scaling

- includes CloudFront, covering cache behaviors, dynamic content, on-premises servers as origins, and HLS with Elastic Transcoder

- 7.3 Demonstrate ability to implement the most appropriate middle-tier scaling architecture

- 7.4 Demonstrate ability to implement the most appropriate data storage scaling architecture

- includes DynamoDB, RDS, RDS with Read Replicas, ElastiCache

- 7.5 Determine trade-offs between vertical and horizontal scaling

- includes a basic understanding that horizontal scaling means scaling in/out (changing the number of instances) and vertical scaling means scaling up/down (changing the instance size)
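The Job Observer pattern scales the number of worker instances based on how many messages are waiting in the SQS queue, for example CloudWatch alarms on the queue's `ApproximateNumberOfMessagesVisible` metric driving an Auto Scaling group. It is also a good illustration of horizontal scaling: capacity changes by adding or removing instances, not by resizing them. The Python sketch below shows only the scaling decision. The function name, the backlog-per-worker target and the min/max limits are hypothetical; on AWS this logic would normally live in scaling policies rather than in your own code.

```python
import math


def desired_workers(queue_depth: int, msgs_per_worker: int,
                    min_workers: int, max_workers: int) -> int:
    """Hypothetical helper: workers needed to drain the SQS backlog.

    queue_depth      -- messages currently waiting in the queue
    msgs_per_worker  -- backlog one worker is expected to handle (assumed target)
    min/max_workers  -- the Auto Scaling group's lower and upper bounds
    """
    if msgs_per_worker <= 0:
        raise ValueError("msgs_per_worker must be positive")
    needed = math.ceil(queue_depth / msgs_per_worker)
    # Scale out when the backlog grows, scale in when it shrinks,
    # but always stay within the group's configured limits.
    return max(min_workers, min(max_workers, needed))
```

For example, with a target of 100 messages per worker and limits of 1 to 10, an empty queue keeps 1 worker, a backlog of 250 scales out to 3, and a backlog of 5000 is capped at 10.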

Domain 8.0: Cloud Migration and Hybrid Architecture

- 8.1 Plan and execute application migrations

- includes migration with regional services, the VM Import/Export tool, AMIs, and on-premises to RDS replication

- includes S3 multipart upload and AWS Import/Export

- 8.2 Demonstrate ability to design hybrid cloud architectures

- includes VPN and Direct Connect, covering configuration, route propagation, Direct Connect with IPsec, and public vs private virtual interfaces

- includes IAM roles with STS to authenticate users from corporate directories

- includes Directory Services
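For S3 multipart upload, the limits worth remembering are a 5 MiB minimum part size (the last part may be smaller), a 5 GiB maximum part size, and at most 10,000 parts per upload. The Python sketch below plans part size and count under those limits. The `plan_parts` name and the 8 MiB preferred part size are assumptions chosen for illustration, and no AWS API is called.

```python
import math

MIN_PART = 5 * 1024 ** 2   # 5 MiB minimum part size (the last part may be smaller)
MAX_PART = 5 * 1024 ** 3   # 5 GiB maximum part size
MAX_PARTS = 10_000         # maximum number of parts in one multipart upload


def plan_parts(object_size: int, preferred_part: int = 8 * 1024 ** 2) -> tuple:
    """Hypothetical helper: return (part_size, part_count) for object_size bytes."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    # Use the preferred size, but grow it when the object would otherwise
    # need more than MAX_PARTS parts, and never go below the S3 minimum.
    part_size = max(MIN_PART, preferred_part, math.ceil(object_size / MAX_PARTS))
    if part_size > MAX_PART:
        raise ValueError("object too large for a single multipart upload")
    return part_size, math.ceil(object_size / part_size)
```

A 100 MiB file becomes 13 parts of 8 MiB (the last one smaller), while a 200 GiB file forces the part size up to roughly 20.5 MiB so it still fits within 10,000 parts. Parts can be uploaded in parallel and retried individually, which is what makes multipart upload useful for large migrations.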

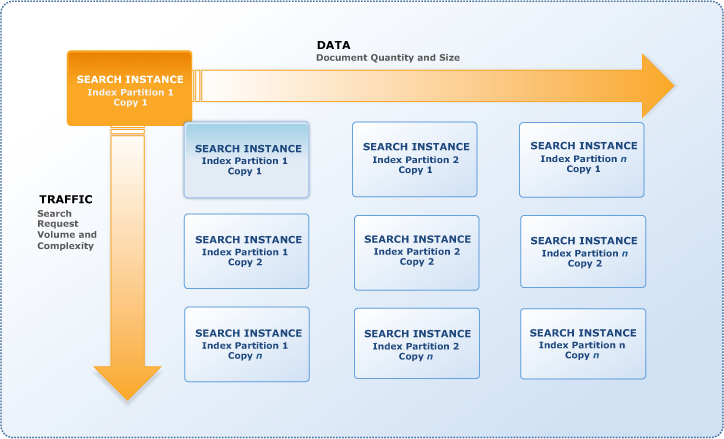

Other services covered include SWF (manual tasks and the ability to retry), SNS Mobile Push, SES for durable email, Elastic Transcoder, CloudSearch for search, Data Pipeline for disaster recovery, CloudWatch for durable log storage, and EMR (how to improve performance).

AWS Solutions Architect – Professional Exam Resources

- I have a couple of years of experience working on AWS

- Online Courses

- The Udemy AWS Certified Solutions Architect – Professional 2019 course by DolfinEd is the highest-rated course, is highly recommended, and covers both the old and new exam patterns.

- The acloud.guru Certified Solutions Architect – Professional course is good, but it is not sufficient on its own to clear the exam

- The Linux Academy course is far more exhaustive and covers a wide range of topics with labs. It also has a free 7-day trial for you to try it out and offers a monthly subscription

- Zeal Vora's AWS Certified Solutions Architect – Professional 2019 Udemy course, which is more detailed and well rated

- Opinion: If you want to go for a single course, I would suggest the Udemy DolfinEd course.

- You can also check these practice tests:

- Braincert AWS Solutions Architect – Professional SAP-C01 Practice Exam, which provides an extensive set of questions with accurate and detailed explanations

- Whizlabs AWS Solutions Architect – Professional practice exams

- Went through a lot of whitepapers, especially:

- Storage Options (without this you cannot pass the exam)

- DDoS

- High Availability & Fault Tolerance

- Disaster Recovery

- Securing Data at Rest

- Went through a lot of re:Invent videos and a couple of playlists

- I have put together a Quick Certification Cheat Sheet (WIP) covering most of the AWS services for a quick recap before the exam.