AWS Lambda Event Source

- Lambda Event Source is an AWS service or developer-created application that produces events that trigger an AWS Lambda function to run.

- Event sources can be either AWS Services or Custom applications.

- Event sources can be both push and pull sources

- Services like S3, and SNS publish events to Lambda by invoking the cloud function directly.

- Lambda can also poll resources in services like Kafka, and Kinesis streams that do not publish events to Lambda.

- Events are passed to a Lambda function as an event input parameter. For batch event sources, such as Kinesis Streams, the event parameter may contain multiple events in a single call, based on the requested batch size

Lambda Event Source Mapping

- Lambda Event source mapping refers to the configuration which maps an event source to a Lambda function.

- Event source mapping

- enables automatic invocation of the Lambda function when events occur.

- identifies the type of events to publish and the Lambda function to invoke when events occur.

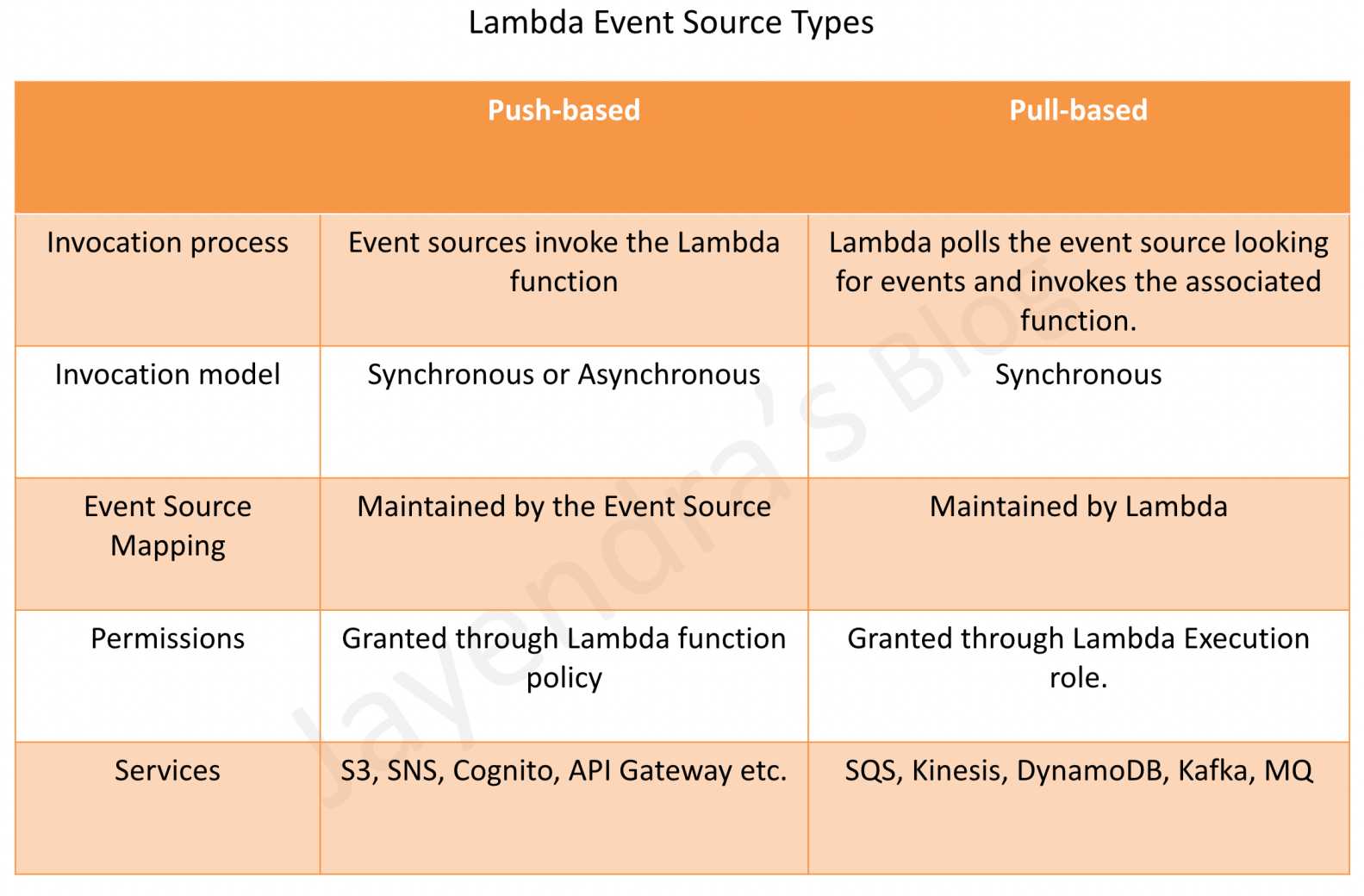

Lambda Event Sources Type

Push-based

- also referred to as the Push model

- includes services like S3, SNS, SES, etc.

- Event source mapping maintained on the event source side

- as the event sources invoke the Lambda function, a resource-based policy should be used to grant the event source the necessary permissions.

Pull-based

- also referred to as the Pull model

- covers mostly the Stream-based event sources like DynamoDB, Kinesis streams, MQ, SQS, Kafka

- Event source mapping maintained on the Lambda side

Lambda Event Sources Invocation Model

Synchronously

- You wait for the function to process the event and return a response.

- Error handling and retries need to be handled by the Client.

- Invocation includes API, and SDK for calls from API Gateway.

Asynchronously

- queues the event for processing and returns a response immediately.

- handles retries and can send invocation records to a destination for successful and failed events.

- Invocation includes S3, SNS, and CloudWatch Events

Lambda Supported Event Sources

AWS Lambda can be configured as an event source for multiple AWS services

| Service | Method of invocation |

|---|---|

| Amazon Alexa | Event-driven; synchronous invocation |

| Amazon MSK – Managed Streaming for Apache Kafka | Lambda polling |

| Self-managed Apache Kafka | Lambda polling |

| Amazon API Gateway | Event-driven; synchronous invocation |

| AWS CloudFormation | Event-driven; asynchronous invocation |

| Amazon CloudFront (Lambda@Edge) | Event-driven; synchronous invocation |

| Amazon EventBridge (CloudWatch Events) | Event-driven; asynchronous invocation |

| Amazon CloudWatch Logs | Event-driven; asynchronous invocation |

| AWS CodeCommit | Event-driven; asynchronous invocation |

| AWS CodePipeline | Event-driven; asynchronous invocation |

| Amazon Cognito | Event-driven; synchronous invocation |

| AWS Config | Event-driven; asynchronous invocation |

| Amazon Connect | Event-driven; synchronous invocation |

| Amazon DynamoDB | Lambda polling |

| Amazon Elastic File System | Special integration |

| Elastic Load Balancing (Application Load Balancer) | Event-driven; synchronous invocation |

| AWS IoT | Event-driven; asynchronous invocation |

| AWS IoT Events | Event-driven; asynchronous invocation |

| Amazon Kinesis | Lambda polling |

| Amazon Kinesis Data Firehose | Event-driven; synchronous invocation |

| Amazon Lex | Event-driven; synchronous invocation |

| Amazon MQ | Lambda polling |

| Amazon Simple Email Service | Event-driven; asynchronous invocation |

| Amazon Simple Notification Service | Event-driven; asynchronous invocation |

| Amazon Simple Queue Service | Lambda polling |

| Amazon S3 | Event-driven; asynchronous invocation |

| Amazon Simple Storage Service Batch | Event-driven; synchronous invocation |

| Secrets Manager | Event-driven; synchronous invocation |

| AWS X-Ray | Special integration |

Amazon S3

- S3 bucket events, such as the object-created or object-deleted events can be processed using Lambda functions for e.g., the Lambda function can be invoked when a user uploads a photo to a bucket to read the image and create a thumbnail.

- S3 bucket notification configuration feature can be configured for the event source mapping, to identify the S3 bucket events and the Lambda function to invoke.

- Error handling for an event source depends on how Lambda is invoked

- S3 invokes your Lambda function asynchronously.

DynamoDB

- Lambda functions can be used as triggers for the DynamoDB table to take custom actions in response to updates made to the DynamoDB table.

- Trigger can be created by

- Enabling DynamoDB Streams for the table.

- Lambda polls the stream and processes any updates published to the stream

- DynamoDB is a stream-based event source and with stream-based service, the event source mapping is created in Lambda, identifying the stream to poll and which Lambda function to invoke.

- Error handling for an event source depends on how Lambda is invoked

Kinesis Streams

- AWS Lambda can be configured to automatically poll the Kinesis stream periodically (once per second) for new records.

- Lambda can process any new records such as social media feeds, IT logs, website click streams, financial transactions, and location-tracking events

- Kinesis Streams is a stream-based event source and with stream-based service, the event source mapping is created in Lambda, identifying the stream to poll and which Lambda function to invoke.

- Error handling for an event source depends on how Lambda is invoked

Simple Notification Service – SNS

- SNS notifications can be processed using Lambda

- When a message is published to an SNS topic, the service can invoke Lambda function by passing the message payload as parameter, which can then process the event

- Lambda function can be triggered in response to CloudWatch alarms and other AWS services that use SNS.

- SNS via topic subscription configuration feature can be used for the event source mapping, to identify the SNS topic and the Lambda function to invoke

- Error handling for an event source depends on how Lambda is invoked

- SNS invokes your Lambda function asynchronously.

Simple Email Service – SES

- SES can be used to receive messages and can be configured to invoke Lambda function when messages arrive, by passing in the incoming email event as parameter

- SES using the rule configuration feature can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- SES invokes your Lambda function asynchronously.

Amazon Cognito

- Cognito Events feature enables Lambda function to run in response to events in Cognito for e.g. Lambda function can be invoked for the Sync Trigger events, that is published each time a dataset is synchronized.

- Cognito event subscription configuration feature can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- Cognito is configured to invoke a Lambda function synchronously

CloudFormation

- Lambda function can be specified as a custom resource to execute any custom commands as a part of deploying CloudFormation stacks and can be invoked whenever the stacks are created, updated, or deleted.

- CloudFormation using stack definition can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- CloudFormation invokes the Lambda function asynchronously

CloudWatch Logs

- Lambda functions can be used to perform custom analysis on CloudWatch Logs using CloudWatch Logs subscriptions.

- CloudWatch Logs subscriptions provide access to a real-time feed of log events from CloudWatch Logs and deliver it to the AWS Lambda function for custom processing, analysis, or loading to other systems.

- CloudWatch Logs using the log subscription configuration can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- CloudWatch Logs invokes the Lambda function asynchronously

CloudWatch Events

- CloudWatch Events help respond to state changes in the AWS resources. When the resources change state, they automatically send events into an event stream.

- Rules that match selected events in the stream can be created to route them to the Lambda function to take action for e.g., the Lambda function can be invoked to log the state of an EC2 instance or AutoScaling Group.

- CloudWatch Events by using a rule target definition can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- CloudWatch Events invokes the Lambda function asynchronously

CodeCommit

- Trigger can be created for a CodeCommit repository so that events in the repository will invoke a Lambda function for e.g., Lambda function can be invoked when a branch or tag is created or when a push is made to an existing branch.

- CodeCommit by using a repository trigger can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- CodeCommit Events invokes the Lambda function asynchronously

Scheduled Events (powered by CloudWatch Events)

- AWS Lambda can be invoked regularly on a scheduled basis using the schedule event capability in CloudWatch Events.

- CloudWatch Events by using a rule target definition can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- CloudWatch Events invokes the Lambda function asynchronously

AWS Config

- Lambda functions can be used to evaluate whether the AWS resource configurations comply with custom Config rules.

- As resources are created, deleted, or changed, AWS Config records these changes and sends the information to the Lambda functions, which can then evaluate the changes and report results to AWS Config. AWS Config can be used to assess overall resource compliance

- AWS Config by using a rule target definition can be used for the event source mapping

- Error handling for an event source depends on how Lambda is invoked

- AWS Config invokes the Lambda function asynchronously

Amazon API Gateway

- Lambda function can be invoked over HTTPS by defining a custom REST API and endpoint using Amazon API Gateway.

- Individual API operations, such as GET and PUT, can be mapped to specific Lambda functions.

- When an HTTPS request to the API endpoint is received, the API Gateway service invokes the corresponding Lambda function.

- Error handling for an event source depends on how Lambda is invoked.

- API Gateway is configured to invoke a Lambda function synchronously.

Other Event Sources: Invoking a Lambda Function On Demand

- Lambda functions can be invoked on-demand without the need to preconfigure any event source mapping in this case.

AWS Certification Exam Practice Questions

- Questions are collected from Internet and the answers are marked as per my knowledge and understanding (which might differ with yours).

- AWS services are updated everyday and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep up the pace with AWS updates, so even if the underlying feature has changed the question might not be updated

- Open to further feedback, discussion and correction.