Google Cloud Data Transfer Services

Google Cloud Data Transfer services provide a range of network connectivity options and transfer tools for moving data from on-premises environments to Google Cloud.

Network Services

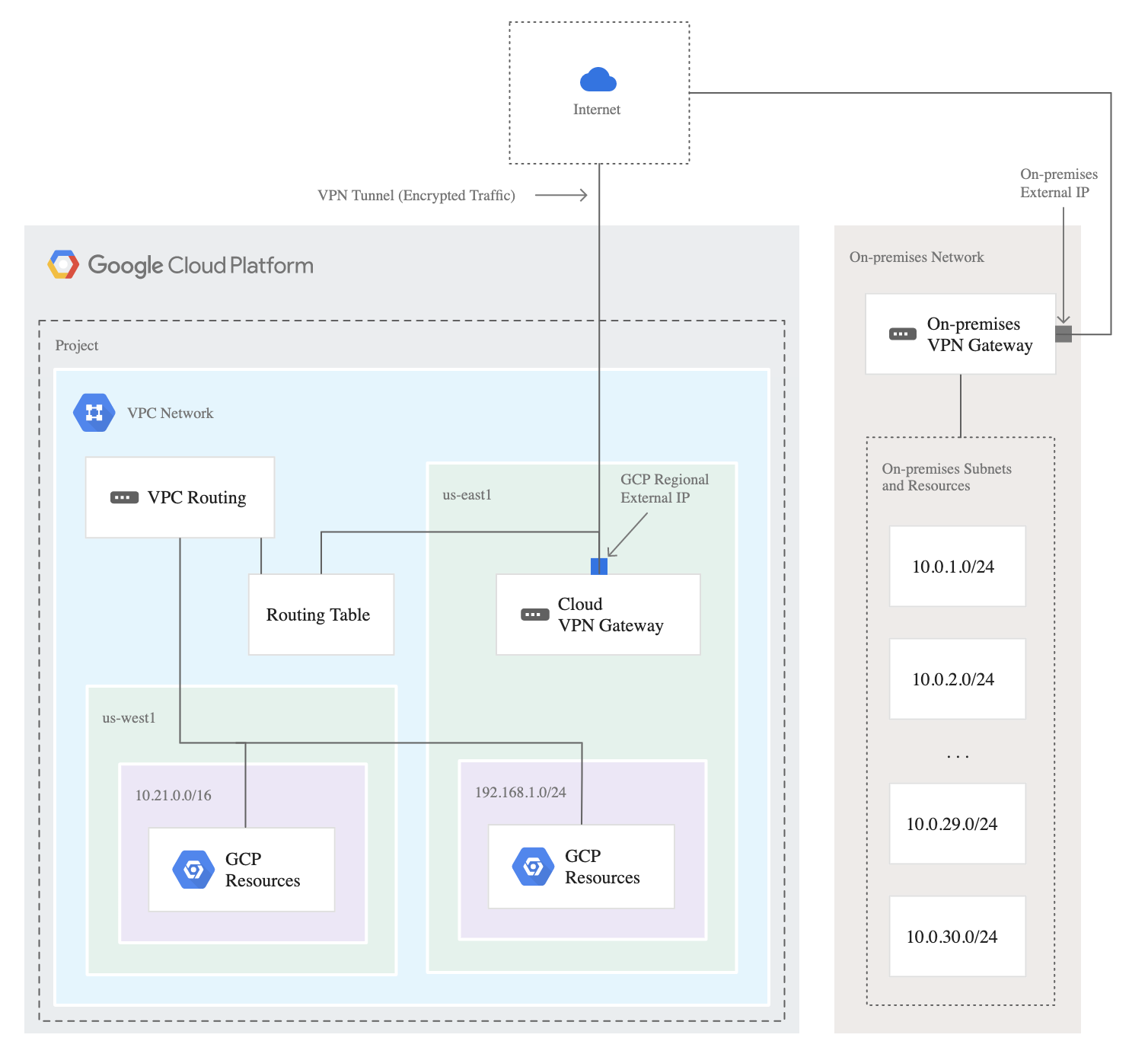

Cloud VPN

- Provides network connectivity between an on-premises network and Google Cloud, or between Google Cloud and another cloud provider.

- Cloud VPN still routes the traffic through the Internet.

- Cloud VPN is quick to set up (as compared to Interconnect).

- Each Cloud VPN tunnel can support up to 3 Gbps total for ingress and egress, but available bandwidth depends on the connectivity.

- Choose Cloud VPN to encrypt traffic to Google Cloud, when a lower-throughput solution is sufficient, or when experimenting with migrating workloads to Google Cloud.

Cloud Interconnect

- Cloud Interconnect offers a direct connection to Google Cloud through Google or one of the Cloud Interconnect service providers.

- Cloud Interconnect keeps data off the public internet and can provide more consistent throughput for large data transfers.

- For an enterprise-grade connection to Google Cloud with higher throughput requirements, choose Dedicated Interconnect (10 Gbps to 100 Gbps) or Partner Interconnect (50 Mbps to 50 Gbps).

- Cloud Interconnect provides access to all Google Cloud products and services from your on-premises network except Google Workspace.

- Cloud Interconnect also allows access to supported APIs and services by using Private Google Access from on-premises hosts.

Direct Peering

- Direct Peering provides access to the Google network with fewer network hops than a public internet connection.

- By using Direct Peering, internet traffic is exchanged between the customer network and Google’s Edge Points of Presence (PoPs), which means the data does not use the public internet.

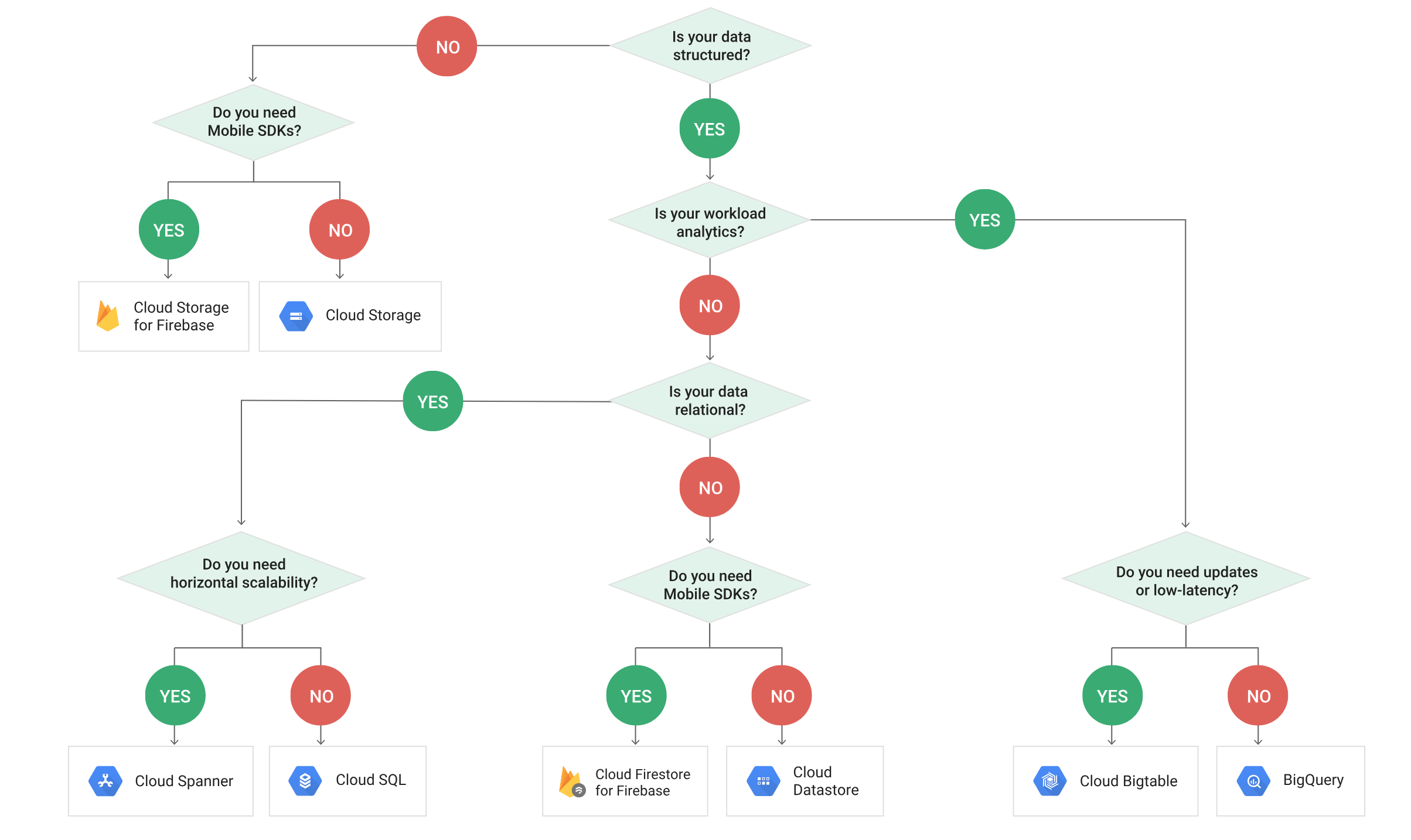

Google Cloud Networking Services Decision Tree

Transfer Services

gsutil

- The `gsutil` tool is the standard tool for small- to medium-sized transfers (less than 1 TB) over a typical enterprise-scale network, from a private data center to Google Cloud.

- `gsutil` provides all the basic features needed to manage Cloud Storage instances, including copying data between the local file system and Cloud Storage.

- `gsutil` can also move, rename and remove objects and perform real-time incremental syncs, like `rsync`, to a Cloud Storage bucket.

- `gsutil` is especially useful in the following scenarios:

  - as-needed transfers or during command-line sessions by your users.

  - transferring only a few files or very large files, or both.

  - consuming the output of a program (streaming output to Cloud Storage).

  - watching a directory with a moderate number of files and syncing any updates with very low latencies.

- `gsutil` provides the following features:

  - Parallel multi-threaded transfers with `gsutil -m`, increasing transfer speeds.

  - Composite transfers for a single large file, which break the file into smaller chunks to increase transfer speed. Chunks are transferred and validated in parallel, sending all data to Google. Once the chunks arrive at Google, they are combined (referred to as compositing) to form a single object.
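The features above map onto a handful of `gsutil` invocations. The following is a minimal Python sketch that only builds the command lines and does not run them. The helper names, the local paths, the bucket `gs://example-bucket` and the `150M` threshold are illustrative assumptions. The flags are standard `gsutil` options: `-m` for parallel transfers, `cp -r` and `rsync -r` for recursive copy and sync, and `-o GSUtil:parallel_composite_upload_threshold=...` to enable composite uploads above a given file size.

```python
import shlex


def gsutil_copy_command(source, bucket_url, parallel=True, composite_threshold=None):
    """Build (but do not run) a recursive `gsutil cp` command as an argument list."""
    cmd = ["gsutil"]
    if composite_threshold:
        # -o overrides a boto configuration value for this invocation only.
        # Files larger than the threshold are uploaded as parallel chunks
        # and then composed into a single object.
        cmd += ["-o", f"GSUtil:parallel_composite_upload_threshold={composite_threshold}"]
    if parallel:
        # -m runs the operation with multiple threads/processes.
        cmd.append("-m")
    cmd += ["cp", "-r", source, bucket_url]
    return cmd


def gsutil_rsync_command(source_dir, bucket_url, parallel=True):
    """Build (but do not run) an incremental `gsutil rsync` command."""
    cmd = ["gsutil"]
    if parallel:
        cmd.append("-m")
    cmd += ["rsync", "-r", source_dir, bucket_url]
    return cmd


if __name__ == "__main__":
    # Hypothetical paths and bucket name, for illustration only.
    print(shlex.join(gsutil_copy_command(
        "./exports", "gs://example-bucket/exports", composite_threshold="150M")))
    print(shlex.join(gsutil_rsync_command("./exports", "gs://example-bucket/exports")))
```

Returning an argument list rather than a string means the result can be passed directly to `subprocess.run` once the paths and bucket have been checked.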

Storage Transfer Service

- Storage Transfer Service is a fully managed, highly scalable service to automate transfers from other public clouds into Cloud Storage.

- Storage Transfer Service for Cloud-to-Cloud transfers

  - supports transfers into Cloud Storage from S3 and HTTP.

  - supports daily copies of any modified objects.

  - doesn’t currently support data transfers to S3.

- Storage Transfer Service also supports transfers of on-premises data from network file system (NFS) storage to Cloud Storage.

- Storage Transfer Service for on-premises data

  - is designed for large-scale transfers (up to petabytes of data, billions of files).

  - supports full copies or incremental copies.

  - can be set up by installing on-premises software (known as agents) onto computers in the data center.

  - has a simple, managed graphical user interface; even non-technically savvy users (after setup) can use it to move data.

  - provides robust error-reporting and a record of all files and objects that are moved.

  - supports executing recurring transfers on a schedule.
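To make the cloud-to-cloud case more concrete, here is a rough Python sketch of a request body for creating a transfer job from S3 to Cloud Storage. The field names follow the Storage Transfer Service REST `transferJobs` resource as I understand it. The function name, project ID and bucket names are hypothetical, and the AWS credentials or role that a real job needs are deliberately left out. Treat it as an illustration of the shape of a job, not a verified request.

```python
import json
from datetime import date


def s3_to_gcs_transfer_job(project_id, s3_bucket, gcs_bucket, start):
    """Return a dict shaped like a transferJobs request body (sketch only).

    Assumption: with a start date and no end date, the job is expected to
    recur on the service's default daily interval.
    """
    return {
        "description": f"Scheduled copy of s3://{s3_bucket} to gs://{gcs_bucket}",
        "projectId": project_id,
        "status": "ENABLED",
        "schedule": {
            "scheduleStartDate": {
                "year": start.year,
                "month": start.month,
                "day": start.day,
            },
        },
        "transferSpec": {
            # Source credentials are intentionally omitted from this sketch.
            "awsS3DataSource": {"bucketName": s3_bucket},
            "gcsDataSink": {"bucketName": gcs_bucket},
        },
    }


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    job = s3_to_gcs_transfer_job(
        "example-project", "example-s3-bucket", "example-gcs-bucket", date(2024, 1, 15))
    print(json.dumps(job, indent=2))
```

Note that the sink is always a Cloud Storage bucket, which matches the point above that transfers to S3 are not supported.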

Transfer Appliance

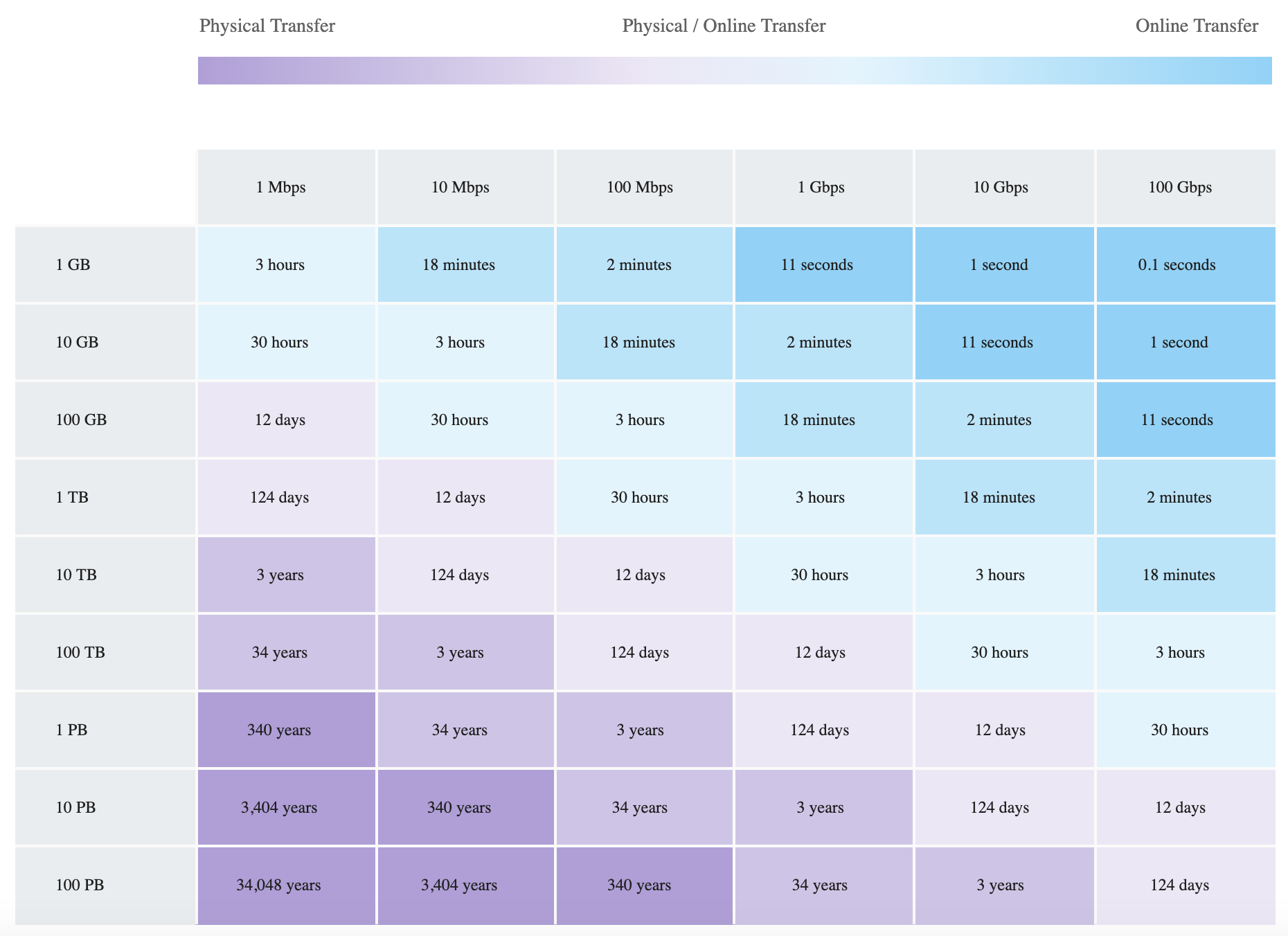

- Transfer Appliance is an excellent option for performing large-scale transfers, especially when a fast network connection is unavailable, when it is too costly to acquire more bandwidth, or when it is a one-time transfer.

- Expected turnaround time for a network appliance to be shipped, loaded with the data, shipped back, and rehydrated on Google Cloud is 50 days.

- Consider Transfer Appliance if the online transfer timeframe is calculated to be substantially more than this timeframe.

- Transfer Appliance requires the ability to receive and ship back the Google-owned hardware.

- Transfer Appliance is available only in certain countries.
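The comparison against the 50-day turnaround comes down to simple arithmetic: data size in bits divided by usable bandwidth. The sketch below assumes decimal units (1 PB = 10^15 bytes, 1 Mbps = 10^6 bits per second) and a hypothetical `efficiency` factor for the share of the link actually available to the transfer. The function names are illustrative.

```python
APPLIANCE_TURNAROUND_DAYS = 50  # expected turnaround quoted in the text above
SECONDS_PER_DAY = 86_400


def transfer_days(data_bytes, link_bits_per_second, efficiency=1.0):
    """Estimate days needed to move data_bytes over a link.

    efficiency is the fraction of the link usable for the transfer
    (an assumption; real links are shared and have protocol overhead).
    """
    if link_bits_per_second <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive and efficiency in (0, 1]")
    seconds = data_bytes * 8 / (link_bits_per_second * efficiency)
    return seconds / SECONDS_PER_DAY


def exceeds_appliance_turnaround(data_bytes, link_bits_per_second, efficiency=1.0):
    """True if the online transfer would take longer than the appliance turnaround."""
    return transfer_days(data_bytes, link_bits_per_second, efficiency) > APPLIANCE_TURNAROUND_DAYS


if __name__ == "__main__":
    one_pb = 10**15          # bytes
    hundred_mbps = 100e6     # bits per second
    print(round(transfer_days(one_pb, hundred_mbps), 1))       # 925.9
    print(exceeds_appliance_turnaround(one_pb, hundred_mbps))  # True
```

At 100 Mbps, 1 PB would take roughly 926 days even with the whole link dedicated to the transfer, far beyond the appliance turnaround. The function only reports whether the estimate exceeds 50 days; how much longer counts as "substantially more" is left to the reader.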

BigQuery Data Transfer Service

- BigQuery Data Transfer Service automates data movement into BigQuery on a scheduled, managed basis.

- After a data transfer is configured, the BigQuery Data Transfer Service automatically loads data into BigQuery on a regular basis.

- BigQuery Data Transfer Service can also initiate data backfills to recover from any outages or gaps.

- BigQuery Data Transfer Service can only sink data to BigQuery and cannot be used to transfer data out of BigQuery.

- BigQuery Data Transfer Service supports loading data from the following data sources:

  - Google Software as a Service (SaaS) apps

    - Campaign Manager

    - Cloud Storage

    - Google Ad Manager

    - Google Ads

    - Google Merchant Center (beta)

    - Google Play

    - Search Ads 360 (beta)

    - YouTube Channel reports

    - YouTube Content Owner reports

  - External cloud storage providers

    - Amazon S3

  - Data warehouses

    - Teradata

    - Amazon Redshift

Transfer Data vs Speed Comparison

GCP Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- GCP services are updated every day, and both the answers and questions might be outdated soon, so research accordingly.

- GCP exam questions are not updated to keep pace with GCP updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion and correction.

- A company wants to connect cloud applications to an Oracle database in its data center. Requirements are a maximum of 9 Gbps of data and a Service Level Agreement (SLA) of 99%. Which option best suits the requirements?

  - Implement a high-throughput Cloud VPN connection

  - Cloud Router with VPN

  - Dedicated Interconnect

  - Partner Interconnect

- An organization wishes to automate data movement from Software as a Service (SaaS) applications such as Google Ads and Google Ad Manager on a scheduled, managed basis. This data is further needed for analytics and to generate reports. How can the process be automated?

  - Use Storage Transfer Service to move the data to Cloud Storage

  - Use Storage Transfer Service to move the data to BigQuery

  - Use BigQuery Data Transfer Service to move the data to BigQuery

  - Use Transfer Appliance to move the data to Cloud Storage

- Your company’s migration team needs to transfer 1 PB of data to Google Cloud. The network speed between the on-premises data center and Google Cloud is 100 Mbps. The migration activity has a timeframe of 6 months. What is the most efficient way to transfer the data?

  - Use BigQuery Data Transfer Service to transfer the data to Cloud Storage

  - Expose the data as a public URL and use Storage Transfer Service to transfer it

  - Use Transfer Appliance to transfer the data to Cloud Storage

  - Use the `gsutil` command to transfer the data to Cloud Storage

- Your company uses Google Analytics for tracking. You need to export the session and hit data from a Google Analytics 360 reporting view on a scheduled basis into BigQuery for analysis. How can the data be exported?

  - Configure a scheduler in Google Analytics to convert the Google Analytics data to JSON format, then import directly into BigQuery using the `bq` command line.

  - Use `gsutil` to export the Google Analytics data to Cloud Storage, then import into BigQuery and schedule it using Cron.

  - Import data to BigQuery directly from Google Analytics using Cron

  - Use BigQuery Data Transfer Service to import the data from Google Analytics
