Google Cloud – EHR Healthcare Case Study

EHR Healthcare is a leading provider of electronic health record software to the medical industry. EHR Healthcare provides its software as a service to multi-national medical offices, hospitals, and insurance providers.

Executive statement

Our on-premises strategy has worked for years but has required a major investment of time and money in training our team on distinctly different systems, managing similar but separate environments, and responding to outages. Many of these outages have been a result of misconfigured systems, inadequate capacity to manage spikes in traffic, and inconsistent monitoring practices. We want to use Google Cloud to leverage a scalable, resilient platform that can span multiple environments seamlessly and provide a consistent and stable user experience that positions us for future growth.

EHR Healthcare wants to move to Google Cloud to expand and to build scalable, highly available applications. It also wants to leverage automation and infrastructure as code (IaC) to provide consistency across environments and reduce provisioning errors.

Solution Concept

Due to rapid changes in the healthcare and insurance industry, EHR Healthcare’s business has been growing exponentially year over year. They need to be able to scale their environment, adapt their disaster recovery plan, and roll out new continuous deployment capabilities to update their software at a fast pace. Google Cloud has been chosen to replace its current colocation facilities.

EHR wants to build a scalable, highly available setup with disaster recovery, and to introduce continuous integration and continuous deployment.

Existing Technical Environment

EHR’s software is currently hosted in multiple colocation facilities. The lease on one of the data centers is about to expire.

Customer-facing applications are web-based, and many have recently been containerized to run on a group of Kubernetes clusters. Data is stored in a mixture of relational and NoSQL databases (MySQL, MS SQL Server, Redis, and MongoDB).

EHR is hosting several legacy file- and API-based integrations with insurance providers on-premises. These systems are scheduled to be replaced over the next several years. There is no plan to upgrade or move these systems at the current time.

Users are managed via Microsoft Active Directory. Monitoring is currently being done via various open-source tools. Alerts are sent via email and are often ignored.

- As the lease of one of the data centers is about to expire, time is critical

- Some web applications are already containerized, are backed by SQL and NoSQL databases, and can be moved

- The legacy systems are scheduled for replacement and need not be migrated

- The team uses multiple monitoring tools, which might need consolidation

Business requirements

On-board new insurance providers as quickly as possible.

Provide a minimum 99.9% availability for all customer-facing systems.

- Availability can be increased by hosting applications across multiple zones and by using managed services that span multiple zones
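
To make the 99.9% target concrete, the arithmetic below converts an availability figure into a downtime budget and shows why spreading replicas across zones helps. It is a rough sketch under an idealized assumption: zone failures are treated as independent, which real deployments only approximate. The function names are illustrative, not part of any Google Cloud API.

```python
# Downtime budget implied by an availability target, plus the combined
# availability of redundant replicas under an independence assumption.

HOURS_PER_YEAR = 365 * 24
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60


def downtime_budget(availability: float) -> dict:
    """Return the allowed downtime for a given availability fraction."""
    unavailable = 1.0 - availability
    return {
        "hours_per_year": unavailable * HOURS_PER_YEAR,
        "minutes_per_30_day_month": unavailable * MINUTES_PER_30_DAY_MONTH,
    }


def redundant_availability(single: float, replicas: int) -> float:
    """Availability when the service is up if at least one replica is up.

    Assumes replica failures are independent (an idealization).
    """
    return 1.0 - (1.0 - single) ** replicas


budget = downtime_budget(0.999)
print(f"{budget['hours_per_year']:.2f} hours per year")
print(f"{budget['minutes_per_30_day_month']:.1f} minutes per 30-day month")
print(f"{redundant_availability(0.99, 2):.4f} with two 99% replicas")
```

Under these assumptions, 99.9% leaves roughly 8.76 hours of downtime per year, and two independent 99% replicas already reach about 99.99% in the model.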

Provide centralized visibility and proactive action on system performance and usage.

- Cloud Monitoring can be used to provide centralized visibility and alerting can provide proactive action

- Cloud Logging can also be used for log-based monitoring and alerting
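
As an illustration of proactive alerting, the sketch below builds the JSON body of a Cloud Monitoring alert policy as a plain Python dictionary. The field names follow the Monitoring API's AlertPolicy resource as I understand it; the threshold, the display names, and the notification channel path are hypothetical placeholders, so verify them against the current API reference before use.

```python
import json

# Hypothetical placeholder: a non-email channel (e.g. paging), since the case
# study notes that email alerts are often ignored.
NOTIFICATION_CHANNEL = "projects/PROJECT_ID/notificationChannels/CHANNEL_ID"


def cpu_alert_policy(threshold: float, duration_seconds: int) -> dict:
    """Build an alert policy that fires when VM CPU utilization stays high."""
    return {
        "displayName": "High CPU utilization (example)",
        "combiner": "OR",
        "conditions": [
            {
                "displayName": f"CPU above {threshold:.0%}",
                "conditionThreshold": {
                    "filter": (
                        'metric.type="compute.googleapis.com/instance/cpu/utilization" '
                        'AND resource.type="gce_instance"'
                    ),
                    "comparison": "COMPARISON_GT",
                    "thresholdValue": threshold,
                    "duration": f"{duration_seconds}s",
                    "aggregations": [
                        {"alignmentPeriod": "60s", "perSeriesAligner": "ALIGN_MEAN"}
                    ],
                },
            }
        ],
        "notificationChannels": [NOTIFICATION_CHANNEL],
    }


policy = cpu_alert_policy(threshold=0.8, duration_seconds=300)
print(json.dumps(policy, indent=2))
```

Keeping the policy as data like this makes it easy to store in version control and apply identically to every environment.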

Increase ability to provide insights into healthcare trends.

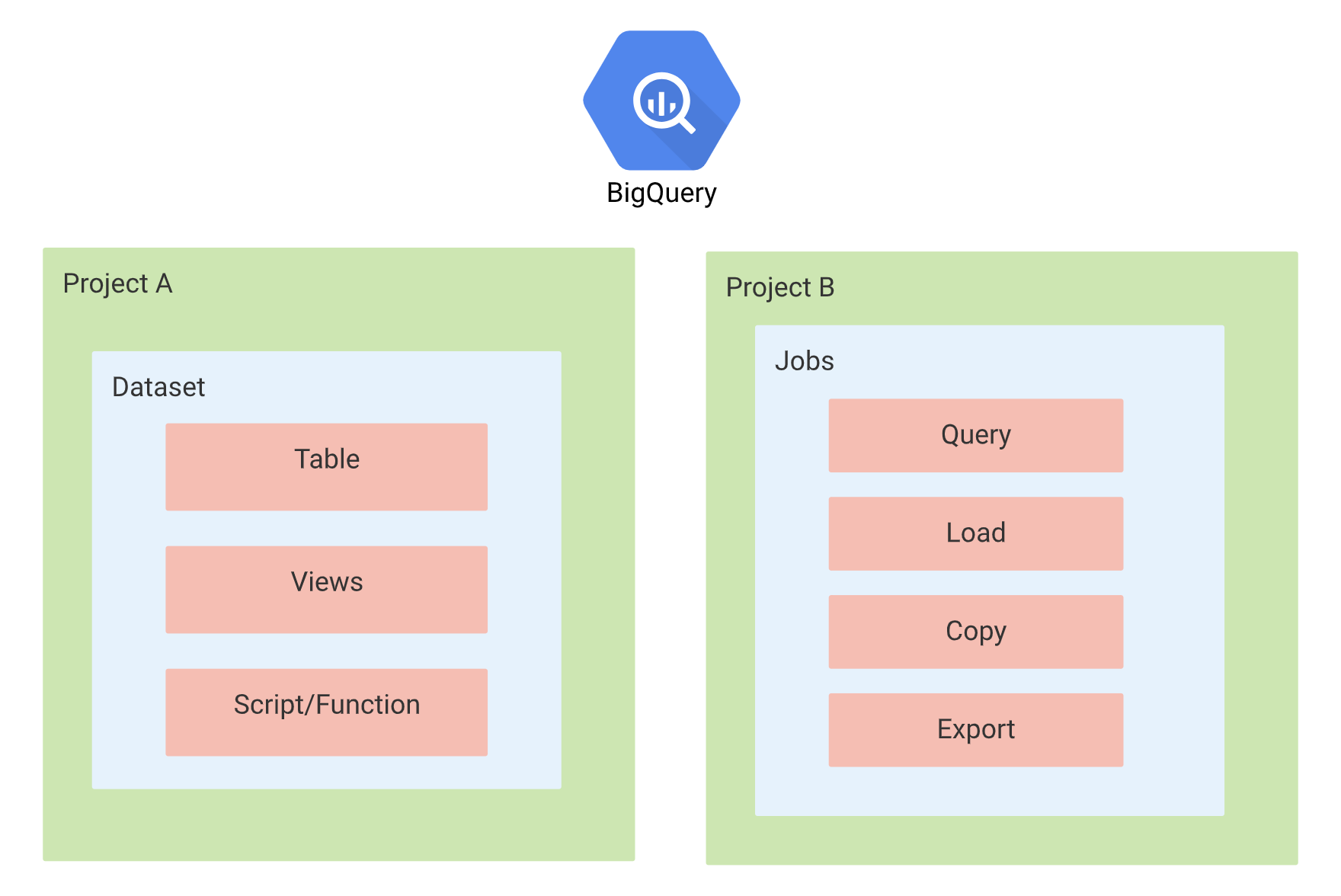

- Data can be pushed to and analyzed in BigQuery, and insights can be visualized using Looker Studio (formerly Data Studio).

Reduce latency to all customers.

- Latency can be reduced by exposing the applications through a global load balancer.

- Applications can also be hosted across regions for low latency access.

Maintain regulatory compliance.

- Regulatory compliance can be maintained using data localization, data retention policies, and security measures.

Decrease infrastructure administration costs.

- Infrastructure administration costs can be reduced using automation with either Terraform or Deployment Manager

Make predictions and generate reports on industry trends based on provider data.

- Data can be pushed and analyzed using BigQuery.

Technical requirements

Maintain legacy interfaces to insurance providers with connectivity to both on-premises systems and cloud providers.

Provide a consistent way to manage customer-facing applications that are container-based.

- Container-based applications can be deployed on GKE or Cloud Run with a consistent CI/CD experience

Provide a secure and high-performance connection between on-premises systems and Google Cloud.

- Cloud VPN, Dedicated Interconnect, or Partner Interconnect connections can be established between on-premises and Google Cloud

Provide consistent logging, log retention, monitoring, and alerting capabilities.

Maintain and manage multiple container-based environments.

- Use Deployment Manager or another infrastructure-as-code (IaC) tool such as Terraform to provide consistent implementations across environments

Dynamically scale and provision new environments.

- Applications deployed on GKE can be scaled using the Cluster Autoscaler for nodes and the Horizontal Pod Autoscaler (HPA) for deployments.
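
To show how the HPA arrives at a replica count, here is a minimal sketch of the scaling rule described in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance of 1.0 and clamped to the configured bounds. The function and its default bounds are illustrative assumptions; the real controller also handles missing metrics, pod readiness, and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified Horizontal Pod Autoscaler replica calculation."""
    ratio = current_metric / target_metric
    # No scaling action when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # scale out
print(desired_replicas(4, current_metric=30, target_metric=60))   # scale in
print(desired_replicas(4, current_metric=63, target_metric=60))   # within tolerance
print(desired_replicas(4, current_metric=300, target_metric=60))  # capped at max
```

With a 60% CPU target, four pods running at 90% would grow to six, while four pods at 30% would shrink to two. The Cluster Autoscaler then adds or removes nodes when pods cannot be scheduled or nodes sit underused.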

Create interfaces to ingest and process data from new providers.

GCP Certification Exam Practice Questions

- Questions are collected from the Internet, and the answers are marked as per my knowledge and understanding (which might differ from yours).

- GCP services are updated every day, and both the answers and questions might soon be outdated, so research accordingly.

- GCP exam questions are not updated to keep pace with GCP updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion, and correction.

- For this question, refer to the EHR Healthcare case study. In the past, configuration errors put IP addresses on backend servers that should not have been accessible from the internet. You need to ensure that no one can put external IP addresses on backend Compute Engine instances and that external IP addresses can only be configured on the front end Compute Engine instances. What should you do?

- Create an organizational policy with a constraint to allow external IP addresses on the front end Compute Engine instances

- Revoke the compute.networkAdmin role from all users in the project with front end instances

- Create an Identity and Access Management (IAM) policy that maps the IT staff to the compute.networkAdmin role for the organization

- Create a custom Identity and Access Management (IAM) role named GCE_FRONTEND with the compute.addresses.create permission
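
For reference, the organization policy option relies on the list constraint `constraints/compute.vmExternalIpAccess`, which restricts external IP addresses to an explicit allowlist of VM instances. The sketch below builds such a policy document as a Python dictionary in the legacy `listPolicy` format. The project, zone, and instance names are hypothetical, and the exact policy schema should be checked against the current Organization Policy documentation.

```python
import json

CONSTRAINT = "constraints/compute.vmExternalIpAccess"


def instance_uri(project: str, zone: str, name: str) -> str:
    """Format an instance the way the constraint expects allowed values."""
    return f"projects/{project}/zones/{zone}/instances/{name}"


def external_ip_policy(allowed_instances: list[str]) -> dict:
    """Allow external IPs only on the listed instances; all others are denied."""
    return {
        "constraint": CONSTRAINT,
        "listPolicy": {"allowedValues": sorted(allowed_instances)},
    }


# Hypothetical front end instances; backend instances are simply not listed.
frontends = [
    instance_uri("ehr-prod", "us-central1-a", "frontend-1"),
    instance_uri("ehr-prod", "us-central1-b", "frontend-2"),
]
policy = external_ip_policy(frontends)
print(json.dumps(policy, indent=2))
```

Because the policy is enforced at the organization, folder, or project level, it applies regardless of which IAM roles individual users hold, which is what distinguishes it from the role-based options.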

EHR Healthcare References

EHR Healthcare Case Study
