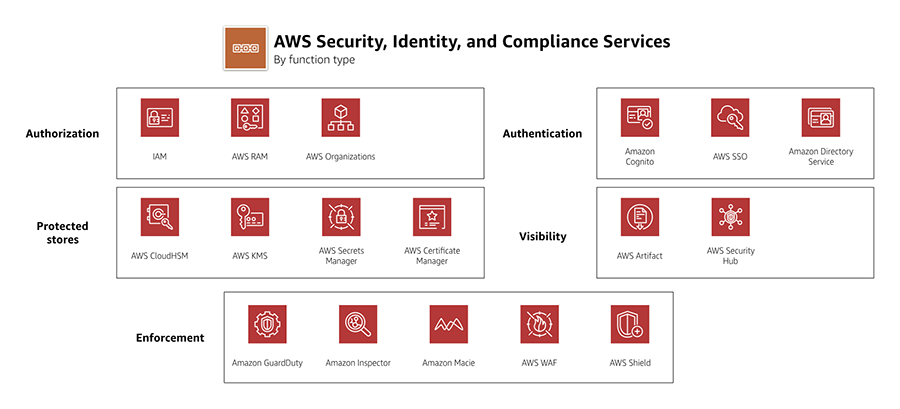

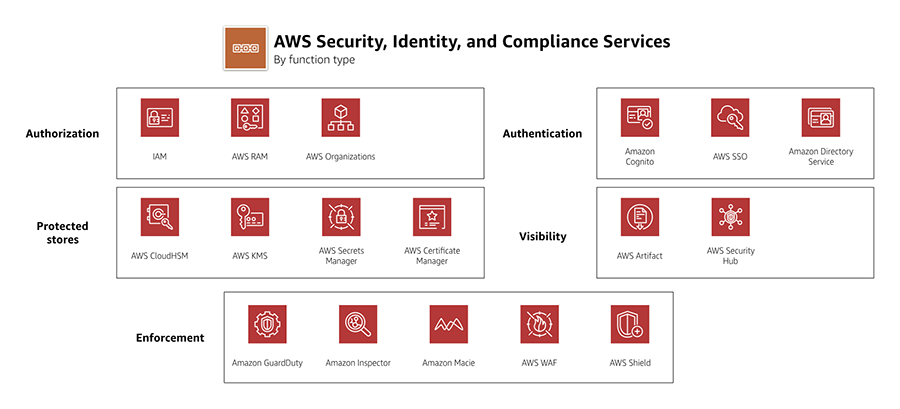

AWS Identity & Security Services Cheat Sheet

Refer to the blog post EBS vs Instance Store.

- Questions are collected from the Internet, and the answers are marked as per my knowledge and understanding (which might differ from yours).

- AWS services are updated every day, and both the questions and the answers might soon be outdated, so research accordingly.

- AWS exam questions are not updated to keep pace with AWS updates, so even if the underlying feature has changed, the question might not have been updated.

- Open to further feedback, discussion, and correction.

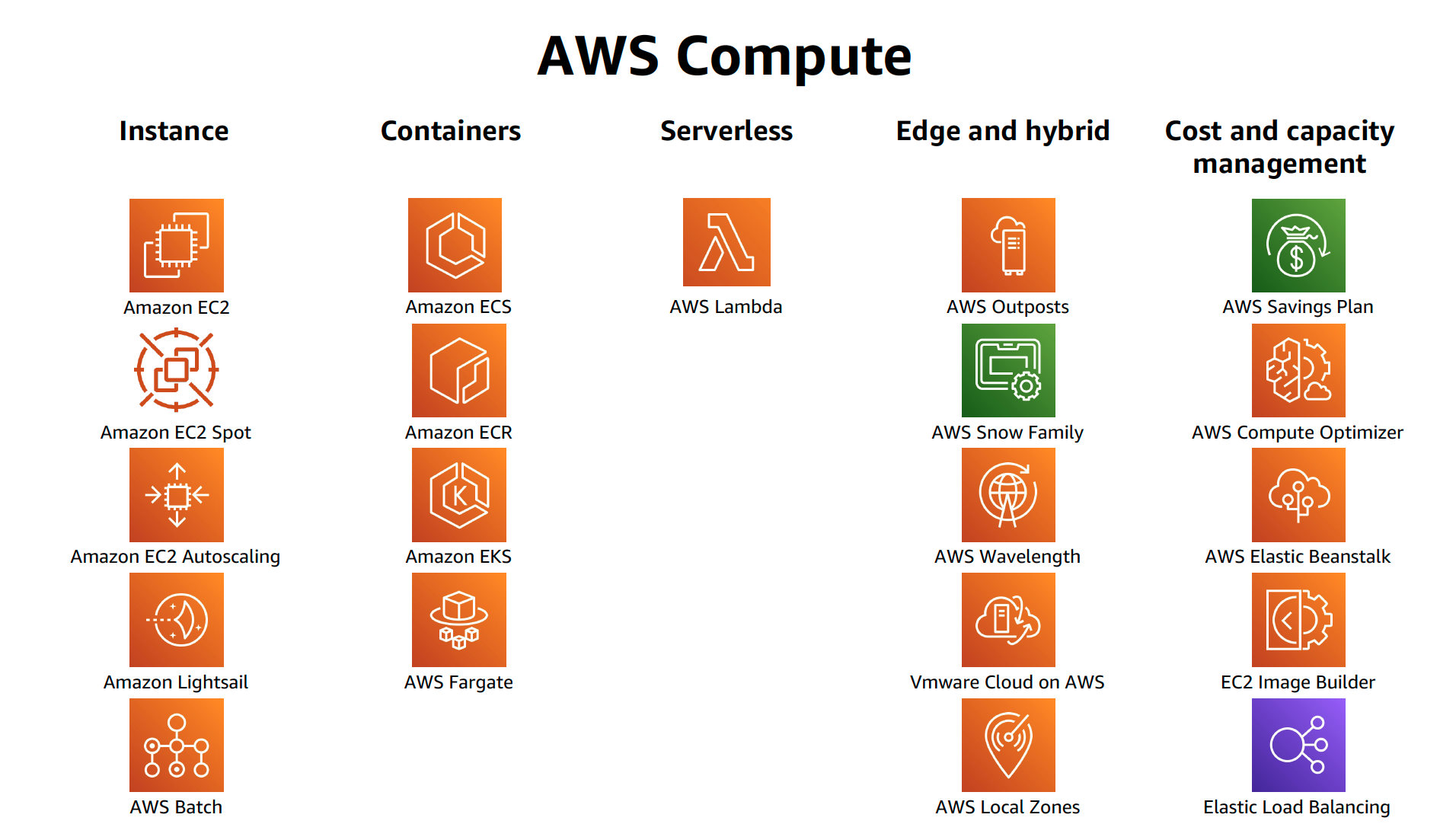

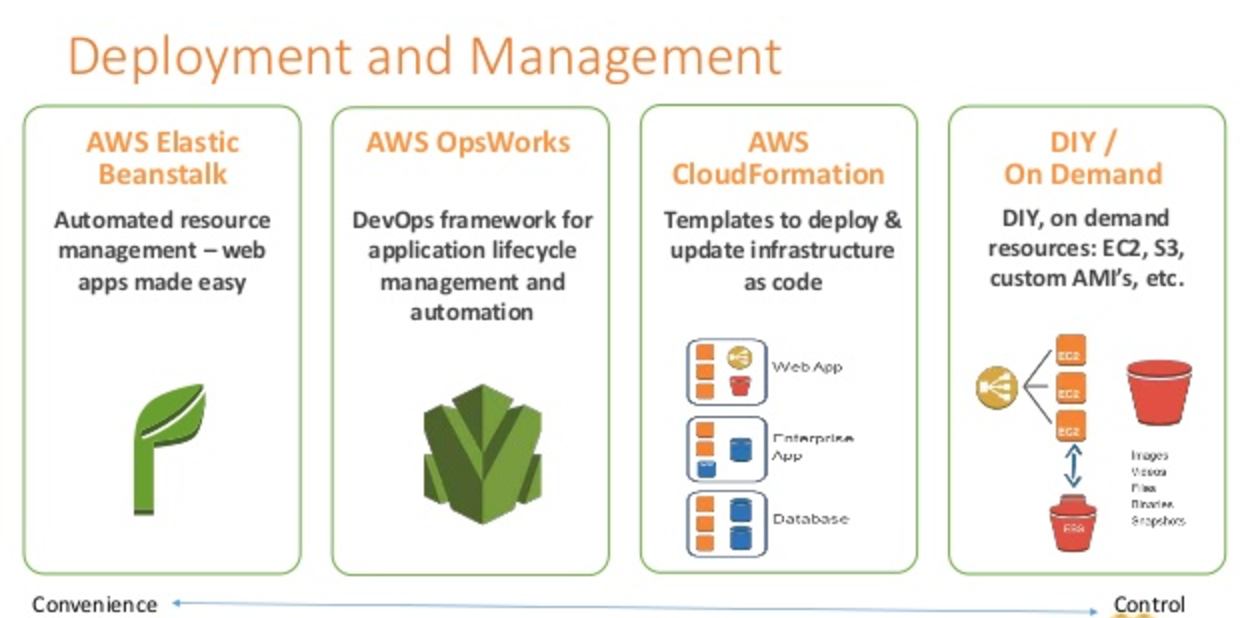

AWS offers multiple options for provisioning IT infrastructure and for deploying and managing applications, ranging from convenience and ease of setup to low-level, granular control.

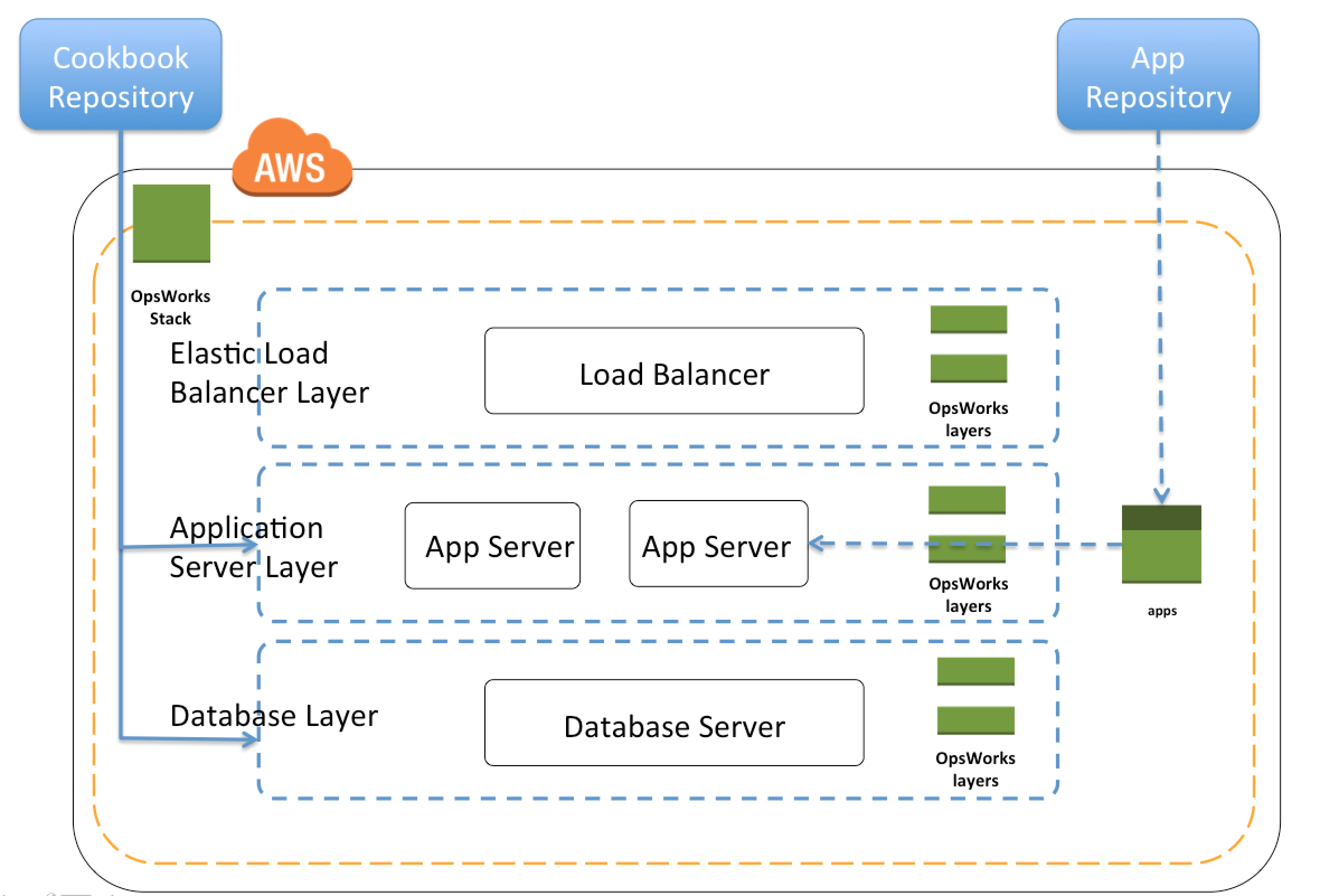

Refer to the OpsWorks Deployment Strategies blog post for details.
