IAM Access Management

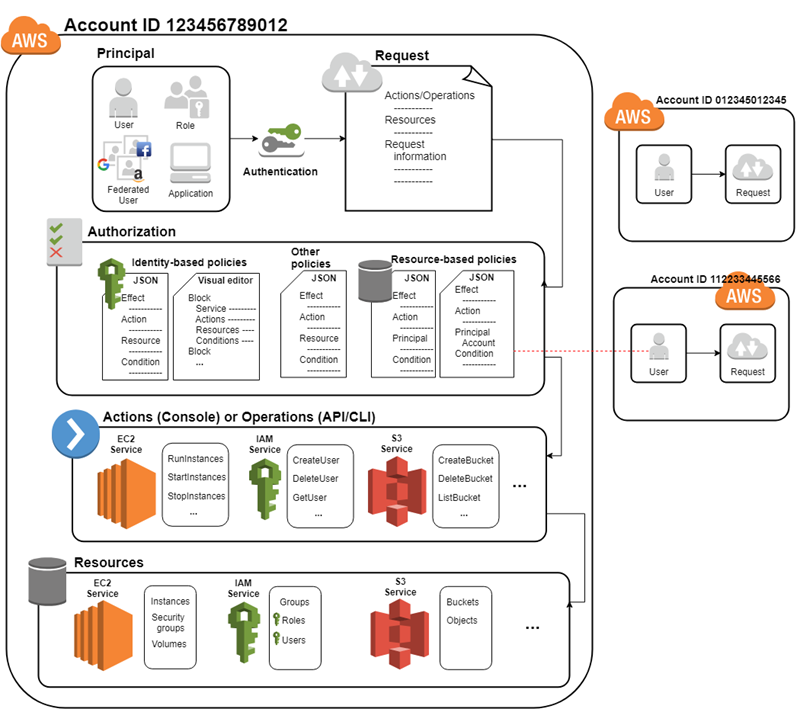

- IAM Access Management is all about Permissions and Policies.

- Permission help define who has access & what actions can they perform.

- IAM Policy helps to fine-tune the permissions granted to the policy owner

- IAM Policy is a document that formally states one or more permissions.

- Most restrictive Policy always wins

- IAM Policy is defined in the JSON (JavaScript Object Notation) format

IAM policy basically states “Principal A is allowed or denied (Effect) to perform Action B on Resource C given Conditions D are satisfied”

|

|

{ "Version": "2012-10-17", "Statement": { "Principal": {"AWS": ["arn:aws:iam::ACCOUNT-ID-WITHOUT-HYPHENS:root"]}, "Action": "s3:ListBucket", "Effect": "Allow", "Resource": "arn:aws:s3:::example_bucket", "Condition": {"StringLike": { "s3:prefix": [ "home/${aws:username}/" ] } } } } |

- An Entity can be associated with Multiple Policies and a Policy can have multiple statements where each statement in a policy refers to a single permission.

- If the policy includes multiple statements, a logical OR is applied across the statements at evaluation time. Similarly, if multiple policies are applicable to a request, a logical OR is applied across the policies at evaluation time.

- Principal can either be specified within the Policy for Resource based policies while for Identity based policies the principal is the user, group, or role to which the policy is attached.

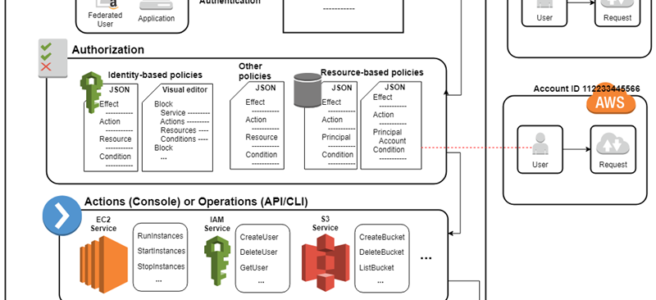

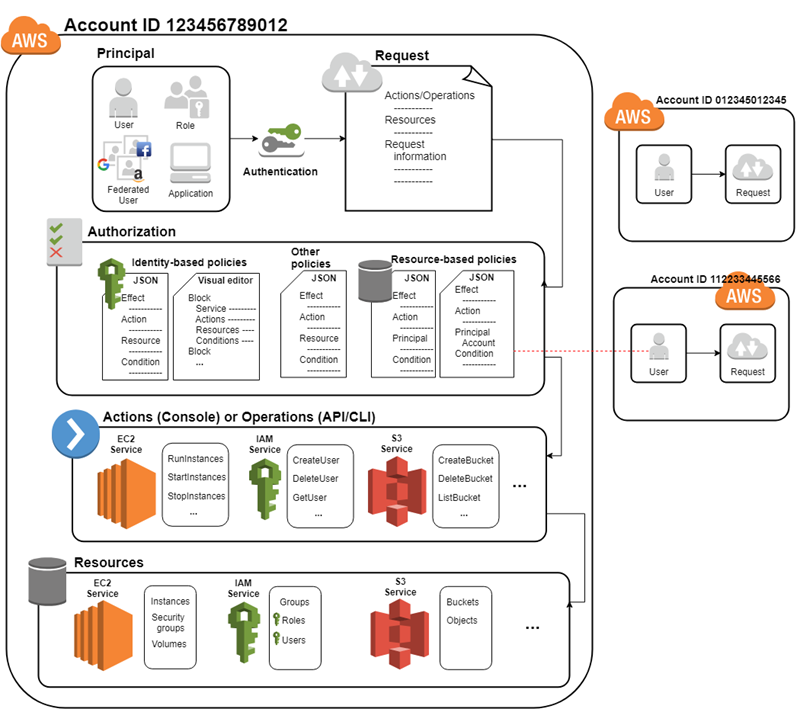

Identity-Based vs Resource-Based Permissions

Identity-based, or IAM permissions

- Identity-based or IAM permissions are attached to an IAM user, group, or role and specify what the user, group, or role can do.

- User, group, or the role itself acts as a Principal.

- IAM permissions can be applied to almost all the AWS services.

- IAM Policies can either be inline or managed (AWS or Customer).

- IAM Policy’s current version is 2012-10-17.

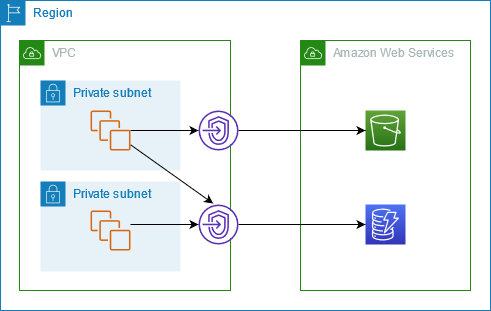

Resource-based permissions

- Resource-based permissions are attached to a resource for e.g. S3, SNS

- Resource-based permissions specify both who has access to the resource (Principal) and what actions they can perform on it (Actions)

- Resource-based policies are inline only, not managed.

- Resource-based permissions are supported only by some AWS services

- Resource-based policies can be defined with version 2012-10-17 or 2008-10-17

Managed Policies and Inline Policies

- Managed policies

- Managed policies are Standalone policies that can be attached to multiple users, groups, and roles in an AWS account.

- Managed policies apply only to identities (users, groups, and roles) but not to resources.

- Managed policies allow reusability

- Managed policy changes are implemented as versions (limited to 5), an new change to the existing policy creates a new version which is useful to compare the changes and revert back, if needed

- Managed policies have their own ARN

- Two types of managed policies:

- AWS managed policies

- Managed policies that are created and managed by AWS.

- AWS maintains and can upgrades these policies for e.g. if a new service is introduced, the changes automatically effects all the existing principals attached to the policy

- AWS takes care of not breaking the policies for e.g. adding an restriction of removal of permission

- Managed policies cannot be modified

- Customer managed policies

- Managed policies are standalone and custom policies created and administered by you.

- Customer managed policies allows more precise control over the policies than when using AWS managed policies.

- Inline policies

- Inline policies are created and managed by you, and are embedded directly into a single user, group, or role.

- Deletion of the Entity (User, Group or Role) or Resource deletes the In-Line policy as well

ABAC – Attribute-Based Access Control

- ABAC – Attribute-based access control is an authorization strategy that defines permissions based on attributes called tags.

- ABAC policies can be designed to allow operations when the principal’s tag matches the resource tag.

- ABAC is helpful in environments that are growing rapidly and help with situations where policy management becomes cumbersome.

- ABAC policies are easier to manage as different policies for different job functions need not be created.

- Complements RBAC for granular permissions, with RBAC allowing access to only specific resources and ABAC can allow actions on all resources, but only if the resource tag matches the principal’s tag.

- ABAC can help use employee attributes from the corporate directory with federation where attributes are applied to their resulting principal.

IAM Permissions Boundaries

- Permissions boundary allows using a managed policy to set the maximum permissions that an identity-based policy can grant to an IAM entity.

- Permissions boundary allows it to perform only the actions that are allowed by both its identity-based policies and its permissions boundaries.

- Permissions boundary supports both the AWS-managed policy and the customer-managed policy to set the boundary for an IAM entity.

- Permissions boundary can be applied to an IAM entity (user or role ) but is not supported for IAM Group.

- Permissions boundary does not grant permissions on its own.

IAM Policy Simulator

- IAM Policy Simulator helps test and troubleshoot IAM and resource-based policies

- IAM Policy Simulator can help test the following ways:-

- Test IAM based policies. If multiple policies are attached, you can test all the policies or select individual policies to test. You can test which actions are allowed or denied by the selected policies for specific resources.

- Test Resource based policies. However, Resource-based policies cannot be tested standalone and have to be attached to the Resource

- Test new IAM policies that are not yet attached to a user, group, or role by typing or copying them into the simulator. These are used only in the simulation and are not saved.

- Test the policies with selected services, actions, and resources

- Simulate real-world scenarios by providing context keys, such as an IP address or date, that are included in Condition elements in the policies being tested.

- Identify which specific statement in a policy results in allowing or denying access to a particular resource or action.

- IAM Policy Simulator does not make an actual AWS service request and hence does not make unwanted changes to the AWS live environment

- IAM Policy Simulator just reports the result Allowed or Denied

- IAM Policy Simulator allows to you modify the policy and test. These changes are not propagated to the actual policies attached to the entities

- Introductory Video for Policy Simulator

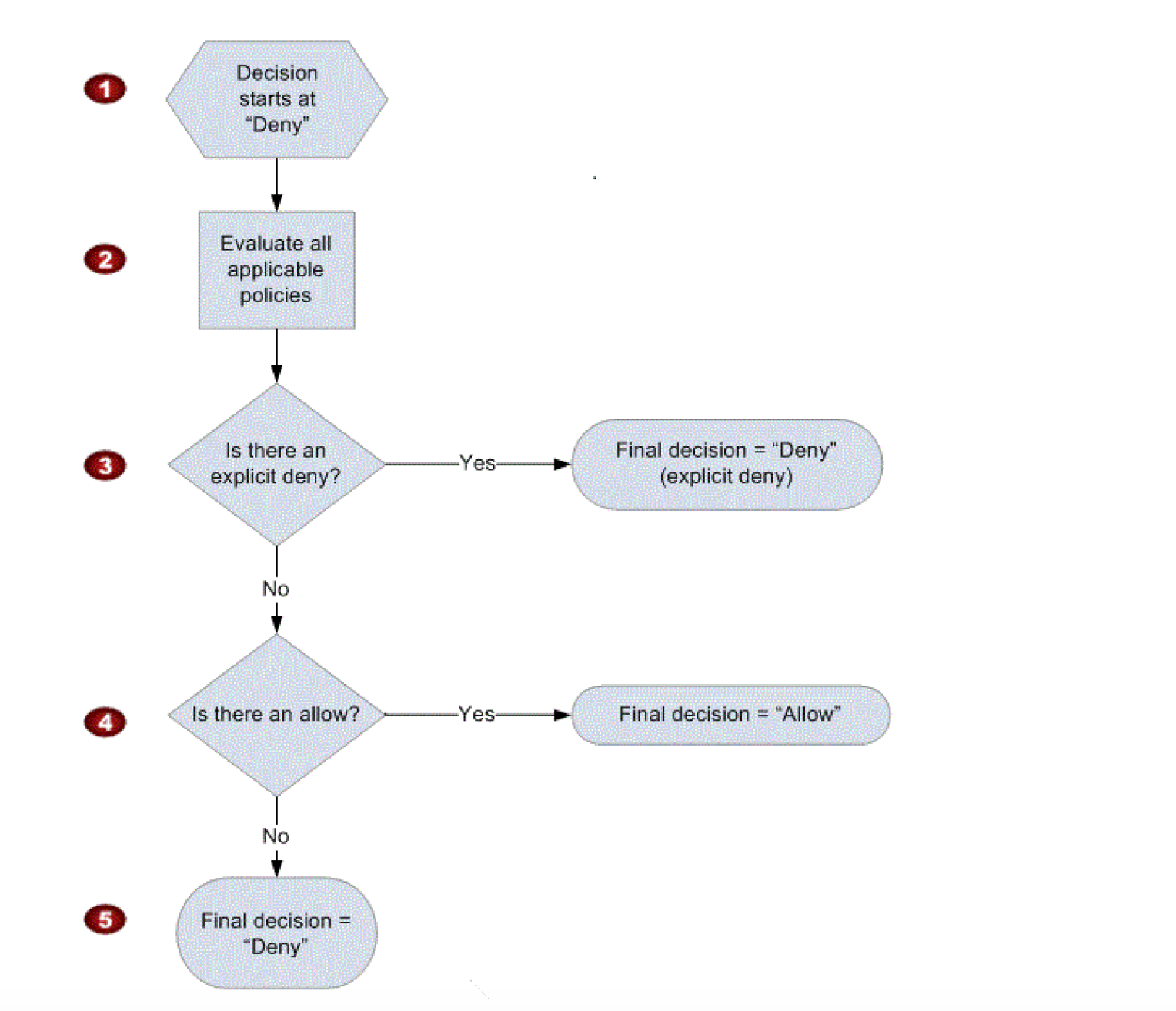

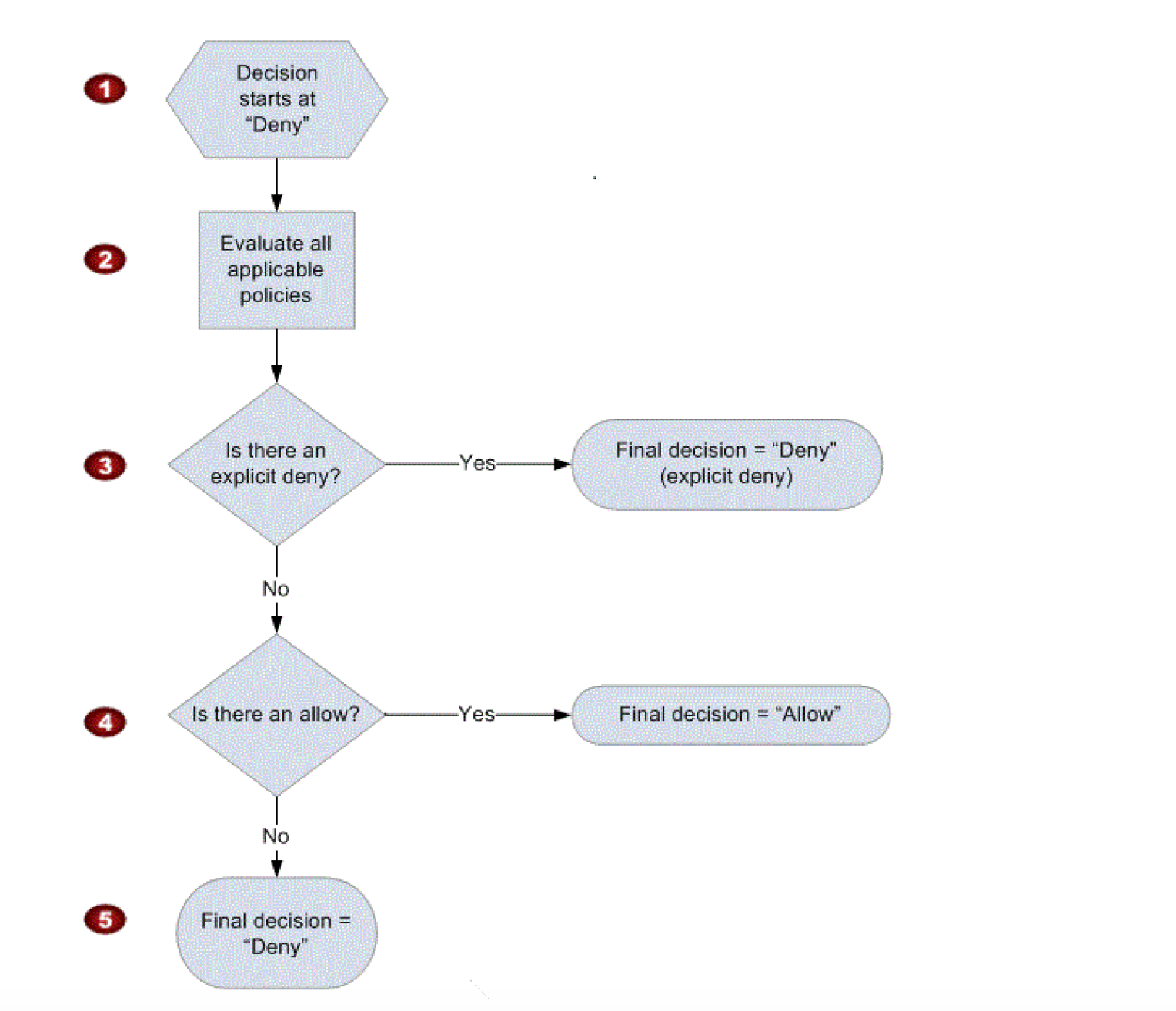

IAM Policy Evaluation

When determining if permission is allowed, the hierarchy is followed

- Decision allows starts with Deny.

- IAM combines and evaluates all the policies.

- Explicit Deny

- First IAM checks for an explicit denial policy.

- Explicit Deny overrides everything and if something is explicitly denied it can never be allowed.

- Explicit Allow

- If one does not exist, it then checks for an explicit allow policy.

- For granting the User any permission, the permission must be explicitly allowed

- Implicit Deny

- If neither an explicit deny nor explicit allow policy exists, it reverts to the default: implicit deny.

- All permissions are implicity denied by default

IAM Policy Variables

- Policy variables provide a feature to specify placeholders in a policy.

- When the policy is evaluated, the policy variables are replaced with values that come from the request itself

- Policy variables allow a single policy to be applied to a group of users to control access for e.g. all user having access to S3 bucket folder with their name only

- Policy variable is marked using a $ prefix followed by a pair of curly braces ({ }). Inside the ${ } characters, with the name of the value from the request that you want to use in the policy

- Policy variables work only with policies defined with Version 2012-10-17

- Policy variables can only be used in the Resource element and in string comparisons in the Condition element

- Policy variables are case sensitive and include variables like aws:username, aws:userid, aws:SourceIp, aws:CurrentTime etc.

AWS Certification Exam Practice Questions

- Questions are collected from Internet and the answers are marked as per my knowledge and understanding (which might differ with yours).

- AWS services are updated everyday and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep up the pace with AWS updates, so even if the underlying feature has changed the question might not be updated

- Open to further feedback, discussion and correction.

- IAM’s Policy Evaluation Logic always starts with a default ____________ for every request, except for those that use the AWS account’s root security credentials b

- Permit

- Deny

- Cancel

- An organization has created 10 IAM users. The organization wants each of the IAM users to have access to a separate DynamoDB table. All the users are added to the same group and the organization wants to setup a group level policy for this. How can the organization achieve this?

- Define the group policy and add a condition which allows the access based on the IAM name

- Create a DynamoDB table with the same name as the IAM user name and define the policy rule which grants access based on the DynamoDB ARN using a variable

- Create a separate DynamoDB database for each user and configure a policy in the group based on the DB variable

- It is not possible to have a group level policy which allows different IAM users to different DynamoDB Tables

- An organization has setup multiple IAM users. The organization wants that each IAM user accesses the IAM console only within the organization and not from outside. How can it achieve this?

- Create an IAM policy with the security group and use that security group for AWS console login

- Create an IAM policy with a condition which denies access when the IP address range is not from the organization

- Configure the EC2 instance security group which allows traffic only from the organization’s IP range

- Create an IAM policy with VPC and allow a secure gateway between the organization and AWS Console

- Can I attach more than one policy to a particular entity?

- Yes always

- Only if within GovCloud

- No

- Only if within VPC

- A __________ is a document that provides a formal statement of one or more permissions.

- policy

- permission

- Role

- resource

- A __________ is the concept of allowing (or disallowing) an entity such as a user, group, or role some type of access to one or more resources.

- user

- AWS Account

- resource

- permission

- True or False: When using IAM to control access to your RDS resources, the key names that can be used are case sensitive. For example, aws:CurrentTime is NOT equivalent to AWS:currenttime.

- TRUE

- FALSE (Refer link)

- A user has set an IAM policy where it allows all requests if a request from IP 10.10.10.1/32. Another policy allows all the requests between 5 PM to 7 PM. What will happen when a user is requesting access from IP 10.10.10.1/32 at 6 PM?

- IAM will throw an error for policy conflict

- It is not possible to set a policy based on the time or IP

- It will deny access

- It will allow access

- Which of the following are correct statements with policy evaluation logic in AWS Identity and Access Management? Choose 2 answers.

- By default, all requests are denied

- An explicit allow overrides an explicit deny

- An explicit allow overrides default deny

- An explicit deny does not override an explicit allow

- By default, all request are allowed

- A web design company currently runs several FTP servers that their 250 customers use to upload and download large graphic files. They wish to move this system to AWS to make it more scalable, but they wish to maintain customer privacy and keep costs to a minimum. What AWS architecture would you recommend? [PROFESSIONAL]

- Ask their customers to use an S3 client instead of an FTP client. Create a single S3 bucket. Create an IAM user for each customer. Put the IAM Users in a Group that has an IAM policy that permits access to subdirectories within the bucket via use of the ‘username’ Policy variable.

- Create a single S3 bucket with Reduced Redundancy Storage turned on and ask their customers to use an S3 client instead of an FTP client. Create a bucket for each customer with a Bucket Policy that permits access only to that one customer. (Creating bucket for each user is not a scalable model, also 100 buckets are a limit earlier without extending which has since changed link)

- Create an auto-scaling group of FTP servers with a scaling policy to automatically scale-in when minimum network traffic on the auto-scaling group is below a given threshold. Load a central list of ftp users from S3 as part of the user Data startup script on each Instance (Expensive)

- Create a single S3 bucket with Requester Pays turned on and ask their customers to use an S3 client instead of an FTP client. Create a bucket tor each customer with a Bucket Policy that permits access only to that one customer. (Creating bucket for each user is not a scalable model, also 100 buckets are a limit earlier without extending which has since changed link)

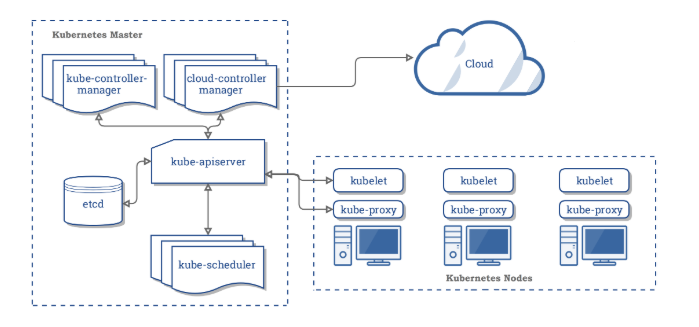

Master components

Master components