Google Cloud Storage – GCS

- Google Cloud Storage is a service for storing unstructured data i.e. objects/blobs in Google Cloud.

- Google Cloud Storage provides a RESTful service for storing and accessing the data on Google’s infrastructure.

- GCS combines the performance and scalability of Google’s cloud with advanced security and sharing capabilities.

Google Cloud Storage Components

Buckets

- Buckets are the logical containers for objects

- All buckets are associated with a project and projects can be grouped under an organization.

- Bucket name considerations

- reside in a single Cloud Storage namespace.

- must be unique.

- are publicly visible.

- can only be assigned during creation and cannot be changed.

- can be used in a DNS record as part of a CNAME or A redirect.

- Bucket name requirements

- must contain only lowercase letters, numbers, dashes (-), underscores (_), and dots (.). Spaces are not allowed. Names containing dots require verification.

- must start and end with a number or letter.

- must contain 3-63 characters. Names containing dots can contain up to 222 characters, but each dot-separated component can be no longer than 63 characters.

- cannot be represented as an IP address in dotted-decimal notation, e.g. 192.168.5.4

- cannot begin with the goog prefix.

- cannot contain google or close misspellings, such as g00gle.
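
The naming requirements above can be expressed as a small validator. This is a minimal sketch that assumes only the rules listed here; the function name `is_valid_bucket_name` is hypothetical, and the "close misspellings" rule is approximated by checking just the literal example `g00gle`.

```python
import ipaddress
import re

# Characters permitted by the rules above: lowercase letters, digits, '-', '_', '.'
_ALLOWED = re.compile(r"^[a-z0-9._-]+$")


def is_valid_bucket_name(name: str) -> bool:
    """Check a candidate name against the listed rules (illustrative only)."""
    if not _ALLOWED.match(name):
        return False
    # Must start and end with a letter or number.
    if not (name[0].isalnum() and name[-1].isalnum()):
        return False
    if len(name) < 3:
        return False
    # 3-63 characters, or up to 222 when dots are present,
    # with every dot-separated component at most 63 characters.
    if "." in name:
        if len(name) > 222 or any(len(part) > 63 for part in name.split(".")):
            return False
    elif len(name) > 63:
        return False
    # Must not look like an IPv4 address such as 192.168.5.4.
    try:
        ipaddress.IPv4Address(name)
        return False
    except ValueError:
        pass
    # Reserved prefix and substring; only two literal spellings are checked here.
    if name.startswith("goog") or "google" in name or "g00gle" in name:
        return False
    return True
```

A local check like this only catches format errors; global uniqueness can only be confirmed by attempting to create the bucket.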

Objects

- An object is a piece of data consisting of a file of any format.

- Objects are stored in containers called buckets.

- Objects are immutable, which means that an uploaded object cannot change throughout its storage lifetime.

- Objects can be overwritten, and overwrites are atomic.

- Object names reside in a flat namespace within a bucket, which means

- Different buckets can have objects with the same name.

- Objects do not reside within subdirectories in a bucket.

- Existing objects cannot be directly renamed and need to be copied
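
To illustrate the flat namespace, the sketch below models a bucket as a plain collection of names and emulates a "folder" view with a prefix and a delimiter. It is an in-memory toy, not the Cloud Storage API; `list_with_delimiter` and `rename` are hypothetical helper names.

```python
def list_with_delimiter(names, prefix="", delimiter="/"):
    """Emulate a prefix/delimiter listing over a flat set of object names.

    Returns (objects, prefixes): the names directly "under" the prefix and
    the simulated sub-folder prefixes.
    """
    objects, prefixes = [], set()
    for name in sorted(names):
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        if delimiter in rest:
            # Everything up to the next delimiter acts like a sub-folder.
            prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            objects.append(name)
    return objects, sorted(prefixes)


def rename(store, old, new):
    """Model a rename as copy-then-delete, since objects cannot be renamed in place."""
    store[new] = store[old]  # copy the data to the new name
    del store[old]           # then delete the original object
```

The "folders" exist only in how the names are interpreted; the stored names themselves remain flat strings such as `logs/2024/a.txt`.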

Object Metadata

- Objects stored in Cloud Storage have metadata associated with them

- Metadata exists as key:value pairs and identifies properties of the object

- Mutability of metadata varies: some metadata can be edited at any time, e.g. Content-Type and Cache-Control, some can be set only at the time the object is created, and some can only be viewed.

Composite Objects

- Composite objects are useful for appending to an existing object and for recreating objects that were uploaded as multiple components in parallel.

- The compose operation requires that the source objects

- have the same storage class.

- are stored in the same Cloud Storage bucket.

- do NOT use customer-managed encryption keys.

Cloud Storage Locations

- GCS buckets need to be created in a location for storing the object data.

- GCS supports the following location types

- regional

- A region is a specific geographic place, such as London.

- helps optimize latency and network bandwidth for data consumers, such as analytics pipelines, that are grouped in the same region.

- dual-region

- is a specific pair of regions, such as Finland and the Netherlands.

- provides higher availability that comes with being geo-redundant.

- multi-region

- is a large geographic area, such as the United States, that contains two or more geographic places.

- allows serving content to data consumers that are outside of the Google network and distributed across large geographic areas.

- provides higher availability that comes with being geo-redundant.

- Objects stored in a multi-region or dual-region are geo-redundant i.e. data is stored redundantly in at least two separate geographic places separated by at least 100 miles.

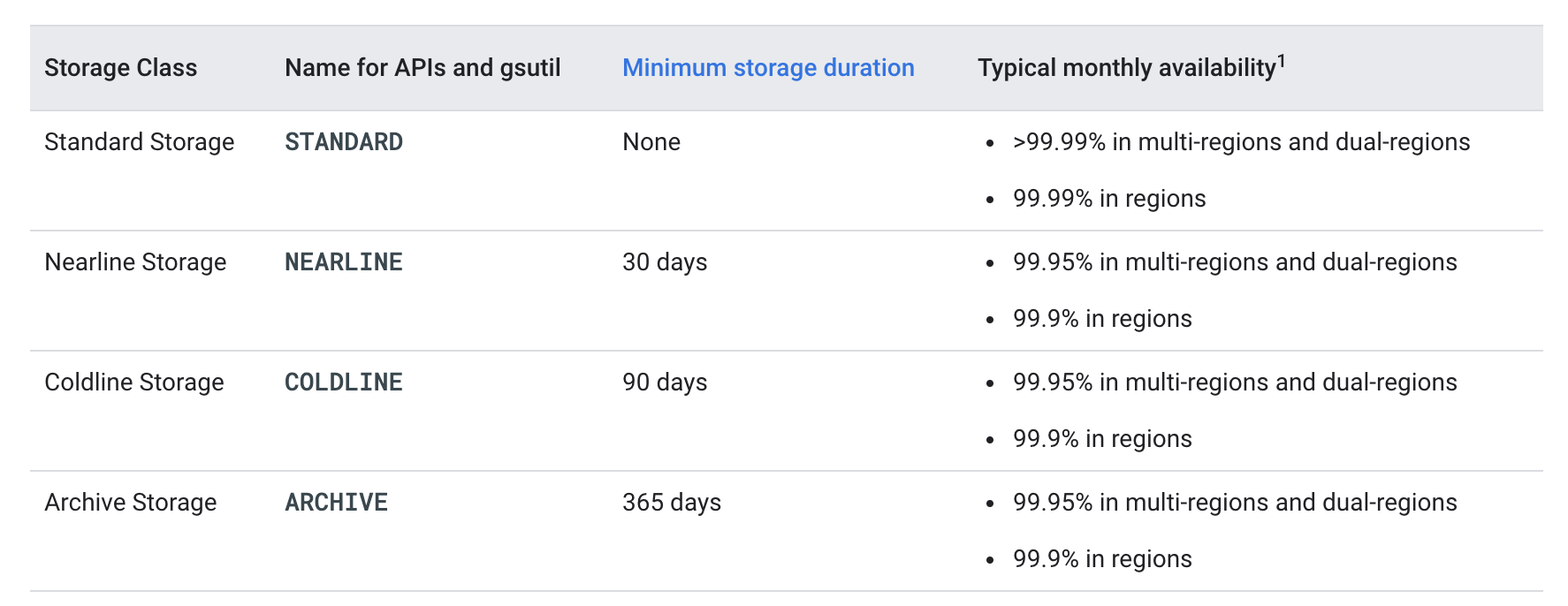

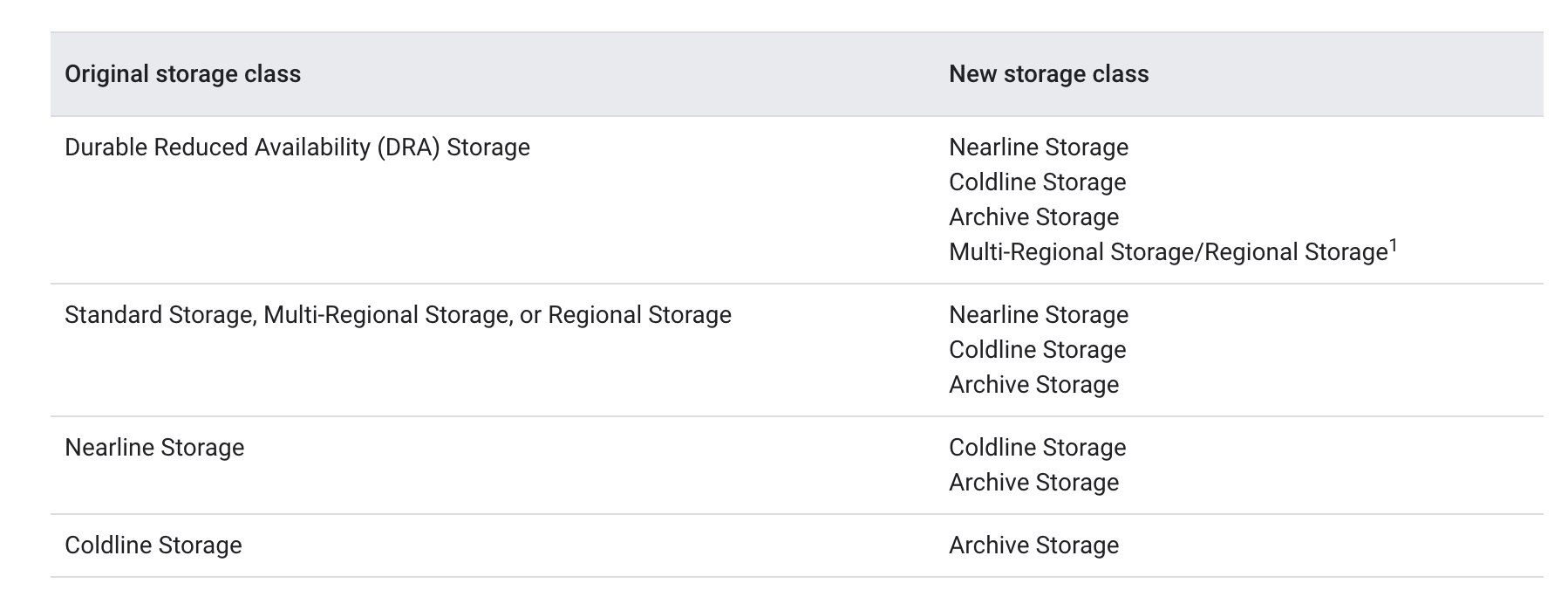

Cloud Storage Classes

Refer to the blog post Google Cloud Storage – Storage Classes

Cloud Storage Security

Refer to the blog post Google Cloud Storage – Security

GCS Upload and Download

- GCS supports upload and storage of any MIME type of data up to 5 TB

- Uploaded object consists of the data along with any associated metadata

- GCS supports multiple upload types

- Simple upload – ideal for small files that can be uploaded again in their entirety if the connection fails, and if there are no object metadata to send as part of the request.

- Multipart upload – ideal for small files that can be uploaded again in their entirety if the connection fails, and there is a need to include object metadata as part of the request.

- Resumable upload – ideal for large files with a need for more reliable transfer. Supports streaming transfers, which is a type of resumable upload that allows uploading an object of unknown size.
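
The guidance above can be summarised as a small decision helper. This is only a sketch: the 5 MiB cut-off for a "small" file is an assumption made for illustration and not a documented Cloud Storage limit, and `choose_upload_type` is a hypothetical name.

```python
from typing import Optional

# Hypothetical cut-off for "small"; not a documented Cloud Storage limit.
SMALL_FILE_BYTES = 5 * 1024 * 1024


def choose_upload_type(size_bytes: Optional[int], has_metadata: bool) -> str:
    """Pick an upload type following the guidance above.

    size_bytes is None when the final size is unknown (streaming transfer).
    """
    if size_bytes is None or size_bytes > SMALL_FILE_BYTES:
        return "resumable"
    return "multipart" if has_metadata else "simple"
```

For example, `choose_upload_type(None, False)` selects a resumable upload because the size is not known in advance.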

Resumable Upload

- Resumable uploads are the recommended method for uploading large files because they don’t need to be restarted from the beginning if there is a network failure while the upload is underway.

- Resumable upload allows resumption of data transfer operations to Cloud Storage after a communication failure has interrupted the flow of data

- Resumable uploads work by sending multiple requests, each of which contains a portion of the object you’re uploading.

- Resumable upload mechanism supports transfers where the file size is not known in advance or for streaming transfer.

- Resumable upload must be completed within a week of being initiated.
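
The "multiple requests, each containing a portion of the object" idea can be sketched by computing the `Content-Range` header each chunk request would carry. The sketch assumes the JSON API convention that chunk sizes are multiples of 256 KiB (except the final chunk) and that a status check reports persisted bytes in a `Range: bytes=0-N` header; the helper names are hypothetical and no network calls are made.

```python
CHUNK_MULTIPLE = 256 * 1024  # chunks are multiples of 256 KiB, except the last one


def content_ranges(total_size: int, chunk_size: int = 8 * CHUNK_MULTIPLE):
    """Return the Content-Range header value for each chunk of an upload."""
    if chunk_size <= 0 or chunk_size % CHUNK_MULTIPLE:
        raise ValueError("chunk_size must be a positive multiple of 256 KiB")
    headers = []
    start = 0
    while start < total_size:
        end = min(start + chunk_size, total_size) - 1  # inclusive end byte
        headers.append(f"bytes {start}-{end}/{total_size}")
        start = end + 1
    return headers


def resume_offset(range_header):
    """Given the Range header from a status check, return the next byte to send."""
    if not range_header:
        return 0  # nothing persisted yet, start from the beginning
    return int(range_header.rsplit("-", 1)[1]) + 1
```

After a network failure, the client asks the service how much was persisted and continues from `resume_offset(...)` instead of restarting from byte 0.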

Streaming Transfers

- Streaming transfers allow streaming data to and from the Cloud Storage account without requiring that the data first be saved to a file.

- Streaming uploads are useful when uploading data whose final size is not known at the start of the upload, such as when generating the upload data from a process, or when compressing an object on the fly.

- Streaming downloads are useful to download data from Cloud Storage into a process.

Parallel Composite Uploads

- Parallel composite uploads divide a file into up to 32 chunks, which are uploaded in parallel to temporary objects. The final object is then recreated from the temporary objects, and the temporary objects are deleted.

- Parallel composite uploads can be significantly faster if network and disk speed are not limiting factors; however, the final object stored in the bucket is a composite object, which only has a crc32c hash and not an MD5 hash.

- As a result, crcmod needs to be used to perform integrity checks when downloading the object with gsutil or other Python applications.

Perform parallel composite uploads only if both of the following apply:

- The bucket does not use default customer-managed encryption keys, because the compose operation does not support source objects encrypted in this way.

- The uploaded objects do not need to have an MD5 hash.
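
The chunking step can be sketched as a function that splits a file into byte ranges while respecting the 32-component limit. This is an illustration of the arithmetic only; `split_ranges` and its `target_chunk` parameter are hypothetical, and the actual upload and compose calls are omitted.

```python
MAX_COMPONENTS = 32  # upper bound on chunks mentioned above


def split_ranges(file_size: int, target_chunk: int):
    """Return (start, end_exclusive) byte ranges, never more than 32 of them."""
    if file_size <= 0 or target_chunk <= 0:
        raise ValueError("file_size and target_chunk must be positive")
    # Ceiling division gives the chunk count for the target size, capped at 32.
    count = min(MAX_COMPONENTS, -(-file_size // target_chunk))
    base, extra = divmod(file_size, count)
    ranges, start = [], 0
    for i in range(count):
        size = base + (1 if i < extra else 0)  # spread the remainder evenly
        ranges.append((start, start + size))
        start += size
    return ranges
```

Each range would be uploaded to its own temporary object, after which the temporary objects are composed into the final object and deleted.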

Object Versioning

- Object Versioning retains a noncurrent object version when the live object version gets replaced, overwritten, or deleted

- Object Versioning is disabled by default.

- Object Versioning prevents accidental overwrites and deletion

- Object Versioning causes deleted or overwritten objects to be archived instead of being deleted

- Object Versioning increases storage costs as it maintains the current and noncurrent versions of the object, which can be partially mitigated by lifecycle management

- Noncurrent versions retain the name of the object but are uniquely identified by their generation number.

- Noncurrent versions only appear in requests that explicitly call for object versions to be included.

- Object versions can be permanently deleted by including the generation number in the delete request or by configuring Object Lifecycle Management to delete older object versions.

- If Object Versioning is later disabled, no new noncurrent versions are created, but existing noncurrent versions are not deleted.
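
The behaviour described above can be modelled with a toy in-memory bucket. This is not the Cloud Storage API: `VersionedBucket` is a hypothetical class, and a simple counter stands in for real generation numbers.

```python
import itertools


class VersionedBucket:
    """Toy in-memory model of Object Versioning (not the real API)."""

    def __init__(self, versioning=True):
        self.versioning = versioning
        self.live = {}         # name -> (generation, data)
        self.noncurrent = []   # list of (name, generation, data)
        self._gen = itertools.count(1)  # stand-in for real generation numbers

    def _retire(self, name):
        """Remove the live version; keep it as noncurrent only if versioning is on."""
        if name in self.live:
            gen, data = self.live.pop(name)
            if self.versioning:
                self.noncurrent.append((name, gen, data))

    def upload(self, name, data):
        self._retire(name)  # an overwrite replaces the live version
        gen = next(self._gen)
        self.live[name] = (gen, data)
        return gen

    def delete(self, name, generation=None):
        if generation is None:
            self._retire(name)  # plain delete: live version becomes noncurrent
            return
        # Delete with a generation number: that version is permanently removed.
        self.noncurrent = [v for v in self.noncurrent if (v[0], v[1]) != (name, generation)]
        if name in self.live and self.live[name][0] == generation:
            del self.live[name]

    def list_objects(self, versions=False):
        """Noncurrent versions appear only when explicitly requested."""
        found = [(n, g) for n, (g, _) in self.live.items()]
        if versions:
            found += [(n, g) for n, g, _ in self.noncurrent]
        return sorted(found)
```

Setting `versioning = False` on the model stops new noncurrent versions from being kept, while versions retained earlier stay in place until deleted explicitly.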

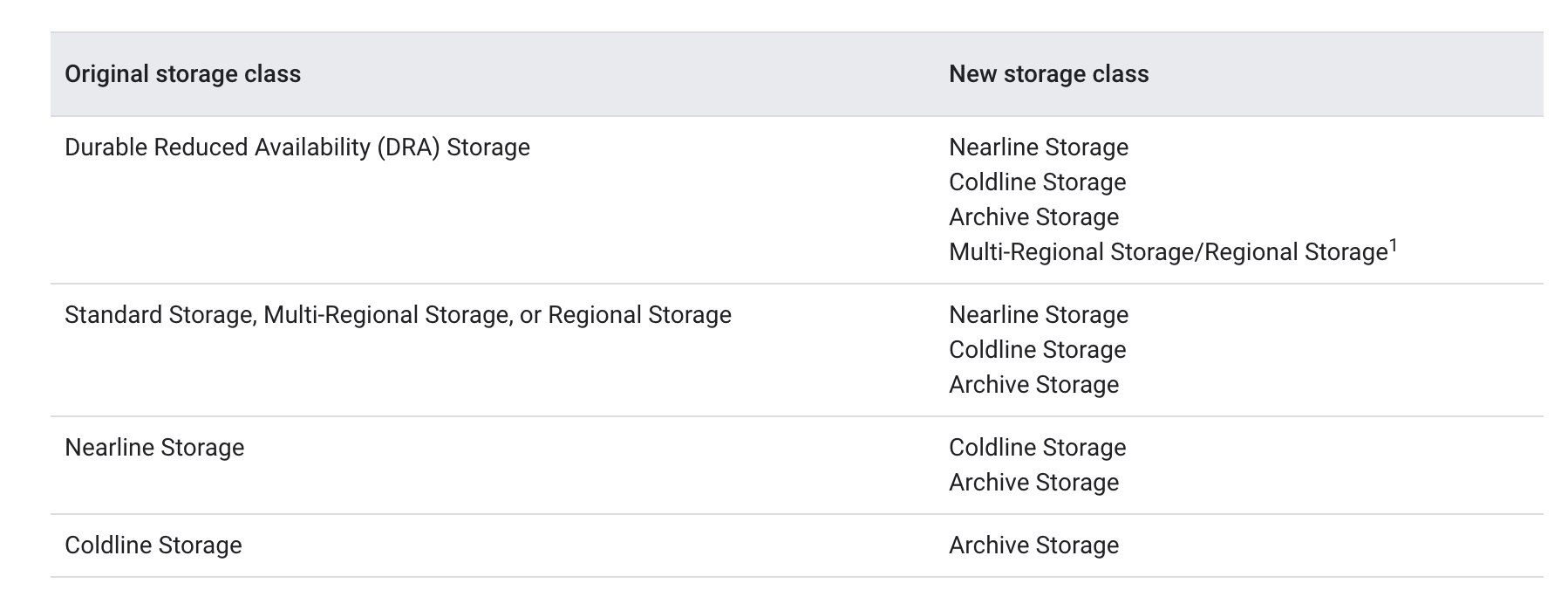

Object Lifecycle Management

- Object Lifecycle Management sets a Time To Live (TTL) on objects and transitions or expires them based on specified rules, e.g. SetStorageClass to downgrade the storage class, or Delete to expire noncurrent or archived objects.

- Lifecycle management configuration can be applied to a bucket, which contains a set of rules applied to current and future objects in the bucket

- Lifecycle management rules precedence

- Delete action takes precedence over any SetStorageClass action.

- Among multiple SetStorageClass actions, the one that switches the object to the storage class with the lowest at-rest storage pricing takes precedence.

- Cloud Storage doesn’t validate the correctness of the storage class transition

- Lifecycle actions can be tracked using Cloud Storage usage logs or using Pub/Sub Notifications for Cloud Storage

- Lifecycle management is defined using rules, conditions, and actions. An action is applied to an object if

- at least one rule is met when multiple rules exist (OR operation), and

- all the conditions within that rule are met (AND operation).
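
The rule evaluation described above can be sketched against a configuration in the JSON shape that Cloud Storage lifecycle rules use. The evaluator is a simplified model: it handles only the `age` and `matchesStorageClass` conditions, the object is a hypothetical dict with `age_days` and `storage_class` keys, and the lowest-price tie-break between several SetStorageClass actions is not modelled.

```python
# Example configuration: move to Nearline after 30 days, delete after 365 days.
LIFECYCLE = {
    "rule": [
        {
            "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
            "condition": {"age": 30, "matchesStorageClass": ["STANDARD"]},
        },
        {"action": {"type": "Delete"}, "condition": {"age": 365}},
    ]
}


def condition_met(condition, obj):
    """All conditions in a rule must hold (AND). Only two condition types are modelled."""
    if "age" in condition and obj["age_days"] < condition["age"]:
        return False
    if ("matchesStorageClass" in condition
            and obj["storage_class"] not in condition["matchesStorageClass"]):
        return False
    return True


def pick_action(config, obj):
    """Return the action to apply, or None. Any matching rule qualifies (OR)."""
    matched = [r["action"] for r in config["rule"] if condition_met(r["condition"], obj)]
    # Delete takes precedence over any SetStorageClass action.
    for action in matched:
        if action["type"] == "Delete":
            return action
    return matched[0] if matched else None
```

For a 45-day-old Standard object, `pick_action` returns the SetStorageClass action; once the object is 400 days old, the Delete rule matches and wins.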

Object Lifecycle Behavior

- Cloud Storage performs the action asynchronously, so there can be a lag between when the conditions are satisfied and the action is taken

- Updates to lifecycle configuration may take up to 24 hours to take effect.

- Delete action will not take effect on an object while the object either has an object hold placed on it or an unfulfilled retention policy.

- SetStorageClass action is not affected by the existence of object holds or retention policies.

- SetStorageClass does not rewrite an object, and hence you are not charged for retrieval and deletion operations.

GCS Requester Pays

- By default, the project owner of the resource is billed for access, which includes operation charges, network charges, and data retrieval charges.

- However, if the requester provides a billing project with their request, the requester’s project is billed instead.

- Requester Pays requires the requester to include a billing project in their requests, thus billing the requester’s project

- Enabling Requester Pays is useful, e.g. if you have a lot of data to share, but you don’t want to be charged for other users’ access to that data.

- Requester Pays does not cover the storage charges and early deletion charges
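
With the JSON API, the billing project is passed as the `userProject` query parameter. The sketch below only builds the download URL and sends nothing; `object_download_url` is a hypothetical helper, and the bucket, object, and project names in the usage note are placeholders.

```python
from urllib.parse import quote, urlencode


def object_download_url(bucket, object_name, billing_project=None):
    """Build a JSON API media-download URL, optionally naming a billing project."""
    params = {"alt": "media"}
    if billing_project:
        # Requests to a Requester Pays bucket name the project to bill here.
        params["userProject"] = billing_project
    return (
        "https://storage.googleapis.com/storage/v1/b/"
        f"{quote(bucket, safe='')}/o/{quote(object_name, safe='')}?{urlencode(params)}"
    )
```

For example, `object_download_url("my-bucket", "logs/a.txt", "my-billing-project")` percent-encodes the slash in the object name and appends `userProject=my-billing-project`.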

CORS

- Cloud Storage allows setting CORS configuration at the bucket level only
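
A bucket-level CORS configuration is a JSON list of entries with `origin`, `method`, `responseHeader`, and `maxAgeSeconds` fields. The sketch below shows one such entry and a deliberately simplified matcher; `is_allowed` is a hypothetical helper that approximates, but does not reproduce, the matching the service performs, and the origin is a placeholder.

```python
import json

# Example configuration with a single entry; the origin is a placeholder.
cors_config = [
    {
        "origin": ["https://example.com"],
        "method": ["GET", "HEAD"],
        "responseHeader": ["Content-Type"],
        "maxAgeSeconds": 3600,
    }
]


def is_allowed(config, origin, method):
    """Simplified check: does any entry list this origin and method (or '*')?"""
    return any(
        (origin in entry["origin"] or "*" in entry["origin"])
        and (method in entry["method"] or "*" in entry["method"])
        for entry in config
    )


# Serialise to the JSON form that would be saved to a configuration file.
print(json.dumps(cors_config, indent=2))
```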

Cloud Storage Tracking Updates

- Pub/Sub notifications

- sends information about changes to objects in the buckets to Pub/Sub, where the information is added to a specified Pub/Sub topic in the form of messages.

- Each notification contains information describing both the event that triggered it and the object that changed.

- Audit Logs

- Google Cloud services write audit logs to help you answer the questions, “Who did what, where, and when?”

- Cloud projects contain only the audit logs for resources that are directly within the project.

- Cloud Audit Logs generates the following audit logs for operations in Cloud Storage:

- Admin Activity logs: Entries for operations that modify the configuration or metadata of a project, bucket, or object.

- Data Access logs: Entries for operations that modify objects or read a project, bucket, or object.
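
For the Pub/Sub notifications described above, each message carries attributes such as `eventType`, `bucketId`, `objectId`, and `objectGeneration`. The sketch below summarises those attributes from a plain dict; `describe_notification` is a hypothetical helper, and receiving the message from a Pub/Sub subscription is left out.

```python
def describe_notification(attributes: dict) -> str:
    """Summarise which event happened to which object version."""
    event = attributes.get("eventType", "UNKNOWN")
    bucket = attributes.get("bucketId", "?")
    name = attributes.get("objectId", "?")
    generation = attributes.get("objectGeneration", "?")
    return f"{event}: gs://{bucket}/{name}#{generation}"


# Sample attributes with placeholder values.
sample = {
    "eventType": "OBJECT_FINALIZE",
    "bucketId": "my-bucket",
    "objectId": "logs/a.txt",
    "objectGeneration": "1700000000000000",
}
print(describe_notification(sample))
```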

Data Consistency

- Cloud Storage operations are mostly strongly consistent, with a few exceptions that are eventually consistent.

- Cloud Storage provides strong global consistency for the following operations, including both data and metadata:

- Read-after-write

- Read-after-metadata-update

- Read-after-delete

- Bucket listing

- Object listing

- Cloud Storage provides eventual consistency for the following operations

- Granting access to or revoking access from resources.

gsutil

- gsutil is the standard tool for small- to medium-sized transfers (less than 1 TB) over a typical enterprise-scale network, from a private data center to Google Cloud.

- gsutil provides all the basic features needed to manage the Cloud Storage instances, including copying the data to and from the local file system and Cloud Storage.

- gsutil can also move, rename, and remove objects and perform real-time incremental syncs, like rsync, to a Cloud Storage bucket.

- gsutil is especially useful in the following scenarios:

- as-needed transfers or during command-line sessions by your users.

- transferring only a few files or very large files, or both.

- consuming the output of a program (streaming output to Cloud Storage)

- watching a directory with a moderate number of files and syncing any updates with very low latencies.

- gsutil provides the following features

- Parallel multi-threaded transfers with gsutil -m, increasing transfer speeds.

- Composite transfers for a single large file to break it into smaller chunks to increase transfer speed. Chunks are transferred and validated in parallel, sending all data to Google. Once the chunks arrive at Google, they are combined (referred to as compositing) to form a single object.

- gsutil perfdiag can help gather stats to provide diagnostic output to the Cloud Storage team.
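
The gsutil features above map onto a handful of command lines. The sketch below builds them as argument lists that could be handed to `subprocess.run`; the helper names, paths, and bucket name are placeholders, and the 150M composite threshold is an arbitrary example value.

```python
import shlex


def parallel_copy(src_dir, bucket):
    """Multi-threaded recursive copy of a directory (gsutil -m)."""
    return ["gsutil", "-m", "cp", "-r", src_dir, f"gs://{bucket}"]


def composite_upload(path, bucket, threshold="150M"):
    """Upload one large file as a parallel composite upload above the threshold."""
    option = f"GSUtil:parallel_composite_upload_threshold={threshold}"
    return ["gsutil", "-o", option, "cp", path, f"gs://{bucket}"]


def sync(src_dir, bucket):
    """Incremental, rsync-like synchronisation of a directory to a bucket."""
    return ["gsutil", "-m", "rsync", "-r", src_dir, f"gs://{bucket}"]


# Print the commands instead of running them.
for cmd in (
    parallel_copy("data", "my-bucket"),
    composite_upload("big.bin", "my-bucket"),
    sync("data", "my-bucket"),
):
    print(shlex.join(cmd))
```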

Best Practices

- Use IAM over ACL whenever possible as IAM provides an audit trail

- Cloud Storage auto-scaling performs well if requests ramp up gradually rather than arriving as a sudden spike.

- If the request rate is less than 1000 write requests per second or 5000 read requests per second, then no ramp-up is needed.

- If the request rate is expected to go over these thresholds, start with a request rate below or near the thresholds and then double the request rate no faster than every 20 minutes.

- Avoid sequential naming bottlenecks: Cloud Storage distributes data across shards based on the object name/path, so a sequential naming pattern can overload a single shard and degrade performance.

- Use truncated exponential backoff as the standard error-handling strategy.

- For multiple smaller files, use gsutil with the -m option, which performs a batched, parallel, multi-threaded/multi-processing upload and can significantly increase upload performance.

- For large object downloads, use gsutil with HTTP Range GET requests to perform “sliced” downloads in parallel.

- To upload large files efficiently, use parallel composite upload with object composition to perform uploads in parallel for large, local files. It splits a large file into component pieces, uploads them in parallel, and then recomposes them once they’re in the cloud (and deletes the temporary components it created locally).
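
Two of the practices above, truncated exponential backoff and gradual request ramp-up, come down to simple arithmetic. The sketch below assumes a 32-second backoff cap and six retries as illustrative defaults; both helper names are hypothetical.

```python
import random


def backoff_delays(max_retries=6, max_backoff=32.0, rng=random.random):
    """Yield wait times in seconds: min(2**n + jitter, max_backoff) for n = 0, 1, 2, ..."""
    for n in range(max_retries):
        # The jitter (0 to 1 second) spreads out retries from many clients.
        yield min(2 ** n + rng(), max_backoff)


def ramp_up_schedule(start_rate, target_rate, step_minutes=20):
    """Double the request rate no faster than every 20 minutes until the target is reached."""
    if start_rate <= 0:
        raise ValueError("start_rate must be positive")
    schedule, minute, rate = [], 0, start_rate
    while rate < target_rate:
        schedule.append((minute, rate))
        rate *= 2
        minute += step_minutes
    schedule.append((minute, target_rate))  # final step lands on the target rate
    return schedule
```

Starting at 1000 write requests per second with a target of 8000, the schedule reaches the target after 60 minutes: 1000, 2000, 4000, then 8000.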

GCP Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- GCP services are updated every day, and both the answers and questions might be outdated soon, so research accordingly.

- GCP exam questions are not updated to keep pace with GCP updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion and correction.

- You have a collection of media files over 50GB each that you need to migrate to Google Cloud Storage. The files are in your on-premises data center. What migration method can you use to help speed up the transfer process?

- Use multi-threaded uploads using the -m option.

- Use parallel uploads to break the file into smaller chunks then transfer it simultaneously.

- Use the Cloud Transfer Service to transfer.

- Start a recursive upload.

- Your company has decided to store data files in Cloud Storage. The data would be hosted in a regional bucket to start with. You need to configure Cloud Storage lifecycle rule to move the data for archival after 30 days and delete the data after a year. Which two actions should you take?

- Create a Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Standard”, and Action: “Set to Coldline”, and create a second GCS life-cycle rule with Age: “365”, Storage Class: “Coldline”, and Action: “Delete”.

- Create a Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Standard”, and Action: “Set to Coldline”, and create a second GCS life-cycle rule with Age: “275”, Storage Class: “Coldline”, and Action: “Delete”.

- Create a Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Standard”, and Action: “Set to Nearline”, and create a second GCS life-cycle rule with Age: “365”, Storage Class: “Nearline”, and Action: “Delete”.

- Create a Cloud Storage lifecycle rule with Age: “30”, Storage Class: “Standard”, and Action: “Set to Nearline”, and create a second GCS life-cycle rule with Age: “275”, Storage Class: “Nearline”, and Action: “Delete”.

References

Google Cloud Platform – Cloud Storage