Google Cloud Hybrid Connectivity

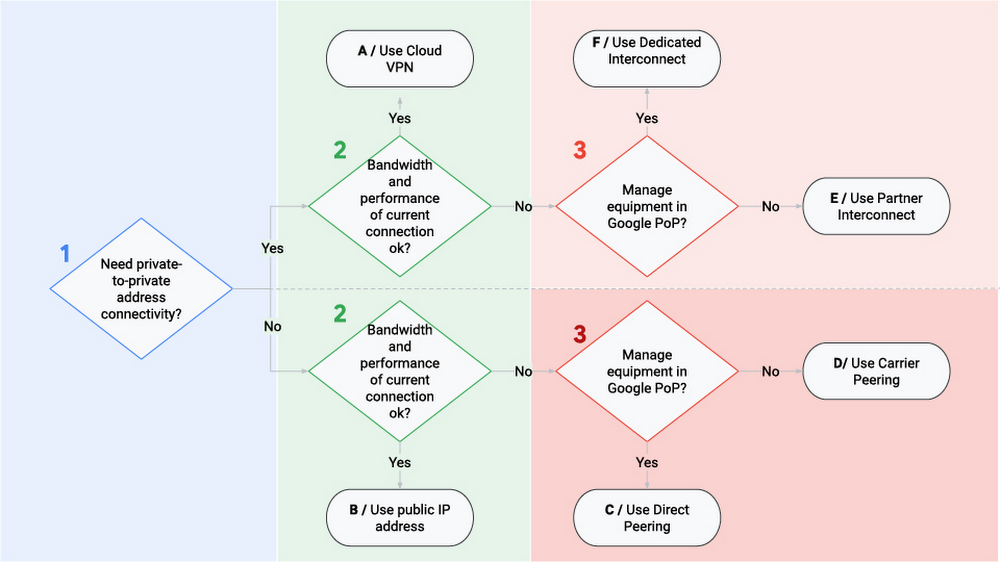

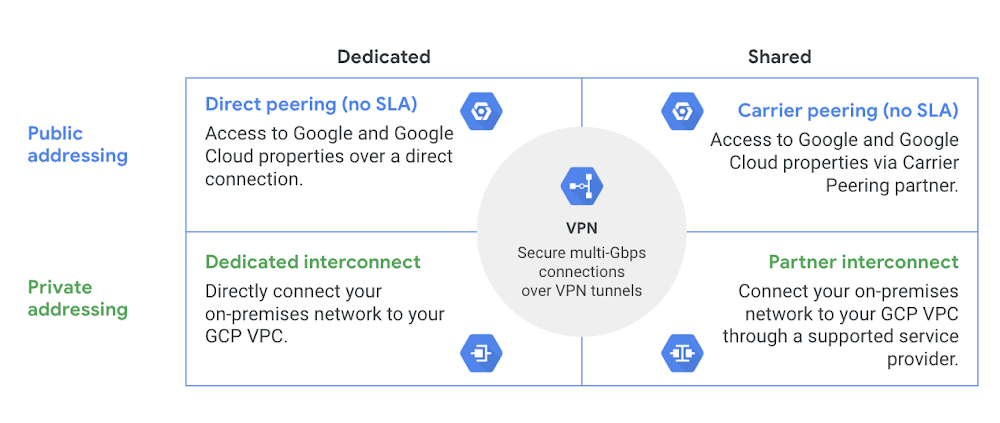

Google Cloud provides several network connectivity options to meet different needs, using public networks, peering, or interconnect technologies.

Public Network Connectivity

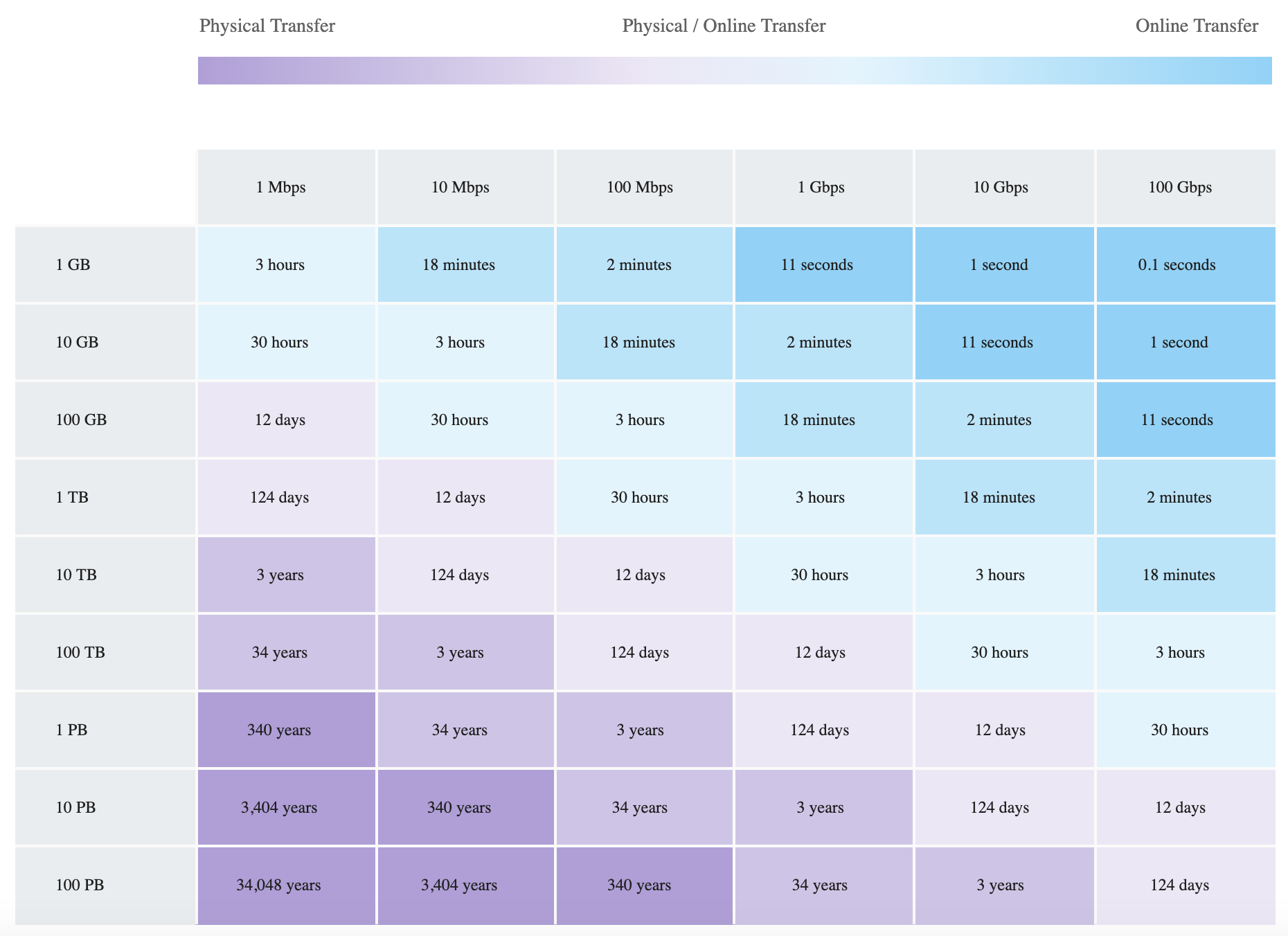

A standard internet connection can be used to connect Google Cloud with an on-premises environment if it meets the bandwidth needs.

Cloud VPN

- provides secure, private connectivity using IPsec

- connects an on-premises network to a VPC, or connects two VPCs in Google Cloud

- traffic flows via the VPN tunnel but is still routed over the public internet

- traffic is encrypted by one gateway and decrypted by the other

- allows on-premises computers using private RFC1918 addresses to reach resources in the VPC on their private RFC1918 addresses

- can be used with Private Google Access for on-premises hosts

- provides a 99.99% availability SLA when using HA (high-availability) VPN

- supports only site-to-site VPN (no client-to-site/remote-access VPN)

- supports up to 3 Gbps per tunnel with a maximum of 8 tunnels

- supports static as well as dynamic routing using Cloud Router

- supports IKEv1 or IKEv2 using a shared secret
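Using the per-tunnel and tunnel-count figures above, a rough capacity check can tell whether Cloud VPN can carry a given load at all. The sketch below is a simplified planning aid, assuming traffic is spread evenly across tunnels (for example via ECMP with dynamic routing) and that each tunnel actually reaches its nominal 3 Gbps; real throughput also depends on packet size and the on-premises gateway. The function name `tunnels_needed` is hypothetical.

```python
import math

PER_TUNNEL_GBPS = 3  # per-tunnel figure quoted in this section
MAX_TUNNELS = 8      # tunnel limit quoted in this section


def tunnels_needed(target_gbps: float) -> int:
    """Estimate how many VPN tunnels a target bandwidth needs.

    Assumes even load distribution and nominal per-tunnel throughput.
    Raises ValueError if the target exceeds what Cloud VPN can carry.
    """
    if target_gbps <= 0:
        raise ValueError("target bandwidth must be positive")
    count = math.ceil(target_gbps / PER_TUNNEL_GBPS)
    if count > MAX_TUNNELS:
        limit = MAX_TUNNELS * PER_TUNNEL_GBPS
        raise ValueError(
            f"{target_gbps} Gbps exceeds the {limit} Gbps VPN ceiling; consider Interconnect"
        )
    return count
```

For example, `tunnels_needed(7)` returns 3, while a 25 Gbps requirement raises an error, which is the point where the Interconnect options described below become the better fit.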

Peering

- Peering provides better connectivity to Google Cloud than a public internet connection. However, it still does not provide RFC1918-to-RFC1918 private address connectivity.

- Peering gets your network as close as possible to Google Cloud public IP addresses.

Direct Peering

- requires you to lease co-lo space and install and support routing equipment in a Google Point of Presence (PoP).

- supports BGP over a link to exchange network routes.

- All traffic destined to Google rides over this new link, while traffic to other sites on the internet rides your regular internet connection.

Carrier Peering

- preferred if installing equipment isn’t an option or you would prefer to work with a service provider partner as an intermediary to peer with Google

- connects to Google via a new link to a partner carrier that is already connected to the Google network.

- supports BGP or static routing over that link.

- All traffic destined to Google rides over this new link.

- Traffic to other sites on the internet rides your regular internet connection.
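With both Direct and Carrier Peering, the split between "traffic destined to Google" and "other internet traffic" falls out of ordinary longest-prefix-match routing: prefixes learned over the peering link are more specific than the default route to the regular ISP. The sketch below models that on an on-premises router. The prefixes are RFC 5737 documentation ranges standing in for Google-advertised routes (they are NOT real Google ranges), and the next-hop labels are hypothetical.

```python
import ipaddress

# Hypothetical on-premises routing table.
# The two /24s stand in for public prefixes learned from Google over the peering link.
ROUTES = [
    (ipaddress.ip_network("203.0.113.0/24"), "peering-link"),
    (ipaddress.ip_network("198.51.100.0/24"), "peering-link"),
    (ipaddress.ip_network("0.0.0.0/0"), "internet-uplink"),  # default route via regular ISP
]


def next_hop(destination: str) -> str:
    """Pick the next hop using longest-prefix match over ROUTES."""
    ip = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if ip in net]
    # The default route guarantees at least one match for any IPv4 address.
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop
```

A destination inside a peered prefix, such as `203.0.113.10`, leaves via `"peering-link"`, while `192.0.2.5` falls through to the default route and uses `"internet-uplink"`.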

Interconnect

- Interconnects are similar to peering in that the connections get your network as close as possible to the Google network.

- Interconnects differ from peering in that they provide connectivity using private address space into the Google VPC.

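- For RFC1918-to-RFC1918 private address connectivity that does not traverse the public internet, either Dedicated or Partner Interconnect is required (Cloud VPN also provides RFC1918 connectivity, but over the public internet).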
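"RFC1918-to-RFC1918" means both ends of a flow use addresses from the three private IPv4 blocks defined in RFC 1918: 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16. The sketch below checks that with Python's standard `ipaddress` module. It tests the three blocks explicitly rather than using `is_private`, because `is_private` also covers other special ranges such as loopback and link-local. The function names and example addresses are hypothetical.

```python
import ipaddress

# The three private IPv4 blocks defined by RFC 1918.
RFC1918_BLOCKS = tuple(
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
)


def is_rfc1918(address: str) -> bool:
    """True if the address falls inside one of the RFC 1918 blocks."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in RFC1918_BLOCKS)


def is_private_to_private(source: str, destination: str) -> bool:
    """True if both ends of a flow use RFC 1918 addresses."""
    return is_rfc1918(source) and is_rfc1918(destination)
```

For instance, an on-premises host at `192.168.1.10` reaching a VM at `10.128.0.5` is a private-to-private flow that needs Interconnect (or Cloud VPN), whereas reaching a public address such as `203.0.113.7` is the kind of traffic peering handles.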

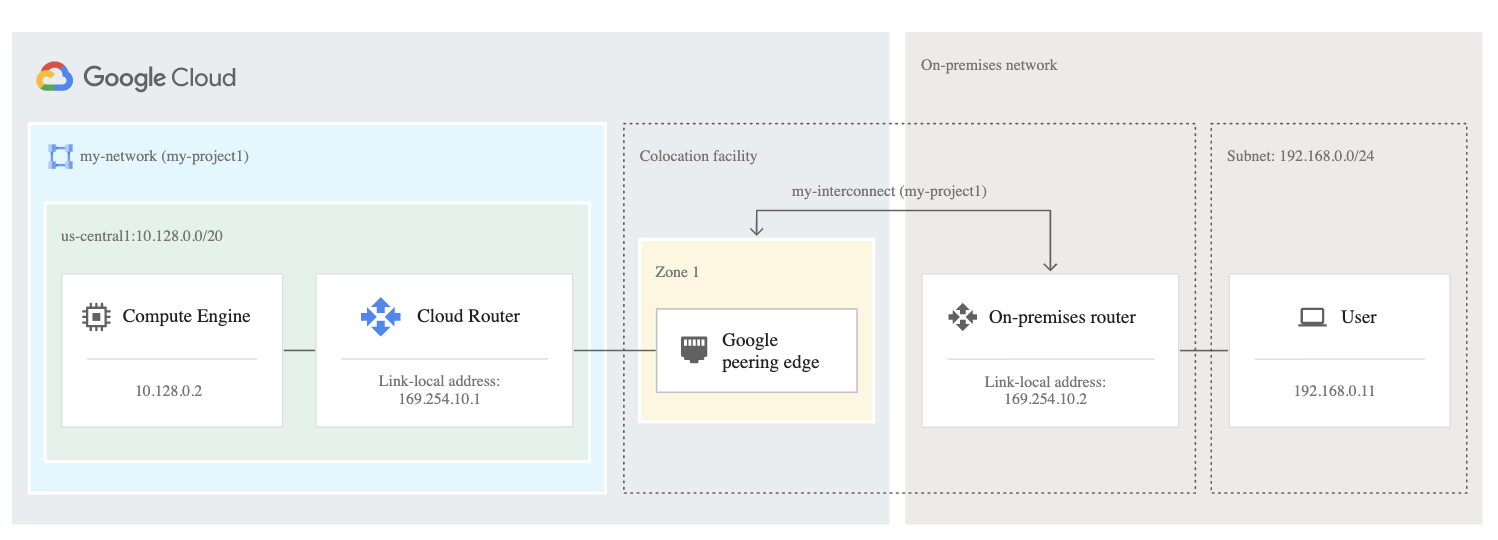

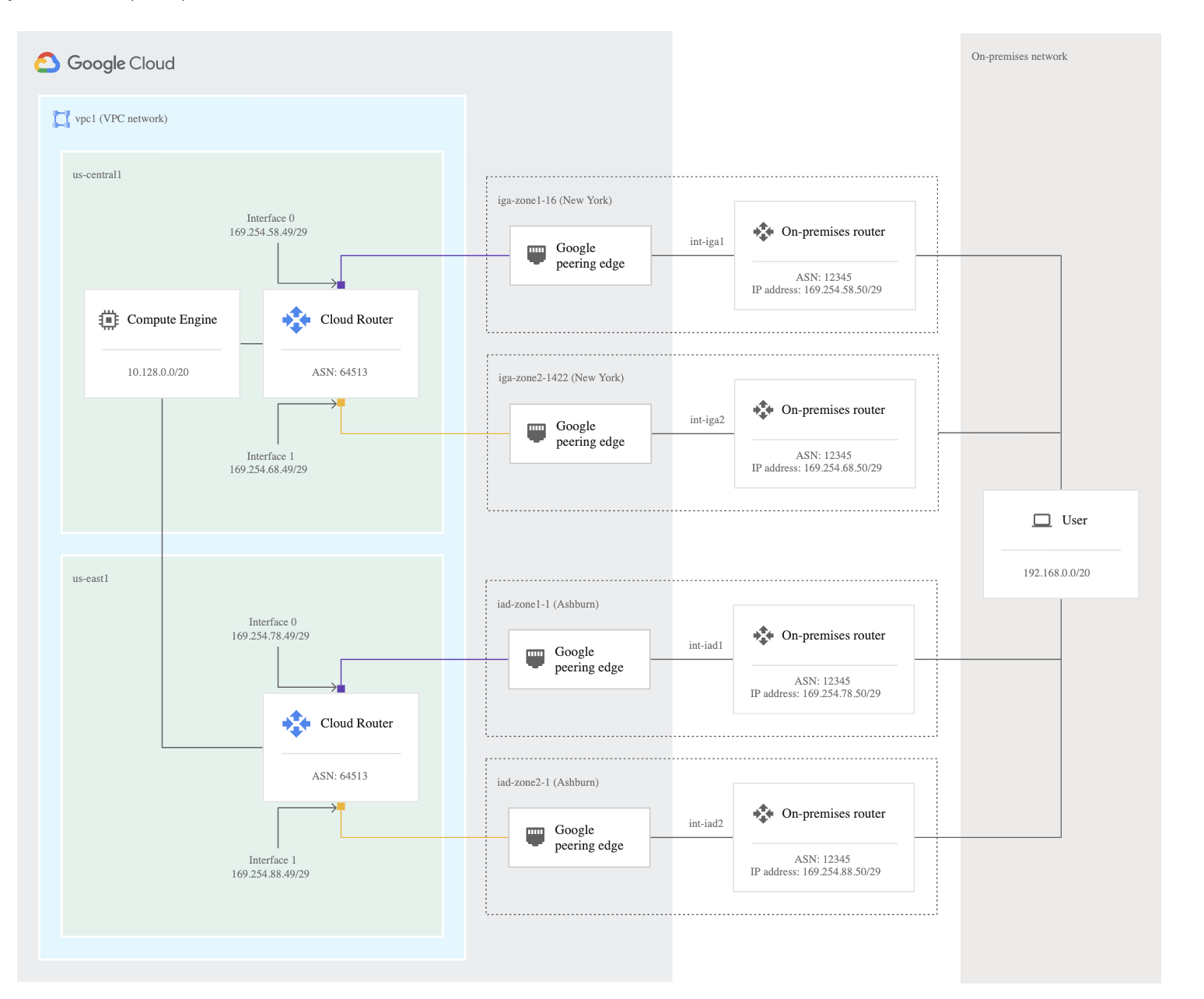

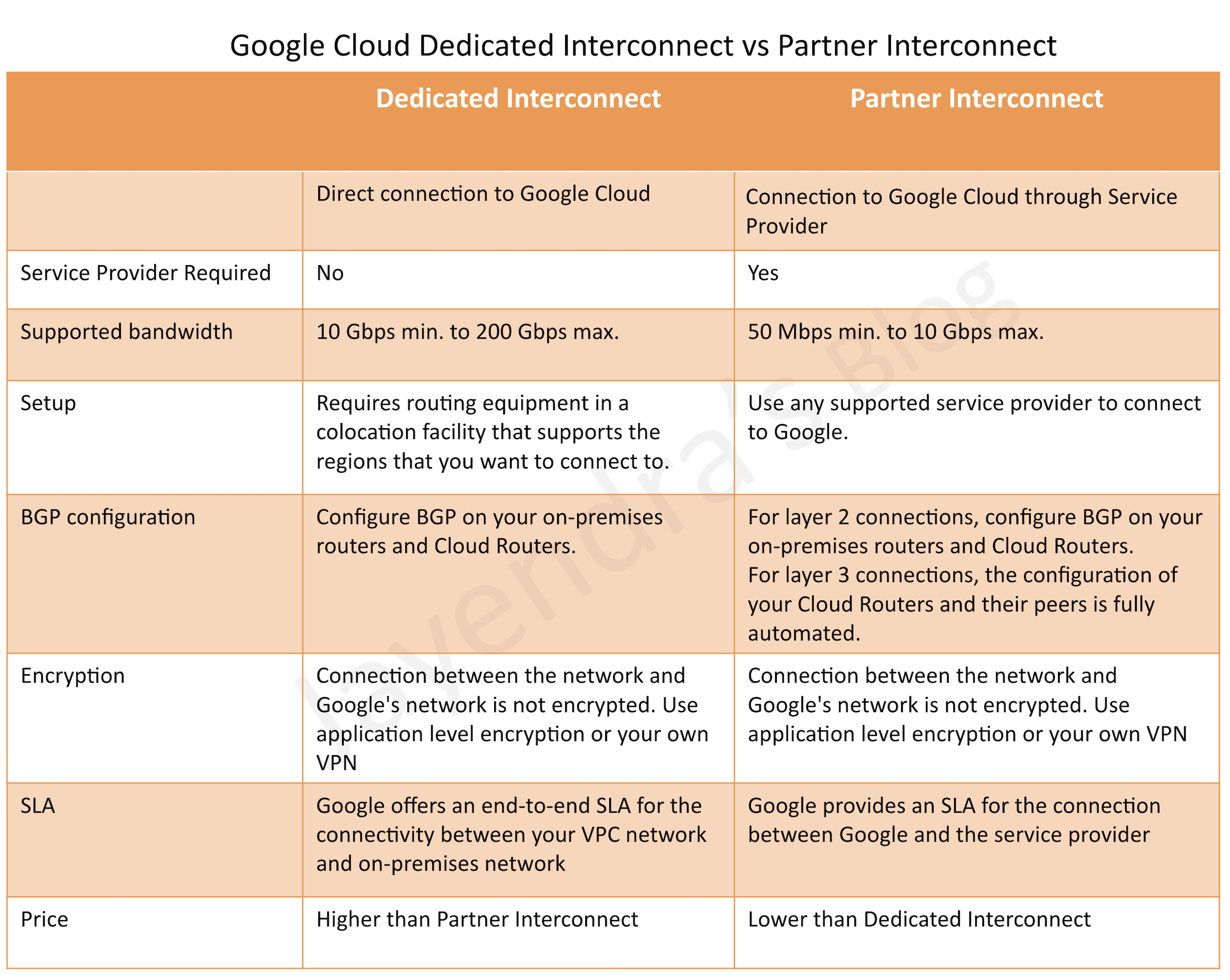

Dedicated Interconnect

- provides private, high-performance connectivity to Google Cloud

- requires you to lease co-lo space and install and support routing equipment in a Google Point of Presence (PoP).

- requires installing a link directly to Google by choosing a 10 Gbps or 100 Gbps circuit and provisioning a VLAN attachment over the physical link

- gives the RFC1918-to-RFC1918 private address connectivity.

- All traffic destined to the Google Cloud VPC rides over this new link.

- Traffic to other sites on the internet rides the regular internet connection.

- A single Interconnect connection does not offer high availability. Google recommends redundancy using 2 connections (99.9% SLA) or 4 connections (99.99% SLA), so that if one connection fails, another can continue to serve traffic.
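The 2-connection and 4-connection recommendations depend on where the connections land, not only on how many there are. Google's documented topologies place the 99.9% pair in one metro across two edge availability domains, and the 99.99% design uses two such pairs in two different metros. The sketch below checks only that physical placement. It is a simplified assumption-based helper (hypothetical `InterconnectConnection` type and `redundancy_tier` function) and ignores Cloud Router, region, and VLAN attachment requirements that also apply to the SLA.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class InterconnectConnection:
    name: str         # hypothetical connection name
    metro: str        # metropolitan area of the colocation facility
    edge_domain: int  # edge availability domain within the metro (1 or 2)


def redundancy_tier(connections: Iterable[InterconnectConnection]) -> Optional[str]:
    """Return the availability tier the physical layout is shaped for, or None.

    Simplified: checks connection placement only.
    """
    domains_by_metro = {}
    for conn in connections:
        domains_by_metro.setdefault(conn.metro, set()).add(conn.edge_domain)

    # A metro is redundant when it has connections in both edge domains.
    redundant_metros = [m for m, domains in domains_by_metro.items() if {1, 2} <= domains]
    if len(redundant_metros) >= 2:
        return "99.99%"
    if redundant_metros:
        return "99.9%"
    return None
```

A single connection, or two connections in the same edge domain, returns `None`, matching the point above that one connection alone offers no HA.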

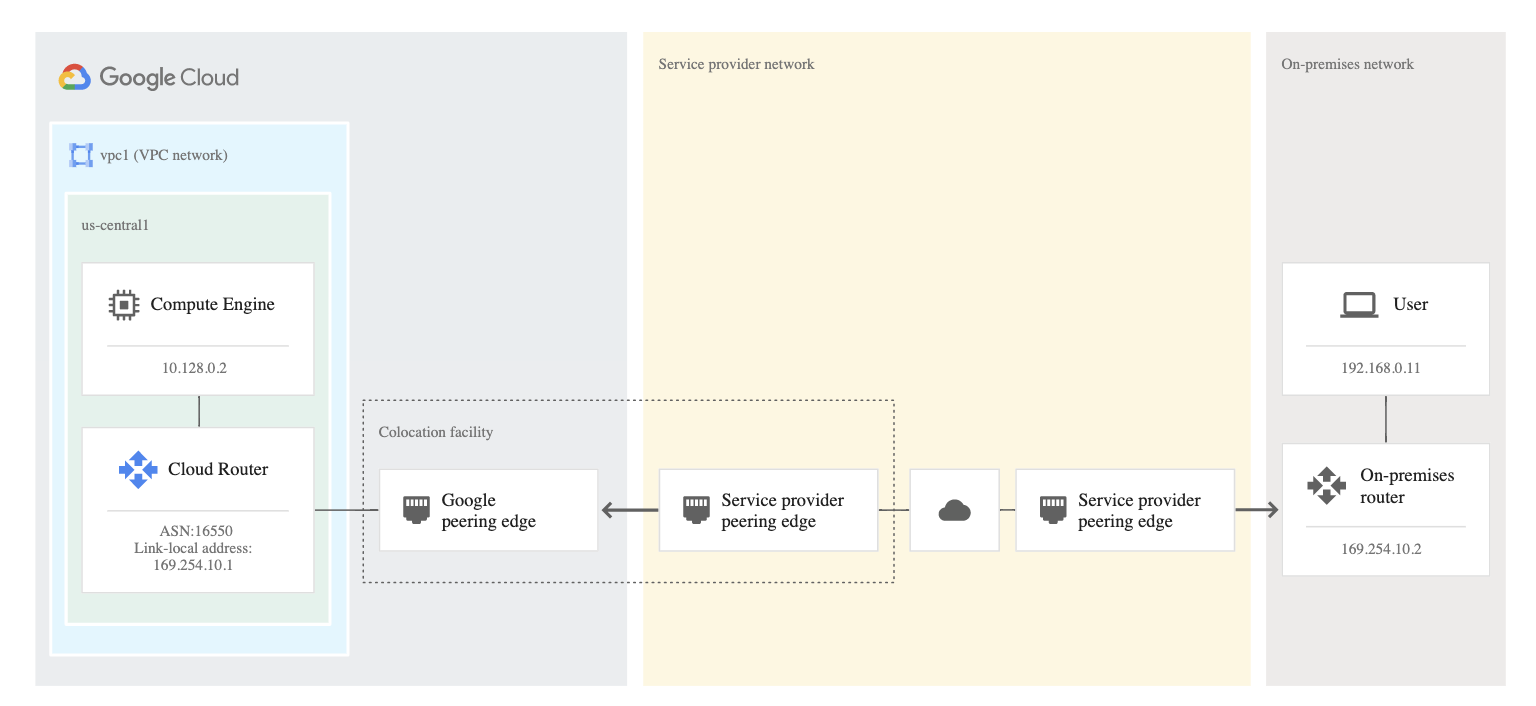

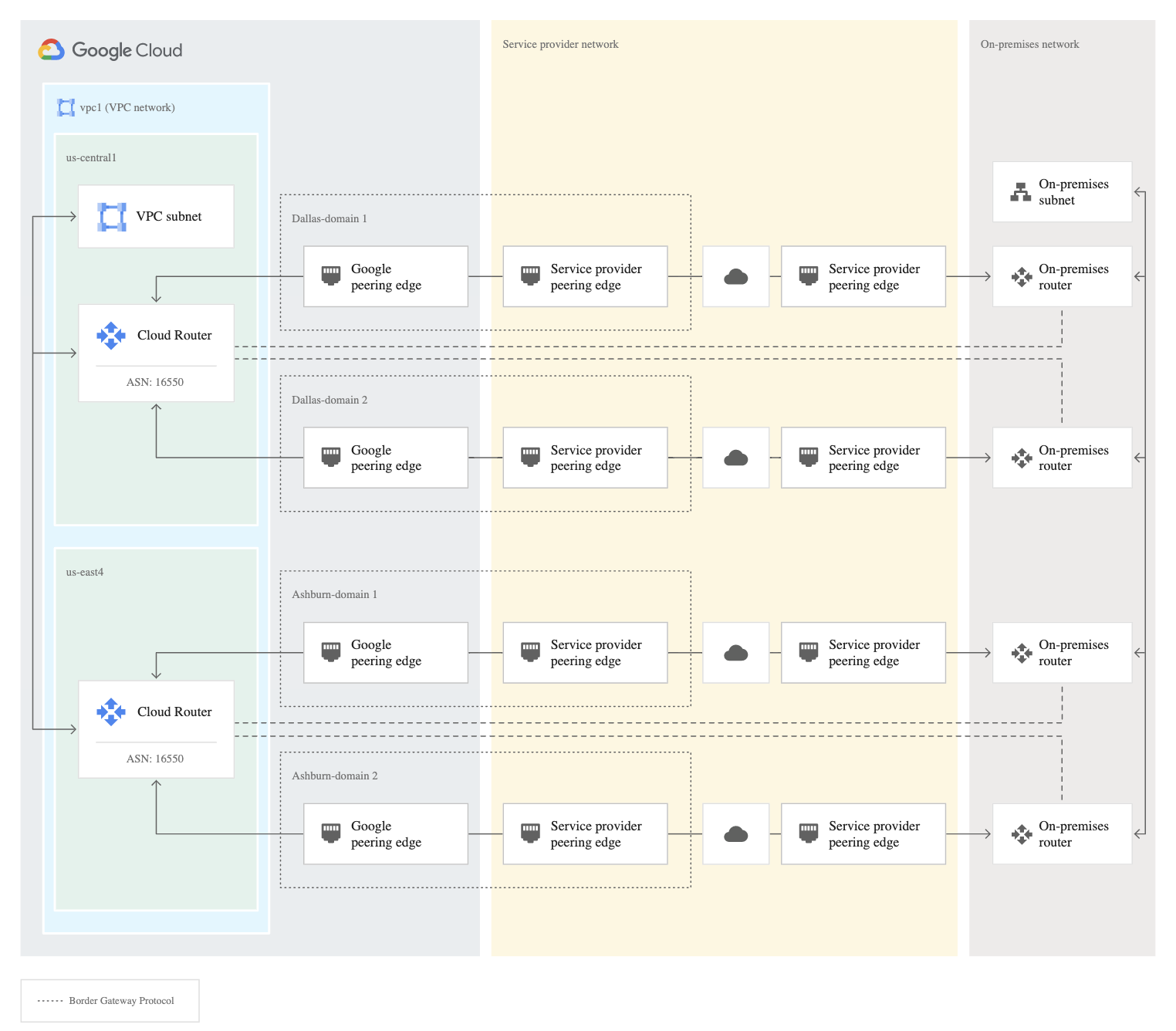

Partner Interconnect

- provides private, high-performance connectivity to Google Cloud

- preferred if bandwidth requirements are below 10 Gbps, installing equipment isn’t an option, or you would prefer to work with a service provider partner as an intermediary

- similar to carrier peering in that you connect to a partner service provider that is directly connected to Google.

- supports BGP or static routing over that link.

- requires provisioning a VLAN attachment over the physical link

- gives the RFC1918-to-RFC1918 private address connectivity.

- All traffic destined to your Google VPC rides over this new link.

- Traffic to other sites on the internet rides your regular internet connection.

Google Cloud Hybrid Connectivity Decision Tree
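The criteria scattered through this section can be condensed into a rough decision function. This is a simplified sketch, not Google's official decision tree: it uses only the factors named above (need for RFC1918 connectivity, whether the internet path meets bandwidth needs, the 3 Gbps × 8 tunnel VPN ceiling, the ability to colocate at a PoP, and the 10 Gbps threshold for Partner Interconnect) and ignores cost, latency, SLA, and encryption requirements. The `Requirements` type and `choose_connectivity` function are hypothetical.

```python
from dataclasses import dataclass

VPN_MAX_GBPS = 3 * 8  # per-tunnel bandwidth times tunnel limit, as quoted above


@dataclass(frozen=True)
class Requirements:
    needs_rfc1918: bool             # reach VPC resources on private addresses
    bandwidth_gbps: float           # required bandwidth
    internet_meets_bandwidth: bool  # public internet path is fast enough
    can_colocate_in_pop: bool       # can lease space and run routers at a Google PoP


def choose_connectivity(req: Requirements) -> str:
    """Suggest a hybrid connectivity option from the simplified criteria."""
    if not req.needs_rfc1918:
        # Only Google public IPs are needed: internet, or peering for a better path.
        if req.internet_meets_bandwidth:
            return "Public internet"
        return "Direct Peering" if req.can_colocate_in_pop else "Carrier Peering"
    if req.internet_meets_bandwidth and req.bandwidth_gbps <= VPN_MAX_GBPS:
        return "Cloud VPN"
    if req.can_colocate_in_pop and req.bandwidth_gbps >= 10:
        return "Dedicated Interconnect"
    return "Partner Interconnect"
```

For example, a 5 Gbps private-connectivity requirement with an inadequate internet path resolves to Partner Interconnect, consistent with its preference for bandwidth below 10 Gbps.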