AWS EC2 Instance Purchasing Option

- Amazon provides different ways to pay for the EC2 instances

- On-Demand Instances

- Reserved Instances

- Spot Instances

- Dedicated Hosts

- Dedicated Instances

- Capacity reservations

- EC2 instances can be launched on shared or dedicated tenancy

On-Demand Instances

- Pay for the instances and the compute capacity used by the hour or the second, depending on which instances you run

- No long-term commitments or up-front payments

- Instances can be scaled accordingly as per the demand

- Although AWS makes effort to have the capacity to launch On-Demand instances, there might be instances during peak demand where the instance cannot be launched

- Well suited for

- Users that want the low cost and flexibility of EC2 without any up-front payment or long-term commitment

- Applications with short term, spiky, or unpredictable workloads that cannot be interrupted

- Applications being developed or tested on EC2 for the first time

Reserved Instances

- Reserved Instances provides lower hourly running costs by providing a billing discount (up to 75%) as well as capacity reservation that is applied to instances and there would never be a case of insufficient capacity

- Discounted usage price is fixed as long as you own the Reserved Instance, allowing compute costs prediction over the term of the reservation

- Reserved instances are best suited if consistent, heavy, use is expected and they can provide savings over owning the hardware or running only On-Demand instances.

- Well Suited for

- Applications with steady state or predictable usage

- Applications that require reserved capacity

- Users are able to make upfront payments to reduce their total computing costs even further

- Reserved instance is not a physical instance that is launched, but rather a billing discount applied to the use of On-Demand Instances

- On-Demand Instances must match certain attributes, such as instance type and Region, in order to benefit from the billing discount.

- Reserved Instances do not renew automatically, and the EC2 instances can be continued to be used but charged On-Demand rates

- Auto Scaling or other AWS services can be used to launch the On-Demand instances that use the Reserved Instance benefits

- With Reserved Instances

- You pay for the entire term, regardless of the usage

- Once purchased, the reservation cannot be canceled but can be sold in the Reserved Instance Marketplace

- Reserved Instance pricing tier discounts only apply to purchases made from AWS, and not to the third party Reserved instances

Reserved Instance Pricing Key Variables

Instance attributes

A Reserved Instance has four instance attributes that determine its price.

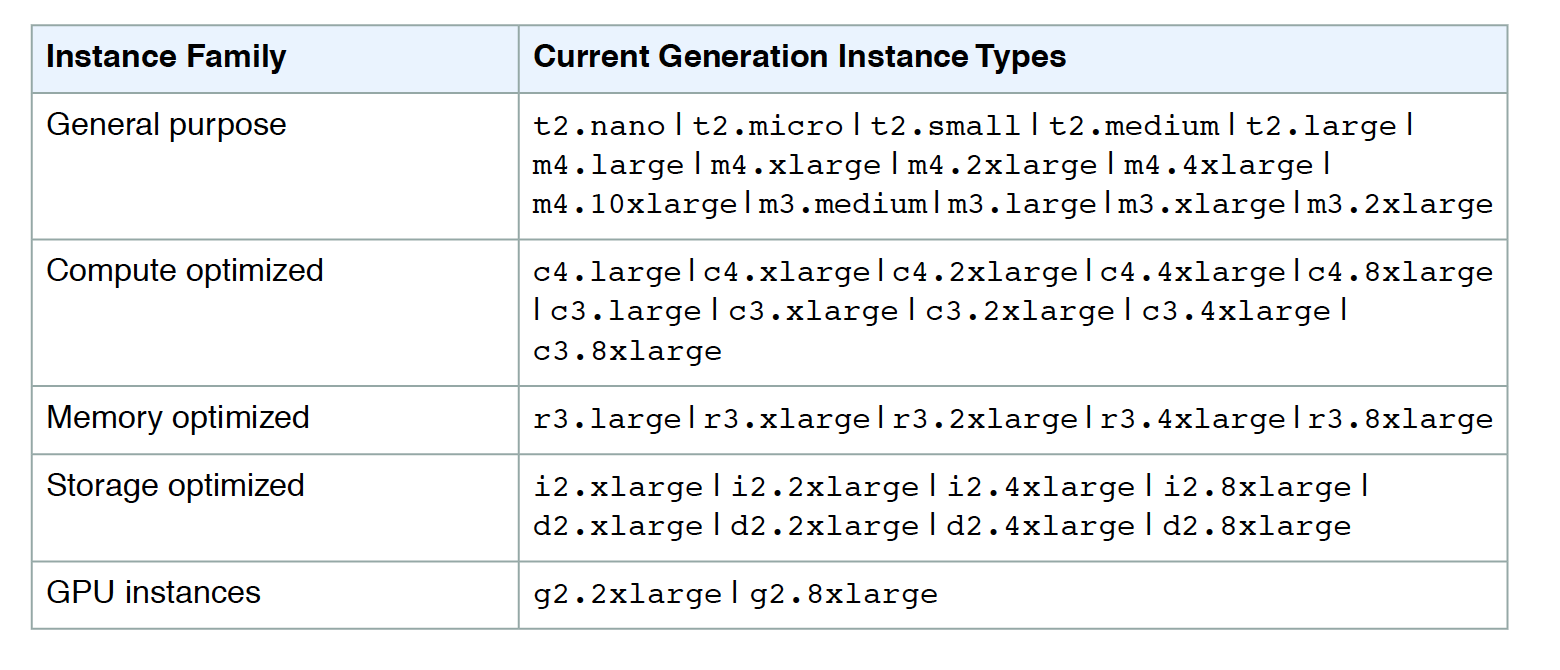

- Instance type: Instance family + Instance size e.g.

m4.largecomposed of the instance family (m4) and the instance size (large). - Region: Region in which the Reserved Instance is purchased.

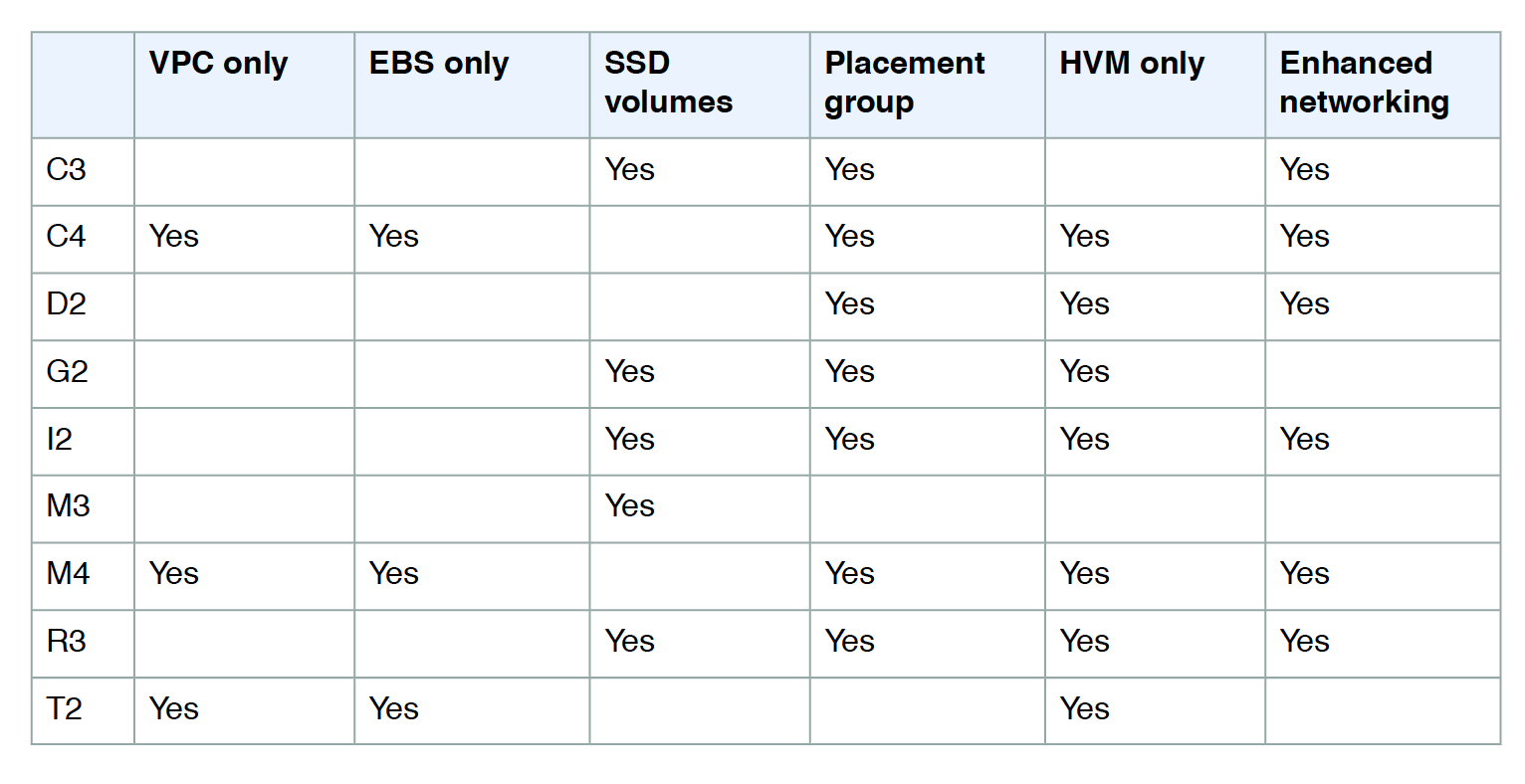

- Tenancy: Whether your instance runs on shared (default) or single-tenant (dedicated) hardware.

- Platform: Operating system; for example, Windows or Linux/Unix.

Term commitment

Reserved Instance can be purchased for a one-year or three-year commitment, with the three-year commitment offering a bigger discount.

- One-year: A year is defined as 31536000 seconds (365 days).

- Three-year: Three years is defined as 94608000 seconds (1095 days).

Payment options

- No Upfront

- No upfront payment is required and the account is charged at a discounted hourly rate for every hour, regardless of the usage

- Only available as a 1-year reservation

- Partial Upfront

- A portion of the cost is paid upfront and the remaining hours in the term are charged at an hourly discounted rate, regardless of the usage

- Full Upfront

- Full payment is made at the start of the term, with no costs for the remainder of the term, regardless of the usage

Offering class

- Standard: Provide the most significant discount, but can only be modified.

- Convertible: These provide a lower discount than Standard Reserved Instances, but can be exchanged for another Convertible Reserved Instance with different instance attributes. Convertible Reserved Instances can also be modified.

How Reserved Instances work

Billing Benefits & Payment Options

- Reserved Instance purchase reservation is automatically applied to running instances that match the specified parameters

- Reserved Instance can also be utilized by launching On-Demand instances with the same configuration as to the purchased reserved capacity

Understanding Hourly Billing

- Reserved Instances are billed for every clock-hour during the term that you select, regardless of whether the instance is running or not.

- A Reserved Instance billing benefit can be applied to a running instance on a per-second basis. Per-second billing is available for instances using an open-source Linux distribution, such as Amazon Linux and Ubuntu.

- Per-hour billing is used for commercial Linux distributions, such as Red Hat Enterprise Linux and SUSE Linux Enterprise Server.

- A Reserved Instance billing benefit can apply to a maximum of 3600 seconds (one hour) of instance usage per clock-hour. You can run multiple instances concurrently, but can only receive the benefit of the Reserved Instance discount for a total of 3600 seconds per clock-hour; instance usage that exceeds 3600 seconds in a clock-hour is billed at the On-Demand rate.

Reservations and discounted rates only apply to one instance-hour per hour. If an instance restarts during the first hour of a reservation and runs for two hours before stopping, the first instance-hour is charged at the discounted rate but three instance-hours are charged at the On-Demand rate. If the instance restarts during one hour and again the next hour before running the remainder of the reservation, one instance-hour is charged at the On-Demand rate but the discounted rate is applied to previous and subsequent instance-hours.

Consolidated Billing

- Pricing benefits of Reserved Instances are shared when the purchasing account is part of a set of accounts billed under one consolidated billing payer account

- Consolidated billing account aggregates the list value of member accounts within a region.

- When the list value of all active Reserved Instances for the consolidated billing account reaches a discount pricing tier, any Reserved Instances purchased after this point by any member of the consolidated billing account are charged at the discounted rate (as long as the list value for that consolidated account stays above the discount pricing tier threshold)

Buying Reserved Instances

Buying Reserved Instances need a selection of the following

- Platform (for example, Linux)

- Instance type (for example, m1.small)

- Availability Zone in which to run the instance for Zonal reserved instance

- Term (time period) over which you want to reserve capacity

- Tenancy You can reserve capacity for your instance to run in single-tenant hardware (dedicated tenancy, as opposed to shared).

- Offering (No Upfront, Partial Upfront, All Upfront).

Modifying Reserved Instances

- Standard or Convertible Reserved Instances can be modified and continue to benefit from the capacity reservation as the computing needs change.

- Availability Zone, instance size (within the same instance family), and scope of the Reserved Instance can be modified

- All or a subset of the Reserved Instances can be modified

- Two or more Reserved Instances can be merged into a single Reserved Instance

- Modification does not change the remaining term of the Reserved Instances; their end dates remain the same.

- There is no fee, and you do not receive any new bills or invoices.

- Modification is separate from purchasing and does not affect how you use, purchase, or sell Reserved Instances.

- Complete reservation or a subset of it can be modified in one or more of the following ways:

- Switch Availability Zones within the same region

- Change between EC2-VPC and EC2-Classic

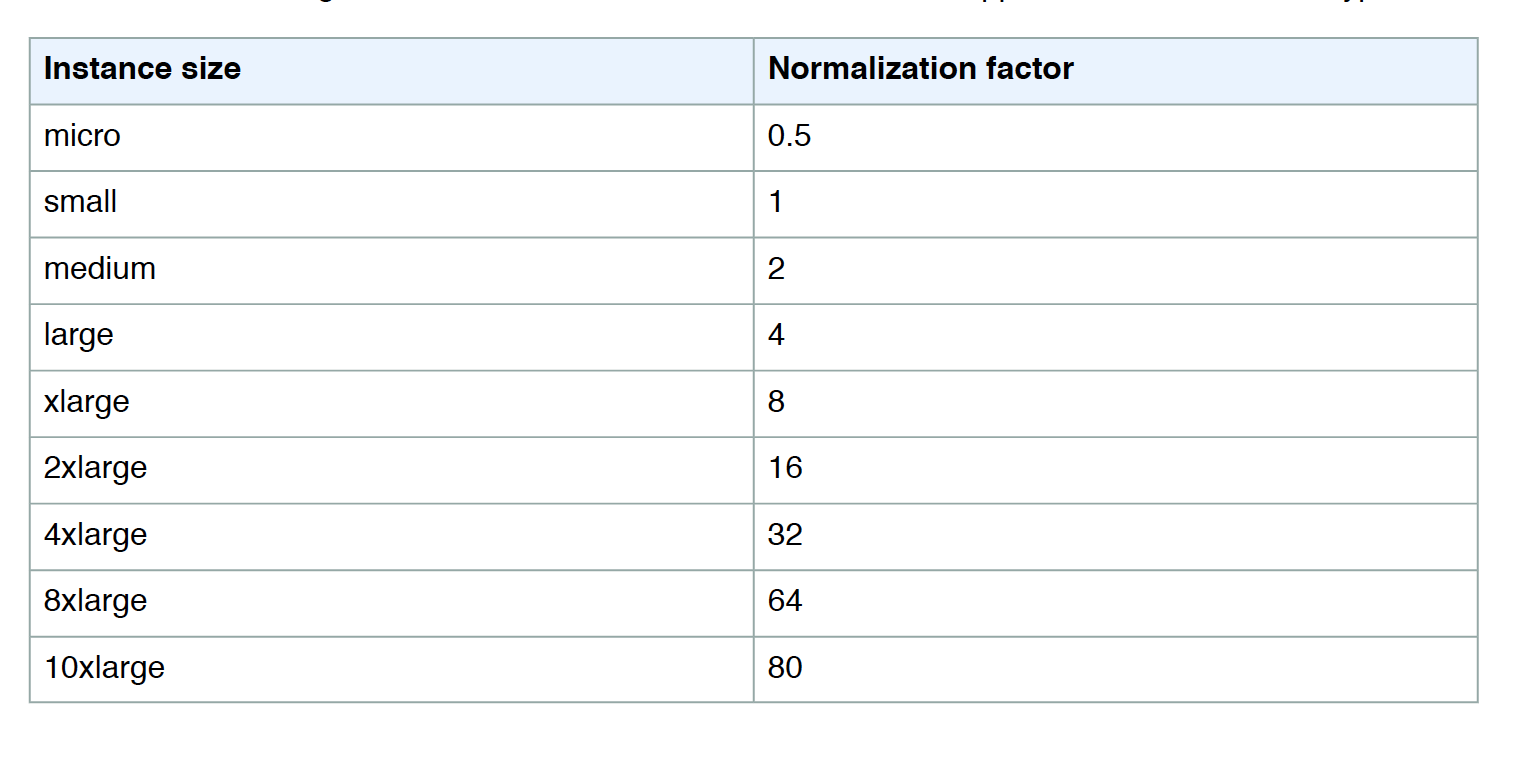

- Change the instance size within the same instance type, given the instance size footprint remains the same for e.g. four m1.medium instances (4 x 2), you can turn it into a reservation for eight m1.small instances (8 x 1) and vice versa. However, you cannot convert a reservation for a single m1.small instance (1 x 1) into a reservation for an m1.large instance (1 x 4).

Scheduled Reserved Instances

- AWS does not have any capacity available for Scheduled Reserved Instances or any plans to make it available in the future. To reserve capacity, use On-Demand Capacity Reservations instead

- Scheduled Reserved Instances (Scheduled Instances) enable capacity reservations purchase that recurs on a daily, weekly, or monthly basis, with a specified start time and duration, for a one-year term.

- Capacity is reserved in advance and is always available when needed

- Charges are incurred for the time that the instances are scheduled, even if they are not used

- Scheduled Instances are a good choice for workloads that do not run continuously, but do run on a regular schedule for e.g. weekly or monthly batch jobs

- EC2 launches the instances, based on the launch specification during their scheduled time periods

- EC2 terminates the EC2 instances three minutes before the end of the current scheduled time period to ensure the capacity is available for any other Scheduled Instances it is reserved for.

- Scheduled Reserved instances cannot be stopped or rebooted, however, they can be terminated and relaunched within minutes of termination

- Scheduled Reserved instances limits or restrictions

- after purchase cannot be modified, canceled, or resold

- only supported instance types: C3, C4, M4, and R3

- the required term is 365 days (one year).

- minimum required utilization is 1,200 hours per year

- purchase up to three months in advance

On-Demand Capacity Reservations

- On-Demand Capacity Reservations enable you to reserve compute capacity for the EC2 instances in a specific AZ for any duration.

- This gives you the ability to create and manage Capacity Reservations independently from the billing discounts offered by Savings Plans or regional Reserved Instances.

- By creating Capacity Reservations, you ensure that you always have access to EC2 capacity when you need it, for as long as you need it.

- Capacity Reservations can be created at any time, without entering into a one-year or three-year term commitment, and the capacity is available immediately.

- Billing starts as soon as the capacity is provisioned and the Capacity Reservation enters the active state.

- When no longer needed, the Capacity Reservation can be canceled to stop incurring charges.

- Capacity Reservation creation requires

- AZ in which to reserve the capacity

- Number of instances for which to reserve capacity

- Instance attributes, including the instance type, tenancy, and platform/OS

- Capacity Reservations can only be used by instances that match their attributes. By default, they are automatically used by running instances that match the attributes. If you don’t have any running instances that match the attributes of the Capacity Reservation, it remains unused until you launch an instance with matching attributes.

Spot Instances

Refer blog post @ EC2 Spot Instances

Dedicated Instances

- Dedicated Instances are EC2 instances that run in a VPC on hardware that’s dedicated to a single customer

- Dedicated Instances are physically isolated at the host hardware level from the instances that aren’t Dedicated Instances and from instances that belong to other AWS accounts.

- Each VPC has a related instance tenancy attribute.

default- default is shared.

- the tenancy can be changed to

dedicatedafter creation - all instances launched would be shared, unless you explicitly specify a different tenancy during instance launch.

dedicated- all instances launched would be dedicated

- the tenancy can’t be changed to

defaultafter creation

- Each instance launched into a VPC has a tenancy attribute. Default tenancy depends on the VPC tenancy, which by default is shared.

default– instance runs on shared hardware.dedicated– instance runs on single-tenant hardware.host– instance runs on a Dedicated Host, which is an isolated server with configurations that you can control.defaulttenancy cannot be changed todedicatedorhostand vice versa.dedicatedtenancy can be changed tohostand vice version

- Dedicated Instances can be launched using

- Create the VPC with the instance tenancy set to dedicated, all instances launched into this VPC are Dedicated Instances even though if you mark the tenancy as shared.

- Create the VPC with the instance tenancy set to default, and specify dedicated tenancy for any instances that should be Dedicated Instances when launched.

Dedicated Hosts

- EC2 Dedicated Host is a physical server with EC2 instance capacity fully dedicated to your use

- Dedicated Hosts allow using existing per-socket, per-core, or per-VM software licenses, including Windows Server, Microsoft SQL Server, SUSE, and Linux Enterprise Server.

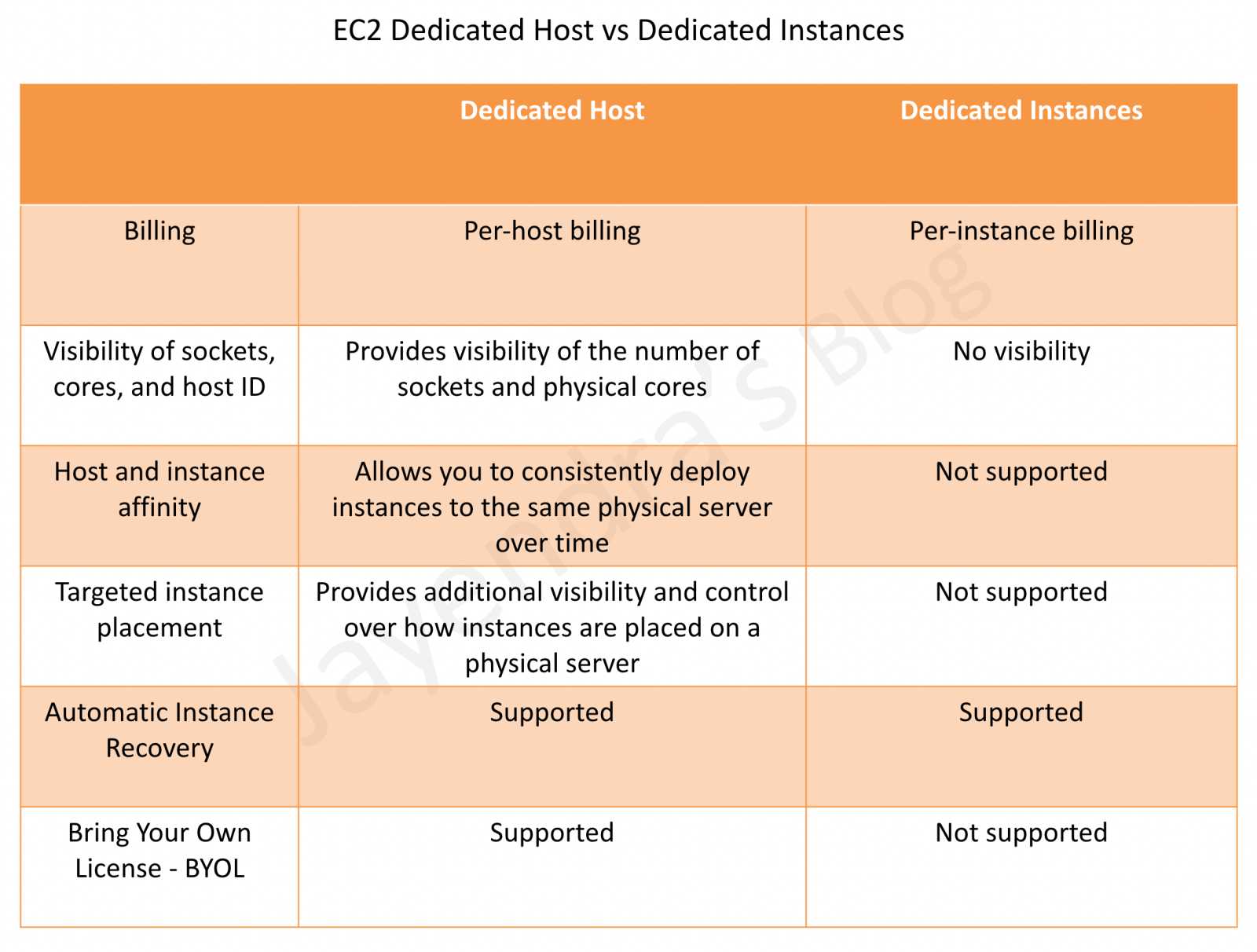

Dedicated Hosts vs Dedicated Instances

AWS Certification Exam Practice Questions

- Questions are collected from Internet and the answers are marked as per my knowledge and understanding (which might differ with yours).

- AWS services are updated everyday and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep up the pace with AWS updates, so even if the underlying feature has changed the question might not be updated

- Open to further feedback, discussion and correction.

- If I want my instance to run on a single-tenant hardware, which value do I have to set the instance’s tenancy attribute to?

- dedicated

- isolated

- one

- reserved

- You have a video transcoding application running on Amazon EC2. Each instance polls a queue to find out which video should be transcoded, and then runs a transcoding process. If this process is interrupted, the video will be transcoded by another instance based on the queuing system. You have a large backlog of videos, which need to be transcoded, and would like to reduce this backlog by adding more instances. You will need these instances only until the backlog is reduced. Which type of Amazon EC2 instances should you use to reduce the backlog in the most cost efficient way?

- Reserved instances

- Spot instances

- Dedicated instances

- On-demand instances

- The one-time payment for Reserved Instances is __________ refundable if the reservation is cancelled.

- always

- in some circumstances

- never

- You run a web application where web servers on EC2 Instances are In an Auto Scaling group Monitoring over the last 6 months shows that 6 web servers are necessary to handle the minimum load. During the day up to 12 servers are needed Five to six days per year, the number of web servers required might go up to 15. What would you recommend to minimize costs while being able to provide hill availability?

- 6 Reserved instances (heavy utilization). 6 Reserved instances (medium utilization), rest covered by On-Demand instances

- 6 Reserved instances (heavy utilization). 6 On-Demand instances, rest covered by Spot Instances (don’t go for spot as availability not guaranteed)

- 6 Reserved instances (heavy utilization) 6 Spot instances, rest covered by On-Demand instances (don’t go for spot as availability not guaranteed)

- 6 Reserved instances (heavy utilization) 6 Reserved instances (medium utilization) rest covered by Spot instances (don’t go for spot as availability not guaranteed)

- A user is running one instance for only 3 hours every day. The user wants to save some cost with the instance. Which of the below mentioned Reserved Instance categories is advised in this case?

- The user should not use RI; instead only go with the on-demand pricing (seems question before the introduction of the Scheduled Reserved instances in Jan 2016, which can be used in this case)

- The user should use the AWS high utilized RI

- The user should use the AWS medium utilized RI

- The user should use the AWS low utilized RI

- Which of the following are characteristics of a reserved instance? Choose 3 answers (but 4 answers seem correct)

- It can be migrated across Availability Zones (can be modified)

- It is specific to an Amazon Machine Image (AMI) (specific to platform)

- It can be applied to instances launched by Auto Scaling (are allowed)

- It is specific to an instance Type (specific to instance family but instance type can be changed)

- It can be used to lower Total Cost of Ownership (TCO) of a system (helps to reduce cost)

- You have a distributed application that periodically processes large volumes of data across multiple Amazon EC2 Instances. The application is designed to recover gracefully from Amazon EC2 instance failures. You are required to accomplish this task in the most cost-effective way. Which of the following will meet your requirements?

- Spot Instances

- Reserved instances

- Dedicated instances

- On-Demand instances

- Can I move a Reserved Instance from one Region to another?

- No

- Only if they are moving into GovCloud

- Yes

- Only if they are moving to US East from another region

- An application you maintain consists of multiple EC2 instances in a default tenancy VPC. This application has undergone an internal audit and has been determined to require dedicated hardware for one instance. Your compliance team has given you a week to move this instance to single-tenant hardware. Which process will have minimal impact on your application while complying with this requirement?

- Create a new VPC with tenancy=dedicated and migrate to the new VPC (possible but impact not minimal)

- Use

ec2-reboot-instancescommand line and set the parameterdedicated=true - Right click on the instance, select properties and check the box for dedicated tenancy

- Stop the instance, create an AMI, launch a new instance with tenancy=dedicated, and terminate the old instance

- Your department creates regular analytics reports from your company’s log files. All log data is collected in Amazon S3 and processed by daily Amazon Elastic Map Reduce (EMR) jobs that generate daily PDF reports and aggregated tables in CSV format for an Amazon Redshift data warehouse. Your CFO requests that you optimize the cost structure for this system. Which of the following alternatives will lower costs without compromising average performance of the system or data integrity for the raw data? [PROFESSIONAL]

- Use reduced redundancy storage (RRS) for PDF and CSV data in Amazon S3. Add Spot instances to Amazon EMR jobs. Use Reserved Instances for Amazon Redshift. (Spot instances impacts performance)

- Use reduced redundancy storage (RRS) for all data in S3. Use a combination of Spot instances and Reserved Instances for Amazon EMR jobs. Use Reserved instances for Amazon Redshift (Combination of the Spot and reserved with guarantee performance and help reduce cost. Also, RRS would reduce cost and guarantee data integrity, which is different from data durability )

- Use reduced redundancy storage (RRS) for all data in Amazon S3. Add Spot Instances to Amazon EMR jobs. Use Reserved Instances for Amazon Redshift (Spot instances impacts performance)

- Use reduced redundancy storage (RRS) for PDF and CSV data in S3. Add Spot Instances to EMR jobs. Use Spot Instances for Amazon Redshift. (Spot instances impacts performance)

- A research scientist is planning for the one-time launch of an Elastic MapReduce cluster and is encouraged by her manager to minimize the costs. The cluster is designed to ingest 200TB of genomics data with a total of 100 Amazon EC2 instances and is expected to run for around four hours. The resulting data set must be stored temporarily until archived into an Amazon RDS Oracle instance. Which option will help save the most money while meeting requirements? [PROFESSIONAL]

- Store ingest and output files in Amazon S3. Deploy on-demand for the master and core nodes and spot for the task nodes.

- Optimize by deploying a combination of on-demand, RI and spot-pricing models for the master, core and task nodes. Store ingest and output files in Amazon S3 with a lifecycle policy that archives them to Amazon Glacier. (Reserved Instance not cost effective for 4 hour job and data not needed in S3 once moved to RDS)

- Store the ingest files in Amazon S3 RRS and store the output files in S3. Deploy Reserved Instances for the master and core nodes and on-demand for the task nodes. (Reserved Instance not cost effective)

- Deploy on-demand master, core and task nodes and store ingest and output files in Amazon S3 RRS (RRS provides not much cost benefits for a 4 hour job while the amount of input data would take time to upload and Output data to reproduce)

- A company currently has a highly available web application running in production. The application’s web front-end utilizes an Elastic Load Balancer and Auto scaling across 3 availability zones. During peak load, your web servers operate at 90% utilization and leverage a combination of heavy utilization reserved instances for steady state load and on-demand and spot instances for peak load. You are asked with designing a cost effective architecture to allow the application to recover quickly in the event that an availability zone is unavailable during peak load. Which option provides the most cost effective high availability architectural design for this application? [PROFESSIONAL]

- Increase auto scaling capacity and scaling thresholds to allow the web-front to cost-effectively scale across all availability zones to lower aggregate utilization levels that will allow an availability zone to fail during peak load without affecting the applications availability. (Ideal for HA to reduce and distribute load)

- Continue to run your web front-end at 90% utilization, but purchase an appropriate number of utilization RIs in each availability zone to cover the loss of any of the other availability zones during peak load. (90% is not recommended as well RIs would increase the cost)

- Continue to run your web front-end at 90% utilization, but leverage a high bid price strategy to cover the loss of any of the other availability zones during peak load. (90% is not recommended as high bid price would not guarantee instances and would increase cost)

- Increase use of spot instances to cost effectively to scale the web front-end across all availability zones to lower aggregate utilization levels that will allow an availability zone to fail during peak load without affecting the applications availability. (Availability cannot be guaranteed)

- You run accounting software in the AWS cloud. This software needs to be online continuously during the day every day of the week, and has a very static requirement for compute resources. You also have other, unrelated batch jobs that need to run once per day at any time of your choosing. How should you minimize cost? [PROFESSIONAL]

- Purchase a Heavy Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs. (Because the instance will always be online during the day, in a predictable manner, and there are sequences of batch jobs to perform at any time, we should run the batch jobs when the account software is off. We can achieve Heavy Utilization by alternating these times, so we should purchase the reservation as such, as this represents the lowest cost. There is no such thing a “Full” level utilization purchases on EC2.)

- Purchase a Medium Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- Purchase a Light Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- Purchase a Full Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.