EC2 Elastic Block Store – EBS

- Elastic Block Store (EBS) provides highly available, reliable, durable, block-level storage volumes that can be attached to an EC2 instance.

- EBS is recommended as the primary storage device for data that requires frequent and granular updates, e.g., running a database or file system.

- An EBS volume

  - behaves like a raw, unformatted, external block device that can be attached to a single EC2 instance at a time (unless Multi-Attach is enabled on a supported Provisioned IOPS volume).

  - persists independently from the running life of an instance.

  - is zonal: it can be attached to any instance within the same Availability Zone and used like any other physical hard drive.

  - is particularly well-suited for use as the primary storage for file systems, databases, or any applications that require fine-grained updates and access to raw, unformatted, block-level storage.

Elastic Block Store Features

- EBS Volumes are created in a specific Availability Zone and can be attached to any instance in that same AZ.

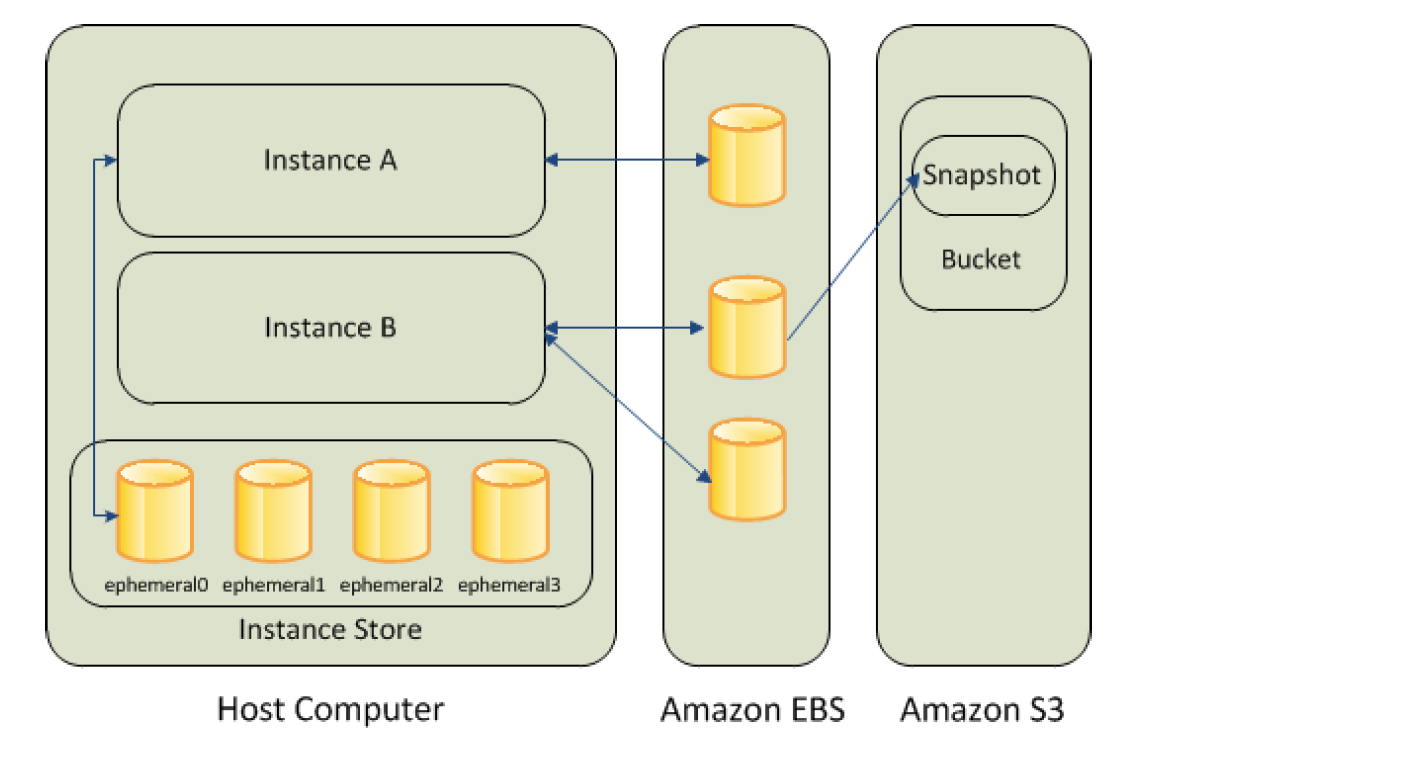

- Volumes can be backed up by creating a snapshot of the volume, which is stored in S3.

- Volumes created from a snapshot can be attached to another instance within the same region.

- Volumes can be made available outside of the AZ by creating and restoring the snapshot to a new volume anywhere in that region.

- Snapshots can also be copied to other regions and then restored to new volumes, making it easier to leverage multiple AWS regions for geographical expansion, data center migration, and disaster recovery.

- Volumes allow encryption using the EBS encryption feature. All data stored at rest, disk I/O, and snapshots created from the volume are encrypted.

- Encryption occurs on the EC2 instance, providing encryption of data-in-transit from EC2 to the EBS volume.

- Elastic Volumes help easily adapt the volumes as the needs of the applications change. Elastic Volumes allow you to dynamically increase capacity, tune performance, and change the type of any new or existing current generation volume with no downtime or performance impact.

- You can dynamically increase size, modify the provisioned IOPS capacity, and change volume type on live production volumes.

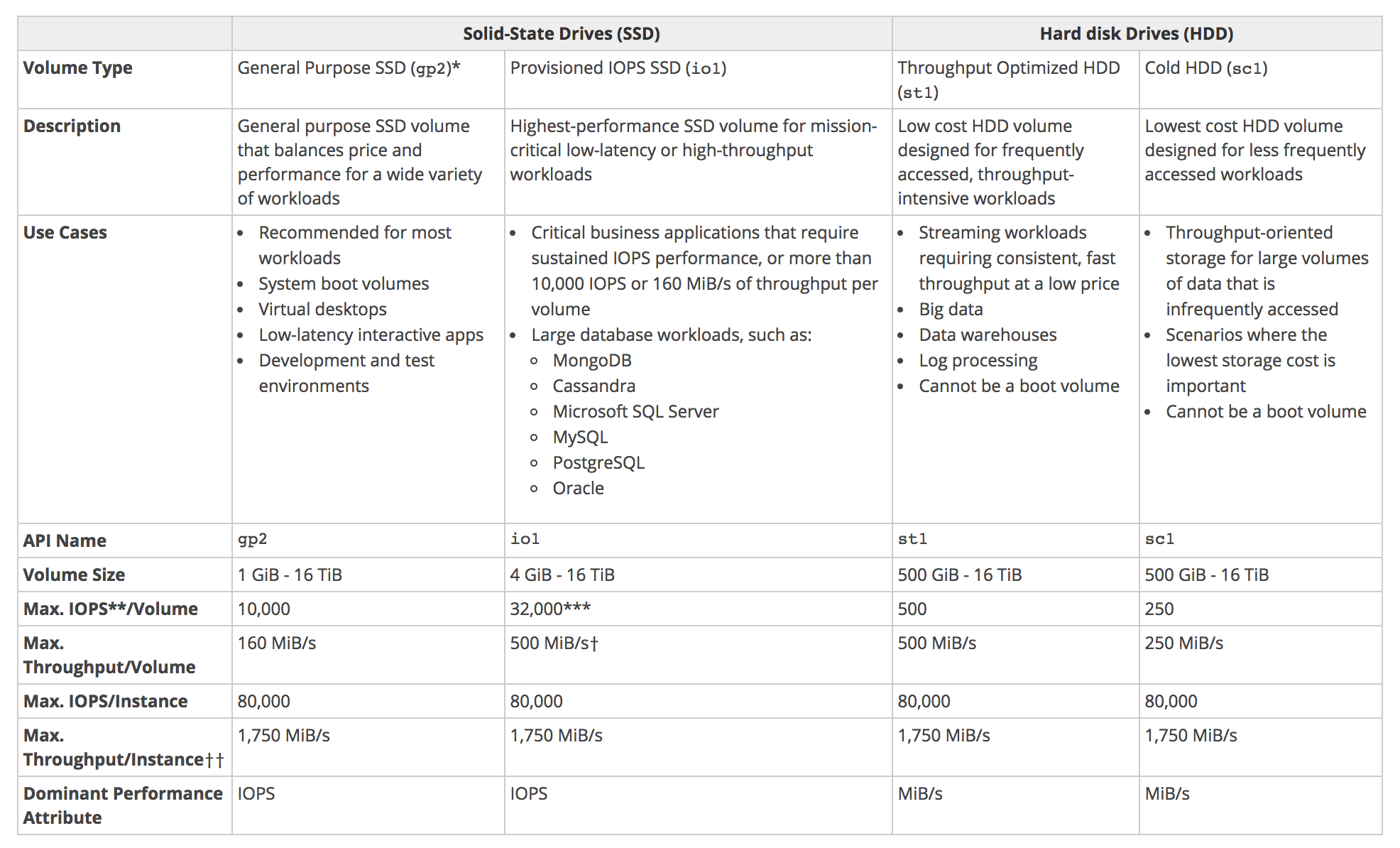

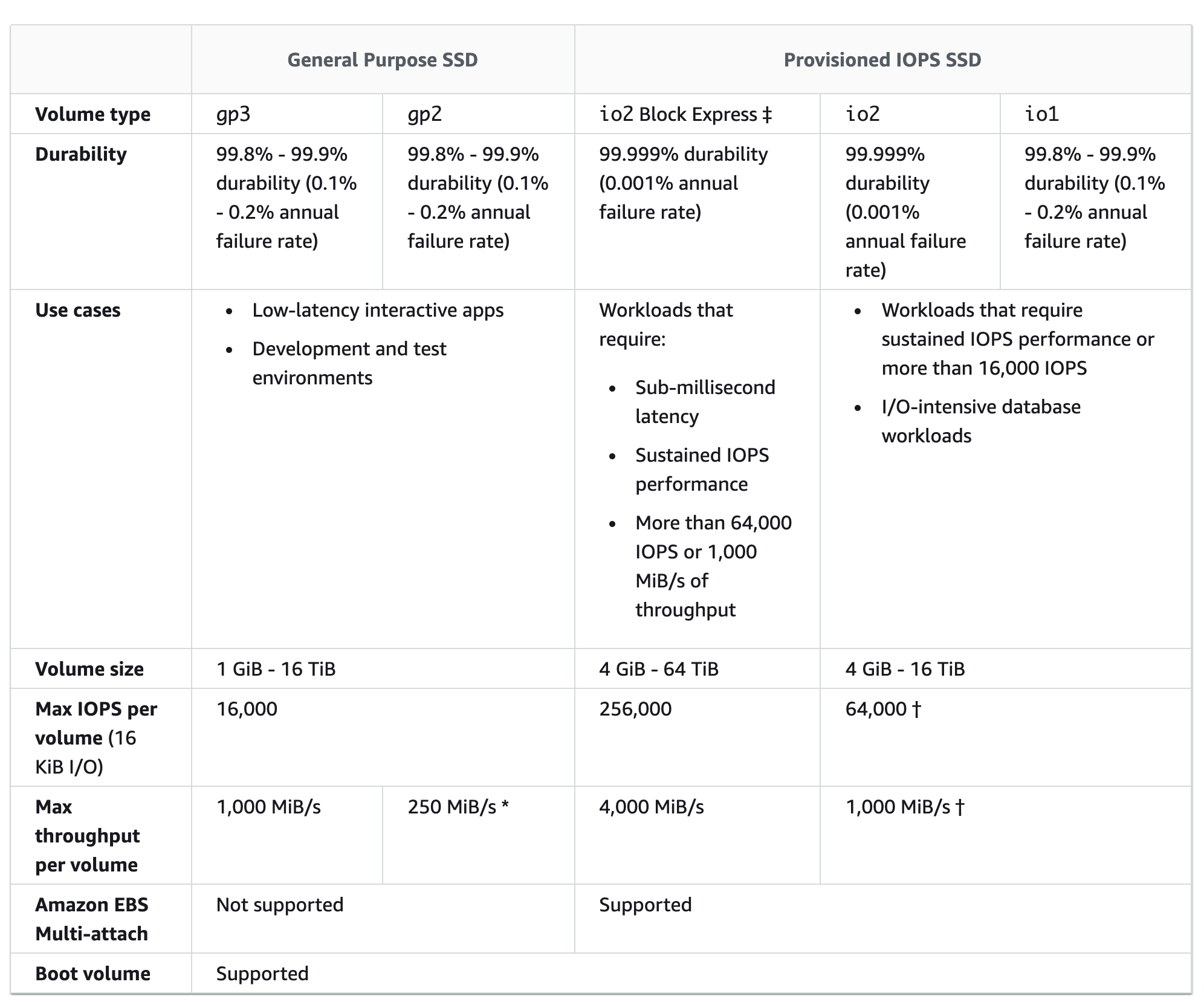

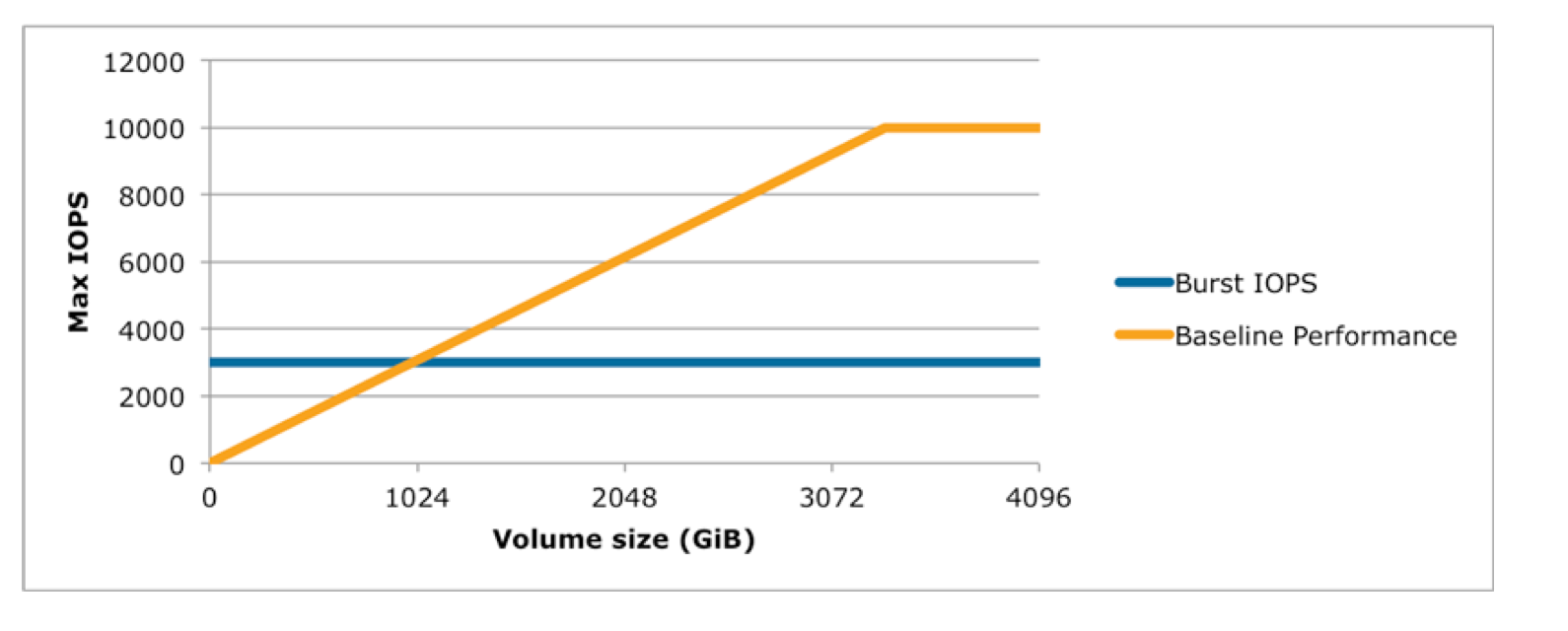

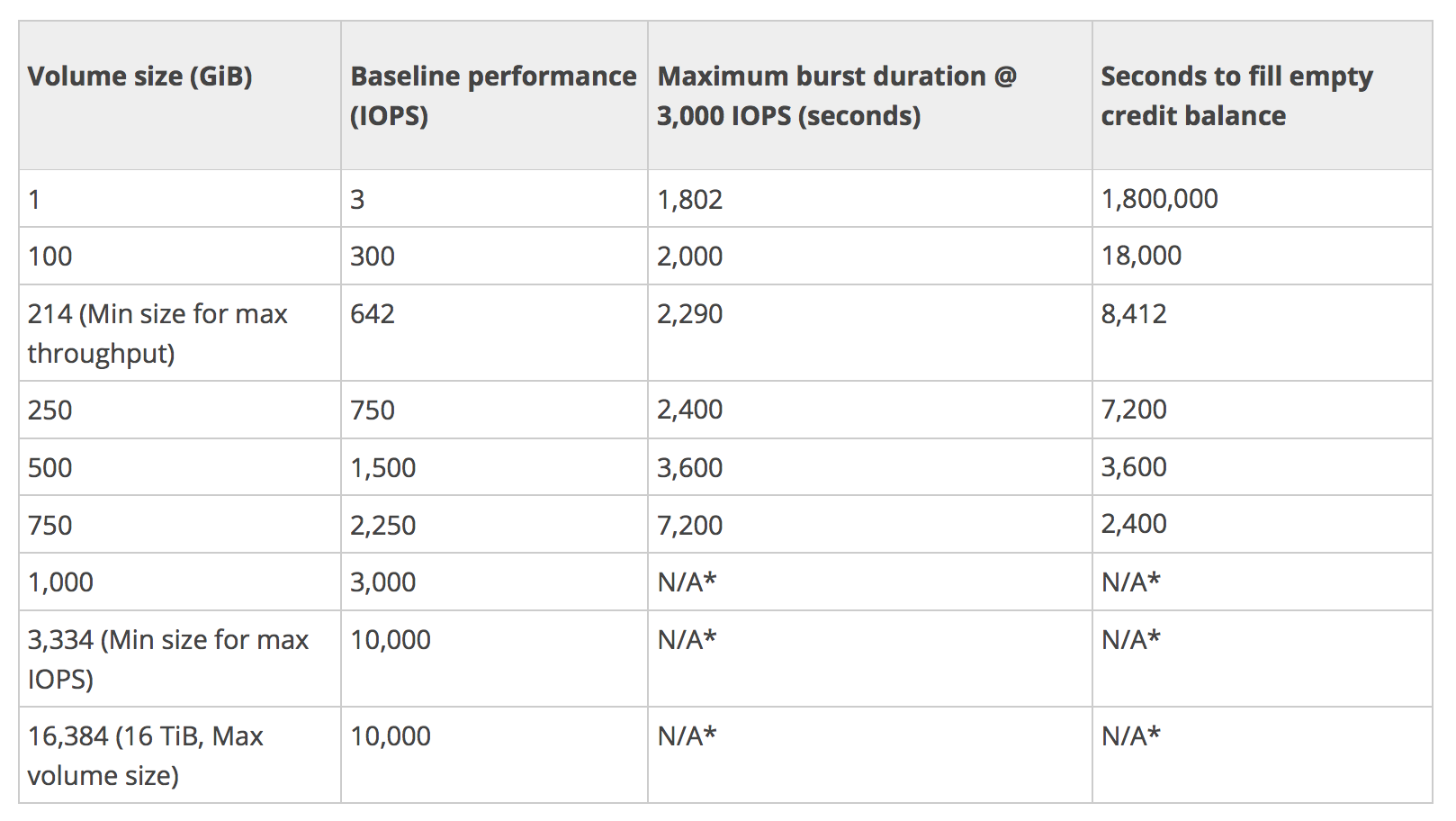

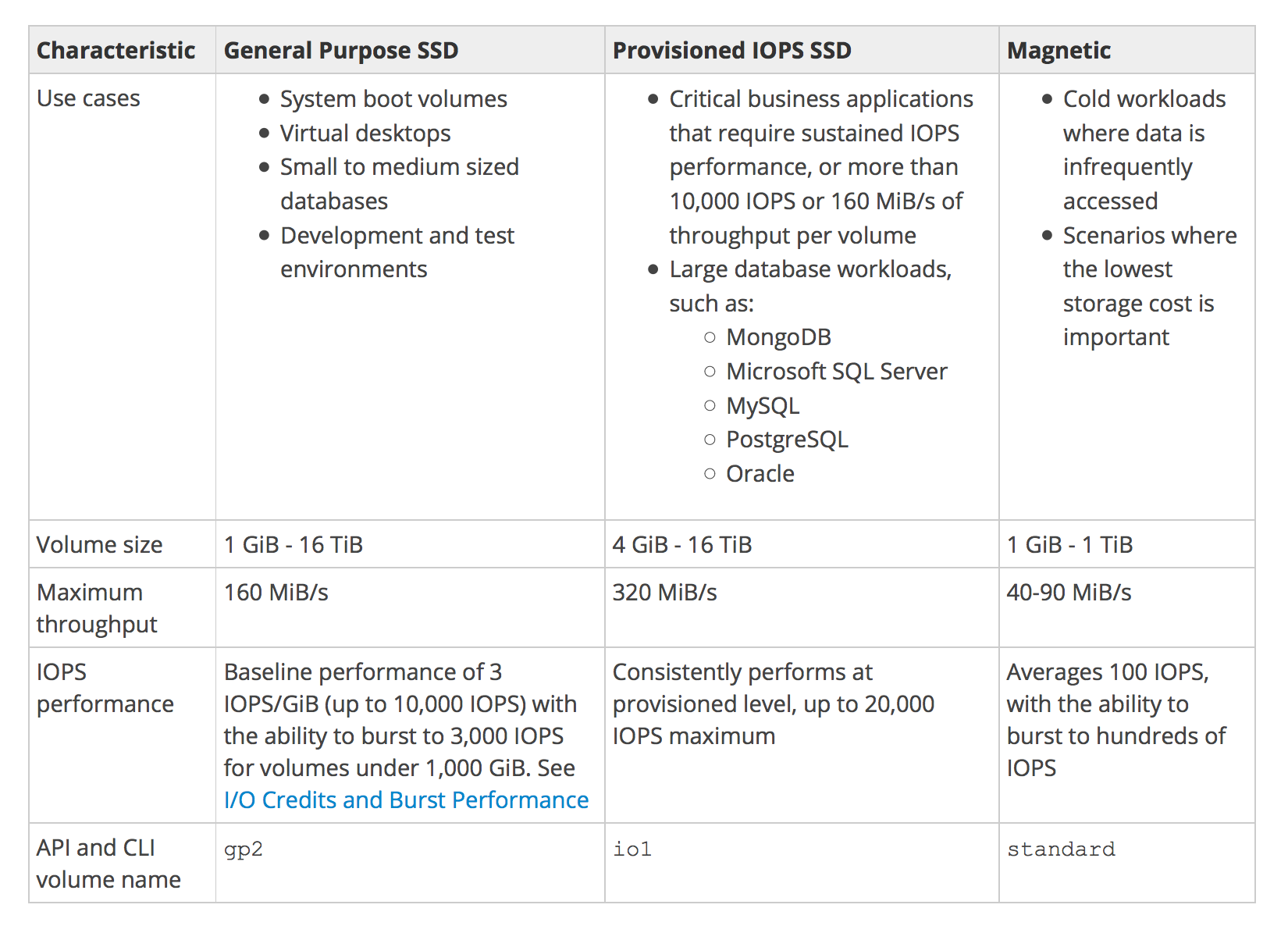

- General Purpose (SSD) volumes support up to 16,000 IOPS and 250 MB/s of throughput, and Provisioned IOPS (SSD) volumes support up to 64,000 IOPS and 1,000 MB/s of throughput.

- EBS Magnetic volumes can be created from 1 GiB to 1 TiB in size; EBS General Purpose (SSD) and Provisioned IOPS (SSD) volumes can be created up to 16 TiB in size.

EBS Benefits

- Data Availability

  - Data is automatically replicated within an Availability Zone to prevent data loss due to the failure of any single hardware component.

- Data Persistence

  - persists independently of the running life of an EC2 instance

  - persists when an instance is stopped, started, or rebooted

  - Root volume is deleted, by default, on instance termination, but the behaviour can be changed using the DeleteOnTermination flag

  - Other attached (non-root) volumes persist, by default, on instance termination

- Data Encryption

  - can be encrypted by the EBS encryption feature

  - uses the AES-256 algorithm and an Amazon-managed key infrastructure.

  - Encryption occurs on the server that hosts the EC2 instance, providing encryption of data-in-transit from the EC2 instance to EBS storage

  - Snapshots of encrypted EBS volumes are automatically encrypted.

- Snapshots

  - provides the ability to create snapshots (backups) of any EBS volume and write a copy of the data in the volume to S3, where it is stored redundantly in multiple Availability Zones.

  - can be used to create new volumes, increase the size of the volumes, or replicate data across Availability Zones or Regions.

  - are incremental backups and store only the data that has changed since the last snapshot was taken.

  - Snapshot size can be smaller than the volume size, as the data is compressed before being saved to S3.

  - Even though snapshots are saved incrementally, the snapshot deletion process is designed so that you need to retain only the most recent snapshot in order to restore the volume.

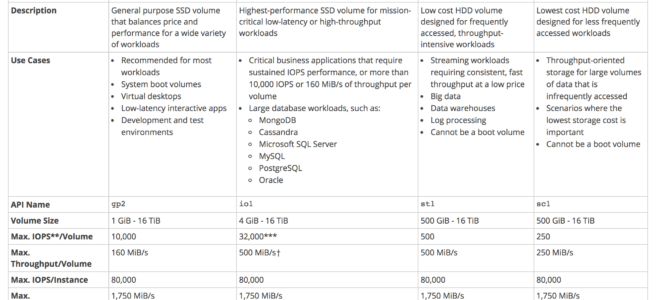
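The incremental behaviour of snapshots can be illustrated with a small toy model. This is only a sketch for intuition, not how EBS is implemented internally: the `SnapshotStore` class, its block ids, and the dictionary standing in for a volume are all hypothetical. It shows why deleting an older snapshot does not break restoring from the most recent one.

```python
# Toy model of incremental snapshots (hypothetical, for intuition only).
class SnapshotStore:
    def __init__(self):
        self.blocks = {}     # block id -> data held in backing storage
        self.snapshots = {}  # snapshot name -> {volume offset: block id}
        self._next_id = 0

    def snapshot(self, name, volume):
        """Store only the blocks that changed since the most recent snapshot."""
        last = next(reversed(self.snapshots), None)
        previous = self.snapshots.get(last, {})
        index = {}
        for offset, data in volume.items():
            old_id = previous.get(offset)
            if old_id is not None and self.blocks[old_id] == data:
                index[offset] = old_id              # unchanged: reuse stored block
            else:
                self.blocks[self._next_id] = data   # changed: store a new block
                index[offset] = self._next_id
                self._next_id += 1
        self.snapshots[name] = index

    def delete(self, name):
        """Drop a snapshot and free only blocks no other snapshot references."""
        del self.snapshots[name]
        in_use = {b for idx in self.snapshots.values() for b in idx.values()}
        for block_id in list(self.blocks):
            if block_id not in in_use:
                del self.blocks[block_id]

    def restore(self, name):
        """Rebuild the full volume contents from a single snapshot."""
        return {off: self.blocks[b] for off, b in self.snapshots[name].items()}


store = SnapshotStore()
volume = {0: "a", 1: "b", 2: "c"}
store.snapshot("snap-1", volume)
volume[1] = "B"                        # only one block changes
store.snapshot("snap-2", volume)
stored_after_two = len(store.blocks)   # three original blocks plus one changed block
store.delete("snap-1")                 # frees only the block that snap-2 does not use
restored = store.restore("snap-2")
```

In this model the second snapshot adds a single block, and the latest snapshot remains fully restorable after the first one is deleted.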

EBS Volume Types

Refer to the blog post on EBS Volume Types

EBS Volume

EBS Volume Creation

- Creating new volumes

  - A completely new volume can be created from the console or command line tools and then attached to an EC2 instance in the same Availability Zone.

- Restoring volumes from snapshots

  - Volumes can also be restored from previously created snapshots.

  - New volumes created from existing snapshots are loaded lazily in the background.

  - There is no need to wait for all of the data to transfer from S3 to the volume before the attached instance can start accessing the volume and all its data.

  - If the instance accesses data that hasn’t yet been loaded, the volume immediately downloads the requested data from S3, and continues loading the rest of the data in the background.

  - Volumes restored from encrypted snapshots are always encrypted.

- Volumes can be created and attached to an EC2 instance at launch by specifying a block device mapping.
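The restore path could be scripted roughly as follows. This is a minimal sketch, assuming `ec2` is a boto3 EC2 client (for example `boto3.client("ec2")`); the function name `restore_and_attach` and the default device name `/dev/sdf` are illustrative choices, not something prescribed by this post.

```python
def restore_and_attach(ec2, snapshot_id, instance_id, availability_zone,
                       device="/dev/sdf"):
    """Create a volume from a snapshot and attach it to an instance.

    `ec2` is assumed to be a boto3 EC2 client. The volume must be created
    in the same Availability Zone as the target instance.
    """
    volume = ec2.create_volume(
        SnapshotId=snapshot_id,
        AvailabilityZone=availability_zone,
    )
    volume_id = volume["VolumeId"]

    # Wait until the volume is in the "available" state. Its data keeps
    # loading lazily from S3 after this point.
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])

    ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device=device)
    return volume_id
```

Because the instance can use the volume before all data has been loaded, the first access to a block that has not been loaded yet may be slower than later accesses.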

EBS Volume Detachment

- EBS volumes can be detached from an instance explicitly or by terminating the instance.

- EBS root volumes can be detached by stopping the instance.

- EBS data volumes, attached to a running instance, can be detached by unmounting the volume from the instance first.

- If the volume is detached without being unmounted, it might result in the volume being stuck in a busy state and could possibly damage the file system or the data it contains.

- EBS volume can be force detached from an instance, using the Force Detach option, but it might lead to data loss or a corrupted file system as the instance does not get an opportunity to flush file system caches or file system metadata.

- Charges are still incurred for the volume after its detachment
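A detach call might look like the sketch below, assuming `ec2` is a boto3 EC2 client; the helper name `detach_data_volume` is hypothetical. Unmounting has to happen inside the instance's operating system beforehand and is not something this API call does for you.

```python
def detach_data_volume(ec2, volume_id, force=False):
    """Detach a data volume and wait until it is available again.

    `ec2` is assumed to be a boto3 EC2 client. Unmount the volume inside
    the instance first. Pass force=True only as a last resort, since the
    instance gets no chance to flush file system caches or metadata.
    """
    ec2.detach_volume(VolumeId=volume_id, Force=force)
    # After a successful detach the volume returns to the "available" state.
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    return volume_id
```

The detached volume keeps accruing storage charges until it is deleted.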

EBS Volume Deletion

- Deleting an EBS volume wipes out its data, and the volume can no longer be attached to any instance. However, it can be backed up before deletion using EBS snapshots.

EBS Volume Resize

- EBS Elastic Volumes can be modified to increase the volume size, change the volume type, or adjust the performance of your EBS volumes.

- If the instance supports Elastic Volumes, changes can be performed without detaching the volume or restarting the instance.
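A live modification could be requested as in this sketch, assuming `ec2` is a boto3 EC2 client; the helper name `resize_volume` and its optional arguments are illustrative.

```python
def resize_volume(ec2, volume_id, new_size_gib, volume_type=None, iops=None):
    """Request an Elastic Volumes modification on an existing volume.

    `ec2` is assumed to be a boto3 EC2 client. Only the arguments that are
    supplied are sent, so the volume type and IOPS stay unchanged unless
    they are passed explicitly.
    """
    params = {"VolumeId": volume_id, "Size": new_size_gib}
    if volume_type is not None:
        params["VolumeType"] = volume_type
    if iops is not None:
        params["Iops"] = iops

    response = ec2.modify_volume(**params)
    # The modification proceeds asynchronously; this is its initial state.
    return response["VolumeModification"]["ModificationState"]
```

After the size increase completes, the file system on the volume still has to be extended from inside the instance before the extra capacity is usable.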

EBS Volume Snapshots

Refer to the blog post on EBS Snapshot

EBS Encryption

- Encrypted EBS volumes can be created and attached to a supported instance type, and the following types of data are encrypted

  - Data at rest

  - All disk I/O, i.e., all data moving between the volume and the instance

  - All snapshots created from the volume

  - All volumes created from those snapshots

- Encryption occurs on the servers that host EC2 instances, providing encryption of data-in-transit from EC2 instances to EBS storage.

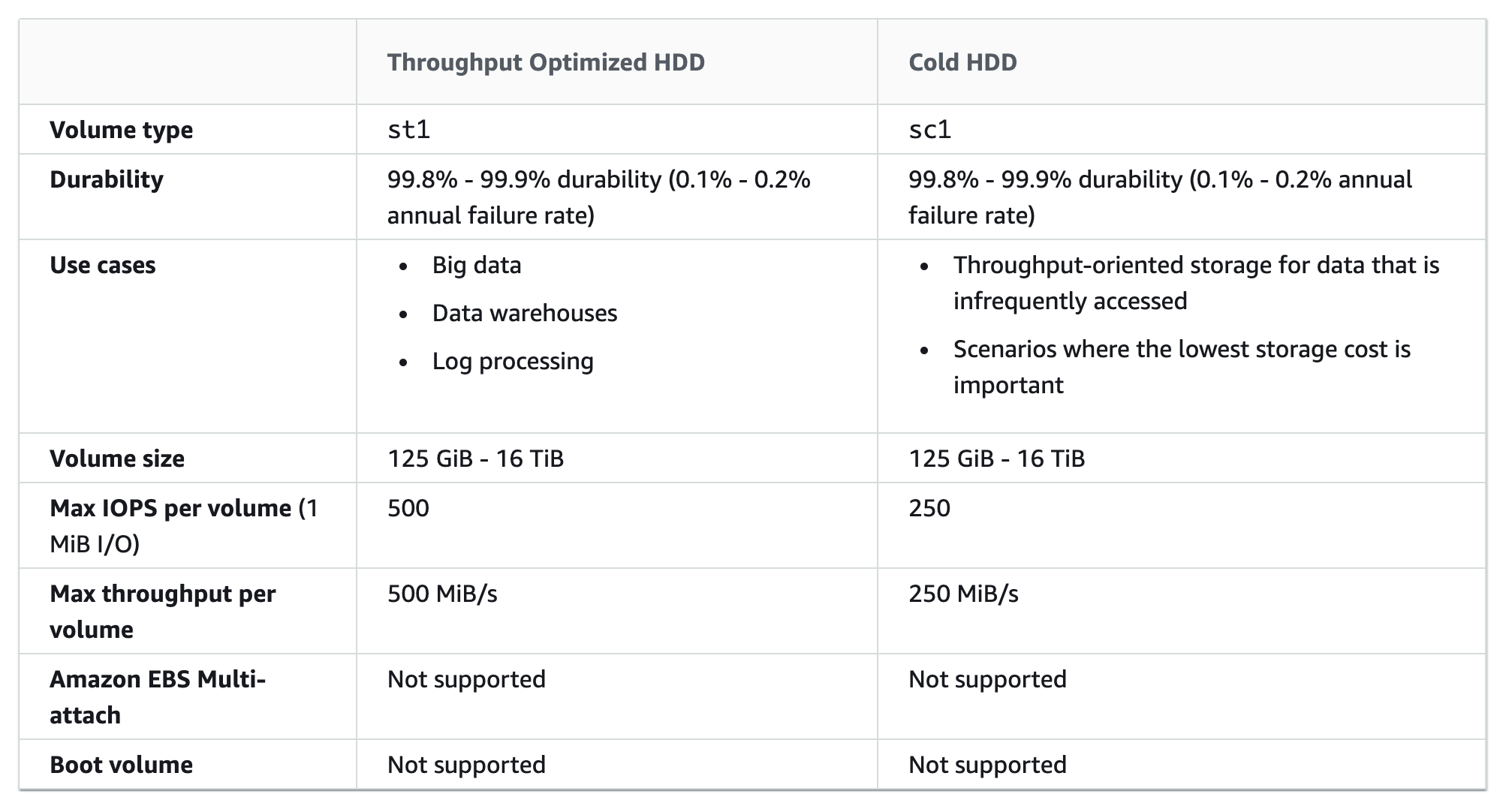

- EBS encryption is supported with all EBS volume types (gp2, io1, st1, and sc1), and has the same IOPS performance on encrypted volumes as with unencrypted volumes, with a minimal effect on latency

- EBS encryption is only available on select instance types.

- Volumes created from encrypted snapshots and snapshots of encrypted volumes are automatically encrypted using the same encryption key.

- EBS encryption uses AWS KMS customer master keys (CMK) when creating encrypted volumes and any snapshots created from the encrypted volumes.

- EBS volumes can be encrypted using either

  - a default CMK created for you automatically.

  - a CMK that you created separately using AWS KMS, giving you more flexibility, including the ability to create, rotate, disable, define access controls, and audit the encryption keys used to protect your data.

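- Snapshots of encrypted volumes cannot be made public, because any account would then be able to decrypt your data. Snapshots encrypted with the default CMK cannot be shared with other accounts either; the data needs to be re-encrypted with a customer managed CMK or migrated to an unencrypted state before sharing.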

- Existing unencrypted volumes cannot be encrypted directly, but can be migrated using either option

  - Option 1

    - create an unencrypted snapshot from the volume

    - create an encrypted copy of the unencrypted snapshot

    - create an encrypted volume from the encrypted snapshot

  - Option 2

    - create an unencrypted snapshot from the volume

    - create an encrypted volume from the unencrypted snapshot

- An encrypted snapshot can be created from an unencrypted snapshot by creating an encrypted copy of the unencrypted snapshot.

- An unencrypted volume cannot be created from an encrypted volume directly; the data needs to be migrated.
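The first migration option (snapshot, encrypted copy, new volume) might be scripted like this. It is a sketch under stated assumptions: `ec2` is a boto3 EC2 client for the region that holds the volume, and the function name `encrypt_existing_volume` and the snapshot description text are made up for illustration. If `kms_key_id` is omitted, the default key for EBS encryption is used.

```python
def encrypt_existing_volume(ec2, volume_id, region, availability_zone,
                            kms_key_id=None):
    """Produce an encrypted volume from an unencrypted one (Option 1).

    `ec2` is assumed to be a boto3 EC2 client. Returns the id of the new,
    encrypted volume; the original volume is left untouched.
    """
    # Step 1: unencrypted snapshot of the source volume.
    snapshot = ec2.create_snapshot(
        VolumeId=volume_id,
        Description="unencrypted source for encryption migration",
    )
    snapshot_id = snapshot["SnapshotId"]
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snapshot_id])

    # Step 2: encrypted copy of that snapshot.
    copy_args = {
        "SourceSnapshotId": snapshot_id,
        "SourceRegion": region,
        "Encrypted": True,
    }
    if kms_key_id is not None:
        copy_args["KmsKeyId"] = kms_key_id
    encrypted_snapshot_id = ec2.copy_snapshot(**copy_args)["SnapshotId"]
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[encrypted_snapshot_id])

    # Step 3: new volume from the encrypted snapshot (always encrypted).
    volume = ec2.create_volume(
        SnapshotId=encrypted_snapshot_id,
        AvailabilityZone=availability_zone,
    )
    return volume["VolumeId"]
```

The new volume then has to be swapped in for the old one, for example by detaching the original and attaching the encrypted volume under the same device name.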

EBS Multi-Attach

Refer to the blog post on EBS Multi-Attach

EBS Performance

Refer to the blog post on EBS Performance

EBS vs Instance Store

Refer to the blog post on EBS vs Instance Store

AWS Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- AWS services are updated every day and both the answers and questions might soon be outdated, so research accordingly.

- AWS exam questions are not updated to keep pace with AWS updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion and correction.

- _____ is a durable, block-level storage volume that you can attach to a single, running Amazon EC2 instance.

- Amazon S3

- Amazon EBS

- None of these

- All of these

- Which Amazon storage do you think is the best for my database-style applications that frequently encounter many random reads and writes across the dataset?

- None of these.

- Amazon Instance Storage

- Any of these

- Amazon EBS

- What does Amazon EBS stand for?

- Elastic Block Storage

- Elastic Business Server

- Elastic Blade Server

- Elastic Block Store

- Which Amazon Storage behaves like raw, unformatted, external block devices that you can attach to your instances?

- None of these.

- Amazon Instance Storage

- Amazon EBS

- All of these

- A user has created numerous EBS volumes. What is the general limit for each AWS account for the maximum number of EBS volumes that can be created?

- 10000

- 5000

- 100

- 1000

- Select the correct set of steps for exposing the snapshot only to specific AWS accounts

- Select Public for all the accounts and check mark those accounts with whom you want to expose the snapshots and click save.

- Select Private and enter the IDs of those AWS accounts, and click Save.

- Select Public, enter the IDs of those AWS accounts, and click Save.

- Select Public, mark the IDs of those AWS accounts as private, and click Save.

- If an Amazon EBS volume is the root device of an instance, can I detach it without stopping the instance?

- Yes but only if Windows instance

- No

- Yes

- Yes but only if a Linux instance

- Can we attach an EBS volume to more than one EC2 instance at the same time?

- Yes

- No

- Only EC2-optimized EBS volumes.

- Only in read mode.

- Do the Amazon EBS volumes persist independently from the running life of an Amazon EC2 instance?

- Only if instructed to when created

- Yes

- No

- Can I delete a snapshot of the root device of an EBS volume used by a registered AMI?

- Only via API

- Only via Console

- Yes

- No

- By default, EBS volumes that are created and attached to an instance at launch are deleted when that instance is terminated. You can modify this behavior by changing the value of the flag_____ to false when you launch the instance

- DeleteOnTermination

- RemoveOnDeletion

- RemoveOnTermination

- TerminateOnDeletion

- Your company policies require encryption of sensitive data at rest. You are considering the possible options for protecting data while storing it at rest on an EBS data volume, attached to an EC2 instance. Which of these options would allow you to encrypt your data at rest? (Choose 3 answers)

- Implement third party volume encryption tools

- Do nothing as EBS volumes are encrypted by default

- Encrypt data inside your applications before storing it on EBS

- Encrypt data using native data encryption drivers at the file system level

- Implement SSL/TLS for all services running on the server

- Which of the following are true regarding encrypted Amazon Elastic Block Store (EBS) volumes? Choose 2 answers

- Supported on all Amazon EBS volume types

- Snapshots are automatically encrypted

- Available to all instance types

- Existing volumes can be encrypted

- Shared volumes can be encrypted

- How can you secure data at rest on an EBS volume?

- Encrypt the volume using the S3 server-side encryption service

- Attach the volume to an instance using EC2’s SSL interface.

- Create an IAM policy that restricts read and write access to the volume.

- Write the data randomly instead of sequentially.

- Use an encrypted file system on top of the EBS volume

- A user has deployed an application on an EBS backed EC2 instance. For a better performance of application, it requires dedicated EC2 to EBS traffic. How can the user achieve this?

- Launch the EC2 instance as EBS dedicated with PIOPS EBS

- Launch the EC2 instance as EBS enhanced with PIOPS EBS

- Launch the EC2 instance as EBS dedicated with PIOPS EBS

- Launch the EC2 instance as EBS optimized with PIOPS EBS

- A user is trying to launch an EBS backed EC2 instance under free usage. The user wants to achieve encryption of the EBS volume. How can the user encrypt the data at rest?

- Use AWS EBS encryption to encrypt the data at rest (Encryption is allowed on micro instances)

- User cannot use EBS encryption and has to encrypt the data manually or using a third party tool (Encryption was not allowed on micro instances before)

- The user has to select the encryption enabled flag while launching the EC2 instance

- Encryption of volume is not available as a part of the free usage tier

- A user is planning to schedule a backup for an EBS volume. The user wants security of the snapshot data. How can the user achieve data encryption with a snapshot?

- Use encrypted EBS volumes so that the snapshot will be encrypted by AWS

- While creating a snapshot select the snapshot with encryption

- By default the snapshot is encrypted by AWS

- Enable server side encryption for the snapshot using S3

- A user has launched an EBS backed EC2 instance. The user has rebooted the instance. Which of the below mentioned statements is not true with respect to the reboot action?

- The private and public address remains the same

- The Elastic IP remains associated with the instance

- The volume is preserved

- The instance runs on a new host computer

- A user has launched an EBS backed EC2 instance. What will be the difference while performing the restart or stop/start options on that instance?

- For restart it does not charge for an extra hour, while every stop/start it will be charged as a separate hour

- Every restart is charged by AWS as a separate hour, while multiple start/stop actions during a single hour will be counted as a single hour

- For every restart or start/stop it will be charged as a separate hour

- For restart it charges extra only once, while for every stop/start it will be charged as a separate hour

- A user has launched an EBS backed instance. The user started the instance at 9 AM in the morning. Between 9 AM to 10 AM, the user is testing some script. Thus, he stopped the instance twice and restarted it. In the same hour the user rebooted the instance once. For how many instance hours will AWS charge the user?

- 3 hours

- 4 hours

- 2 hours

- 1 hour

- You are running a database on an EC2 instance, with the data stored on Elastic Block Store (EBS) for persistence. At times throughout the day, you are seeing large variance in the response times of the database queries. Looking into the instance with the iostat command, you see a lot of wait time on the disk volume that the database’s data is stored on. What two ways can you improve the performance of the database’s storage while maintaining the current persistence of the data? Choose 2 answers

- Move to an SSD backed instance

- Move the database to an EBS-Optimized Instance

- Use Provisioned IOPs EBS

- Use the ephemeral storage on an m2.4xLarge Instance Instead

- An organization wants to move to Cloud. They are looking for a secure encrypted database storage option. Which of the below mentioned AWS functionalities helps them to achieve this?

- AWS MFA with EBS

- AWS EBS encryption

- Multi-tier encryption with Redshift

- AWS S3 server-side storage

- A user has stored data on an encrypted EBS volume. The user wants to share the data with his friend’s AWS account. How can user achieve this?

- Create an AMI from the volume and share the AMI

- Copy the data to an unencrypted volume and then share

- Take a snapshot and share the snapshot with a friend

- If both the accounts are using the same encryption key then the user can share the volume directly

- A user is using an EBS backed instance. Which of the below mentioned statements is true?

- The user will be charged for volume and instance only when the instance is running

- The user will be charged for the volume even if the instance is stopped

- The user will be charged only for the instance running cost

- The user will not be charged for the volume if the instance is stopped

- A user is planning to use EBS for his DB requirement. The user already has an EC2 instance running in the VPC private subnet. How can the user attach the EBS volume to a running instance?

- The user must create EBS within the same VPC and then attach it to a running instance.

- The user can create EBS in the same zone as the subnet of instance and attach that EBS to instance. (Should be in the same AZ)

- It is not possible to attach an EBS to an instance running in VPC until the instance is stopped.

- The user can specify the same subnet while creating EBS and then attach it to a running instance.

- A user is creating an EBS volume. He asks for your advice. Which advice mentioned below should you not give to the user for creating an EBS volume?

- Take the snapshot of the volume when the instance is stopped

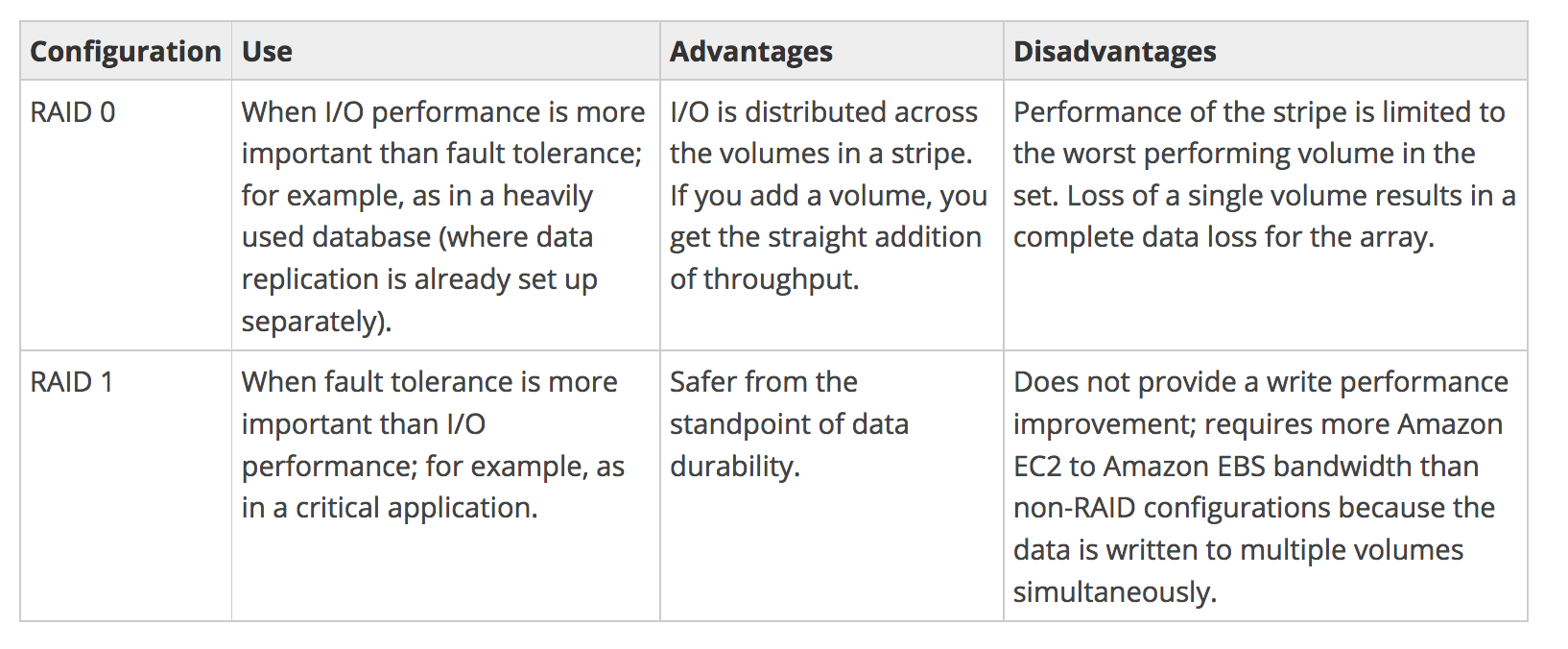

- Stripe multiple volumes attached to the same instance

- Create an AMI from the attached volume (AMI is created from the snapshot)

- Attach multiple volumes to the same instance

- An EC2 instance has one additional EBS volume attached to it. How can a user attach the same volume to another running instance in the same AZ?

- Terminate the first instance and only then attach to the new instance

- Attach the volume as read only to the second instance

- Detach the volume first and attach to new instance

- No need to detach. Just select the volume and attach it to the new instance, it will take care of mapping internally

- What is the scope of an EBS volume?

- VPC

- Region

- Placement Group

- Availability Zone
