CloudFront Security

- CloudFront Security has multiple features, including

- Support for Encryption at Rest and in Transit.

- Prevent users in specific geographic locations from accessing content

- Configure HTTPS connections.

- Use signed URLs or cookies to restrict access for selected users.

- Restrict access to content in S3 buckets using origin access identity – OAI, to prevent users from using the direct URL of the file.

- Set up field-level encryption for specific content fields

- Use AWS WAF web access control lists (web ACLs) to restrict access to your content.

- Use geo-restriction, also known as geoblocking, to prevent users in specific geographic locations from accessing content served through a CloudFront distribution.

- Integration with AWS Shield to protect from DDoS attacks.

Data Protection

- CloudFront supports both Encryption at Rest and in Transit.

- CloudFront provides Encryption in Transit and can be configured

- to require viewers to use HTTPS to request the files so that connections are encrypted when CloudFront communicates with viewers.

- to use HTTPS to get files from the origin, so that connections are encrypted when CloudFront communicates with the origin.

- HTTPS can be enforced using the Viewer Protocol Policy and Origin Protocol Policy.

- CloudFront provides Encryption at Rest

- using SSDs which are encrypted for edge location points of presence (POPs), and encrypted EBS volumes for Regional Edge Caches (RECs).

- Function code and configuration are always stored in an encrypted format on the encrypted SSDs on the edge location POPs, and in other storage locations used by CloudFront.
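The HTTPS enforcement described above maps to two settings in a distribution configuration. The following is a minimal sketch, assuming the distribution is managed through the CloudFront API, where `ViewerProtocolPolicy` sits on a cache behavior and `OriginProtocolPolicy` sits on a custom origin. The helper name `https_settings` and the shape of its return value are illustrative, not part of any AWS SDK.

```python
# Allowed values as defined by the CloudFront API.
VIEWER_POLICIES = {"allow-all", "https-only", "redirect-to-https"}
ORIGIN_POLICIES = {"http-only", "match-viewer", "https-only"}


def https_settings(viewer_policy="redirect-to-https", origin_policy="https-only"):
    """Return the two protocol-policy fragments of a distribution config.

    Hypothetical helper: it only builds dictionaries and calls no AWS API.
    """
    if viewer_policy not in VIEWER_POLICIES:
        raise ValueError(f"unknown viewer protocol policy: {viewer_policy}")
    if origin_policy not in ORIGIN_POLICIES:
        raise ValueError(f"unknown origin protocol policy: {origin_policy}")
    return {
        # Belongs inside DefaultCacheBehavior or an entry of CacheBehaviors.
        "cache_behavior": {"ViewerProtocolPolicy": viewer_policy},
        # Belongs inside the CustomOriginConfig of a custom origin.
        "custom_origin_config": {"OriginProtocolPolicy": origin_policy},
    }
```

With the defaults, viewers that connect over HTTP are redirected to HTTPS and CloudFront always uses HTTPS towards the origin.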

Restrict Viewer Access

Serving Private Content

- To securely serve private content using CloudFront

- Require the users to access the private content by using special CloudFront signed URLs or signed cookies with the following restrictions

- end date and time, after which the URL is no longer valid

- start date-time, when the URL becomes valid

- IP address or range of addresses to access the URLs

- Require that users access the S3 content only using CloudFront URLs, not S3 URLs. This is optional, but recommended to prevent users from bypassing the restrictions specified in signed URLs or signed cookies.

- Signed URLs or signed cookies can be used with CloudFront using an HTTP server as an origin. This requires the content to be publicly accessible, so care should be taken not to share the direct URL of the content.

- Restriction for Origin can be applied by

- For S3, using an Origin Access Identity (OAI) to grant only CloudFront access to the content through bucket policies or object ACLs, and removing any other access permissions

- For a Load balancer OR HTTP server, custom headers can be added by CloudFront which can be used at Origin to verify the request has come from CloudFront.

- Custom origins can also be configured to allow traffic from CloudFront IPs only. CloudFront managed prefix list can be used to allow inbound traffic to the origin only from CloudFront’s origin-facing servers, preventing any non-CloudFront traffic from reaching your origin

- Trusted Signer

- To create signed URLs or signed cookies, at least one AWS account (trusted signer) is needed that has an active CloudFront key pair

- Once the AWS account is added as a trusted signer to the distribution, CloudFront starts to require that users use signed URLs or signed cookies to access the objects.

- The private key from the trusted signer’s key pair is used to sign a portion of the URL or the cookie. When someone requests a restricted object, CloudFront compares the signed portion of the URL or cookie with the unsigned portion to verify that the URL or cookie hasn’t been tampered with. CloudFront also checks that the URL or cookie is still valid, e.g., that the expiration date and time hasn’t passed.

- Each Trusted signer AWS account used to create CloudFront signed URLs or signed cookies must have its own active CloudFront key pair, which should be frequently rotated

- A maximum of 5 trusted signers can be assigned for each cache behavior or RTMP distribution
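The signing step above can be sketched as follows. This is an illustrative outline for a signed URL that carries a custom policy, not a complete implementation: the `sign` argument is a hypothetical caller-supplied function standing in for an RSA-SHA1 signature made with the trusted signer's private key, and the helper names are assumptions. The character substitutions and the `Policy`, `Signature`, and `Key-Pair-Id` query parameters follow the documented signed URL format.

```python
import base64


def cloudfront_b64(data: bytes) -> str:
    """Base64-encode, then swap the characters that are unsafe in a query string."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


def signed_url(url, policy, key_pair_id, sign):
    """Append Policy, Signature and Key-Pair-Id to `url`.

    `sign` is a hypothetical callable that takes the policy bytes and returns
    the signature bytes produced with the signer's private key.
    """
    policy_bytes = policy.encode("utf-8")
    # Keep any query string the base URL already has.
    separator = "&" if "?" in url else "?"
    return (
        f"{url}{separator}Policy={cloudfront_b64(policy_bytes)}"
        f"&Signature={cloudfront_b64(sign(policy_bytes))}"
        f"&Key-Pair-Id={key_pair_id}"
    )
```

In practice the `sign` callable would wrap a cryptography library, and the key pair ID would be that of the active CloudFront key pair of the trusted signer.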

Signed URLs vs Signed Cookies

- CloudFront signed URLs and signed cookies help to secure the content and provide control to decide who can access the content.

- Use signed URLs in the following cases:

- for RTMP distribution as signed cookies aren’t supported

- to restrict access to individual files, e.g., an installation download for the application.

- users using a client, e.g., a custom HTTP client, that doesn’t support cookies

- Use signed cookies in the following cases:

- provide access to multiple restricted files, e.g., all of the video files in HLS format or all of the files in the subscribers’ area of a website.

- don’t want to change the current URLs.

- Signed URLs take precedence over signed cookies. If both are used to control access to the same files and a viewer uses a signed URL to request a file, CloudFront determines whether to return the file based only on the signed URL.

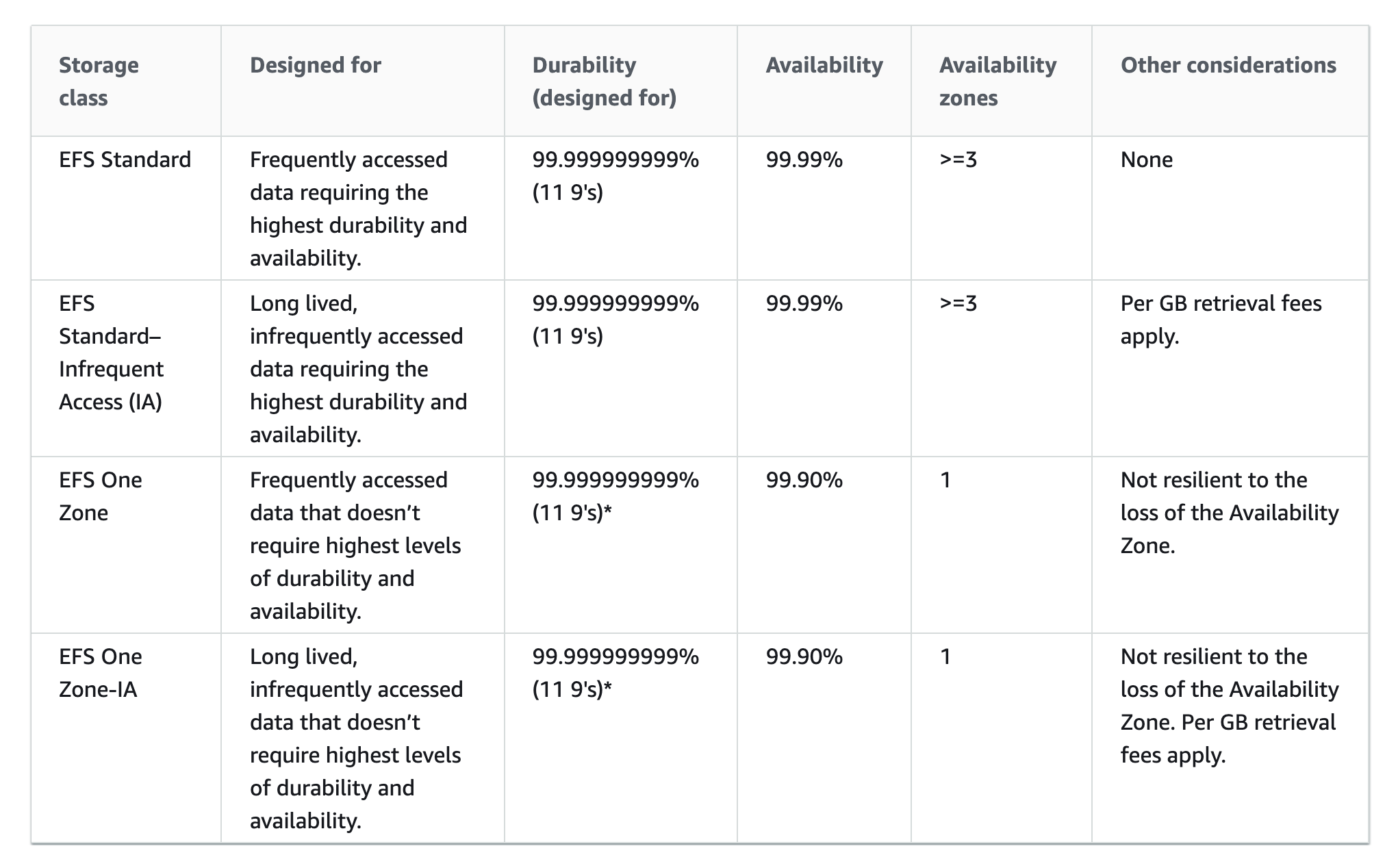

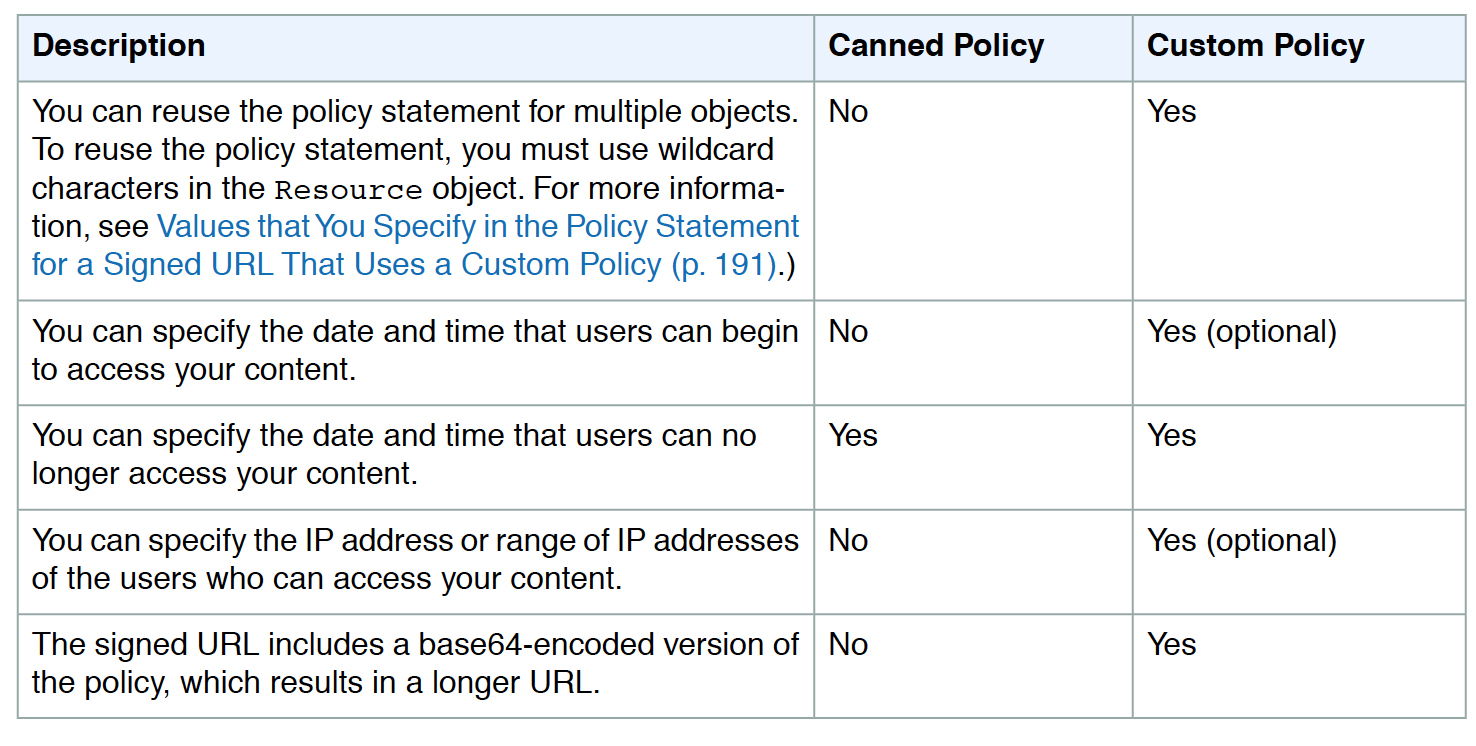

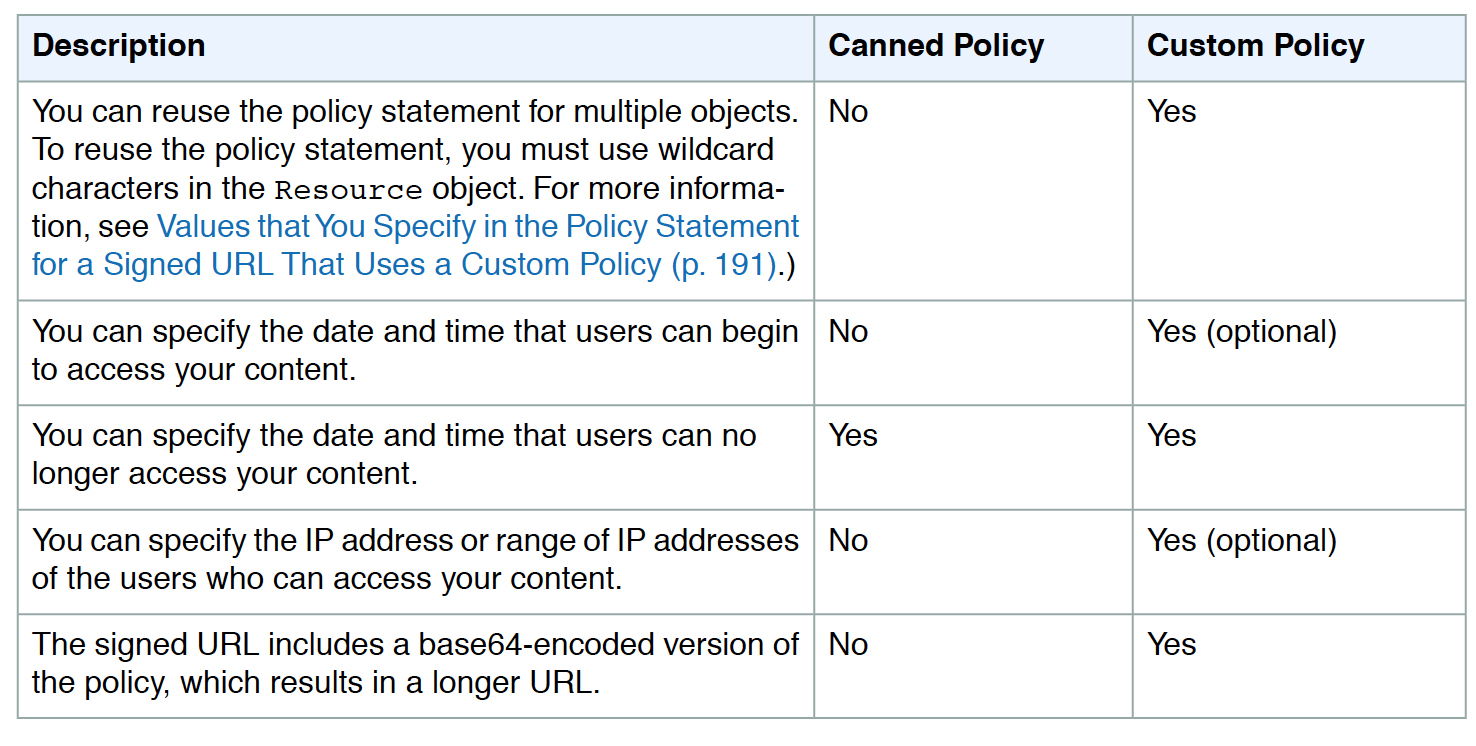

Canned Policy vs Custom Policy

- A canned policy or a custom policy is a policy statement, used by signed URLs, that defines the restrictions, e.g., the expiration date and time

- CloudFront validates the expiration time at the start of the event.

- If the user is downloading a large object and the URL expires, the download would still continue. The same applies to RTMP distribution.

- However, if the user is using range GET requests, or skips to another position while streaming video, this might trigger another event, and that request would fail.
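To make the policy statement concrete, here is a minimal sketch that builds one as JSON. The function name `custom_policy` and its parameters are illustrative. The keys (`Statement`, `Resource`, `Condition`, `DateLessThan`, `DateGreaterThan`, `IpAddress`, `AWS:EpochTime`, `AWS:SourceIp`) follow the documented policy format. Calling it with only an expiry produces the same shape as a canned policy, which carries just the resource and an end time.

```python
import json
from datetime import datetime, timezone


def custom_policy(resource, expires, starts=None, source_ip=None):
    """Build a policy statement as a compact JSON string.

    `expires` and `starts` are timezone-aware datetimes; `source_ip` is an
    IP address or CIDR range such as "203.0.113.0/24".
    """
    condition = {"DateLessThan": {"AWS:EpochTime": int(expires.timestamp())}}
    if starts is not None:
        condition["DateGreaterThan"] = {"AWS:EpochTime": int(starts.timestamp())}
    if source_ip is not None:
        condition["IpAddress"] = {"AWS:SourceIp": source_ip}
    statement = {"Resource": resource, "Condition": condition}
    # Compact separators keep whitespace out of the string that gets signed.
    return json.dumps({"Statement": [statement]}, separators=(",", ":"))


# Example: access to every object under /videos/ from one address range.
policy = custom_policy(
    "https://example.invalid/videos/*",
    expires=datetime(2030, 1, 1, tzinfo=timezone.utc),
    source_ip="203.0.113.0/24",
)
```

The resulting string is what gets signed and base64-encoded for a signed URL or cookie; the host name and dates here are placeholders.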

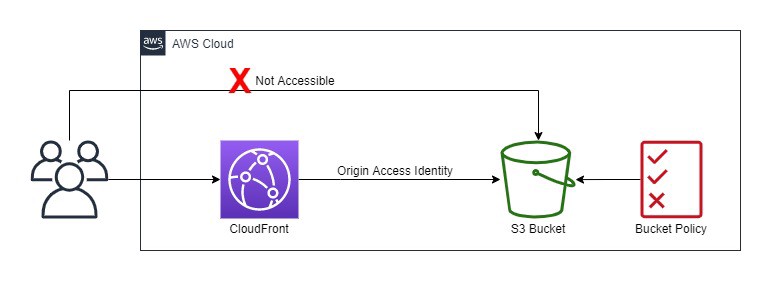

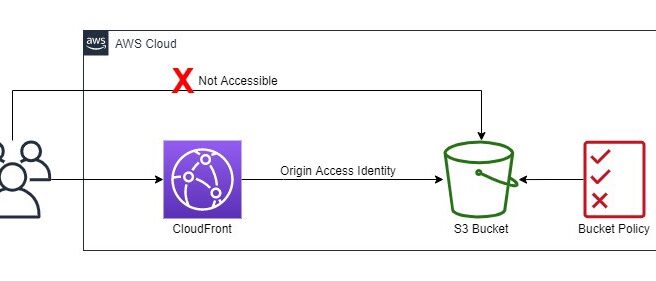

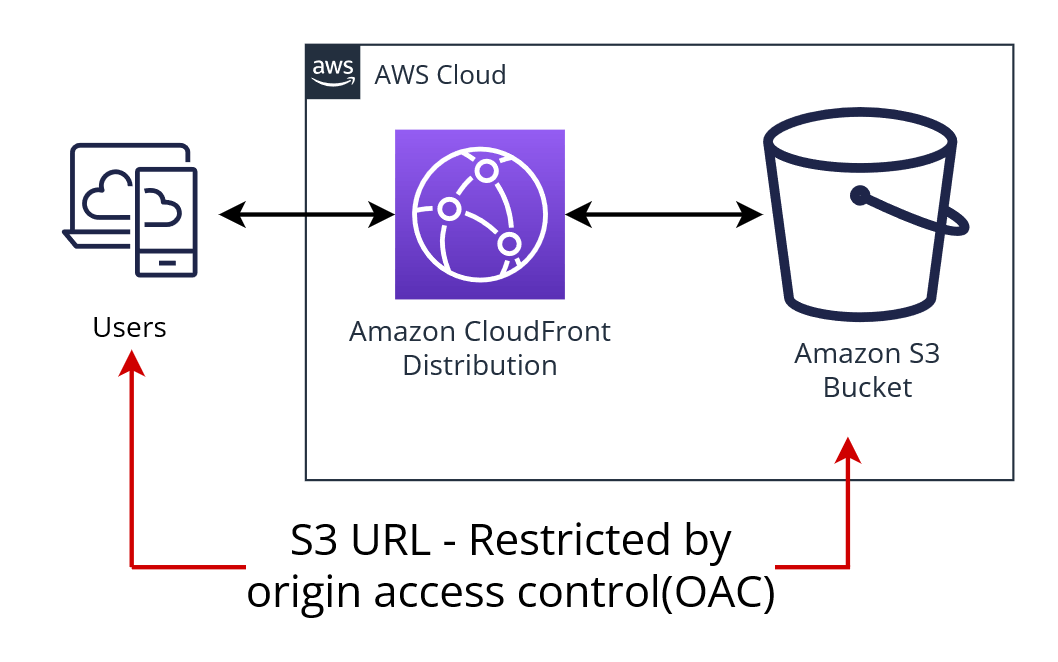

S3 Origin Access Identity – OAI

- Origin Access Identity (OAI) can be used to prevent users from directly accessing objects from S3.

- Without an OAI, S3 origin objects must be granted public read permissions, and hence the objects are accessible from both S3 as well as CloudFront.

- Even though CloudFront does not expose the underlying S3 URL, it can be known to the user if shared directly or used by applications.

- For using CloudFront signed URLs or signed cookies, it would be necessary to prevent users from having direct access to the S3 objects.

- Users accessing S3 objects directly would

- bypass the controls provided by CloudFront signed URLs or signed cookies, e.g., control over the date-time after which a user can no longer access the content and the IP addresses that can be used to access content

- make CloudFront access logs less useful because they’re incomplete.

- Origin access identity, which is a special CloudFront user, can be created and associated with the distribution.

- S3 bucket/object permissions need to be configured to only provide access to the Origin Access Identity.

- When users access the object from CloudFront, it uses the OAI to fetch the content on the user’s behalf, while the S3 object’s direct access is restricted

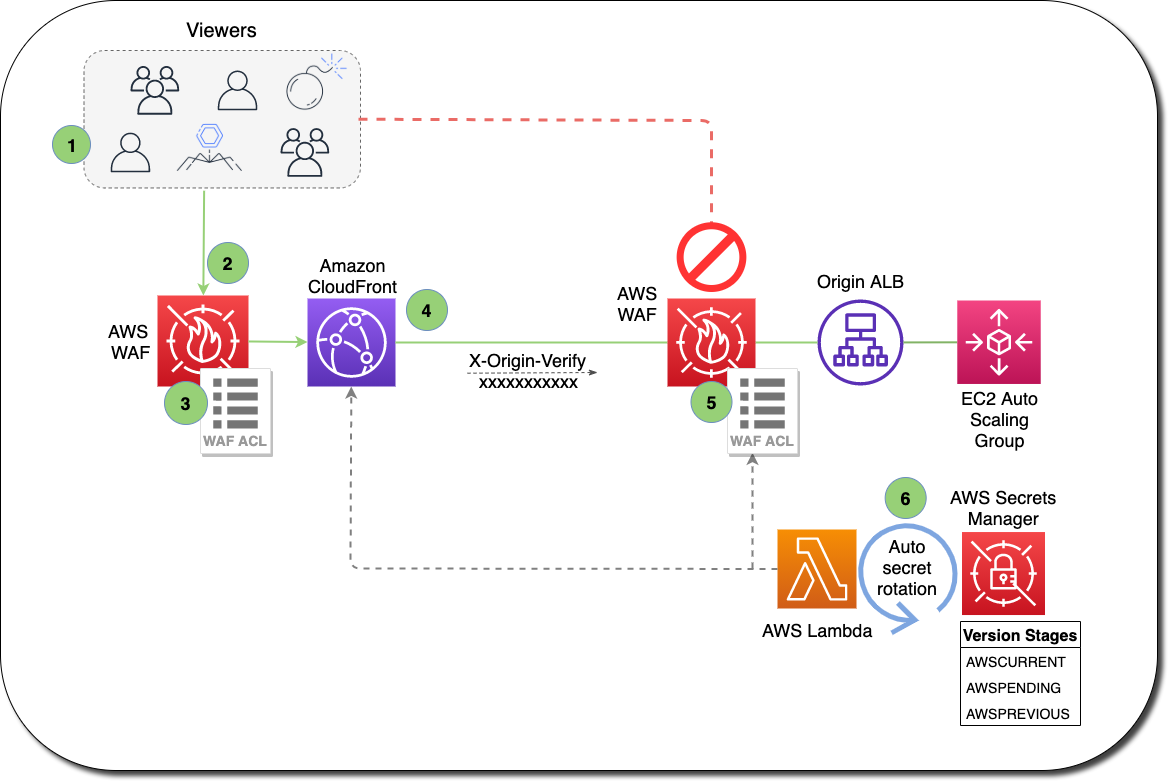
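A bucket policy that grants read access only to an OAI could look like the sketch below. The helper name, bucket name, and OAI ID are placeholders. The principal ARN format is the one used for CloudFront origin access identities. This only builds the JSON document; applying it to a bucket and removing other grants are separate steps.

```python
import json


def oai_bucket_policy(bucket, oai_id):
    """Return a bucket policy granting s3:GetObject to a single OAI."""
    principal = (
        "arn:aws:iam::cloudfront:user/"
        f"CloudFront Origin Access Identity {oai_id}"
    )
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": principal},
                "Action": "s3:GetObject",
                # Every object in the bucket, but not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder values for illustration only.
print(oai_bucket_policy("my-private-bucket", "E2EXAMPLE1234"))
```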

Custom Headers

- Custom headers can be added by CloudFront which can be used at Origin to verify the request has come from CloudFront.

- A viewer accesses the website or application and requests one or more files, such as an image file and an HTML file.

- DNS routes the request to the CloudFront edge location that can best serve the request – typically the nearest edge location in terms of latency.

- At the edge location, AWS WAF inspects the incoming request according to configured web ACL rules.

- At the edge location, CloudFront checks its cache for the requested content.

- If the content is in the cache, CloudFront returns it to the user.

- If the content isn’t in the cache, CloudFront adds the custom header, X-Origin-Verify, with the value of the secret from Secrets Manager, and forwards the request to the origin.

- At the origin ALB, ALB rules or AWS WAF inspects the incoming request header, X-Origin-Verify, and allows the request if the string value is valid. If the header isn’t valid, AWS WAF blocks the request.

- At the configured interval, Secrets Manager automatically rotates the custom header value and updates the origin AWS WAF and CloudFront configurations.
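The origin-side check in this flow can be sketched in a few lines. This is a simplified stand-in for the ALB rule or AWS WAF match, written as an application-level function. The function name is hypothetical, and accepting more than one secret is an assumption made here so that requests keep working while a rotated value propagates.

```python
import hmac


def is_from_cloudfront(headers, accepted_secrets):
    """Return True if X-Origin-Verify matches one of the accepted secrets."""
    # HTTP header names are case-insensitive, so normalise them first.
    normalised = {name.lower(): value for name, value in headers.items()}
    supplied = normalised.get("x-origin-verify", "").encode("utf-8")
    # compare_digest avoids leaking how many leading characters matched.
    return any(
        hmac.compare_digest(supplied, secret.encode("utf-8"))
        for secret in accepted_secrets
    )


# Usage: accept the current and the previous secret during rotation.
allowed = is_from_cloudfront({"X-Origin-Verify": "new-value"}, ["new-value", "old-value"])
```

A request without the header, or with a stale value, would be rejected, which corresponds to the block action in the flow above.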

Geo-Restriction – Geoblocking

- Geo restriction can help allow or prevent users in selected countries from accessing the content.

- A CloudFront distribution can be configured either to

- allow only users in a whitelist of specified countries to access the content, or to

- deny users in a blacklist of specified countries access to the content

- Geo restriction can be used to restrict access to all of the files that are associated with a distribution and to restrict access at the country level.

- CloudFront responds to a request from a viewer in a restricted country with an HTTP status code 403 (Forbidden).

- Use a third-party geolocation service, if access is to be restricted to a subset of the files that are associated with a distribution or to restrict access at a finer granularity than the country level.
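The whitelist and blacklist behaviour can be illustrated with a small local simulation. The `GeoRestriction` fragment uses the field names from the CloudFront distribution configuration (`RestrictionType`, `Quantity`, `Items`). The two helper functions are hypothetical and do not call AWS; `status_for` only mimics the 200 or 403 outcome described above.

```python
def geo_restriction(mode, countries=()):
    """Build the GeoRestriction fragment of a distribution config."""
    if mode not in {"whitelist", "blacklist", "none"}:
        raise ValueError(f"unknown restriction type: {mode}")
    # Country codes are two-letter ISO 3166-1 alpha-2 values.
    items = sorted({code.upper() for code in countries})
    fragment = {"RestrictionType": mode, "Quantity": len(items)}
    if items:
        fragment["Items"] = items
    return {"GeoRestriction": fragment}


def status_for(country, restriction):
    """Simulate the HTTP status a viewer from `country` would receive."""
    geo = restriction["GeoRestriction"]
    listed = country.upper() in geo.get("Items", [])
    if geo["RestrictionType"] == "whitelist":
        return 200 if listed else 403
    if geo["RestrictionType"] == "blacklist":
        return 403 if listed else 200
    return 200


allow_only = geo_restriction("whitelist", ["us", "ca"])
print(status_for("CA", allow_only), status_for("FR", allow_only))
```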

Field Level Encryption Config

- CloudFront can enforce secure end-to-end connections to origin servers by using HTTPS.

- Field-level encryption adds an additional layer of security that helps protect specific data throughout system processing so that only certain applications can see it.

- Field-level encryption can be used to securely upload user-submitted sensitive information. The sensitive information provided by the clients is encrypted at the edge closer to the user and remains encrypted throughout the entire application stack, ensuring that only applications that need the data – and have the credentials to decrypt it – are able to do so.

AWS Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- AWS services are updated every day and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep pace with AWS updates, so even if the underlying feature has changed, the question might not be updated

- Open to further feedback, discussion and correction.

- You are building a system to distribute confidential training videos to employees. Using CloudFront, what method could be used to serve content that is stored in S3, but not publicly accessible from S3 directly?

- Create an Origin Access Identity (OAI) for CloudFront and grant access to the objects in your S3 bucket to that OAI.

- Add the CloudFront account security group “amazon-cf/amazon-cf-sg” to the appropriate S3 bucket policy.

- Create an Identity and Access Management (IAM) User for CloudFront and grant access to the objects in your S3 bucket to that IAM User.

- Create a S3 bucket policy that lists the CloudFront distribution ID as the Principal and the target bucket as the Amazon Resource Name (ARN).

- A media production company wants to deliver high-definition raw video for preproduction and dubbing to customers all around the world. They would like to use Amazon CloudFront for their scenario, and they require the ability to limit downloads per customer and video file to a configurable number. A CloudFront download distribution with TTL=0 was already set up to make sure all client HTTP requests hit an authentication backend on Amazon Elastic Compute Cloud (EC2)/Amazon RDS first, which is responsible for restricting the number of downloads. Content is stored in S3 and configured to be accessible only via CloudFront. What else needs to be done to achieve an architecture that meets the requirements? Choose 2 answers

- Enable URL parameter forwarding, let the authentication backend count the number of downloads per customer in RDS, and return the content S3 URL unless the download limit is reached.

- Enable CloudFront logging into an S3 bucket, leverage EMR to analyze CloudFront logs to determine the number of downloads per customer, and return the content S3 URL unless the download limit is reached. (CloudFront logs are delivered periodically and EMR is not real time, hence not suitable)

- Enable URL parameter forwarding, let the authentication backend count the number of downloads per customer in RDS, and invalidate the CloudFront distribution as soon as the download limit is reached. (Objects are invalidated, not distributions)

- Enable CloudFront logging into the S3 bucket, let the authentication backend determine the number of downloads per customer by parsing those logs, and return the content S3 URL unless the download limit is reached. (CloudFront logs are delivered periodically rather than in real time, hence not suitable)

- Configure a list of trusted signers, let the authentication backend count the number of download requests per customer in RDS, and return a dynamically signed URL unless the download limit is reached.

- To enable end-to-end HTTPS connections from the user’s browser to the origin via CloudFront, which of the following options are valid? Choose 2 answers

- Use self-signed certificate in the origin and CloudFront default certificate in CloudFront. (Origin cannot be self-signed)

- Use the CloudFront default certificate in both origin and CloudFront (CloudFront cert cannot be applied to origin)

- Use a 3rd-party CA certificate in the origin and CloudFront default certificate in CloudFront

- Use 3rd-party CA certificate in both origin and CloudFront

- Use a self-signed certificate in both the origin and CloudFront (Origin cannot be self-signed)

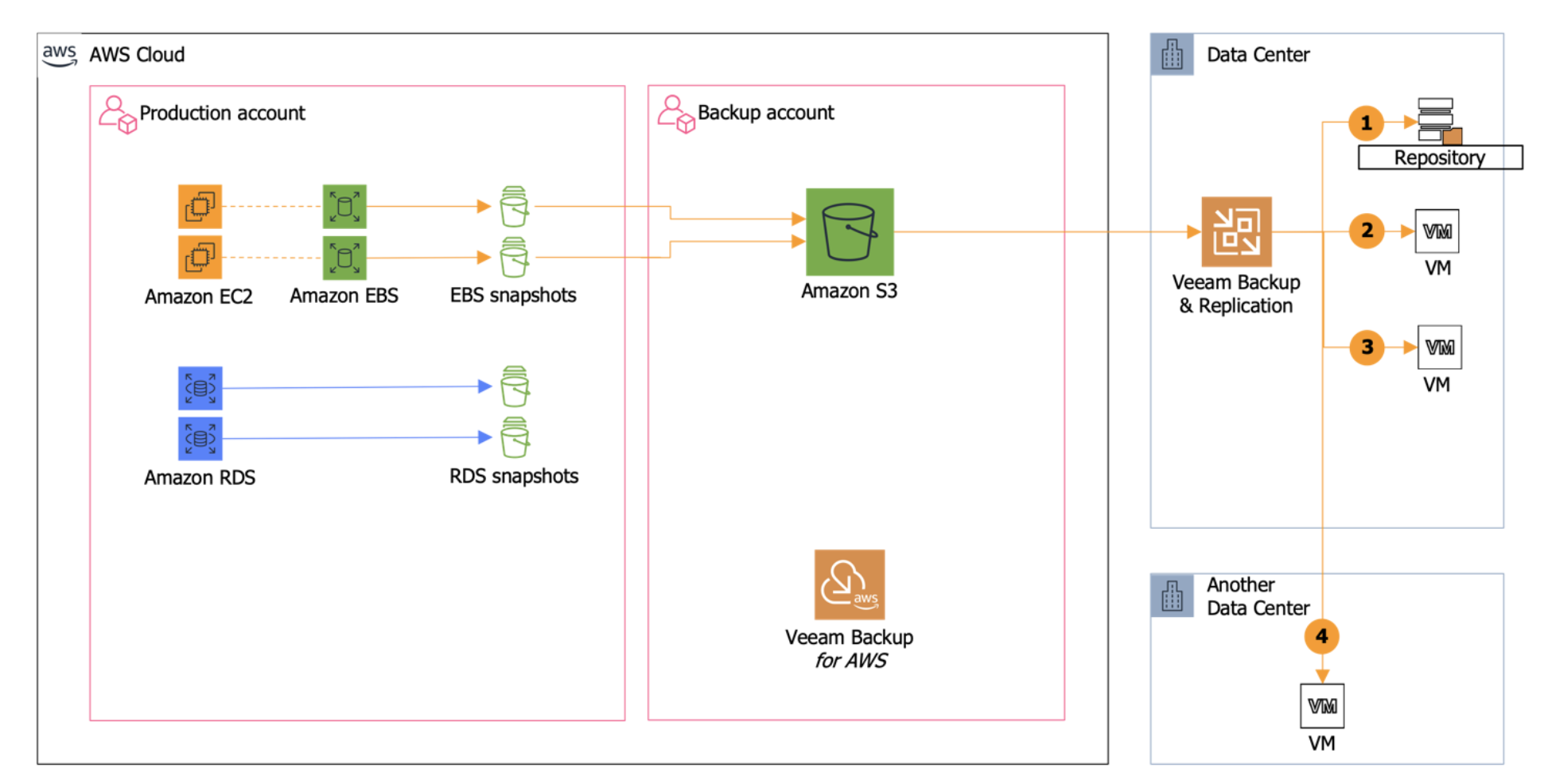

The diagram above shows how simple this can be carried out. By protecting workloads in AWS and using Veeam, you can simply move workloads across different platforms. It doesn’t matter which direction you want to move workloads either, you can just as easily take a virtual machine running on VMware vSphere or Microsoft Hyper-V and migrate that to AWS EC2 as an instance. You can move workloads across multiple platforms or hypervisors extremely easily.

The diagram above shows how simple this can be carried out. By protecting workloads in AWS and using Veeam, you can simply move workloads across different platforms. It doesn’t matter which direction you want to move workloads either, you can just as easily take a virtual machine running on VMware vSphere or Microsoft Hyper-V and migrate that to AWS EC2 as an instance. You can move workloads across multiple platforms or hypervisors extremely easily.