Auto Scaling Overview

- Auto Scaling provides the ability to ensure a correct number of EC2 instances are always running to handle the load of the application

- Auto Scaling helps

- to achieve better fault tolerance, better availability and cost management.

- helps specify scaling policies that can be used to launch and terminate EC2 instances to handle any increase or decrease in demand.

- Auto Scaling attempts to distribute instances evenly between the AZs that are enabled for the Auto Scaling group.

- Auto Scaling does this by attempting to launch new instances in the AZ with the fewest instances. If the attempt fails, it attempts to launch the instances in another AZ until it succeeds.

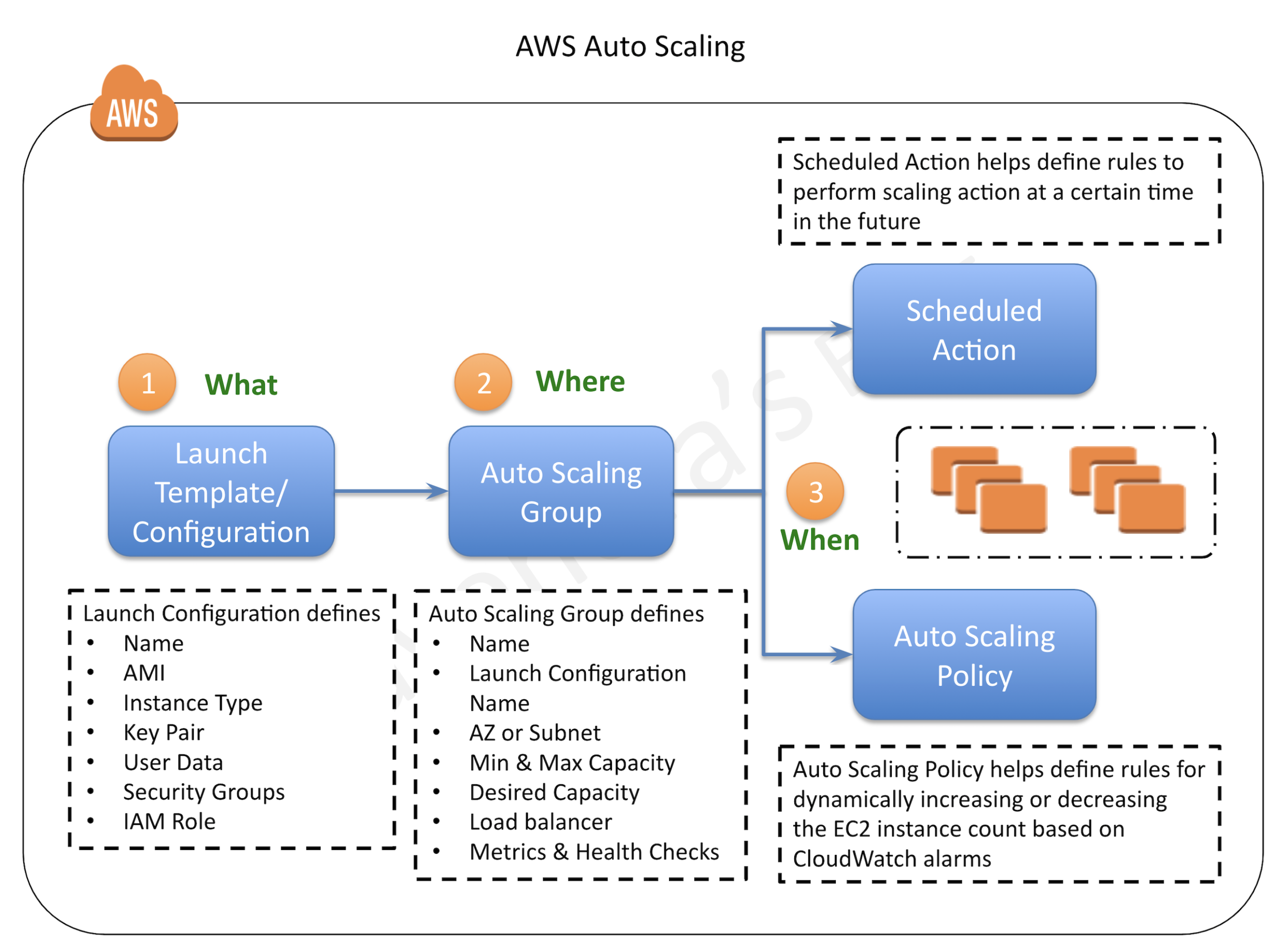

Auto Scaling Components

Auto Scaling Groups – ASG

- Auto Scaling groups are the core of Auto Scaling and contain a collection of EC2 instances that share similar characteristics and are treated as a logical grouping for the purposes of automatic scaling and management.

- ASG requires

- Launch configuration OR Launch Template

- determine the EC2 template to use for launching the instance

- Minimum & Maximum capacity

- determine the number of instances when an autoscaling policy is applied.

- Number of instances cannot grow beyond these boundaries

- Desired capacity

- to determine the number of instances the ASG must maintain at all times. If missing, it equals the minimum size.

- Desired capacity is different from minimum capacity.

- An Auto Scaling group’s desired capacity is the default number of instances that should be running. A group’s minimum capacity is the fewest number of instances the group can have running

- Availability Zones or Subnets in which the instances will be launched.

- Metrics & Health Checks

- metrics to determine when it should launch or terminate instances and health checks to determine if the instance is healthy or not

- Launch configuration OR Launch Template

- ASG starts by launching a desired capacity of instances and maintains this number by performing periodic health checks.

- If an instance becomes unhealthy, the ASG terminates and launches a new instance.

- ASG can also use scaling policies to increase or decrease the number of instances automatically to meet changing demands

- An ASG can contain EC2 instances in one or more AZs within the same region.

- ASGs cannot span multiple regions.

- ASG can launch On-Demand Instances, Spot Instances, or both when configured to use a launch template.

- To merge separate single-zone ASGs into a single ASG spanning multiple AZs, rezone one of the single-zone groups into a multi-zone group, and then delete the other groups. This process works for groups with or without a load balancer, as long as the new multi-zone group is in one of the same AZs as the original single-zone groups.

- ASG can be associated with a single launch configuration or template

- As the Launch Configuration can’t be modified once created, the only way to update the Launch Configuration for an ASG is to create a new one and associate it with the ASG.

- When the launch configuration for the ASG is changed, any new instances launched, use the new configuration parameters, but the existing instances are not affected.

- ASG can be deleted from CLI, if it has no running instances else need to set the minimum and desired capacity to 0. This is handled automatically when deleting an ASG from the AWS management console.

Launch Configuration

- Launch configuration is an instance configuration template that an ASG uses to launch EC2 instances.

- Launch configuration is similar to EC2 configuration and involves the selection of the Amazon Machine Image (AMI), block devices, key pair, instance type, security groups, user data, EC2 instance monitoring, instance profile, kernel, ramdisk, the instance tenancy, whether the instance has a public IP address, and is EBS-optimized.

- Launch configuration can be associated with multiple ASGs

- Launch configuration can’t be modified after creation and needs to be created new if any modification is required.

- Basic or detailed monitoring for the instances in the ASG can be enabled when a launch configuration is created.

- By default, basic monitoring is enabled when you create the launch configuration using the AWS Management Console, and detailed monitoring is enabled when you create the launch configuration using the AWS CLI or an API

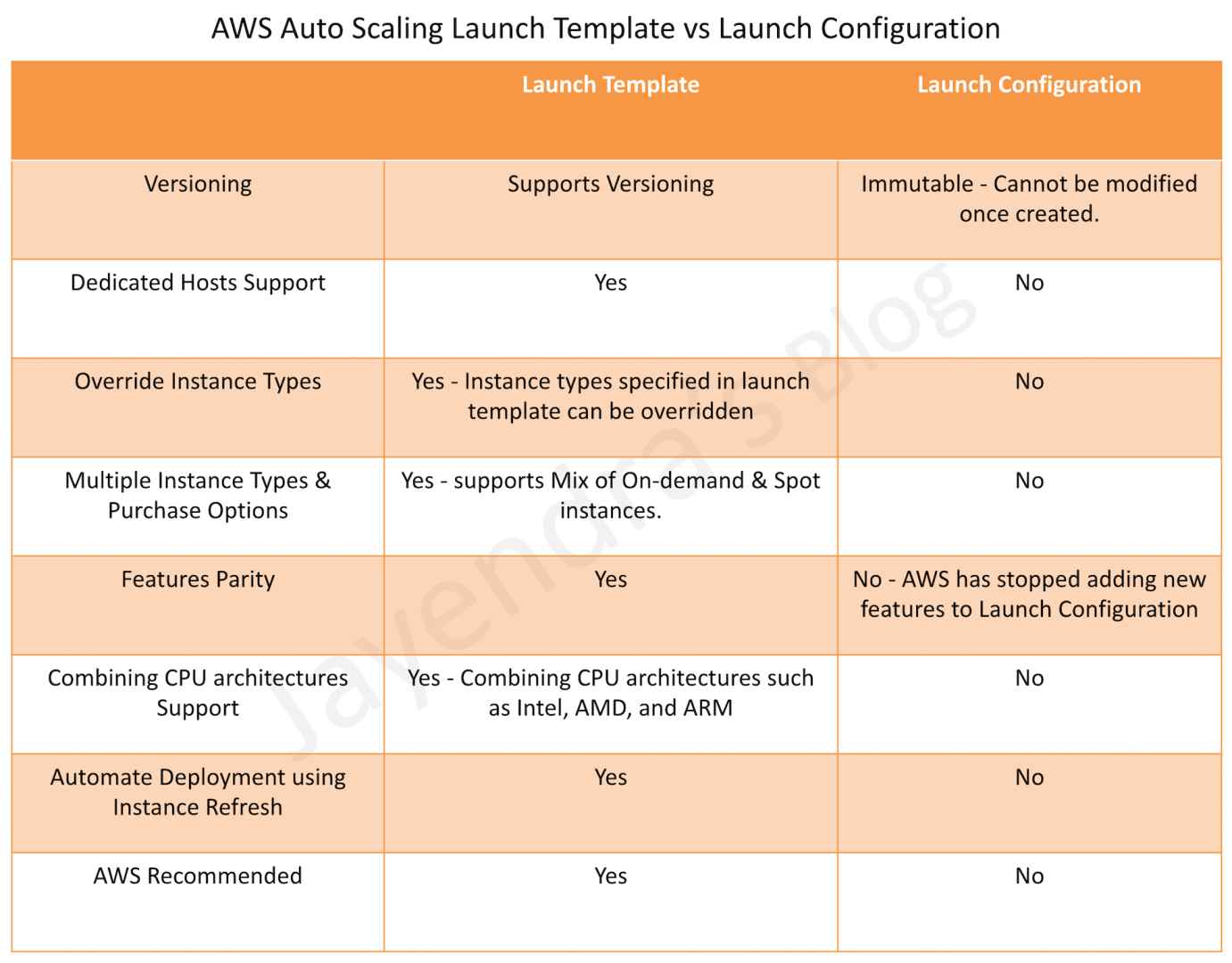

- AWS recommends using Launch Template instead.

Launch Template

- A Launch Template is similar to a launch configuration, with additional features, and is recommended by AWS.

- Launch Template allows multiple versions of a template to be defined.

- With versioning, a subset of the full set of parameters can be created and then reused to create other templates or template versions for e.g, a default template that defines common configuration parameters can be created and allow the other parameters to be specified as part of another version of the same template.

- Launch Template allows the selection of both Spot and On-Demand Instances or multiple instance types.

- Launch templates support EC2 Dedicated Hosts. Dedicated Hosts are physical servers with EC2 instance capacity that are dedicated to your use.

- Launch templates provide the following features

- Support for multiple instance types and purchase options in a single ASG.

- Launching Spot Instances with the capacity-optimized allocation strategy.

- Support for launching instances into existing Capacity Reservations through an ASG.

- Support for unlimited mode for burstable performance instances.

- Support for Dedicated Hosts.

- Combining CPU architectures such as Intel, AMD, and ARM (Graviton2)

- Improved governance through IAM controls and versioning.

- Automating instance deployment with Instance Refresh.

Auto Scaling Launch Configuration vs Launch Template

Auto Scaling Policies

Refer blog post @ Auto Scaling Policies

Auto Scaling Cooldown Period

- Auto Scaling Cooldown period is a configurable setting for the ASG that helps to ensure that Auto Scaling doesn’t launch or terminate additional instances before the previous scaling activity takes effect and allows the newly launched instances to start handling traffic and reduce load

- When ASG dynamically scales using a simple scaling policy and launches an instance, Auto Scaling suspends the scaling activities for the cooldown period (default 300 seconds) to complete before resuming scaling activities

- Example Use Case

- You configure a scale out alarm to increase the capacity, if the CPU utilization increases more than 80%

- A CPU spike occurs and causes the alarm to be triggered, Auto Scaling launches a new instance

- However, it would take time for the newly launched instance to be configured, instantiated, and started, let’s say 5 mins

- Without a cooldown period, if another CPU spike occurs Auto Scaling would launch a new instance again and this would continue for 5 mins till the previously launched instance is up and running and started handling traffic

- With a cooldown period, Auto Scaling would suspend the activity for the specified time period enabling the newly launched instance to start handling traffic and reduce the load.

- After the cooldown period, Auto Scaling resumes acting on the alarms

- When manually scaling the ASG, the default is not to wait for the cooldown period but can be overridden to honour the cooldown period.

- Note that if an instance becomes unhealthy, Auto Scaling does not wait for the cooldown period to complete before replacing the unhealthy instance.

- Cooldown periods are automatically applied to dynamic scaling activities for simple scaling policies and are not supported for step scaling policies.

Auto Scaling Termination Policy

- Termination policy helps Auto Scaling decide which instances it should terminate first when Auto Scaling automatically scales in.

- Auto Scaling specifies a default termination policy and also provides the ability to create a customized one.

Default Termination Policy

Default termination policy helps ensure that the network architecture spans AZs evenly and instances are selected for termination as follows:-

- Selection of Availability Zone

- selects the AZ, in multiple AZs environments, with the most instances and at least one instance that is not protected from scale in.

- selects the AZ with instances that use the oldest launch configuration, if there is more than one AZ with the same number of instances

- Selection of an Instance within the Availability Zone

- terminates the unprotected instance using the oldest launch configuration if one exists.

- terminates unprotected instances closest to the next billing hour, If multiple instances with the oldest launch configuration. This helps in maximizing the use of the EC2 instances that have an hourly charge while minimizing the number of hours billed for EC2 usage.

- terminates instances at random, if more than one unprotected instance is closest to the next billing hour.

Customized Termination Policy

- Auto Scaling first assesses the AZs for any imbalance. If an AZ has more instances than the other AZs that are used by the group, then it applies the specified termination policy on the instances from the imbalanced AZ

- If the Availability Zones used by the group are balanced, then Auto Scaling applies the specified termination policy.

- Following Customized Termination, policies are supported

- OldestInstance – terminates the oldest instance in the group and can be useful to upgrade to new instance types

- NewestInstance – terminates the newest instance in the group and can be useful when testing a new launch configuration

- OldestLaunchConfiguration – terminates instances that have the oldest launch configuration

- OldestLaunchTemplate – terminates instances that have the oldest launch template

- ClosestToNextInstanceHour – terminates instances that are closest to the next billing hour and helps to maximize the use of your instances and manage costs.

- Default – terminates as per the default termination policy

Instance Refresh

- Instance refresh can be used to update the instances in the ASG instead of manually replacing instances a few at a time.

- An instance refresh can be helpful when you have a new AMI or a new user data script.

- Instance refresh also helps configure the minimum healthy percentage, instance warmup, and checkpoints.

- To use an instance refresh

- Create a new launch template that specifies the new AMI or user data script.

- Start an instance refresh to begin updating the instances in the group immediately.

- EC2 Auto Scaling starts performing a rolling replacement of the instances.

Instance Protection

- Instance protection controls whether Auto Scaling can terminate a particular instance or not.

- Instance protection can be enabled on an ASG or an individual instance as well, at any time

- Instances launched within an ASG with Instance protection enabled would inherit the property.

- Instance protection starts as soon as the instance is InService and if the Instance is detached, it loses its Instance protection

- If all instances in an ASG are protected from termination during scale in and a scale-in event occurs, it can’t terminate any instance and will decrement the desired capacity.

- Instance protection does not protect for the below cases

- Manual termination through the EC2 console, the

terminate-instancescommand, or theTerminateInstancesAPI. - If it fails health checks and must be replaced

- Spot instances in an ASG from interruption

- Manual termination through the EC2 console, the

Standby State

Auto Scaling allows putting the InService instances in the Standby state during which the instance is still a part of the ASG but does not serve any requests. This can be used to either troubleshoot an instance or update an instance and return the instance back to service.

- An instance can be put into Standby state and it will continue to remain in the Standby state unless exited.

- Auto Scaling, by default, decrements the desired capacity for the group and prevents it from launching a new instance. If no decrement is selected, it would launch a new instance

- When the instance is in the standby state, the instance can be updated or used for troubleshooting.

- If a load balancer is associated with Auto Scaling, the instance is automatically deregistered when the instance is in Standby state and registered again when the instance exits the Standby state

Suspension

- Auto Scaling processes can be suspended and then resumed. This can be very useful to investigate a configuration problem or debug an issue with the application, without triggering the Auto Scaling process.

- Auto Scaling also performs Administrative Suspension where it would suspend processes for ASGs if the ASG has been trying to launch instances for over 24 hours but has not succeeded in launching any instances.

- Auto Scaling processes include

- Launch – Adds a new EC2 instance to the group, increasing its capacity.

- Terminate – Removes an EC2 instance from the group, decreasing its capacity.

- HealthCheck – Checks the health of the instances.

- ReplaceUnhealthy – Terminates instances that are marked as unhealthy and subsequently creates new instances to replace them.

- AlarmNotification – Accepts notifications from CloudWatch alarms that are associated with the group. If suspended, Auto Scaling does not automatically execute policies that would be triggered by an alarm

- ScheduledActions – Performs scheduled actions that you create.

- AddToLoadBalancer – Adds instances to the load balancer when they are launched.

- InstanceRefresh – Terminates and replaces instances using the instance refresh feature.

- AZRebalance – Balances the number of EC2 instances in the group across the Availability Zones in the region.

- If an AZ either is removed from the ASG or becomes unhealthy or unavailable, Auto Scaling launches new instances in an unaffected AZ before terminating the unhealthy or unavailable instances

- When the unhealthy AZ returns to a healthy state, Auto Scaling automatically redistributes the instances evenly across the Availability Zones for the group.

- Note that if you suspend AZRebalance and a scale out or scale in event occurs, Auto Scaling still tries to balance the Availability Zones for e.g. during scale out, it launches the instance in the Availability Zone with the fewest instances.

- If you suspend Launch, AZRebalance neither launches new instances nor terminates existing instances. This is because AZRebalance terminates instances only after launching the replacement instances.

- If you suspend Terminate, the ASG can grow up to 10% larger than its maximum size, because Auto Scaling allows this temporarily during rebalancing activities. If it cannot terminate instances, your ASG could remain above its maximum size until the Terminate process is resumed

Auto Scaling Lifecycle

Refer to blog post @ Auto Scaling Lifecycle

Autoscaling & ELB

Refer to blog post @ Autoscaling & ELB

AWS Certification Exam Practice Questions

- Questions are collected from Internet and the answers are marked as per my knowledge and understanding (which might differ with yours).

- AWS services are updated everyday and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep up the pace with AWS updates, so even if the underlying feature has changed the question might not be updated

- Open to further feedback, discussion and correction.

- A user is trying to setup a scheduled scaling activity using Auto Scaling. The user wants to setup the recurring schedule. Which of the below mentioned parameters is not required in this case?

- Maximum size

- Auto Scaling group name

- End time

- Recurrence value

- A user has configured Auto Scaling with 3 instances. The user had created a new AMI after updating one of the instances. If the user wants to terminate two specific instances to ensure that Auto Scaling launches an instances with the new launch configuration, which command should he run?

- as-delete-instance-in-auto-scaling-group <Instance ID> –no-decrement-desired-capacity

- as-terminate-instance-in-auto-scaling-group <Instance ID> –update-desired-capacity

- as-terminate-instance-in-auto-scaling-group <Instance ID> –decrement-desired-capacity

- as-terminate-instance-in-auto-scaling-group <Instance ID> –no-decrement-desired-capacity

- A user is planning to scale up an application by 8 AM and scale down by 7 PM daily using Auto Scaling. What should the user do in this case?

- Setup the scaling policy to scale up and down based on the CloudWatch alarms

- User should increase the desired capacity at 8 AM and decrease it by 7 PM manually

- User should setup a batch process which launches the EC2 instance at a specific time

- Setup scheduled actions to scale up or down at a specific time

- An organization has setup Auto Scaling with ELB. Due to some manual error, one of the instances got rebooted. Thus, it failed the Auto Scaling health check. Auto Scaling has marked it for replacement. How can the system admin ensure that the instance does not get terminated?

- Update the Auto Scaling group to ignore the instance reboot event

- It is not possible to change the status once it is marked for replacement

- Manually add that instance to the Auto Scaling group after reboot to avoid replacement

- Change the health of the instance to healthy using the Auto Scaling commands

- A user has configured Auto Scaling with the minimum capacity as 2 and the desired capacity as 2. The user is trying to terminate one of the existing instance with the command: as-terminate-instance-in-auto-scaling-group<Instance ID> –decrement-desired-capacity. What will Auto Scaling do in this scenario?

- Terminates the instance and does not launch a new instance

- Terminates the instance and updates the desired capacity to 1

- Terminates the instance and updates the desired capacity & minimum size to 1

- Throws an error

- An organization has configured Auto Scaling for hosting their application. The system admin wants to understand the Auto Scaling health check process. If the instance is unhealthy, Auto Scaling launches an instance and terminates the unhealthy instance. What is the order execution?

- Auto Scaling launches a new instance first and then terminates the unhealthy instance

- Auto Scaling performs the launch and terminate processes in a random order

- Auto Scaling launches and terminates the instances simultaneously

- Auto Scaling terminates the instance first and then launches a new instance

- A user has configured ELB with Auto Scaling. The user suspended the Auto Scaling terminate process only for a while. What will happen to the availability zone rebalancing process (AZRebalance) during this period?

- Auto Scaling will not launch or terminate any instances

- Auto Scaling will allow the instances to grow more than the maximum size

- Auto Scaling will keep launching instances till the maximum instance size

- It is not possible to suspend the terminate process while keeping the launch active

- An organization has configured Auto Scaling with ELB. There is a memory issue in the application which is causing CPU utilization to go above 90%. The higher CPU usage triggers an event for Auto Scaling as per the scaling policy. If the user wants to find the root cause inside the application without triggering a scaling activity, how can he achieve this?

- Stop the scaling process until research is completed

- It is not possible to find the root cause from that instance without triggering scaling

- Delete Auto Scaling until research is completed

- Suspend the scaling process until research is completed

- A user has configured ELB with Auto Scaling. The user suspended the Auto Scaling Alarm Notification (which notifies Auto Scaling for CloudWatch alarms) process for a while. What will Auto Scaling do during this period?

- AWS will not receive the alarms from CloudWatch

- AWS will receive the alarms but will not execute the Auto Scaling policy

- Auto Scaling will execute the policy but it will not launch the instances until the process is resumed

- It is not possible to suspend the AlarmNotification process

- An organization has configured two single availability zones. The Auto Scaling groups are configured in separate zones. The user wants to merge the groups such that one group spans across multiple zones. How can the user configure this?

- Run the command as-join-auto-scaling-group to join the two groups

- Run the command as-update-auto-scaling-group to configure one group to span across zones and delete the other group

- Run the command as-copy-auto-scaling-group to join the two groups

- Run the command as-merge-auto-scaling-group to merge the groups

- An organization has configured Auto Scaling with ELB. One of the instance health check returns the status as Impaired to Auto Scaling. What will Auto Scaling do in this scenario?

- Perform a health check until cool down before declaring that the instance has failed

- Terminate the instance and launch a new instance

- Notify the user using SNS for the failed state

- Notify ELB to stop sending traffic to the impaired instance

- A user has setup an Auto Scaling group. The group has failed to launch a single instance for more than 24 hours. What will happen to Auto Scaling in this condition

- Auto Scaling will keep trying to launch the instance for 72 hours

- Auto Scaling will suspend the scaling process

- Auto Scaling will start an instance in a separate region

- The Auto Scaling group will be terminated automatically

- A user is planning to setup infrastructure on AWS for the Christmas sales. The user is planning to use Auto Scaling based on the schedule for proactive scaling. What advise would you give to the user?

- It is good to schedule now because if the user forgets later on it will not scale up

- The scaling should be setup only one week before Christmas

- Wait till end of November before scheduling the activity

- It is not advisable to use scheduled based scaling

- A user is trying to setup a recurring Auto Scaling process. The user has setup one process to scale up every day at 8 am and scale down at 7 PM. The user is trying to setup another recurring process which scales up on the 1st of every month at 8 AM and scales down the same day at 7 PM. What will Auto Scaling do in this scenario

- Auto Scaling will execute both processes but will add just one instance on the 1st

- Auto Scaling will add two instances on the 1st of the month

- Auto Scaling will schedule both the processes but execute only one process randomly

- Auto Scaling will throw an error since there is a conflict in the schedule of two separate Auto Scaling Processes

- A sys admin is trying to understand the Auto Scaling activities. Which of the below mentioned processes is not performed by Auto Scaling?

- Reboot Instance

- Schedule Actions

- Replace Unhealthy

- Availability Zone Re-Balancing

- You have started a new job and are reviewing your company’s infrastructure on AWS. You notice one web application where they have an Elastic Load Balancer in front of web instances in an Auto Scaling Group. When you check the metrics for the ELB in CloudWatch you see four healthy instances in Availability Zone (AZ) A and zero in AZ B. There are zero unhealthy instances. What do you need to fix to balance the instances across AZs?

- Set the ELB to only be attached to another AZ

- Make sure Auto Scaling is configured to launch in both AZs

- Make sure your AMI is available in both AZs

- Make sure the maximum size of the Auto Scaling Group is greater than 4

- You have been asked to leverage Amazon VPC EC2 and SQS to implement an application that submits and receives millions of messages per second to a message queue. You want to ensure your application has sufficient bandwidth between your EC2 instances and SQS. Which option will provide the most scalable solution for communicating between the application and SQS?

- Ensure the application instances are properly configured with an Elastic Load Balancer

- Ensure the application instances are launched in private subnets with the EBS-optimized option enabled

- Ensure the application instances are launched in public subnets with the associate-public-IP-address=trueoption enabled

- Launch application instances in private subnets with an Auto Scaling group and Auto Scaling triggers configured to watch the SQS queue size

- You have decided to change the Instance type for instances running in your application tier that are using Auto Scaling. In which area below would you change the instance type definition?

- Auto Scaling launch configuration

- Auto Scaling group

- Auto Scaling policy

- Auto Scaling tags

- A user is trying to delete an Auto Scaling group from CLI. Which of the below mentioned steps are to be performed by the user?

- Terminate the instances with the ec2-terminate-instance command

- Terminate the Auto Scaling instances with the as-terminate-instance command

- Set the minimum size and desired capacity to 0

- There is no need to change the capacity. Run the as-delete-group command and it will reset all values to 0

- A user has created a web application with Auto Scaling. The user is regularly monitoring the application and he observed that the traffic is highest on Thursday and Friday between 8 AM to 6 PM. What is the best solution to handle scaling in this case?

- Add a new instance manually by 8 AM Thursday and terminate the same by 6 PM Friday

- Schedule Auto Scaling to scale up by 8 AM Thursday and scale down after 6 PM on Friday

- Schedule a policy which may scale up every day at 8 AM and scales down by 6 PM

- Configure a batch process to add a instance by 8 AM and remove it by Friday 6 PM

- A user has configured the Auto Scaling group with the minimum capacity as 3 and the maximum capacity as 5. When the user configures the AS group, how many instances will Auto Scaling launch?

- 3

- 0

- 5

- 2

- A sys admin is maintaining an application on AWS. The application is installed on EC2 and user has configured ELB and Auto Scaling. Considering future load increase, the user is planning to launch new servers proactively so that they get registered with ELB. How can the user add these instances with Auto Scaling?

- Increase the desired capacity of the Auto Scaling group

- Increase the maximum limit of the Auto Scaling group

- Launch an instance manually and register it with ELB on the fly

- Decrease the minimum limit of the Auto Scaling group

- In reviewing the auto scaling events for your application you notice that your application is scaling up and down multiple times in the same hour. What design choice could you make to optimize for the cost while preserving elasticity? Choose 2 answers.

- Modify the Amazon CloudWatch alarm period that triggers your auto scaling scale down policy.

- Modify the Auto scaling group termination policy to terminate the oldest instance first.

- Modify the Auto scaling policy to use scheduled scaling actions.

- Modify the Auto scaling group cool down timers.

- Modify the Auto scaling group termination policy to terminate newest instance first.

- You have a business critical two tier web app currently deployed in two availability zones in a single region, using Elastic Load Balancing and Auto Scaling. The app depends on synchronous replication (very low latency connectivity) at the database layer. The application needs to remain fully available even if one application Availability Zone goes off-line, and Auto scaling cannot launch new instances in the remaining Availability Zones. How can the current architecture be enhanced to ensure this? [PROFESSIONAL]

- Deploy in two regions using Weighted Round Robin (WRR), with Auto Scaling minimums set for 100% peak load per region.

- Deploy in three AZs, with Auto Scaling minimum set to handle 50% peak load per zone.

- Deploy in three AZs, with Auto Scaling minimum set to handle 33% peak load per zone. (Loss of one AZ will handle only 66% if the autoscaling also fails)

- Deploy in two regions using Weighted Round Robin (WRR), with Auto Scaling minimums set for 50% peak load per region.

- A user has created a launch configuration for Auto Scaling where CloudWatch detailed monitoring is disabled. The user wants to now enable detailed monitoring. How can the user achieve this?

- Update the Launch config with CLI to set InstanceMonitoringDisabled = false

- The user should change the Auto Scaling group from the AWS console to enable detailed monitoring

- Update the Launch config with CLI to set InstanceMonitoring.Enabled = true

- Create a new Launch Config with detail monitoring enabled and update the Auto Scaling group

- A user has created an Auto Scaling group with default configurations from CLI. The user wants to setup the CloudWatch alarm on the EC2 instances, which are launched by the Auto Scaling group. The user has setup an alarm to monitor the CPU utilization every minute. Which of the below mentioned statements is true?

- It will fetch the data at every minute but the four data points [corresponding to 4 minutes] will not have value since the EC2 basic monitoring metrics are collected every five minutes

- It will fetch the data at every minute as detailed monitoring on EC2 will be enabled by the default launch configuration of Auto Scaling

- The alarm creation will fail since the user has not enabled detailed monitoring on the EC2 instances

- The user has to first enable detailed monitoring on the EC2 instances to support alarm monitoring at every minute

- A customer has a website which shows all the deals available across the market. The site experiences a load of 5 large EC2 instances generally. However, a week before Thanksgiving vacation they encounter a load of almost 20 large instances. The load during that period varies over the day based on the office timings. Which of the below mentioned solutions is cost effective as well as help the website achieve better performance?

- Keep only 10 instances running and manually launch 10 instances every day during office hours.

- Setup to run 10 instances during the pre-vacation period and only scale up during the office time by launching 10 more instances using the AutoScaling schedule.

- During the pre-vacation period setup a scenario where the organization has 15 instances running and 5 instances to scale up and down using Auto Scaling based on the network I/O policy.

- During the pre-vacation period setup 20 instances to run continuously.

- When Auto Scaling is launching a new instance based on condition, which of the below mentioned policies will it follow?

- Based on the criteria defined with cross zone Load balancing

- Launch an instance which has the highest load distribution

- Launch an instance in the AZ with the fewest instances

- Launch an instance in the AZ which has the highest instances

- The user has created multiple AutoScaling groups. The user is trying to create a new AS group but it fails. How can the user know that he has reached the AS group limit specified by AutoScaling in that region?

- Run the command: as-describe-account-limits

- Run the command: as-describe-group-limits

- Run the command: as-max-account-limits

- Run the command: as-list-account-limits

- A user is trying to save some cost on the AWS services. Which of the below mentioned options will not help him save cost?

- Delete the unutilized EBS volumes once the instance is terminated

- Delete the Auto Scaling launch configuration after the instances are terminated (Auto Scaling Launch config does not cost anything)

- Release the elastic IP if not required once the instance is terminated

- Delete the AWS ELB after the instances are terminated

- To scale up the AWS resources using manual Auto Scaling, which of the below mentioned parameters should the user change?

- Maximum capacity

- Desired capacity

- Preferred capacity

- Current capacity

- For AWS Auto Scaling, what is the first transition state an existing instance enters after leaving steady state in Standby mode?

- Detaching

- Terminating:Wait

- Pending (You can put any instance that is in an InService state into a Standby state. This enables you to remove the instance from service, troubleshoot or make changes to it, and then put it back into service. Instances in a Standby state continue to be managed by the Auto Scaling group. However, they are not an active part of your application until you put them back into service. Refer link)

- EnteringStandby

- For AWS Auto Scaling, what is the first transition state an instance enters after leaving steady state when scaling in due to health check failure or decreased load?

- Terminating (When Auto Scaling responds to a scale in event, it terminates one or more instances. These instances are detached from the Auto Scaling group and enter the Terminating state. Refer link)

- Detaching

- Terminating:Wait

- EnteringStandby

- A user has setup Auto Scaling with ELB on the EC2 instances. The user wants to configure that whenever the CPU utilization is below 10%, Auto Scaling should remove one instance. How can the user configure this?

- The user can get an email using SNS when the CPU utilization is less than 10%. The user can use the desired capacity of Auto Scaling to remove the instance

- Use CloudWatch to monitor the data and Auto Scaling to remove the instances using scheduled actions

- Configure CloudWatch to send a notification to Auto Scaling Launch configuration when the CPU utilization is less than 10% and configure the Auto Scaling policy to remove the instance

- Configure CloudWatch to send a notification to the Auto Scaling group when the CPU Utilization is less than 10% and configure the Auto Scaling policy to remove the instance

- A user has enabled detailed CloudWatch metric monitoring on an Auto Scaling group. Which of the below mentioned metrics will help the user identify the total number of instances in an Auto Scaling group including pending, terminating and running instances?

- GroupTotalInstances (Refer link)

- GroupSumInstances

- It is not possible to get a count of all the three metrics together. The user has to find the individual number of running, terminating and pending instances and sum it

- GroupInstancesCount

- Your startup wants to implement an order fulfillment process for selling a personalized gadget that needs an average of 3-4 days to produce with some orders taking up to 6 months you expect 10 orders per day on your first day. 1000 orders per day after 6 months and 10,000 orders after 12 months. Orders coming in are checked for consistency then dispatched to your manufacturing plant for production quality control packaging shipment and payment processing. If the product does not meet the quality standards at any stage of the process employees may force the process to repeat a step. Customers are notified via email about order status and any critical issues with their orders such as payment failure. Your case architecture includes AWS Elastic Beanstalk for your website with an RDS MySQL instance for customer data and orders. How can you implement the order fulfillment process while making sure that the emails are delivered reliably? [PROFESSIONAL]

- Add a business process management application to your Elastic Beanstalk app servers and re-use the ROS database for tracking order status use one of the Elastic Beanstalk instances to send emails to customers.

- Use SWF with an Auto Scaling group of activity workers and a decider instance in another Auto Scaling group with min/max=1 Use the decider instance to send emails to customers.

- Use SWF with an Auto Scaling group of activity workers and a decider instance in another Auto Scaling group with min/max=1 use SES to send emails to customers.

- Use an SQS queue to manage all process tasks Use an Auto Scaling group of EC2 Instances that poll the tasks and execute them. Use SES to send emails to customers.

References

AWS_Auto_Scaling_Developer_Guide

Hello! Are all sample questions for pro or associate level of exam?

Sample questions are primarily for the Associate level

Hi Sir,

Its regarding question 23 can you please explain possible modifications that cloud watch alarm and cool down timers can do in this case that reduce cost and preserve elasticity.

As the scaling activity is happening quite frequently, the reasons would either be that the alarms

configured are causing the auto scaling to scale up and down fast or the cool down timers are small due to which the auto

scaling activity is triggered before the new instance gets a chance to handle traffic.

Option B is wrong as terminating oldest instance would help save cost but would not prevent the auto scaling from scale

up/down cycle.

Option C is wrong as scheduled scaling only helps when the pattern is known

Option E is wrong as terminating newest instance would increase cost but also would not prevent the auto scaling from scale

up/down cycle.

Question(22)

Can you clarify why increase desired and not maximum ? it’s a future load , so i thought that maximum would be better to manage the load without paying for not used resources.

as much as i understand that desired is the number which Auto Scale will reach even if the not needed.

Key point in the question is proactively, so you should set desired to build up the instances beforehand.

This is not correct–

“Auto Scaling group can be deleted, if it has no running instances.”

http://docs.aws.amazon.com/autoscaling/latest/userguide/as-process-shutdown.html.

When you delete an Auto Scaling group, its desired, minimum, and maximum values are set to 0. As a result, the Auto Scaling instances are terminated. Alternatively, you can terminate or detach the instances before you delete the Auto Scaling group.

Thanks, there is a correction to the statement.

Auto Scaling group can be deleted from CLI, if it has no running instances else need to set the minimum and desired capacity to 0. This is handled automatically when deleting an ASG from AWS management console.

Question 17 I think the answer is B. The question asks how to ensure scalability between the application and SQS, so private subnet and EBS optimized makes sense. Your answer, D, answers a different question around how to scale based on queue size.

Scalability between SQS and Application can be improved by the Application being able to handle millions of messages.

Having instances in private subnet with EBS optimized would not improve the handling capacity of the EC2 instances.

EBS–optimized instance provides additional, dedicated capacity for Amazon EBS I/O but not with SQS.

EBS optimized takes EBS disk traffic off the main network interface, leaving more bandwidth for SQS. The question asks for the best bandwidth to both sending and receiving – option D is just for receiving and doesn’t help sending at all. However of course SQS bandwidth scales linearly with the number of instances, but again D is all about receive and doesn’t mention sending.

I checked the cloud guru forums, and it’s 3 votes for B, 4 for D, but the moderator voted for B. So it’s one of those tricky questions.

I guess the question is which answer is least wrong, as none are quite right. The best answer is probably EBS optimized to help provide bandwidth for sending along with scaling the processing instances based on queue size, so a combination of B and D.

Questions like this are why 2 minutes per question is tricky.

Agreed, its always tricky for these kind of open ended questions, where its open to interpretation.

Professional is quite intensive to for time for sure, so unless very well prepared it can get very tough.

Hi Jayendra,

Thanks for publishing such a useful blog.

Could you please explain answer to question #1? Why answer is A and not C?

As per AWS documentation even End time and recurrence value is not required.

Thanks in advance

Hi Priti,

The question is not about optional and required. But what parameters are valid which is causing the confusion.

Refer to Auto Scaling AWS documentation.

For Q13 :

I can see that you can create scheduling event way into the future .. why does the recommendation has to be wait till end of november? why not do it now ?

Any thoughts from you ?

As the start time can be a max month before

Refer http://docs.aws.amazon.com/cli/latest/reference/autoscaling/describe-scheduled-actions.html

StartTime -> (timestamp)

The date and time that the action is scheduled to begin. This date and time can be up to one month in the future.

When StartTime and EndTime are specified with Recurrence , they form the boundaries of when the recurring action will start and stop.

Hi Jayendra,

I tried this just now. I am able to set the start time to be 1st Dec 2017. Today is 9th Oct 2017. Can you please throw some light on this: “This date and time can be up to one month in the future.” ? At the link mentioned by you, the aforementioned quoted statement is mentioned in the output of the describe-scheduled-actions command. Is it the case of document defect where the doc has not been updated when previously such a limitation was there?

Thanks!

Mahesh

Hello Mr. Jay,

is the answer still valid?

Q.13

A. Wait till end of November before scheduling the activity

Seems to be still. Refer the AWS documentation.

StartTime -> (timestamp)

The date and time that the action is scheduled to begin. This date and time can be up to one month in the future.

Q14 :

I am able to set up a scheduling event daily at 9AM starting from Aug 1 – Aug 31.

Also a scheduling event weekly on monday starting from Aug 1 – Aug 31.

with different min, max , desired capacities.

In this case there will a conflict on every monday. But I am allowed to do it.

from my observation it complained only when the start date and time of the two scheduled events are the same. It did not seem to mind the intersect that happens during the time period they were set up. Any thoughts from you ?

Pleas eIgnore this .. as long as the times conflict in the period aws is not allowing to save.

You have a great blog and great set of questions probably batter than any other paid content. Thank you for such an effort. I should have thanked you in the first post itself.

Q19:

I have never used aws CLI / API. so may be I am missing some thing very fundamantal here. to delete ASG why not use the delete autoscaling command instead of setting desired capacity to zero ?

I see that there is a commanad for that

http://docs.aws.amazon.com/cli/latest/reference/autoscaling/delete-auto-scaling-group.html

As all the instances needs to be delete before and the other options are not valid. To terminate all instances before deleting the Auto Scaling group, call update-auto-scaling-group and set the minimum size and desired capacity of the Auto Scaling group to zero.

Could you please say opinion about practise questions on Braincert.com.

It seems architect level than associate. Kindly share your opinion on this.

Does we really need in depth knowledge as sampled in those questions to complete the associate exam ?

Practice questions on Braincert are Associate level, professional level practice exams are much more complex, longer in prose and almost always involve multiple AWS services in the solution.

If my database server crossed threshold value (ex. CPU reaches 92% but my threshold value is 80%) how can I troubleshot the issue.

you can look into the monitoring stats and you can also check the logs to check the slow, long running queries.

You can configure alerts to notify you when it reaches 70% or 80%, so that you can check at that time.

Which AWS services are Self scalable by default ?

I know Lambda is self scalable (You do not have to scale your Lambda functions – AWS Lambda scales them automatically on your behalf).

Can you provide any such AWS services that are auto scalable and doesnt’ require any configurations?

The other service I am thinking is SQS ( Amazon SQS can scale transparently to handle the load without any provisioning instructions from you).

ELB, DynamoDB are auto scalable. ELB scales automatically as per the demand. DynamoDB with auto scaling will scale automatically. API Gateway scales automatically. Most of the Custom AWS managed services (Not RDS as there is underlying DB) should scale automatically.

Hi Jayendra,

Please could you advice for the below question.

An Auto-Scaling group spans 3 AZs and currently has 4 running EC2 instances. When

Auto Scaling needs to terminate an EC2 instance by default, AutoScaling will:

Choose 2 answers

A. Allow at least five minutes for Windows/Linux shutdown scripts to complete, before

terminating the instance.

B. Terminate the instance with the least active network connections. If multiple instances

meet this criterion, one will be randomly selected.

C. Send an SNS notification, if configured to do so.

D. Terminate an instance in the AZ which currently has 2 running EC2 instances.

E. Randomly select one of the 3 AZs, and then terminate an instance in that AZ.

Correct answer should be C & D as Auto Scaling would select the AZ with most instances as per the default termination policy and send notification through SNS.

Please add this important info:

If you suspend AddToLoadBalancer, Auto Scaling launches the instances but does not add them to the load balancer or target group. If you resume the AddToLoadBalancer process, Auto Scaling resumes adding instances to the load balancer or target group when they are launched. However, Auto Scaling does not add the instances that were launched while this process was suspended. You must register those instances manually.

Hi Jayendra,

Please could you advice for the below question.

You have set up an Auto Scaling group. The cool down period for the Auto Scaling group is 7 minutes. The first

instance is launched after 3 minutes, while the second instance is launched after 4 minutes. How many

minutes after the first instance is launched will Auto Scaling accept another scaling actMty request?

A. 11 minutes

B. 7 minutes

C. 10 minutes

D. 14 minutes

Thanks!

Hi Edivando, it should be 11 minutes, as it would take the last instance launch time

Refer AWS documentation

With multiple instances, the cooldown period (either the default cooldown or the scaling-specific cooldown) takes effect starting when the last instance launches.Thanks for the great blog. I have a query on below statement..

Auto Scaling increases the desired capacity of the group by the number of instances being attached. But if the number of instances being attached plus the desired capacity exceeds the maximum size of the group, the request fails.

The above holds true when we trigger using SDK? because, using AWS console if i set Min = 2 and Max=12 and if the scale out policy defines to increase 100% when cpu>60, in this case when first policy triggers it’s 2×2, second policy 4×4 and when third time policy triggers it will add 4 evenly balancing between AZ’s. Please let me know if my understanding is correct.

Its for the desired capacity which cannot exceed the maximum in all cases.

I believe right answer to Q18 will be Autoscaling group. When you select AS group, it enables to drill down to instance details, stop instance and change instance type.

Q18- You have decided to change the Instance type for instances running in your application tier that are using Auto Scaling. In which area below would you change the instance type definition?

Auto Scaling launch configuration

Auto Scaling group

Auto Scaling policy

Auto Scaling tags

If you have not created Launch configuration, then yes you can do it from Auto scaling group.

very nice work Jayendra, I followed your notes / Q&A / whitepapers and passed SysOps Assoicate with 84%. Keep posting all Q&A

Congrats Mugunthan, thats a great score.

Hi Jayendra,

For question:32 could you please advise if the answer should be “Entering Standby” rather than Pending. The question is emphasizing on “After leaving steady state what is the first state”.

For AWS Auto Scaling, what is the first transition state an existing instance enters after leaving steady state in Standby mode?

Detaching

Terminating:Wait

Pending (You can put any instance that is in an InService state into a Standby state. This enables you to remove the instance from service, troubleshoot or make changes to it, and then put it back into service. Instances in a Standby state continue to be managed by the Auto Scaling group. However, they are not an active part of your application until you put them back into service. Refer link)

EnteringStandby

EnterStandBy is the state before StandBy. The state after StandBy is the Pending.

Check Auto Scaling Lifecycle

Q27: believe C is also suitable, considering 5 instances regular. So Launch 15 instances with scale up or down based on the network activity. Can you please justify if this is not the case. Thanks

Option C is 15 running always and 5 for scale up/down hence its not cost effective.

Please could you advice for the below question.

An application is running on Amazon EC2 instances behind an Application Load Balancer. The

Instances run in an auto scaling group across multiple Availability Zones. Four instances are

required to handle a predictable traffic load. The Solutions Architect wants to ensure that

the operation is fault-tolerant up to the loss of one Availability Zone.

Which is the MOST cost-efficient way to meet these requirements?

Deploy two instances in each of three Availability Zones.

Deploy two instances in each of two Availability Zones.

Deploy four instanes in each of two Availability Zones.

Deploy one instance in each of three Availability Zones.

Cost effective option is Deploy two instances in each of three Availability Zones as it would need only 6 instances and even if an AZ goes down you would have 4 instance running

Your advice on below question please

Two Auto Scaling applications, Application A and Application B, currently run within a shared set of

subnets. A solutions architect wants to make sure that ApplicationA can make request to Application

B, but Application B should be denied from making request to Application A.

1) Which is the SIMPLEST solution to achieve this policy?

2) Using security groups that reference the security groups of the other application.

3) Using security groups that reference the application servers IP address.

4) Using Network Access Control Lists to allow/deny traffic based on application IP address.

5) Migrating the applications to separate subnets from each other.

Option 1 should work fine using Security groups for the instance launched by auto scaling.

Wont the option 1 make the application B to talk back to Application A?

So using the IP address specific to the servers would make App B not talk to App A isnt it?

Sorry! I experimented and it works fine 🙂

Q#4 , As per your below statement, it will error out if you try to change the health of the instance using CLIs. Isn’t it so ?

For an unhealthy instance, the instance’s health check can be changed back to healthy manually but you will get an error if the instance is already terminating. Because the interval between marking an instance unhealthy and its actual termination is so small, attempting to set an instance’s health status back to healthy is probably useful only for a suspended group.

Q#6. I think answer should be Option A.

Auto Scaling launches a new instance first and then terminates the unhealthy instance.

The scaling process launches new instances in an unaffected Availability Zone before terminating the unhealthy or unavailable instances. AZRebalance terminates instances only after launching the replacement instances.

Could you please enlighten me how Option D would be the right answer.

#6

For AZRebalance. I agree it is A.

For this question, this answer should be D.

Section: Replacing Unhealthy Instances

https://docs.aws.amazon.com/autoscaling/ec2/userguide/as-maintain-instance-levels.html

Amazon EC2 Auto Scaling creates a new scaling activity for terminating the unhealthy instance and then terminates it. Later, another scaling activity launches a new instance to replace the terminated instance.

Thanks Aaron, thats right.

Hi Anil,

ARRebalance happens when a scale in event happens. For an health check failure, it would terminate the instance and launch a new one.

Refer AWS documentation – https://docs.aws.amazon.com/autoscaling/ec2/userguide/as-maintain-instance-levels.html#replace-unhealthy-instance

To maintain the same number of instances, Auto Scaling performs a periodic health check on running instances within an Auto Scaling group. When it finds that an instance is unhealthy, it terminates that instance and launches a new one.

2.d

as-terminate-instance-in-auto-scaling-group –no-decrement-desired-capacity

https://docs.aws.amazon.com/cli/latest/reference/autoscaling/terminate-instance-in-auto-scaling-group.html

I think is

–no-should-decrement-desired-capacity

Indicates whether terminating the instance also decrements the size of the Auto Scaling group.

thats right. The command line params have undergone quite a lot of changes.

Question 33:

> For AWS Auto Scaling, what is the first transition state an instance enters after leaving steady state when scaling in due to health check failure or decreased load?

I cannot find any information in AWS docs that would confirm your answer “Terminating” is correct here – as per the asg lifecycle I would say that the correct answer should be “Pending” – I failed to find any evidence of going directly to Terminating upon leaving the Standby state when scale-in is active – according to my best understanding it first has to enter Pending state, then InService and then eventually Terminating state.

If it indeed can go directly to “Terminating” state, please provide a link for docs that show that – otherwise I suggest double checking if Terminating is the right answer for this question.

https://jayendrapatil.com/aws-auto-scaling-lifecycle/ – provides the lifecycle which depicts the scale in or failed instance health check would move it to the terminating state.