AWS EC2 Best Practices

AWS recommends the following best practices for getting the most out of EC2.

Security & Network

- Implement the least permissive rules for the security group.

- Regularly patch, update, and secure the operating system and applications on the instance.

- Manage access to AWS resources and APIs using identity federation, IAM users, and IAM roles.

- Establish credential management policies and procedures for creating, distributing, rotating, and revoking AWS access credentials.

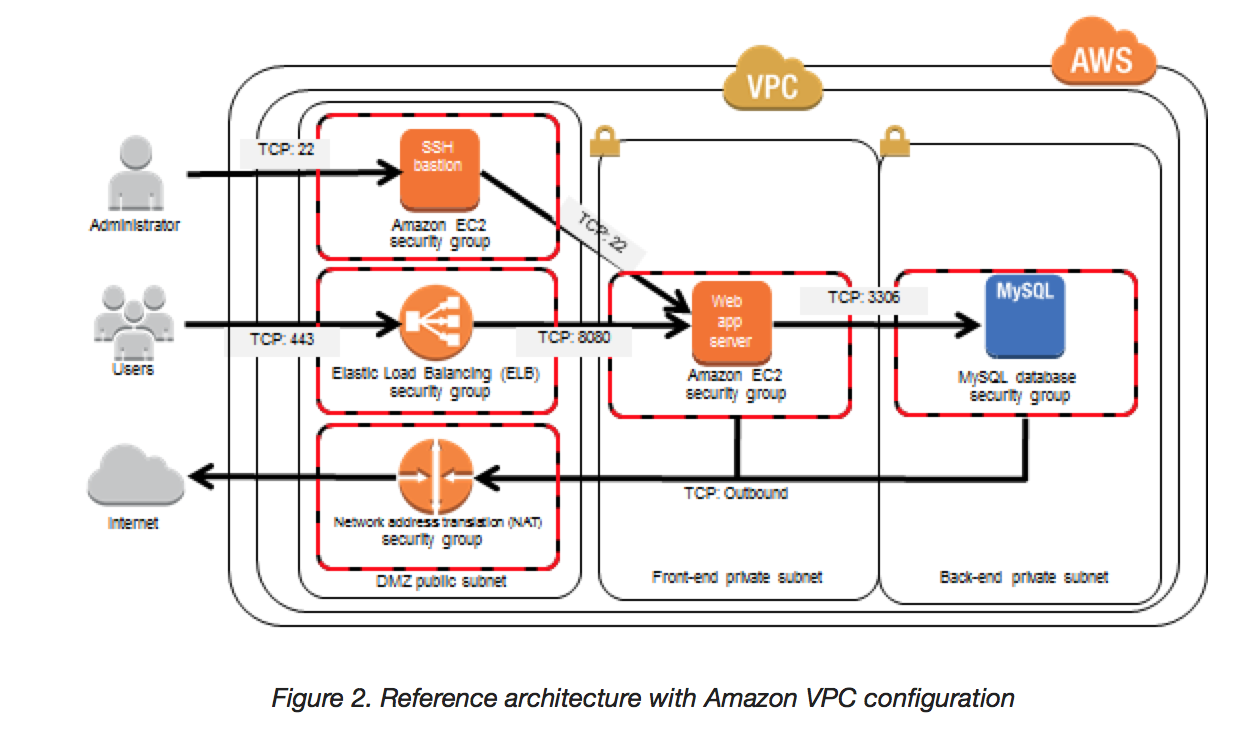

- Launch instances into a VPC instead of EC2-Classic. Newly created AWS accounts use a VPC by default, and EC2-Classic has since been retired.

- Encrypt EBS volumes and snapshots.
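
As an illustration of the least-permissive rule above, here is a minimal sketch that flags ingress rules open to the whole internet. It assumes the security group is a dictionary shaped like one entry of the `SecurityGroups` list returned by the EC2 `describe_security_groups` call. The function name `find_open_ingress` and the default allow-list of ports 80 and 443 are hypothetical choices for this example, not AWS recommendations.

```python
from __future__ import annotations

from typing import Any

# CIDR blocks that match every IPv4 or IPv6 address.
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def find_open_ingress(
    security_group: dict[str, Any],
    allowed_ports: frozenset[int] = frozenset({80, 443}),  # assumed allow-list
) -> list[str]:
    """Return a finding for each ingress rule that is open to the internet.

    A rule is accepted only if it exposes a single port from allowed_ports.
    This is a heuristic: ICMP rules, for example, reuse the port fields for
    type and code and are reported as if they were port ranges.
    """
    group_id = security_group.get("GroupId", "<unknown>")
    findings: list[str] = []
    for perm in security_group.get("IpPermissions", []):
        cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
        cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
        if not OPEN_CIDRS.intersection(cidrs):
            continue  # rule is restricted to specific address ranges
        from_port, to_port = perm.get("FromPort"), perm.get("ToPort")
        # IpProtocol "-1" means all protocols, in which case no ports are listed.
        if perm.get("IpProtocol") == "-1" or from_port is None:
            findings.append(f"{group_id}: all traffic is open to the internet")
        elif not (from_port == to_port and from_port in allowed_ports):
            findings.append(
                f"{group_id}: ports {from_port}-{to_port} are open to the internet"
            )
    return findings
```

With boto3 installed and credentials configured, each element of `boto3.client("ec2").describe_security_groups()["SecurityGroups"]` could be passed to this function.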

Storage

- EC2 supports both instance store and EBS volumes, so it's best to understand the implications of the root device type for data persistence, backup, and recovery.

- Use separate Amazon EBS volumes for the operating system (root device) versus your data.

- Ensure that the volume holding your data persists after instance termination (its DeleteOnTermination attribute should be false).

- Use the instance store only for temporary data. Remember that data in the instance store is deleted when the instance is stopped, hibernated, or terminated.

- If you use instance store for database storage, ensure that you have a cluster with a replication factor that ensures fault tolerance.
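
To make the data-volume persistence point concrete, the sketch below looks for non-root EBS volumes that would be deleted together with the instance. It assumes the instance is a dictionary shaped like an entry returned by the EC2 `describe_instances` call (`RootDeviceName`, `BlockDeviceMappings`, `Ebs.DeleteOnTermination`). The helper names `volumes_lost_on_termination` and `keep_on_termination_request` are hypothetical and exist only for this example.

```python
from __future__ import annotations

from typing import Any


def volumes_lost_on_termination(instance: dict[str, Any]) -> list[str]:
    """Return device names of non-root EBS volumes deleted at termination."""
    root_device = instance.get("RootDeviceName")
    at_risk: list[str] = []
    for mapping in instance.get("BlockDeviceMappings", []):
        ebs = mapping.get("Ebs")
        if ebs is None:
            continue  # not an EBS-backed mapping
        if mapping.get("DeviceName") == root_device:
            continue  # the root volume is usually meant to be disposable
        if ebs.get("DeleteOnTermination", False):
            at_risk.append(mapping["DeviceName"])
    return at_risk


def keep_on_termination_request(instance: dict[str, Any]) -> dict[str, Any]:
    """Build keyword arguments for modify_instance_attribute that turn off
    DeleteOnTermination for every at-risk data volume."""
    return {
        "InstanceId": instance["InstanceId"],
        "BlockDeviceMappings": [
            {"DeviceName": device, "Ebs": {"DeleteOnTermination": False}}
            for device in volumes_lost_on_termination(instance)
        ],
    }
```

If any devices are reported, the resulting dictionary could be applied with a boto3 EC2 client as `ec2.modify_instance_attribute(**keep_on_termination_request(instance))`.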

Resource Management

- Use instance metadata and custom resource tags to track and identify your AWS resources.

- View your current limits for Amazon EC2. Plan to request any limit increases in advance of the time that you’ll need them.
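
One way to keep tagging consistent is to check each resource against a required set of tag keys. The sketch below assumes the resource is a dictionary carrying a `Tags` list of `{"Key": ..., "Value": ...}` pairs, as EC2 describe calls return. The required keys (`Owner`, `Environment`, `CostCenter`) and the function name `missing_tags` are hypothetical; substitute your own tagging policy.

```python
from __future__ import annotations

from typing import Any, Iterable

# Hypothetical tagging policy for this example.
REQUIRED_TAG_KEYS = ("Owner", "Environment", "CostCenter")


def missing_tags(
    resource: dict[str, Any],
    required: Iterable[str] = REQUIRED_TAG_KEYS,
) -> list[str]:
    """Return the required tag keys that are absent or have an empty value."""
    present = {
        tag["Key"]
        for tag in resource.get("Tags", [])
        if tag.get("Value")  # treat empty values as missing
    }
    return sorted(set(required) - present)
```

Running this over every instance and volume in an account gives a simple report of resources that cannot yet be attributed to an owner or cost center.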

Backup & Recovery

- Regularly back up the instance using Amazon EBS snapshots (these are not taken automatically) or a backup tool.

- Use Data Lifecycle Manager (DLM) to automate the creation, retention, and deletion of the snapshots taken to back up the EBS volumes.

- Create an Amazon Machine Image (AMI) from the instance to save the configuration as a template for launching future instances.

- Implement high availability by deploying critical components of the application across multiple Availability Zones, and replicate the data appropriately.

- Monitor and respond to events.

- Design the applications to handle dynamic IP addressing when the instance restarts.

- Implement failover. For a basic solution, you can manually attach a network interface or Elastic IP address to a replacement instance.

- Regularly test the process of recovering your instances and Amazon EBS volumes if they fail.
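
The basic Elastic IP failover mentioned above can be sketched as follows. The code assumes `ec2` is a boto3 EC2 client (or any object exposing the same `describe_instance_status` and `associate_address` methods). The function names and the rule of moving the address only once the replacement passes both status checks are assumptions made for this example.

```python
from __future__ import annotations

from typing import Any


def is_healthy(ec2: Any, instance_id: str) -> bool:
    """Return True if the instance passes both EC2 status checks."""
    response = ec2.describe_instance_status(InstanceIds=[instance_id])
    statuses = response.get("InstanceStatuses", [])
    if not statuses:
        return False  # by default only running instances are reported
    status = statuses[0]
    return (
        status["InstanceStatus"]["Status"] == "ok"
        and status["SystemStatus"]["Status"] == "ok"
    )


def fail_over_elastic_ip(
    ec2: Any, allocation_id: str, replacement_instance_id: str
) -> str:
    """Move an Elastic IP to the replacement instance and return the
    new association ID. Raises if the replacement is not healthy."""
    if not is_healthy(ec2, replacement_instance_id):
        raise RuntimeError(
            f"{replacement_instance_id} is not passing status checks"
        )
    response = ec2.associate_address(
        AllocationId=allocation_id,
        InstanceId=replacement_instance_id,
        # Permit taking the address away from the failed instance.
        AllowReassociation=True,
    )
    return response["AssociationId"]
```

Passing the client in as a parameter keeps the function easy to exercise with a stub, which helps when you regularly rehearse the recovery procedure.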