AWS SageMaker

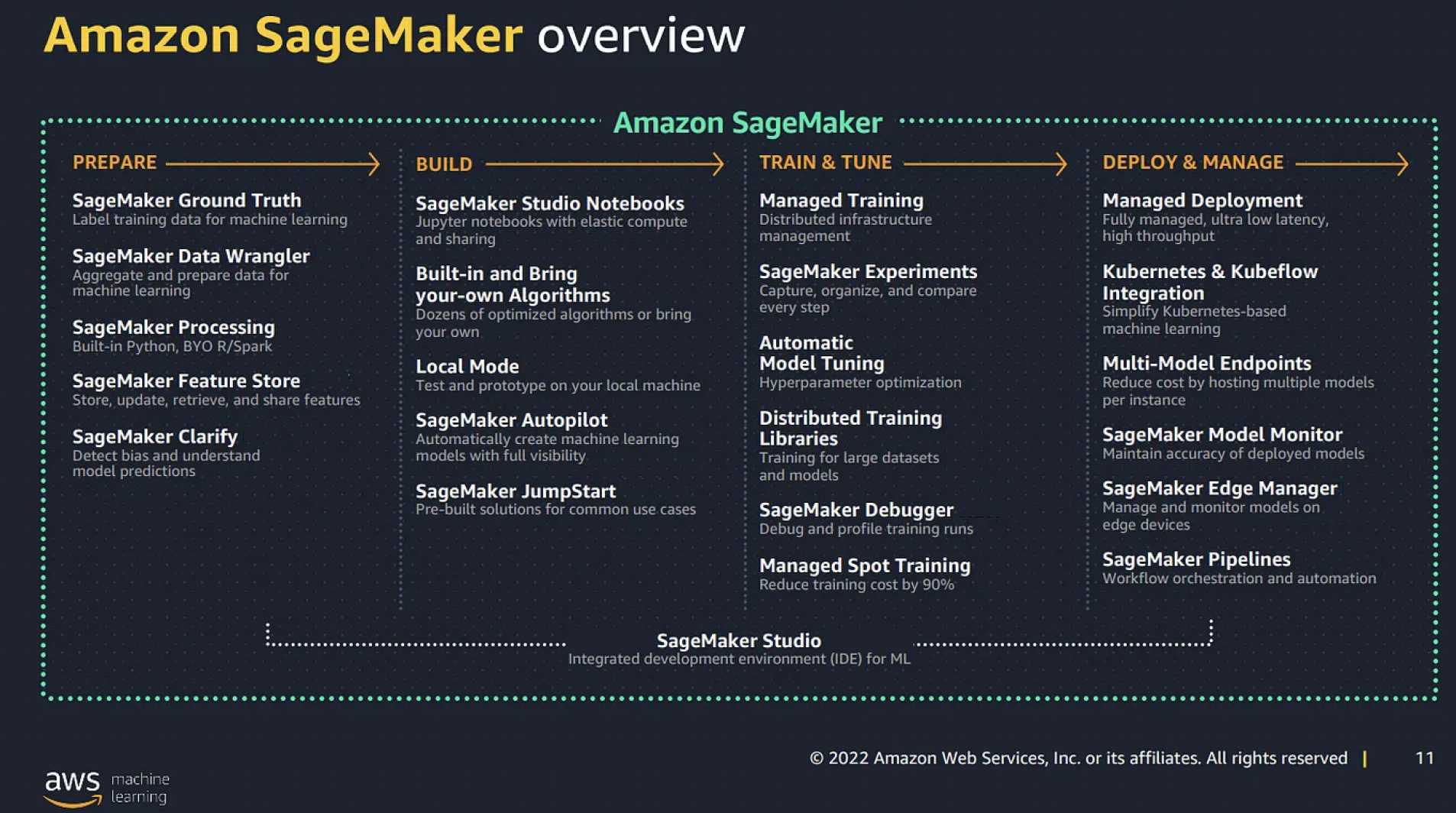

Amazon SageMaker is a fully managed machine learning service that enables data scientists and developers to build, train, and deploy machine learning models quickly and efficiently. This comprehensive platform simplifies the entire machine learning workflow while providing the flexibility to use your preferred tools and frameworks.

- SageMaker removes the heavy lifting from each step of the machine learning process to make it easier to develop high-quality models.

- It is designed for high availability, with no maintenance windows or scheduled downtime.

- APIs run in Amazon’s proven, high-availability data centers, with service stack replication configured across three facilities in each AWS region to provide fault tolerance in the event of a server failure or AZ outage.

- SageMaker provides a full end-to-end workflow, but users can continue to use their existing tools with SageMaker.

- It supports Jupyter notebooks through SageMaker Studio and SageMaker Notebook Instances.

- Users can select the number and type of instance used for hosted notebooks, training jobs, and model hosting to optimize for performance and cost.

What’s New in SageMaker

AWS regularly enhances SageMaker with new features and capabilities. Here are some of the latest additions:

- SageMaker Canvas: A visual, no-code interface that allows business analysts to build ML models without programming experience.

- SageMaker Studio Lab: A free service that provides compute resources for learning ML without requiring an AWS account.

- SageMaker HyperPod: A purpose-built infrastructure for training foundation models with enhanced reliability and performance.

- SageMaker Serverless Inference Enhancements: Increased timeout limits and memory configurations for more flexible serverless deployments.

- SageMaker Model Cards: Documentation and tracking of model information throughout the ML lifecycle.

- SageMaker Role Manager: Simplified granting of least-privilege permissions for ML workloads.

- Foundation Model Support: Enhanced capabilities for working with large language models and other foundation models.

SageMaker Machine Learning Workflow

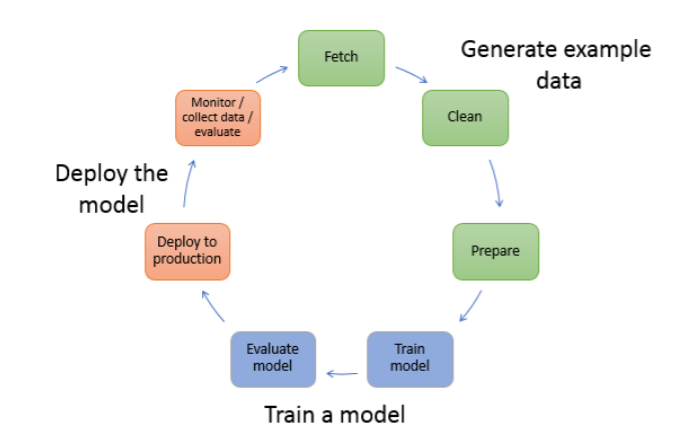

SageMaker supports the complete machine learning workflow, from data preparation to model deployment and monitoring. Each stage is designed to be flexible and interoperable with the others.

Data Preparation and Feature Engineering

Before training a model, data must be explored, cleaned, and transformed. SageMaker provides several tools to streamline this process:

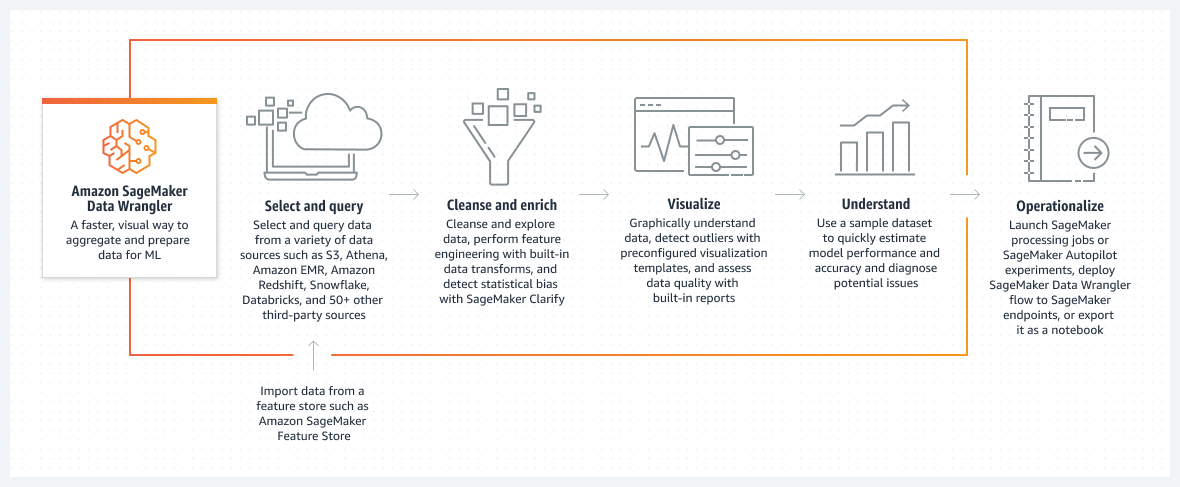

- SageMaker Data Wrangler: Reduces the time to aggregate and prepare data from weeks to minutes, with automated data quality assessment and intelligent transformation recommendations.

- SageMaker Feature Store: Provides a centralized repository for storing, sharing, and managing features with real-time feature computation capabilities.

- SageMaker Processing: Enables running data processing workloads at scale with support for custom frameworks.

Data Preparation Best Practices

- Fetch the data: Import data from various sources including Amazon S3, Amazon Redshift, Amazon Athena, and more.

- Clean the data: Handle missing values, outliers, and inconsistencies with automated data quality assessment.

- Transform the data: Convert data into formats suitable for machine learning algorithms with intelligent transformation recommendations.

Model Training

SageMaker provides flexible options for training machine learning models, from using built-in algorithms to bringing your own code:

- Training the model: Select an algorithm and compute resources appropriate for your data and problem.

- Evaluating the model: Determine whether the accuracy and other metrics meet your requirements using enhanced evaluation tools.

Training Data Format Options

SageMaker supports multiple data storage locations and input modes for training:

- Storage options include Amazon S3, Amazon EFS, and Amazon FSx for Lustre.

- Input modes include:

  - File mode: Downloads all data to the training instance before starting. Best for smaller datasets that fit on the training instance’s storage volume.

  - Pipe mode: Streams data directly from S3, enabling faster start times and reduced storage requirements.

  - Fast File mode: Combines the ease of File mode with the performance benefits of Pipe mode.

  - Streaming mode: Supports continuous data streaming for online learning scenarios with real-time data.

- SageMaker now natively supports all common data formats including CSV, JSON, Parquet, Arrow, and specialized formats for multi-modal data.
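As a rough illustration of how an input mode is selected, the sketch below assembles one entry of the `InputDataConfig` list used in a `CreateTrainingJob` request. It covers only the three values I am confident the `InputMode` field accepts (`File`, `Pipe`, `FastFile`). The helper name `build_channel` and the bucket path are hypothetical, and the dictionary is only built here, not sent to AWS.

```python
# InputMode values this sketch knows about (an assumption, not an exhaustive list).
KNOWN_INPUT_MODES = {"File", "Pipe", "FastFile"}


def build_channel(name, s3_uri, input_mode="File", content_type="text/csv"):
    """Return one InputDataConfig entry for a training job request.

    `build_channel` is a hypothetical helper; it only assembles a dict.
    """
    if input_mode not in KNOWN_INPUT_MODES:
        raise ValueError(f"unsupported input mode: {input_mode!r}")
    if not s3_uri.startswith("s3://"):
        raise ValueError("this sketch only handles S3 data sources")
    return {
        "ChannelName": name,
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }
        },
        "ContentType": content_type,
        "InputMode": input_mode,
    }


# Placeholder bucket and prefix, not a real location.
train_channel = build_channel("train", "s3://example-bucket/train/", "FastFile")
```

Keeping the mode as a per-channel setting makes it easy to try Fast File mode on a large training channel while leaving a small validation channel in File mode.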

Model Building

SageMaker offers multiple approaches to building machine learning models:

- Built-in Algorithms: Optimized algorithms for various ML tasks, including specialized algorithms for multi-modal learning and time-series forecasting.

- Custom Training: Support for all major ML frameworks including TensorFlow, PyTorch, MXNet, and emerging frameworks.

- Foundation Models: Access to pre-trained foundation models with simplified fine-tuning workflows and parameter-efficient training methods.

- AutoML: SageMaker Autopilot with support for multi-modal data, time-series forecasting, and automated model selection.

- Hybrid Models: Capabilities for combining traditional ML approaches with foundation models for improved performance and explainability.

Model Deployment and Inference

SageMaker provides multiple options for deploying models to production environments, each optimized for different use cases:

- Model deployment puts a trained model behind an endpoint or batch job so that it can make predictions, a step known as inference.

- SageMaker supports auto-scaling for hosted models to dynamically adjust the number of instances based on workload.

- Multi-model endpoints provide a cost-effective solution for deploying large numbers of models using shared resources.

- High availability and reliability are achieved by deploying multiple instances across multiple Availability Zones.
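Auto-scaling for a hosted model is configured through Application Auto Scaling rather than on the endpoint itself. The sketch below builds the two request payloads involved (a scalable target and a target-tracking policy), assuming they would later be passed to a client's `register_scalable_target` and `put_scaling_policy` calls. The function name `autoscaling_requests`, the endpoint name, and the target value are hypothetical.

```python
def autoscaling_requests(endpoint_name, variant_name, min_instances,
                         max_instances, invocations_per_instance):
    """Build Application Auto Scaling payloads for one endpoint variant."""
    if not 0 <= min_instances <= max_instances:
        raise ValueError("need 0 <= min_instances <= max_instances")
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    # Payload for registering the variant's instance count as scalable.
    target = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }
    # Payload for a target-tracking policy on invocations per instance.
    policy = {
        "PolicyName": f"{endpoint_name}-{variant_name}-target-tracking",
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(invocations_per_instance),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    }
    return target, policy


# Hypothetical endpoint and variant names.
target, policy = autoscaling_requests("churn-endpoint", "AllTraffic", 1, 4, 100)
```

The target value is the per-instance load the policy tries to hold; a load test is the usual way to pick it.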

Inference Options Comparison

| Inference Type | Best For | Payload Size | Processing Time | Key Features |
|---|---|---|---|---|
| Real-time | Low-latency, high-throughput requirements | Up to 6 MB | Up to 60 seconds | Persistent REST API endpoint, instance type of your choice |
| Serverless | Intermittent or unpredictable traffic | Up to 4 MB | Up to 60 seconds | No instance management, pay-per-use pricing |
| Batch Transform | Offline processing of large datasets | GB-scale | Days | No persistent endpoint, good for preprocessing |
| Asynchronous | Large payloads, long processing times | Up to 1 GB | Up to one hour | Request queuing, scale to zero when idle |
| Edge | IoT and edge device deployment | Device-dependent | Device-dependent | On-device inference, intermittent connectivity support |

SageMaker also supports Inference Pipelines, which allow you to chain multiple models and preprocessing/postprocessing steps in a sequence of containers.
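The limits in the comparison table can be turned into a small decision helper. This is a minimal sketch that uses only the payload and processing-time figures quoted in the table (which may lag behind current service quotas); the function name and its parameters are hypothetical, and the Edge option is left out because its limits depend on the device.

```python
MB = 1024 * 1024
GB = 1024 * MB


def choose_inference_option(payload_bytes, processing_seconds,
                            intermittent_traffic=False):
    """Pick an inference option using the limits quoted in the table."""
    fits_sync_window = processing_seconds <= 60
    # Serverless: small payloads, short requests, spiky or idle-heavy traffic.
    if fits_sync_window and intermittent_traffic and payload_bytes <= 4 * MB:
        return "Serverless"
    # Real-time: persistent endpoint for short, low-latency requests.
    if fits_sync_window and payload_bytes <= 6 * MB:
        return "Real-time"
    # Asynchronous: queued requests with large payloads or long runtimes.
    if payload_bytes <= 1 * GB and processing_seconds <= 3600:
        return "Asynchronous"
    # Anything larger or slower is treated as an offline batch job.
    return "Batch Transform"
```

For example, a 100 MB document that takes ten minutes to process fails both synchronous checks and lands on asynchronous inference.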

Testing Model Variants

SageMaker supports testing multiple models or model versions behind the same endpoint using variants:

- Production Variants: Enable A/B or canary testing by allocating portions of traffic to different model versions.

- Shadow Variants: Test new models by sending them copies of production traffic without exposing their responses to users.

- Champion-Challenger: Framework for continuously evaluating and promoting better-performing models with guardrails for safe deployment.
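Traffic allocation between production variants is driven by relative weights: each variant receives its weight divided by the sum of all weights. The sketch below builds a `ProductionVariants` list in the shape used by an endpoint configuration request and computes the resulting split. The helper names, model names, and the instance type chosen are illustrative assumptions.

```python
def build_variants(models, instance_type="ml.m5.large"):
    """models: list of (variant_name, model_name, weight) tuples."""
    return [
        {
            "VariantName": variant_name,
            "ModelName": model_name,
            "InstanceType": instance_type,
            "InitialInstanceCount": 1,
            "InitialVariantWeight": float(weight),
        }
        for variant_name, model_name, weight in models
    ]


def traffic_shares(variants):
    """Fraction of requests each variant receives, from its relative weight."""
    total = sum(v["InitialVariantWeight"] for v in variants)
    if total <= 0:
        raise ValueError("variant weights must sum to a positive value")
    return {v["VariantName"]: v["InitialVariantWeight"] / total for v in variants}


# Hypothetical canary setup: most traffic stays on the current model.
variants = build_variants([
    ("champion", "model-v1", 9),
    ("challenger", "model-v2", 1),
])
shares = traffic_shares(variants)
```

Because only the ratio matters, weights of 9 and 1 behave the same as 0.9 and 0.1.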

SageMaker Training Optimization

SageMaker provides several features to optimize the training process for cost, performance, and reliability:

- SageMaker Managed Spot Training: Uses EC2 Spot instances to reduce training costs by up to 90% compared to On-Demand instances.

- SageMaker Checkpoints: Saves model state during training to resume from the last checkpoint if interrupted.

- SageMaker Distributed Training: Optimizes training across multiple GPUs and instances for faster model convergence.

- SageMaker Inference Recommender: Helps select the optimal instance type and configuration for deploying models based on performance and cost requirements.

- SageMaker Training Compiler: Optimizes training code for specific hardware accelerators, reducing training time.
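Managed Spot Training and checkpoints are usually configured together, since a checkpoint location is what lets an interrupted job resume. The sketch below assembles the relevant `CreateTrainingJob` fields and computes the savings figure from the billable and total training seconds of a finished job. The helper names and the S3 path are hypothetical; the savings formula is the one I recall from the SageMaker documentation.

```python
def spot_training_settings(max_runtime_s, max_wait_s, checkpoint_s3_uri):
    """Return the training-job fields that enable managed spot training."""
    # The wait budget includes time spent waiting for Spot capacity,
    # so it cannot be shorter than the runtime budget.
    if max_wait_s < max_runtime_s:
        raise ValueError("max_wait_s must be >= max_runtime_s")
    return {
        "EnableManagedSpotTraining": True,
        "StoppingCondition": {
            "MaxRuntimeInSeconds": max_runtime_s,
            "MaxWaitTimeInSeconds": max_wait_s,
        },
        "CheckpointConfig": {
            "S3Uri": checkpoint_s3_uri,
            "LocalPath": "/opt/ml/checkpoints",
        },
    }


def spot_savings_percent(training_seconds, billable_seconds):
    """Savings relative to paying for every second of training time."""
    if training_seconds <= 0:
        raise ValueError("training_seconds must be positive")
    return (1 - billable_seconds / training_seconds) * 100


# Placeholder checkpoint location.
settings = spot_training_settings(3600, 7200, "s3://example-bucket/checkpoints/")
```

A job that trained for 1,000 seconds but was billed for 300 would report savings of about 70%.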

SageMaker Security and Governance

SageMaker provides comprehensive security features and governance tools to help you meet compliance requirements and maintain control over your ML workflows:

- ML model artifacts and other system artifacts are encrypted in transit and at rest.

- Support for encrypted S3 buckets and KMS keys for notebooks, training jobs, and endpoints.

- Secure API and console access over SSL connections.

- VPC interface endpoints powered by AWS PrivateLink for secure access without internet exposure.

- SageMaker Role Manager: Simplifies creating least-privilege IAM roles for ML workflows.

- SageMaker Model Cards: Documents model information, intended uses, risk ratings, and evaluation results.

- SageMaker Model Governance: Provides systematic visibility into model development, validation, and usage.

Network Isolation

SageMaker Network Isolation provides additional security by:

- Preventing containers from making outbound network calls, even to other AWS services.

- Not exposing AWS credentials to the container runtime environment.

- Limiting network traffic to peers of each training container in multi-instance training jobs.

- Isolating S3 operations from the training or inference container.
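In a training job request, network isolation is a single boolean, often combined with a VPC configuration and inter-container traffic encryption. The following is a minimal sketch of those fields; the function name and the subnet and security group IDs are placeholders, not real resources.

```python
def isolated_training_settings(subnet_ids=None, security_group_ids=None):
    """Return training-job fields for an isolated, optionally VPC-bound job."""
    settings = {
        # Blocks outbound network calls from the training container.
        "EnableNetworkIsolation": True,
        # Encrypts traffic between instances in multi-instance training.
        "EnableInterContainerTrafficEncryption": True,
    }
    if subnet_ids or security_group_ids:
        if not (subnet_ids and security_group_ids):
            raise ValueError("a VPC config needs both subnets and security groups")
        settings["VpcConfig"] = {
            "Subnets": list(subnet_ids),
            "SecurityGroupIds": list(security_group_ids),
        }
    return settings


# Placeholder identifiers for illustration only.
isolated = isolated_training_settings(["subnet-0example"], ["sg-0example"])
```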

SageMaker Development Environment

SageMaker Studio

SageMaker Studio is a comprehensive integrated development environment (IDE) for machine learning:

- Provides a unified interface for all ML development tasks.

- Supports collaborative development allowing team members to share notebooks and projects.

- Integrates MLOps capabilities for CI/CD pipelines and automated workflows.

- Offers intelligent code assistance and optimization suggestions.

- Enables cross-account collaboration while maintaining governance.

SageMaker Canvas

SageMaker Canvas provides a visual interface that enables business analysts and developers to build ML models without writing code:

- Import data from various sources including Amazon S3, Redshift, and local files.

- Automatically clean and prepare data for model building.

- Build models for common use cases like prediction, categorization, and time series forecasting.

- Evaluate models with easy-to-understand metrics and visualizations.

- Generate predictions on new data and share insights with stakeholders.

- Collaborate with data scientists using SageMaker Studio.

SageMaker Studio Lab

SageMaker Studio Lab provides free resources for learning machine learning:

- Access to CPU and GPU compute instances for educational purposes.

- Guided tutorials and courses for learning ML concepts and SageMaker capabilities.

- Community features for sharing notebooks and collaborating on projects.

- Foundation model experimentation in a controlled environment.

- Easy migration path to full SageMaker when ready for production.

SageMaker ML Components

SageMaker Feature Store

SageMaker Feature Store is a purpose-built repository for storing, sharing, and managing machine learning features:

- Centralized store for features and associated metadata for easy discovery and reuse.

- Reduces repetitive data processing by allowing features to be created once and used for both training and inference.

- Organizes features into FeatureGroups that describe Records.

- Supports both online and offline stores:

  - Online store: For low-latency, real-time inference use cases, retaining only the latest feature values.

  - Offline store: For training and batch inference, storing historical feature data in Parquet format for optimized storage and queries.
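The difference between the two stores can be shown with a toy in-memory model. This is not the Feature Store API; `TinyFeatureGroup` and its methods are hypothetical and only mimic the behavior described above: the online view keeps the latest record per identifier (by event time), while the offline view keeps every record.

```python
class TinyFeatureGroup:
    """Toy stand-in for a feature group with online and offline views."""

    def __init__(self, record_identifier_name, event_time_name):
        self.record_identifier_name = record_identifier_name
        self.event_time_name = event_time_name
        self.online = {}    # identifier -> latest record only
        self.offline = []   # append-only history of all records

    def put_record(self, record):
        key = record[self.record_identifier_name]
        self.offline.append(dict(record))
        current = self.online.get(key)
        # A late-arriving record with an older event time does not
        # overwrite the newer value in the online view.
        if current is None or record[self.event_time_name] >= current[self.event_time_name]:
            self.online[key] = dict(record)

    def get_record(self, key):
        """Low-latency lookup used at inference time."""
        return self.online.get(key)

    def history(self, key):
        """Full history, as a training job would read it, ordered by event time."""
        rows = [r for r in self.offline if r[self.record_identifier_name] == key]
        return sorted(rows, key=lambda r: r[self.event_time_name])


group = TinyFeatureGroup("customer_id", "event_time")
group.put_record({"customer_id": "c1", "event_time": 1, "spend": 10})
group.put_record({"customer_id": "c1", "event_time": 3, "spend": 30})
group.put_record({"customer_id": "c1", "event_time": 2, "spend": 20})  # late arrival
```

After these three writes, the online lookup returns the record with event time 3, while the history holds all three rows for point-in-time training queries.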

SageMaker JumpStart

SageMaker JumpStart provides pre-built solutions and foundation models to accelerate ML development:

- Access to hundreds of pre-trained, open-source models for various problem types.

- Support for foundation models including large language models, text-to-image models, and embedding models.

- Ability to fine-tune models on your own data before deployment.

- Solution templates that set up infrastructure for common use cases.

- Executable example notebooks for learning SageMaker capabilities.

SageMaker Built-in Algorithms

SageMaker provides numerous built-in algorithms optimized for performance and scale, covering common machine learning tasks:

- Supervised learning algorithms for regression and classification.

- Unsupervised learning algorithms for clustering and anomaly detection.

- Computer vision algorithms for image and video analysis.

- Natural language processing algorithms for text analysis.

- Time series forecasting algorithms for predicting future values.

For a detailed list of available algorithms, please refer to SageMaker Built-in Algorithms.

SageMaker HyperPod

SageMaker HyperPod provides purpose-built infrastructure for training foundation models with enhanced reliability and performance:

- Designed for large-scale distributed training of foundation models.

- Provides fault-tolerant infrastructure with automatic recovery from instance failures.

- Supports checkpointing to resume training from the last saved state.

- Optimized for popular ML frameworks like PyTorch and TensorFlow.

- Integrates with SageMaker’s distributed training libraries for efficient scaling.

AWS ML Accelerators

AWS offers custom silicon designed specifically for machine learning workloads:

- AWS Inferentia:

  - Purpose-built acceleration for deep learning inference.

  - Delivers up to 70% lower cost per inference compared to GPU-based instances.

  - Optimized for high throughput and low latency.

  - Available through Amazon EC2 Inf1 and Inf2 instances.

- AWS Trainium:

  - Custom chips designed specifically for training deep learning models.

  - Provides up to 50% cost savings over comparable GPU-based instances.

  - Optimized for common frameworks like PyTorch and TensorFlow.

  - Available through Amazon EC2 Trn1 instances.

Note: Elastic Inference has been deprecated and replaced by AWS Inferentia. Customers using Elastic Inference should migrate to Inferentia-based instances for better performance and cost-effectiveness.

Model Quality and Responsible AI

SageMaker Clarify

SageMaker Clarify helps improve ML models by detecting potential bias and helping to explain the predictions that the models make:

- Provides explainability for complex models including deep neural networks.

- Offers comprehensive fairness metrics with customizable thresholds.

- Includes techniques for detecting and mitigating bias in training data and model predictions.

- Integrates with Model Cards for automatic documentation of fairness and explainability insights.

- Helps meet regulatory compliance requirements with pre-built reports.
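Two of the simpler pre-training bias metrics that Clarify reports can be computed by hand, which helps when reading its output. The sketch below uses the definitions as I recall them from the Clarify documentation: class imbalance (CI) compares the sizes of two facets, and difference in proportions of labels (DPL) compares their positive-label rates. The function names and the sample counts are illustrative.

```python
def class_imbalance(n_a, n_d):
    """CI = (n_a - n_d) / (n_a + n_d), ranging from -1 to 1.

    n_a: number of records in the first (often majority) facet
    n_d: number of records in the second facet
    """
    if n_a + n_d == 0:
        raise ValueError("at least one record is required")
    return (n_a - n_d) / (n_a + n_d)


def difference_in_proportions_of_labels(pos_a, n_a, pos_d, n_d):
    """DPL = q_a - q_d, where q is the share of positive labels in a facet."""
    if n_a == 0 or n_d == 0:
        raise ValueError("both facets need at least one record")
    return pos_a / n_a - pos_d / n_d


# Made-up counts: 800 vs 200 records, 400 vs 50 positive labels.
ci = class_imbalance(800, 200)
dpl = difference_in_proportions_of_labels(400, 800, 50, 200)
```

Values near zero indicate balance on that metric; the further a value moves from zero, the more one facet is over-represented or favored in the labels.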

SageMaker Model Monitor

SageMaker Model Monitor monitors the quality of SageMaker machine learning models in production:

- Provides unified monitoring of data quality, model quality, bias, and explainability.

- Offers an early warning system to detect potential issues before they impact model performance.

- Supports configurable automated responses to detected issues.

- Allows for custom domain-specific and business-oriented monitoring metrics.

- Includes specialized monitoring for foundation models, including prompt drift and output quality.

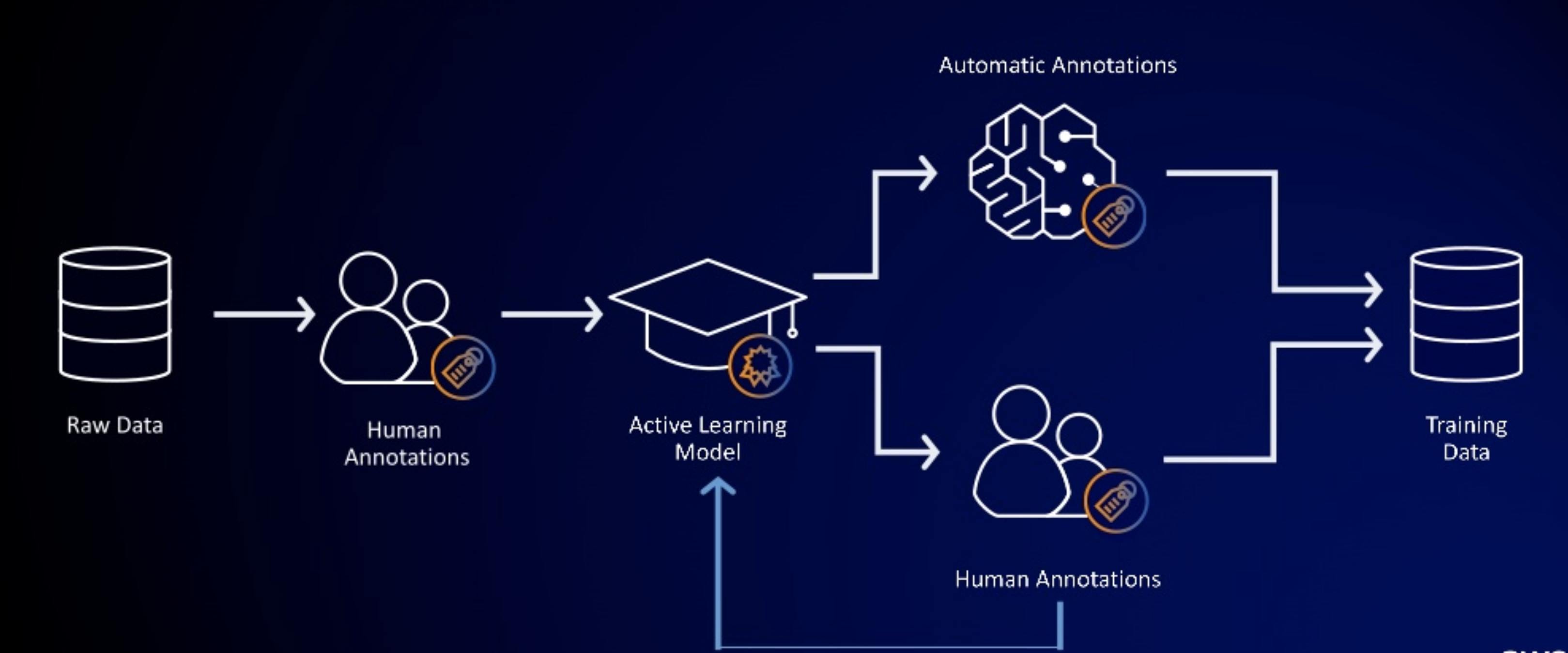

SageMaker Ground Truth

SageMaker Ground Truth provides automated data labeling using machine learning:

- Uses active learning to automate the labeling of input data for certain built-in task types.

- Supports labeling workflows for complex data types including video, audio, and multi-modal content.

- Offers quality control workflows with consensus labeling and expert review.

- Provides access to specialized workforces for domain-specific labeling tasks.

- Helps lower labeling costs by up to 70% through automation.

Automation and Experimentation

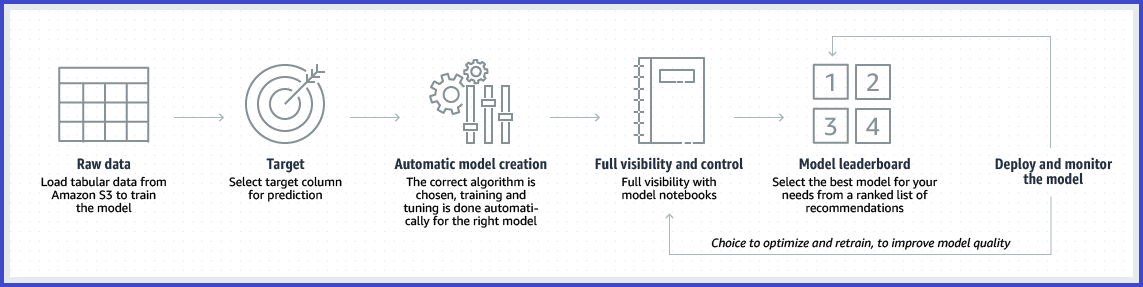

SageMaker Autopilot

SageMaker Autopilot automates the end-to-end machine learning process while maintaining transparency:

- Automatically analyzes data and selects appropriate algorithms.

- Preprocesses data and performs feature engineering.

- Trains and tunes multiple models to find the best performer.

- Generates notebooks with the code used for model creation, enabling customization.

- Provides explainability reports showing feature importance for model predictions.

SageMaker Automatic Model Tuning

SageMaker Automatic Model Tuning helps optimize hyperparameters to improve model performance:

- Uses Bayesian optimization to efficiently search the hyperparameter space.

- Supports random search and grid search strategies.

- Can leverage managed spot training to reduce costs.

- Provides warm start capability to use previous tuning jobs as starting points.

Best practices for hyperparameter tuning include:

- Limit the search space: Focus on fewer, more impactful hyperparameters.

- Choose appropriate ranges: Avoid overly broad ranges that waste computational resources.

- Use logarithmic scales for parameters that vary by orders of magnitude.

- Consider concurrent jobs carefully: While parallel jobs complete faster, sequential jobs often find better solutions.

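- Design distributed training jobs to report the objective metric you want: when a training job runs on multiple instances, tuning uses the last-reported value of the objective metric for that job.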
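Several of these practices map directly onto fields of the tuning job configuration. The sketch below builds a `HyperParameterTuningJobConfig` with a narrow search space, a logarithmic scale for the learning rate, and a cap on parallel jobs. The helper names, the metric name, and the chosen ranges are hypothetical; the field names follow the `CreateHyperParameterTuningJob` request shape as I understand it.

```python
def continuous_range(name, low, high, scaling="Auto"):
    """One ContinuousParameterRanges entry; the API takes bounds as strings."""
    if low >= high:
        raise ValueError("low must be smaller than high")
    if scaling == "Logarithmic" and low <= 0:
        raise ValueError("logarithmic scaling needs strictly positive bounds")
    return {
        "Name": name,
        "MinValue": str(low),
        "MaxValue": str(high),
        "ScalingType": scaling,
    }


def tuning_job_config(metric_name, ranges, max_jobs, max_parallel,
                      objective="Minimize", strategy="Bayesian"):
    """Assemble the tuning configuration with bounded parallelism."""
    if not 1 <= max_parallel <= max_jobs:
        raise ValueError("need 1 <= max_parallel <= max_jobs")
    return {
        "Strategy": strategy,
        "HyperParameterTuningJobObjective": {
            "Type": objective,
            "MetricName": metric_name,
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {"ContinuousParameterRanges": ranges},
    }


# A learning rate spanning three orders of magnitude, searched on a log scale.
learning_rate = continuous_range("learning_rate", 0.0001, 0.1, "Logarithmic")
config = tuning_job_config("validation:loss", [learning_rate],
                           max_jobs=20, max_parallel=2)
```

Keeping `max_parallel` small gives the Bayesian strategy more completed results to learn from before it proposes the next candidates.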

SageMaker Experiments

SageMaker Experiments helps track, organize, and compare machine learning iterations:

- Automatically captures inputs, parameters, configurations, and results for each run.

- Organizes related runs into experiments for easy comparison.

- Visualizes performance metrics across multiple runs to identify the best models.

- Integrates with SageMaker Studio for a unified experience.

- Supports collaboration by sharing experiment results with team members.

SageMaker Pipelines

SageMaker Pipelines provides a comprehensive MLOps solution:

- Enables creation and editing of ML workflows with visual tools.

- Offers pre-built templates for common ML workflows.

- Includes built-in testing frameworks for validating models before deployment.

- Provides comprehensive monitoring of pipeline execution.

- Supports ML workflows that span multiple AWS accounts for enhanced security.

SageMaker Debugger

SageMaker Debugger provides tools to debug training jobs and resolve problems:

- Automatically detects common training problems like overfitting, vanishing gradients, and exploding tensors.

- Captures metrics and tensors during training for real-time and post-training analysis.

- Sends alerts when anomalies are detected, enabling early intervention.

- Provides visualization tools to understand model behavior.

- Supports popular frameworks including TensorFlow, PyTorch, MXNet, and XGBoost.

Edge and Hybrid Deployment

SageMaker Edge

SageMaker Edge enables machine learning at the edge:

- Provides a lightweight runtime optimized for resource-constrained devices.

- Supports continuous learning on edge devices with periodic synchronization to the cloud.

- Enables intelligent workload distribution between edge devices and cloud resources.

- Offers comprehensive tools for managing and monitoring edge devices running ML models.

- Includes advanced compilation techniques that optimize models for specific edge hardware.

SageMaker Neo

SageMaker Neo enables machine learning models to train once and run anywhere in the cloud and at the edge:

- Optimizes models for a wide range of hardware platforms including CPUs, GPUs, and specialized ML accelerators.

- Provides specialized techniques for optimizing large language models and other foundation models.

- Includes advanced quantization techniques that preserve model accuracy while reducing size.

- Supports intelligent partitioning of models across multiple devices or between edge and cloud.

- Can be used with IoT Greengrass to help perform machine learning inference locally on devices.

SageMaker Data Wrangler

SageMaker Data Wrangler reduces the time it takes to aggregate and prepare data for ML:

- Simplifies the process of data preparation and feature engineering from a single visual interface.

- Supports SQL queries for data selection from various sources.

- Provides data quality and insights reports to automatically verify data quality and detect anomalies.

- Contains over 300 built-in data transformations for quick data transformation without coding.

- Creates processing jobs that can be integrated into ML pipelines.

SageMaker Pricing

SageMaker follows a pay-as-you-go pricing model with no upfront commitments:

- Users pay only for the resources they use across the ML workflow.

- Costs are based on the instance types and duration of usage for notebooks, training, and inference.

- Storage costs apply for data stored in SageMaker Feature Store, model artifacts, and other components.

- Serverless options like SageMaker Serverless Inference charge based on duration and memory configuration.

- Cost optimization features include:

  - Managed Spot Training for reduced training costs

  - Multi-model endpoints for efficient hosting of multiple models

  - Serverless Inference for pay-per-use model hosting

  - Inference Recommender for optimal instance selection

  - Auto-scaling to match resources with demand

  - SageMaker Savings Plans for committed usage discounts
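Because instance-based charges scale with instance count and duration, a back-of-the-envelope estimate is simple arithmetic. The sketch below is only that: the function name is hypothetical and the hourly rate is a made-up placeholder, not an AWS price. Actual rates vary by instance type and region.

```python
def instance_cost_usd(hourly_rate_usd, instance_count, seconds):
    """Estimated charge for instances billed by the second at an hourly rate."""
    if hourly_rate_usd < 0 or instance_count < 0 or seconds < 0:
        raise ValueError("inputs must be non-negative")
    return hourly_rate_usd * instance_count * seconds / 3600


# Placeholder rate for illustration only; look up real prices before budgeting.
PLACEHOLDER_HOURLY_RATE_USD = 1.00

# Two instances running a 30-minute training job at the placeholder rate.
estimate = instance_cost_usd(PLACEHOLDER_HOURLY_RATE_USD, 2, 30 * 60)
```

The same function covers notebooks, training jobs, and always-on endpoints; only the duration changes, which is why idle endpoints tend to dominate the bill.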

AWS Certification Exam Practice Questions

- Questions are collected from various sources and answers reflect our understanding, which may differ from yours.

- AWS services are updated frequently, so some information may become outdated.

- AWS exam questions may not always reflect the latest service updates.

- We welcome feedback and corrections to improve accuracy.

- A company has built a deep learning model and now wants to deploy it using SageMaker hosting services. For inference, they want a cost-effective option that guarantees low latency but still comes at a fraction of the cost of using a GPU instance for their endpoint. As a Machine Learning Specialist, what feature should be used?

  - Inference Pipeline

  - AWS Inferentia

  - SageMaker Ground Truth

  - SageMaker Neo

Answer: AWS Inferentia – AWS Inferentia is designed specifically for high-performance, cost-effective ML inference, providing better performance at lower cost compared to general-purpose GPU instances.

- A trading company is experimenting with different datasets, algorithms, and hyperparameters to find the best combination for a machine learning problem. The company doesn’t want to limit the number of experiments the team can perform but wants to track the several hundred to over a thousand experiments throughout the modeling effort. Which Amazon SageMaker feature should they use to help manage the team’s experiments at scale?

  - SageMaker Inference Pipeline

  - SageMaker Experiments

  - SageMaker Neo

  - SageMaker model containers

Answer: SageMaker Experiments – SageMaker Experiments is designed specifically for tracking, organizing, and comparing machine learning iterations at scale.

- A Machine Learning Specialist needs to monitor Amazon SageMaker in a production environment for analyzing records of actions taken by a user, role, or an AWS service. Which service should the Specialist use to meet these needs?

  - AWS CloudTrail

  - Amazon CloudWatch

  - AWS Systems Manager

  - AWS Config

Answer: AWS CloudTrail – CloudTrail is the appropriate service for tracking API calls and user actions, while CloudWatch is better suited for performance monitoring and metrics.