Auto Scaling & ELB

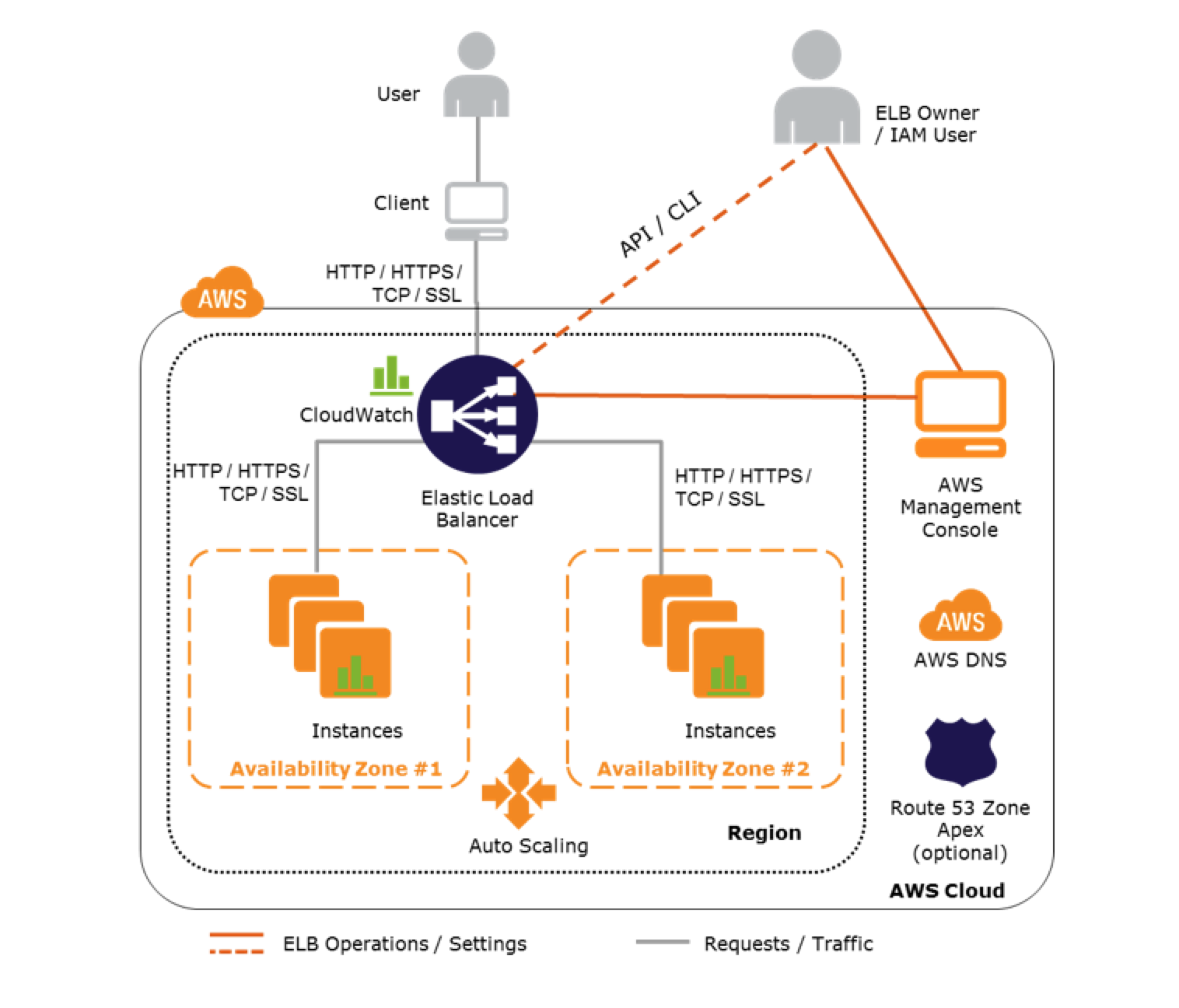

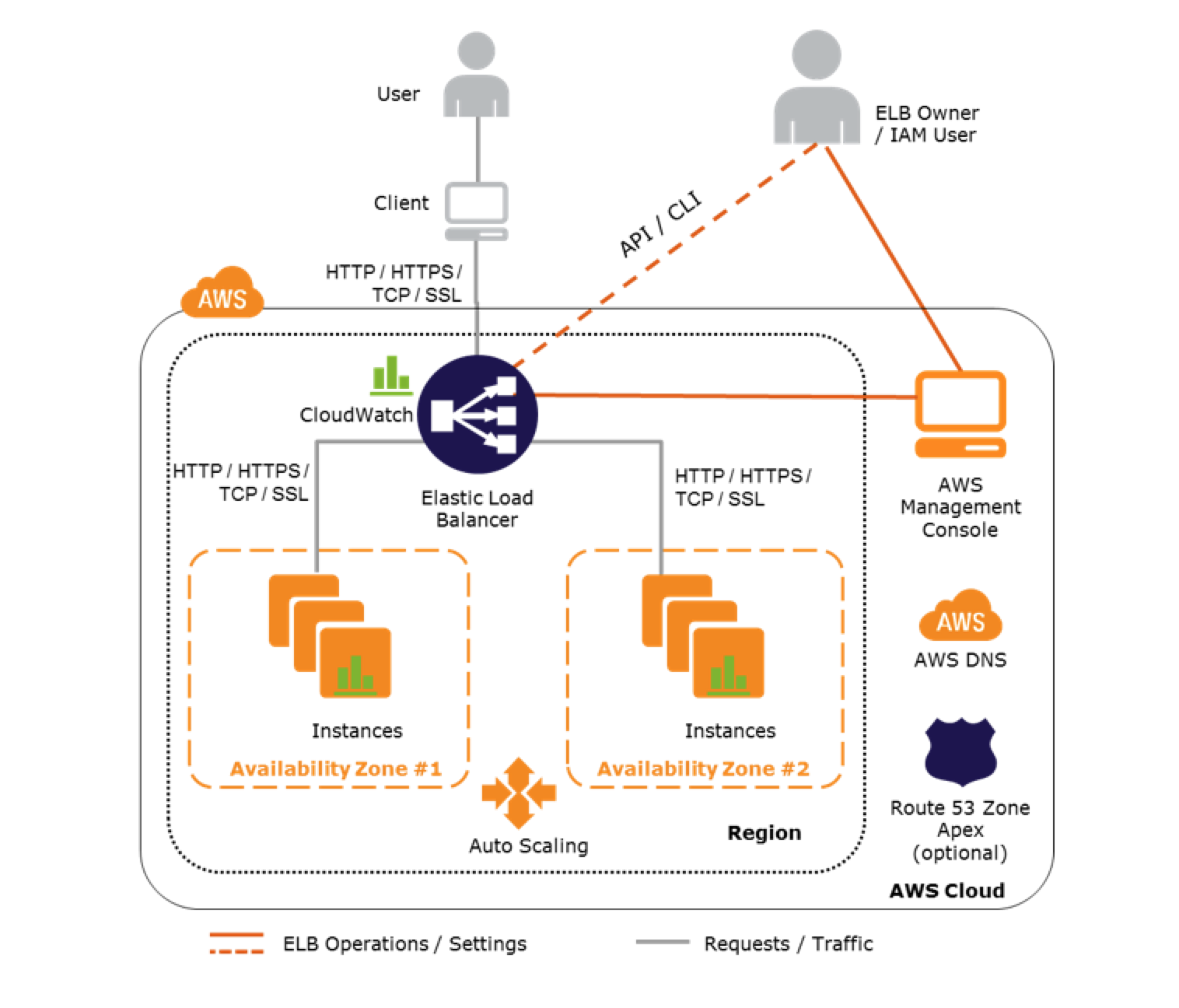

- Using ELB with Auto Scaling makes it easy to route traffic across a dynamically changing fleet of EC2 instances.

- The load balancer acts as a single point of contact for all incoming traffic to the instances in an Auto Scaling group.

- Auto Scaling dynamically adds and removes EC2 instances, while Elastic Load Balancing manages incoming requests by optimally routing traffic so that no one instance is overwhelmed

- Auto Scaling helps to automatically increase the number of EC2 instances when the user demand goes up, and decrease the number of EC2 instances when demand goes down

- The ELB service automatically distributes incoming web traffic (the load) among all the running EC2 instances.

- ELB uses load balancers to monitor traffic and handle requests that come in from the Internet.

Attaching/Detaching ELB with Auto Scaling Group

- Auto Scaling integrates with Elastic Load Balancing and enables attaching one or more load balancers to an existing Auto Scaling group.

- ELB registers the EC2 instance using its IP address and routes requests to the primary IP address of the primary interface (eth0) of the instance.

- After the ELB is attached, it automatically registers the instances in the group and distributes incoming traffic across the instances

- When ELB is detached, it enters the Removing state while deregistering the instances in the group.

- If connection draining is enabled, ELB waits for in-flight requests to complete before deregistering the instances.

- Instances remain running after they are deregistered from the ELB

- Auto Scaling adds instances to the ELB as they are launched, but this process can be suspended. Instances launched during the suspension period are not added to the load balancer, even after the process is resumed, and must be registered manually.
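The suspension behaviour described above can be sketched with a small toy model. This is only an illustration in plain Python, not the AWS API: the class `ToyAutoScalingGroup` and its method names are hypothetical, and the only rule it encodes is that registration happens at launch time if the process is active.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAutoScalingGroup:
    """Toy model (hypothetical) of an Auto Scaling group attached to one ELB."""

    add_to_lb_suspended: bool = False
    running: list = field(default_factory=list)    # every launched instance ID
    registered: set = field(default_factory=set)   # IDs registered with the ELB

    def launch(self, instance_id: str) -> None:
        self.running.append(instance_id)
        # Registration only happens at launch time, and only while the
        # AddToLoadBalancer process is active.
        if not self.add_to_lb_suspended:
            self.registered.add(instance_id)

    def suspend_add_to_lb(self) -> None:
        self.add_to_lb_suspended = True

    def resume_add_to_lb(self) -> None:
        # Resuming does not go back and register earlier launches.
        self.add_to_lb_suspended = False

    def register_manually(self, instance_id: str) -> None:
        if instance_id not in self.running:
            raise ValueError(f"unknown instance: {instance_id}")
        self.registered.add(instance_id)

    def unregistered(self) -> list:
        return [i for i in self.running if i not in self.registered]


asg = ToyAutoScalingGroup()
asg.launch("i-a")            # registered automatically
asg.suspend_add_to_lb()
asg.launch("i-b")            # launched during the suspension
asg.resume_add_to_lb()
asg.launch("i-c")            # registered automatically again
print(asg.unregistered())    # ['i-b']
asg.register_manually("i-b")
print(asg.unregistered())    # []
```

The instance launched while the process was suspended stays outside the load balancer until it is registered by hand, which is the point to remember for the exam.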

High Availability & Redundancy

- Auto Scaling can span across multiple AZs, within the same region.

- When one AZ becomes unhealthy or unavailable, Auto Scaling launches new instances in an unaffected AZ.

- When the unhealthy AZ recovers, Auto Scaling redistributes the instances evenly across all the healthy AZs.

- An Elastic Load Balancer can be set up to distribute incoming requests across EC2 instances in a single AZ or multiple AZs within a region.

- Spanning Auto Scaling groups across multiple AZs within a region, and then setting up ELB to distribute incoming traffic across those AZs, takes advantage of the safety and reliability of geographic redundancy.

- Incoming traffic is load balanced equally across all the AZs enabled for ELB.
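To make the multi-AZ idea concrete, here is a minimal sketch of spreading a desired capacity evenly over whichever AZs are currently healthy. It is a simplification under stated assumptions: the function `spread_across_azs` is hypothetical, the AZ names are just examples, and the real Auto Scaling rebalancing logic is more involved than an even split.

```python
from __future__ import annotations


def spread_across_azs(desired_capacity: int, healthy_azs: list[str]) -> dict[str, int]:
    """Split desired_capacity as evenly as possible over the healthy AZs."""
    if desired_capacity < 0:
        raise ValueError("desired_capacity must not be negative")
    if not healthy_azs:
        raise ValueError("at least one healthy AZ is required")
    base, extra = divmod(desired_capacity, len(healthy_azs))
    # The first `extra` AZs take one additional instance each.
    return {az: base + (1 if i < extra else 0) for i, az in enumerate(healthy_azs)}


all_azs = ["us-east-1a", "us-east-1b", "us-east-1c"]

# All three AZs healthy: two instances each.
print(spread_across_azs(6, all_azs))
# us-east-1c unavailable: the same capacity is carried by the remaining two AZs.
print(spread_across_azs(6, all_azs[:2]))
```

When the failed AZ comes back, calling the function with all three AZs again gives the even spread, which mirrors the redistribution described above.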

Health Checks

- Auto Scaling group determines the health state of each instance by periodically checking the results of EC2 instance status checks.

- Auto Scaling marks the instance as unhealthy and replaces the instance if the instance fails the EC2 instance status check.

- ELB also performs health checks on the EC2 instances registered with it, e.g. verifying that the application is available by pinging a health check page.

- ELB health checks should be used to ensure that traffic is routed only to healthy instances.

- By default, Auto Scaling does not replace an instance if the ELB health check fails.

- After a load balancer is registered with an Auto Scaling group, the group can be configured to use the results of the ELB health check in addition to the EC2 instance status checks to determine the health of the EC2 instances in the Auto Scaling group.
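The difference between the two health check modes can be summarised in a few lines. This is a sketch of the decision only, not AWS code: the function `is_healthy` is hypothetical, while the strings `"EC2"` and `"ELB"` correspond to the health check type values of an Auto Scaling group.

```python
def is_healthy(ec2_status_ok: bool, elb_check_ok: bool, health_check_type: str = "EC2") -> bool:
    """Decide whether Auto Scaling would treat an instance as healthy."""
    if health_check_type == "EC2":
        # Default: only the EC2 instance status checks count.
        return ec2_status_ok
    if health_check_type == "ELB":
        # ELB health check results are used in addition to the EC2 checks.
        return ec2_status_ok and elb_check_ok
    raise ValueError(f"unknown health check type: {health_check_type}")


# Instance is running fine, but the application fails the ELB health check.
print(is_healthy(True, False, "EC2"))  # True  -> instance is NOT replaced
print(is_healthy(True, False, "ELB"))  # False -> instance is replaced
```

This is why an instance can be out of service behind the load balancer and still not be terminated until the group is switched to the ELB health check type.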

Monitoring

- Elastic Load Balancing sends data about the load balancers and EC2 instances to CloudWatch. CloudWatch collects data about the performance of your resources and presents it as metrics.

- After registering one or more load balancers with the Auto Scaling group, the Auto Scaling group can be configured to use ELB metrics (such as request latency or request count) to scale the application automatically.
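As a rough illustration of metric-driven scaling, the sketch below picks the next desired capacity from an average request latency. Everything here is an assumption for the example: the function name, the thresholds, and the step size of one instance are hypothetical, and in practice the decision is made by scaling policies triggered by CloudWatch alarms rather than by your own code.

```python
def next_desired_capacity(
    current: int,
    avg_latency_s: float,
    *,
    high_latency_s: float = 1.0,   # hypothetical scale-out threshold
    low_latency_s: float = 0.2,    # hypothetical scale-in threshold
    min_size: int = 1,
    max_size: int = 10,
) -> int:
    """Return the group size after one scaling evaluation."""
    if avg_latency_s > high_latency_s:
        target = current + 1       # latency too high: add an instance
    elif avg_latency_s < low_latency_s:
        target = current - 1       # plenty of headroom: remove an instance
    else:
        target = current           # inside the comfortable band: no change
    # Never go outside the group's configured bounds.
    return max(min_size, min(max_size, target))


print(next_desired_capacity(4, 1.8))   # 5
print(next_desired_capacity(4, 0.1))   # 3
print(next_desired_capacity(4, 0.5))   # 4
print(next_desired_capacity(10, 2.5))  # 10 (already at max_size)
```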

AWS Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- AWS services are updated every day, and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep pace with AWS updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion and correction.

1. A company is building a two-tier web application to serve dynamic transaction-based content. The data tier is leveraging an Online Transactional Processing (OLTP) database. What services should you leverage to enable an elastic and scalable web tier?

    a. Elastic Load Balancing, Amazon EC2, and Auto Scaling

    b. Elastic Load Balancing, Amazon RDS with Multi-AZ, and Amazon S3

    c. Amazon RDS with Multi-AZ and Auto Scaling

    d. Amazon EC2, Amazon DynamoDB, and Amazon S3

2. You have been given a scope to deploy some AWS infrastructure for a large organization. The requirements are that you will have a lot of EC2 instances but may need to add more when the average utilization of your Amazon EC2 fleet is high and conversely remove them when CPU utilization is low. Which AWS services would be best to use to accomplish this?

    a. Amazon CloudFront, Amazon CloudWatch and Elastic Load Balancing

    b. Auto Scaling, Amazon CloudWatch and AWS CloudTrail

    c. Auto Scaling, Amazon CloudWatch and Elastic Load Balancing

    d. Auto Scaling, Amazon CloudWatch and AWS Elastic Beanstalk

3. A user has configured ELB with Auto Scaling. The user suspended the Auto Scaling AddToLoadBalancer process (which adds instances to the load balancer) for a while. What will happen to the instances launched during the suspension period?

    a. The instances will not be registered with ELB and the user has to manually register them when the process is resumed

    b. The instances will be registered with ELB only once the process has resumed

    c. Auto Scaling will not launch the instance during this period due to process suspension

    d. It is not possible to suspend only the AddToLoadBalancer process

4. You have an Auto Scaling group associated with an Elastic Load Balancer (ELB). You have noticed that instances launched via the Auto Scaling group are being marked unhealthy due to an ELB health check, but these unhealthy instances are not being terminated. What do you need to do to ensure that instances marked unhealthy by the ELB will be terminated and replaced?

    a. Change the thresholds set on the Auto Scaling group health check

    b. Add an Elastic Load Balancing health check to your Auto Scaling group

    c. Increase the value for the Health check interval set on the Elastic Load Balancer

    d. Change the health check set on the Elastic Load Balancer to use TCP rather than HTTP checks

5. You are responsible for a web application that consists of an Elastic Load Balancing (ELB) load balancer in front of an Auto Scaling group of Amazon Elastic Compute Cloud (EC2) instances. For a recent deployment of a new version of the application, a new Amazon Machine Image (AMI) was created, and the Auto Scaling group was updated with a new launch configuration that refers to this new AMI. During the deployment, you received complaints from users that the website was responding with errors. All instances passed the ELB health checks. What should you do in order to avoid errors for future deployments? (Choose 2 answers) [PROFESSIONAL]

    a. Add an Elastic Load Balancing health check to the Auto Scaling group. Set a short period for the health checks to operate as soon as possible in order to prevent premature registration of the instance to the load balancer.

    b. Enable EC2 instance CloudWatch alerts to change the launch configuration’s AMI to the previous one. Gradually terminate instances that are using the new AMI.

    c. Set the Elastic Load Balancing health check configuration to target a part of the application that fully tests application health and returns an error if the tests fail.

    d. Create a new launch configuration that refers to the new AMI, and associate it with the group. Double the size of the group, wait for the new instances to become healthy, and reduce back to the original size. If new instances do not become healthy, associate the previous launch configuration.

    e. Increase the Elastic Load Balancing Unhealthy Threshold to a higher value to prevent an unhealthy instance from going into service behind the load balancer.

6. What is the order of most-to-least rapidly-scaling (fastest to scale first)? A) EC2 + ELB + Auto Scaling B) Lambda C) RDS

    a. B, A, C (Lambda is designed to scale instantly. EC2 + ELB + Auto Scaling require single-digit minutes to scale out. RDS will take at least 15 minutes, and will apply OS patches or any other updates when applied.)

    b. C, B, A

    c. C, A, B

    d. A, C, B

7. A user has hosted an application on EC2 instances. The EC2 instances are configured with ELB and Auto Scaling. The application server session timeout is 2 hours. The user wants to configure connection draining to ensure that all in-flight requests are supported by ELB even though the instance is being deregistered. What timeout period should the user specify for connection draining?

    a. 5 minutes

    b. 1 hour (the maximum allowed is 3600 seconds, which is the closest available value to the 2-hour session timeout for keeping in-flight requests alive)

    c. 30 minutes

    d. 2 hours
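The reasoning behind the connection draining answer is simple arithmetic, sketched below. The helper `draining_timeout` is hypothetical; the only fact it relies on is the 3600-second maximum for the connection draining timeout mentioned in the answer.

```python
MAX_DRAINING_TIMEOUT_S = 3600  # maximum connection draining timeout (1 hour)


def draining_timeout(session_timeout_s: int) -> int:
    """Pick the draining timeout closest to the session timeout, capped at the maximum."""
    if session_timeout_s < 1:
        raise ValueError("timeout must be at least 1 second")
    return min(session_timeout_s, MAX_DRAINING_TIMEOUT_S)


two_hours = 2 * 60 * 60
print(draining_timeout(two_hours))  # 3600, i.e. 1 hour
print(draining_timeout(300))        # 300, short sessions need no capping
```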

Question(1)

The question says that it will be using a transactional database, so the answer should include RDS or some other DB solution, so I don’t think that (a) is the right answer. What do you think?

The key point in the question is the elastic and scalable web tier. It is not asking about the database, so #A is the right answer, as it includes the load balancer, Auto Scaling and EC2 instances.

Questions can be tricky as they may have additional information which is totally unrelated to the final question that is asked, so make sure you read the question to understand what they are trying to achieve and filter out the unrelated information

Learning a lot from these. Thank you so much 🙂

Glad its helping Shivam ..

Hi, thanks for listing the important points on AWS services from the exam point of view. I believe reading your pages would suffice to clear the main exam, rather than reading the FAQs or docs. So are the questions listed here from the main exam?

Hi Snethil, most of the questions are picked from various forums and sites and were very much part of the certification exams. Reading through them will surely help, as they cover most of what is needed to get through the exams.

Hello Jayandra

I have completed AWS Solution Architect exam with 89%. Your blog was really useful to prepare this exam. Thanks a lot !!!!

I am planning for SysOps. Do you have any blog for the same ?

Thanks

K.Senthilkumar

Congrats Santhil, that's great.

The blog covers most of the material for Solutions Architect and SysOps. The only thing missing is the CloudFormation and OpsWorks part.

Can you help answer the question below?

I believe the answer is (C), as ELB needs a separate subnet for each AZ.

You need to design a VPC for a web application consisting of an Elastic Load Balancer (ELB), a fleet of web/application servers, and an RDS database. The entire infrastructure must be distributed over 2 Availability Zones.

Which VPC configuration works while ensuring the database is not available from the Internet?

A. One public subnet for ELB, one public subnet for the web servers, and one private subnet for the database

B. One public subnet for ELB, two private subnets for the web servers, and two private subnets for RDS

C. Two public subnets for ELB, two private subnets for the web servers, and two private subnets for RDS

D. Two public subnets for ELB, two public subnets for the web servers, and two public subnets for RDS

Agreed with C. As the infrastructure needs to be in 2 AZs, ELB needs 2 subnets, with RDS kept in private subnets.

I think answer should be B. ELB can route traffic across AZs and it itself is scalable and highly available. Am I missing something?

ELB itself also launches an instance within the mapped subnets. If only one subnet (AZ) is associated with the ELB and it goes down, the ELB effectively goes down. So it is a best practice to associate the ELB with multiple subnets for high availability.

Hi Jayendra, for Q#4, why is the answer not C and D? A doesn't give any clue, but D gives more options, because the question is about deployment and the issue comes with the new AMI during deployment. Can you clarify?

Thanks Vijay, my bad, it was incorrectly marked. D gives more options, as in a blue/green-style deployment. For A, the health check is already passing, so it does not make sense.

Hi Jayendra, thank you for these resources.

Question: Your Solution Architect Associate exam blueprint doesn’t directly reference this “AWS Auto Scaling ELB” blog post/link as part of the CSA exam. However, the blueprint does reference the “AWS Auto Scaling” blogpost, and that blogpost in turn links to this “AWS Auto Scaling ELB” blogpost. There are other examples of this sort of linkage. In cases like that, does the “AWS Auto Scaling ELB” blogpost need to be studied for the exam? Or only the ones that are directly linked from the exam blueprint?

Thanks!

I have tried to link related topics together, and all the topics associated with the main topics are equally important.

For example, ELB and Auto Scaling are different topics, but they work together and lead to a bigger topic; that's why I have a separate blog post. But you need to go through it.

Make sure you go through the main topics and all associated subtopics.

Hi Jayendra,

Sorry to bother you.

Can you help answer the question below?

Which features allow you to auto scale ahead of expected load? (pick 2)

A. Metric-based

B. Health check grace period

C. Desired capacity

D. Cooldown period

E. Scheduled scaling

Thank you so much.

Scheduled scaling, which helps scale ahead of time (I think up to 30-40 days ahead). The rest are on-the-spot scaling.

Hi Jayendra,

Thank you so much.

But the question asks for 2 answers, so please help me.

Metric-based seems to be the other right option, for dynamic scaling based on events.

The rest of the options do not make sense: a change in the desired capacity would have an immediate impact, and the cooldown period and health check grace period are parameters or configurations that affect scaling.

Thanks Jayendra,

Hi Jayendra, can you please explain Q4? The health check is already configured with the ELB, so I did not get why 4b is the right answer.

The health check is configured for the ELB and is failing; however, Auto Scaling needs to use the ELB health check, in addition to the EC2 status checks, to determine if the instance is healthy. Hence the Auto Scaling group needs to be updated to use the ELB health check for terminating the instances.

Hi JP,

Thank You so much for your wonderful blog. Words cannot express the gratitude I feel. I don’t know how the overall readers feel about this but in my opinion it would really help if the answers are provided at the end like an answer key instead of highlighting the correct answers.

That way we newbies can gauge our understanding better. Anyway just my 2 cents…

Like I said what you are doing is priceless.. taking selfless to a whole new level.

Regards,

SG

Thanks SG for the feedback; I have been getting it for quite some time. I have not been able to find a proper plugin to do this. I will try to look into it once I have some time.

Thank you Jayendra! It helped me lot to prepare for my exam. Hoping to see more such blogs from you.

Glad it Helped, Hortah …

In question no. 2, why are the other options (a, b and d) not the right answer? Can you please give reasons for each option in detail?

Option A would not work, as CloudFront is a global content delivery network (CDN) service that accelerates delivery of your websites, APIs, video content or other web assets through CDN caching.

Option B is wrong, as CloudTrail is an auditing service.

Option D is wrong, as Elastic Beanstalk will not help balance load over instances.