Google Kubernetes Engine – GKE

- Google Kubernetes Engine (GKE) provides a managed environment for deploying, managing, and scaling containerized applications using Google infrastructure.

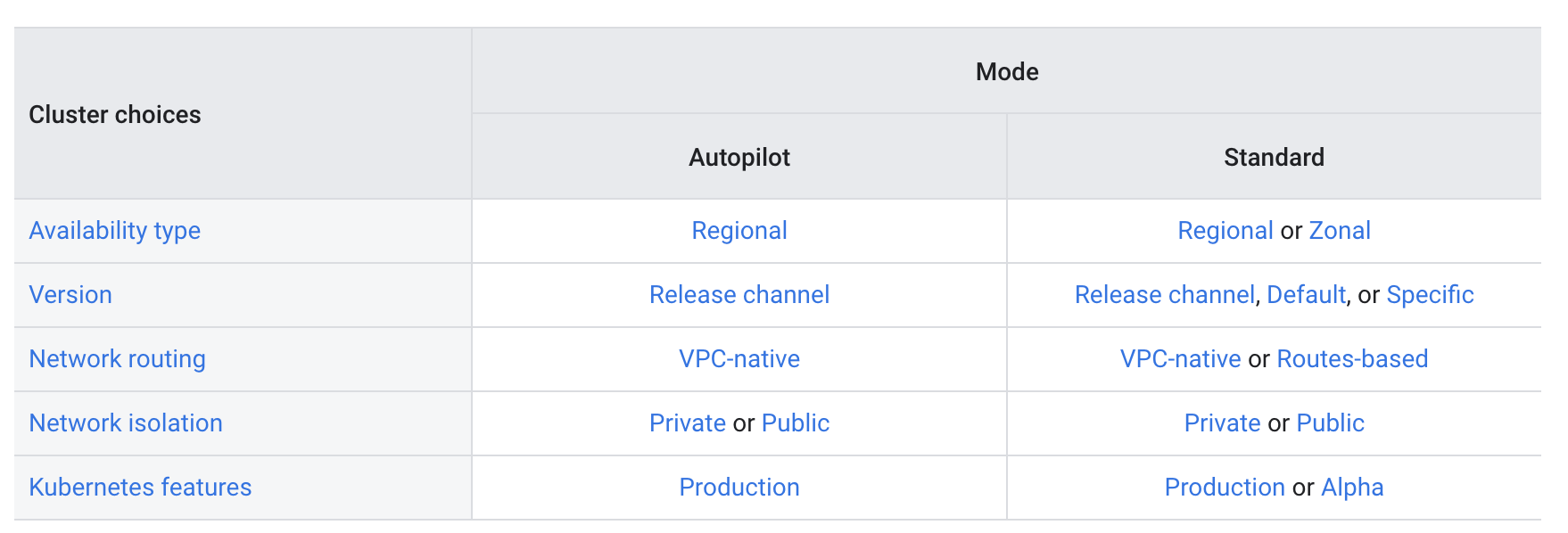

Standard vs Autopilot Cluster

- Standard

- Provides advanced configuration flexibility over the cluster’s underlying infrastructure.

- You determine the cluster configuration needed for production workloads.

- Autopilot

- Provides a fully provisioned and managed cluster configuration.

- Cluster configuration choices are made for you.

- Autopilot clusters are pre-configured with an optimized cluster configuration that is ready for production workloads.

- GKE manages the entire underlying infrastructure of the clusters, including the control plane, nodes, and all system components.
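To make the Standard/Autopilot difference concrete, here is a small Python sketch that only assembles (it does not run) the `gcloud` command line for each mode. It assumes the `gcloud container clusters create` and `gcloud container clusters create-auto` commands with the `--region`, `--machine-type`, and `--num-nodes` flags; the helper name `build_create_command` and the example values are hypothetical. The Autopilot branch deliberately rejects node-sizing arguments, since in Autopilot the node configuration is made for you.

```python
from typing import List, Optional


def build_create_command(
    name: str,
    autopilot: bool,
    region: str,
    machine_type: Optional[str] = None,
    num_nodes: Optional[int] = None,
) -> List[str]:
    """Assemble (but do not execute) a gcloud argv for a new GKE cluster."""
    if autopilot:
        # Autopilot: GKE chooses and manages the nodes, so node sizing is not ours to set.
        if machine_type is not None or num_nodes is not None:
            raise ValueError("Autopilot manages nodes; omit machine_type and num_nodes")
        return ["gcloud", "container", "clusters", "create-auto", name,
                f"--region={region}"]

    # Standard: you decide the node configuration yourself.
    cmd = ["gcloud", "container", "clusters", "create", name, f"--region={region}"]
    if machine_type is not None:
        cmd.append(f"--machine-type={machine_type}")
    if num_nodes is not None:
        cmd.append(f"--num-nodes={num_nodes}")
    return cmd
```

For example, `" ".join(build_create_command("demo", autopilot=True, region="us-central1"))` yields `gcloud container clusters create-auto demo --region=us-central1`.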

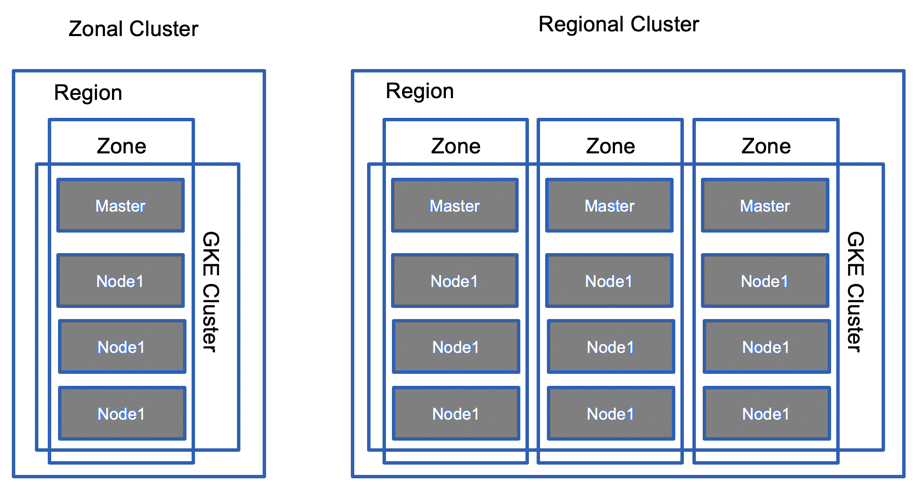

Zonal vs Regional Cluster

- Zonal clusters

- Zonal clusters have a single control plane in a single zone.

- Depending on the availability requirements, nodes for the zonal cluster can be distributed in a single zone or in multiple zones.

- Single-zone clusters

- Master -> Single Zone & Workers -> Single Zone

- A single-zone cluster has a single control plane running in one zone.

- The control plane manages workloads on nodes running in the same zone.

- Multi-zonal clusters

- Master -> Single Zone & Workers -> Multi-Zone

- A multi-zonal cluster has a single replica of the control plane running in a single zone and has nodes running in multiple zones.

- During an upgrade of the cluster or an outage of the zone where the control plane runs, workloads still run. However, the cluster, its nodes, and its workloads cannot be configured until the control plane is available.

- Multi-zonal clusters balance availability and cost for consistent workloads.

- Regional clusters

- Master -> Multi Zone & Workers -> Multi-Zone

- A regional cluster has multiple replicas of the control plane, running in multiple zones within a given region.

- Nodes also run in each zone where a replica of the control plane runs.

- Because a regional cluster replicates the control plane and nodes, it consumes more Compute Engine resources than a similar single-zone or multi-zonal cluster.
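The resource consequence of the three layouts can be sketched with a toy Python model. It assumes, as `gcloud container clusters create` does, that `--num-nodes` is a per-zone count in multi-zonal and regional clusters; the `ClusterLayout` type, the `layout` helper, and the zone names are illustrative only. The zonal variants correspond roughly to `--zone` (optionally plus `--node-locations`), and the regional variant to `--region`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClusterLayout:
    control_plane_zones: List[str]  # zones hosting a control plane replica
    node_zones: List[str]           # zones hosting worker nodes
    nodes_per_zone: int             # per-zone count, like gcloud's --num-nodes

    @property
    def total_nodes(self) -> int:
        return self.nodes_per_zone * len(self.node_zones)

    @property
    def control_plane_survives_zone_outage(self) -> bool:
        # Only a replicated (regional) control plane stays reachable if one zone fails.
        return len(self.control_plane_zones) > 1


def layout(kind: str, zones: List[str], nodes_per_zone: int) -> ClusterLayout:
    """Toy model of where the control plane and nodes run for each cluster type."""
    if kind == "single-zone":
        return ClusterLayout(zones[:1], zones[:1], nodes_per_zone)
    if kind == "multi-zonal":
        # Single control plane in the first zone, nodes in every listed zone.
        return ClusterLayout(zones[:1], list(zones), nodes_per_zone)
    if kind == "regional":
        # Control plane replicas and nodes in every listed zone.
        return ClusterLayout(list(zones), list(zones), nodes_per_zone)
    raise ValueError(f"unknown cluster kind: {kind}")
```

With three zones and `nodes_per_zone=3`, the single-zone layout has 3 nodes while the multi-zonal and regional layouts have 9, and only the regional layout keeps its control plane reachable through a zone outage, which is the extra cost the regional option pays for.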

Route-Based Cluster vs VPC-Native Cluster

Refer to the blog post Google Kubernetes Engine Networking.

Private Cluster

- Private clusters isolate nodes from inbound and outbound connectivity to the public internet by giving the nodes internal IP addresses only.

- External clients can still reach Services exposed through a load balancer by calling the external IP address of the HTTP(S) load balancer.

- Cloud NAT or a self-managed NAT gateway can provide outbound internet access for certain private nodes.

- By default, Private Google Access is enabled, which provides private nodes and their workloads with limited outbound access to Google Cloud APIs and services over Google’s private network.

- Your VPC network contains the cluster nodes, and a separate Google Cloud VPC network contains the cluster’s control plane.

- The control plane’s VPC network is located in a project controlled by Google and is connected to the cluster’s VPC network with VPC Network Peering. Traffic between nodes and the control plane is routed entirely using internal IP addresses.

- The control plane of a private cluster has a private endpoint in addition to a public endpoint.

- The access level of the control plane’s public endpoint can be controlled:

- Public endpoint access disabled

- Most secure option, as it prevents all internet access to the control plane.

- The cluster can be accessed from within the VPC, e.g. through a bastion host/jump server, or from an on-premises network connected to Google Cloud through Cloud Interconnect or Cloud VPN.

- Authorized networks must be configured for the private endpoint and can contain only internal IP addresses.

- Public endpoint access enabled, authorized networks enabled

- Provides restricted access to the control plane from defined source IP addresses

- Public endpoint access enabled, authorized networks disabled

- Default and least restrictive option.

- Publicly accessible from any source IP address as long as you authenticate.

- Regardless of the public endpoint setting, nodes always contact the control plane using the private endpoint.
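The three public-endpoint options above can be summarized as a small decision function. This is a simplified Python model, not GKE's implementation: it ignores authentication (which is always required) and the private endpoint, and the function name and example IP ranges (taken from the documentation-reserved ranges) are hypothetical. In `gcloud`, these options roughly correspond to `--enable-private-endpoint` (public endpoint access disabled) and `--enable-master-authorized-networks` with `--master-authorized-networks` (authorized networks).

```python
import ipaddress
from typing import Sequence


def public_endpoint_allows(
    source_ip: str,
    public_endpoint_enabled: bool,
    authorized_networks_enabled: bool,
    authorized_cidrs: Sequence[str] = (),
) -> bool:
    """Toy model: can an internet client at source_ip reach the public endpoint?

    Authentication is still required in every case; it is not modelled here.
    """
    if not public_endpoint_enabled:
        # Most secure option: no internet access to the control plane at all.
        return False
    if not authorized_networks_enabled:
        # Default, least restrictive option: any source IP address.
        return True
    # Restricted option: only the listed source ranges.
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in authorized_cidrs)
```

For instance, with authorized networks enabled for `203.0.113.0/24`, a client at `203.0.113.10` is allowed while one at `198.51.100.7` is not.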

Shared VPC Clusters

- Shared VPC supports both zonal and regional clusters.

- Shared VPC clusters must be VPC-native, with alias IP ranges enabled. Legacy networks are not supported.

Node Pools

- A node pool is a group of nodes within a cluster that all have the same configuration and are identical to one another.

- Node pools use a NodeConfig specification.

- Each node in the pool has the `cloud.google.com/gke-nodepool` Kubernetes node label, which has the node pool’s name as its value.

- The number and type of nodes specified during cluster creation become the default node pool. Additional custom node pools of different sizes and types can be added to the cluster, e.g. with local SSDs, GPUs, preemptible VMs, or different machine types.

- Node pools can be created, upgraded, and deleted individually without affecting the whole cluster. However, a single node in a node pool cannot be configured; any configuration change affects all nodes in the node pool.

- You can resize node pools in a cluster by adding or removing nodes using `gcloud container clusters resize CLUSTER_NAME --node-pool POOL_NAME --num-nodes NUM_NODES`.

- Existing node pools can be upgraded manually or automatically.

- For a multi-zonal or regional cluster, all of the node pools are replicated to those zones automatically. Any new node pool is automatically created or deleted in those zones.

- GKE drains all the nodes in the node pool when a node pool is deleted
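Because every node carries the `cloud.google.com/gke-nodepool` label, a workload can be pinned to one pool with a `nodeSelector`. The Python sketch below builds such a Pod manifest as a plain dictionary and assembles the resize command for a single pool; the pool, cluster, Pod, and image names are hypothetical, and depending on your `gcloud` defaults the resize command may also need `--zone` or `--region`.

```python
from typing import Dict, List

NODEPOOL_LABEL = "cloud.google.com/gke-nodepool"  # set by GKE on every node


def pod_on_pool(pod_name: str, image: str, pool: str) -> Dict:
    """Minimal Pod manifest (as a dict) that only schedules onto nodes of `pool`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "nodeSelector": {NODEPOOL_LABEL: pool},
            "containers": [{"name": pod_name, "image": image}],
        },
    }


def resize_command(cluster: str, pool: str, num_nodes: int) -> List[str]:
    """argv for resizing one node pool; other pools in the cluster are untouched."""
    return ["gcloud", "container", "clusters", "resize", cluster,
            "--node-pool", pool, "--num-nodes", str(num_nodes)]
```

Serializing the dictionary with `json.dumps` gives a manifest that `kubectl apply -f` accepts, since Kubernetes manifests may be written in JSON as well as YAML.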

Cluster Autoscaler

- GKE’s cluster autoscaler automatically resizes the number of nodes in a given node pool, based on the demands of the workloads.

- Once you specify the minimum and maximum size of the node pool, cluster autoscaler works automatically and does not require manual intervention.

- Cluster autoscaler increases or decreases the size of the node pool based on the resource requests (rather than the actual resource utilization) of Pods running on that node pool’s nodes.

- If Pods are unschedulable because there are not enough nodes in the node pool, cluster autoscaler adds nodes, up to the maximum size of the node pool.

- If nodes are under-utilized and all Pods could still be scheduled with fewer nodes in the node pool, cluster autoscaler removes nodes, down to the minimum size of the node pool. If a node cannot be drained gracefully within a timeout period (currently 10 minutes, not configurable), the node is forcibly terminated.

- Before enabling cluster autoscaler, design the workloads to tolerate potential disruption or ensure that critical Pods are not interrupted.

- Workloads might experience transient disruption with autoscaling, especially workloads running with a single replica.
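The request-based behaviour can be illustrated with a toy Python model that packs Pods by their CPU requests (first-fit decreasing) and clamps the result to the node pool's minimum and maximum size. This is not GKE's algorithm: the real autoscaler simulates the Kubernetes scheduler against all resources and constraints, and the millicore figures and function names here are hypothetical. The bounds are what you would pass to `gcloud` via `--enable-autoscaling`, `--min-nodes`, and `--max-nodes`.

```python
from typing import List, Sequence


def nodes_needed(cpu_requests_m: Sequence[int], allocatable_m: int) -> int:
    """First-fit-decreasing bin packing of CPU requests (millicores) onto nodes."""
    free: List[int] = []  # remaining allocatable CPU on each simulated node
    for req in sorted(cpu_requests_m, reverse=True):
        if req > allocatable_m:
            raise ValueError(f"a Pod requesting {req}m can never fit on a node")
        for i, remaining in enumerate(free):
            if req <= remaining:
                free[i] -= req
                break
        else:
            # No existing node has room: simulate adding a node.
            free.append(allocatable_m - req)
    return len(free)


def desired_node_count(
    cpu_requests_m: Sequence[int], allocatable_m: int, min_nodes: int, max_nodes: int
) -> int:
    """Target pool size from *requests* (not actual usage), clamped to [min, max]."""
    needed = nodes_needed(cpu_requests_m, allocatable_m)
    return max(min_nodes, min(max_nodes, needed))
```

For example, Pods requesting 1200m, 1200m, 800m, and 800m fit on two 2000m nodes, so `desired_node_count([1200, 1200, 800, 800], 2000, 1, 5)` returns 2; with `max_nodes=1` it returns 1 and the Pods that do not fit would stay pending. Note that actual CPU usage never enters the calculation.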

Auto-upgrading nodes

- Node auto-upgrades help keep the nodes in the GKE cluster up-to-date with the cluster control plane (master) version when the control plane is updated on your behalf.

- Node auto-upgrade is enabled by default when a new cluster or node pool is created with the Google Cloud console or the `gcloud` command.

- Node auto-upgrades provide several benefits:

- Lower management overhead – no need to manually track and update the nodes when the control plane is upgraded on your behalf.

- Better security – GKE automatically ensures that security updates are applied and kept up to date.

- Ease of use – provides a simple way to keep the nodes up to date with the latest Kubernetes features.

- Node pools with auto-upgrades enabled are scheduled for upgrades when they meet the selection criteria. Rollouts are phased across multiple weeks to ensure cluster and fleet stability

- During the upgrade, nodes are drained and re-created to match the current control plane version. Modifications on the boot disk of a node VM do not persist across node re-creations. To preserve modifications across node re-creation, use a DaemonSet.

- Enabling auto-upgrades does not cause the nodes to upgrade immediately
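The sketch below shows two small Python helpers: one checks whether a node pool's version trails the control plane (the situation auto-upgrade resolves), and one assembles the `gcloud container node-pools update` call that turns auto-upgrade on or off for an existing pool via `--enable-autoupgrade` / `--no-enable-autoupgrade`. The version parsing only compares the leading `MAJOR.MINOR.PATCH` numbers and ignores the `-gke.N` suffix; the helper names, cluster and pool names, and version strings are illustrative assumptions.

```python
import re
from typing import List, Optional, Tuple


def core_version(version: str) -> Tuple[int, int, int]:
    """Extract MAJOR.MINOR.PATCH from a GKE-style version like '1.29.4-gke.1043002'."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version: {version}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def node_lags_control_plane(control_plane_version: str, node_version: str) -> bool:
    """True if the nodes run an older Kubernetes version than the control plane."""
    return core_version(node_version) < core_version(control_plane_version)


def set_autoupgrade_command(
    cluster: str, pool: str, enabled: bool, region: Optional[str] = None
) -> List[str]:
    """argv to enable or disable auto-upgrade on an existing node pool."""
    flag = "--enable-autoupgrade" if enabled else "--no-enable-autoupgrade"
    cmd = ["gcloud", "container", "node-pools", "update", pool,
           f"--cluster={cluster}", flag]
    if region is not None:
        cmd.append(f"--region={region}")
    return cmd
```

Enabling the flag only makes the pool eligible; as noted above, the actual upgrade is scheduled later as part of GKE's phased rollout.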

GKE Security

GCP Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- GCP services are updated every day and both the answers and questions might be outdated soon, so research accordingly.

- GCP exam questions are not updated to keep pace with GCP updates, so even if the underlying feature has changed, the question might not be updated.

- Open to further feedback, discussion and correction.

- Your existing application running in Google Kubernetes Engine (GKE) consists of multiple pods running on four GKE `n1-standard-2` nodes. You need to deploy additional pods requiring `n2-highmem-16` nodes without any downtime. What should you do?

  - Use `gcloud container clusters upgrade`. Deploy the new services.

  - Create a new Node Pool and specify machine type `n2-highmem-16`. Deploy the new pods.

  - Create a new cluster with `n2-highmem-16` nodes. Redeploy the pods and delete the old cluster.

  - Create a new cluster with both `n1-standard-2` and `n2-highmem-16` nodes. Redeploy the pods and delete the old cluster.

- Use