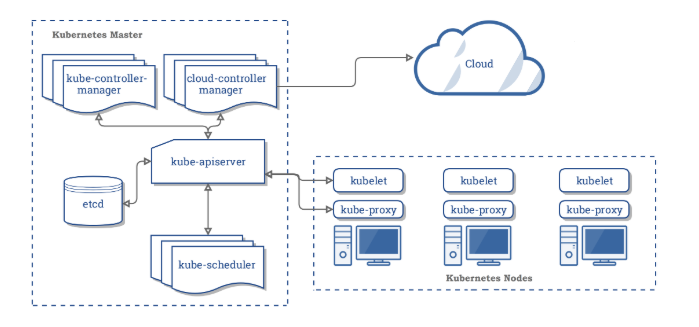

Kubernetes Architecture

- A Kubernetes cluster consists of at least one control plane and one or more worker machines, called nodes.

- Both the control planes and node instances can be physical devices, virtual machines, or instances in the cloud.

- In managed Kubernetes environments such as AWS EKS, GCP GKE, and Azure AKS, the control plane is managed by the cloud provider.

Control Plane

- The control plane is also known as a master node or head node.

- The control plane manages the worker nodes and the Pods in the cluster.

- In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault-tolerance and high availability.

- It is not recommended to run user workloads on a master node.

- The control plane's components make global decisions about the cluster, and detect and respond to cluster events.

- The control plane receives input from a CLI or UI via an API.

API Server (kube-apiserver)

- The API server exposes a REST interface to the Kubernetes cluster. It is the front end for the Kubernetes control plane.

- All operations against Pods, Services, and so forth are executed programmatically by communicating with the endpoints it provides.

- It tracks the state of all cluster components and manages the interaction between them.

- It is designed to scale horizontally.

- It consumes YAML/JSON manifest files.

- It validates and processes the requests made via API.
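
To make the REST interface more concrete, the sketch below builds the URL paths under which the API server exposes resources. The layout (`/api/<version>` for the core group, `/apis/<group>/<version>` for named groups) follows the Kubernetes API conventions. The helper name `resource_path` and the example resource names are hypothetical and used only for illustration.

```python
from typing import Optional


def resource_path(resource: str, version: str = "v1", group: str = "",
                  namespace: Optional[str] = None,
                  name: Optional[str] = None) -> str:
    """Build the REST path for a Kubernetes resource (illustrative helper)."""
    # The core group is served under /api, named groups under /apis/<group>.
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace is not None:
        # Namespaced resources are nested under their namespace.
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("pods", namespace="default"))
print(resource_path("deployments", group="apps", namespace="web", name="frontend"))
print(resource_path("nodes", name="worker-1"))
```

A client such as a CLI or UI would send HTTP requests (GET, POST, PUT, PATCH, DELETE) to paths of this shape. The API server validates each request before persisting any change.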

etcd (key-value store)

- etcd is a consistent, distributed, and highly available key-value store.

- is stateful, persistent storage that stores all of Kubernetes cluster data (cluster state and config).

- is the source of truth for the cluster.

- can be part of the control plane, or it can be configured externally.

- etcd benefits include:

  - Fully replicated: Every node in an etcd cluster has access to the full data store.

  - Highly available: etcd is designed to have no single point of failure and to gracefully tolerate hardware failures and network partitions.

  - Reliably consistent: Every data read returns the latest data write across the cluster.

  - Fast: etcd has been benchmarked at 10,000 writes per second.

  - Secure: etcd supports automatic Transport Layer Security (TLS) and optional secure socket layer (SSL) client certificate authentication.

  - Simple: Any application, from simple web apps to highly complex container orchestration engines such as Kubernetes, can read or write data to etcd using standard HTTP/JSON tools.
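
The high-availability property can be illustrated with a little arithmetic. etcd uses the Raft consensus algorithm, so a cluster must keep a majority (quorum) of its members available to accept writes. The sketch below computes the quorum size and the number of member failures a cluster can tolerate. The function names are illustrative and not part of any etcd API.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """Number of members that can fail while the cluster keeps quorum."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum={quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
```

A 4-member cluster tolerates no more failures than a 3-member one, which is why odd member counts are usually recommended.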

Scheduler (kube-scheduler)

- The scheduler is responsible for assigning work to the various nodes. It keeps watch over the resource capacity and ensures that a worker node’s performance is within an appropriate threshold.

- It schedules pods to worker nodes.

- It watches the API server for newly created Pods with no assigned node, and selects a healthy node for them to run on.

- If there are no suitable nodes, the Pods remain in the Pending state until a suitable node appears.

- It watches the API server for new work tasks.

- Factors taken into account for scheduling decisions include:

  - Individual and collective resource requirements.

  - Hardware/software/policy constraints.

  - Affinity and anti-affinity specifications.

  - Data locality.

  - Inter-workload interference.

  - Deadlines and taints.
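
The scheduling behaviour described above can be sketched as a two-step process: filter out nodes that cannot run the Pod, then score the remaining nodes and pick the best one. This is a deliberately simplified model, not the actual kube-scheduler implementation. The `Node` and `Pod` classes, the string-based taints, and the "most free CPU" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int          # unallocated CPU, in millicores
    free_memory_mib: int          # unallocated memory, in MiB
    taints: List[str] = field(default_factory=list)   # simplified: plain strings


@dataclass
class Pod:
    name: str
    cpu_millis: int               # requested CPU, in millicores
    memory_mib: int               # requested memory, in MiB
    tolerations: List[str] = field(default_factory=list)


def feasible(pod: Pod, node: Node) -> bool:
    """Filtering step: does the node satisfy the Pod's hard requirements?"""
    fits = (pod.cpu_millis <= node.free_cpu_millis
            and pod.memory_mib <= node.free_memory_mib)
    tolerated = all(t in pod.tolerations for t in node.taints)
    return fits and tolerated


def score(pod: Pod, node: Node) -> int:
    """Scoring step (toy heuristic): prefer the node with the most CPU left over."""
    return node.free_cpu_millis - pod.cpu_millis


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node name, or None if the Pod has to stay Pending."""
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=8192, taints=["gpu-only"]),
]
print(schedule(Pod("web", 250, 512), nodes))                            # node-b
print(schedule(Pod("ml", 1000, 1024, tolerations=["gpu-only"]), nodes))  # node-c
print(schedule(Pod("huge", 8000, 512), nodes))                          # None
```

The `None` result corresponds to a Pod that stays pending until a suitable node appears.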

Controller Manager (kube-controller-manager)

- The controller manager is responsible for making sure that the shared state of the cluster matches what is expected.

- It watches the desired state of the objects it manages and watches their current state through the API server.

- It takes corrective steps to make sure that the current state is the same as the desired state.

- It is a controller of controllers.

- It runs controller processes. Logically, each controller is a separate process, but to reduce complexity, they are all compiled into a single binary and run in a single process.

- Some types of controllers are:

  - Node controller: Responsible for noticing and responding when nodes go down.

  - Job controller: Watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

  - Endpoints controller: Populates the Endpoints object (that is, joins Services & Pods).

  - Service Account & Token controllers: Create default accounts and API access tokens for new namespaces.
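
The idea of comparing desired state with current state and taking corrective steps can be sketched as a single reconciliation pass. The example below is a minimal model of what a replica-style controller might compute, assuming Pods are identified by simple names. The `reconcile` function and its naming scheme are hypothetical and do not mirror the real controller code.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, running_pods: List[str],
              prefix: str) -> Dict[str, List[str]]:
    """Compare desired and current state and return the corrective actions."""
    actions: Dict[str, List[str]] = {"create": [], "delete": []}
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few Pods: pick unused names until the gap is closed.
        existing = set(running_pods)
        index = 0
        while len(actions["create"]) < diff:
            candidate = f"{prefix}-{index}"
            if candidate not in existing:
                actions["create"].append(candidate)
            index += 1
    elif diff < 0:
        # Too many Pods: remove the surplus (here simply the last ones listed).
        actions["delete"] = running_pods[diff:]
    return actions


print(reconcile(3, ["web-0"], "web"))
print(reconcile(1, ["web-0", "web-1", "web-2"], "web"))
print(reconcile(2, ["web-0", "web-1"], "web"))
```

A real controller runs such a pass repeatedly, observing current state through the API server and submitting its changes back through it.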

Cloud Controller Manager

- The cloud controller manager integrates with the underlying cloud technologies in your cluster when the cluster is running in a cloud environment.

- The cloud-controller-manager only runs controllers that are specific to your cloud provider.

- The cloud controller manager lets you link your cluster into your cloud provider's API, and separates the components that interact with that cloud platform from the components that only interact with your cluster.

- The following controllers can have cloud provider dependencies:

  - Node controller: For checking the cloud provider to determine if a node has been deleted in the cloud after it stops responding.

  - Route controller: For setting up routes in the underlying cloud infrastructure.

  - Service controller: For creating, updating, and deleting cloud provider load balancers.

Data Plane (Worker Nodes)

- The data plane consists of the worker nodes, also called compute nodes.

- A worker node is a virtual or physical machine that contains the services necessary to run containerized applications.

- A Kubernetes cluster needs at least one worker node, but normally has many.

- The worker node(s) host the Pods that are the components of the application workload.

- Pods are scheduled and orchestrated to run on nodes.

- The cluster can be scaled up and down by adding and removing nodes.

- Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment.

kubelet

- The kubelet tracks the state of a Pod to ensure that all of its containers are running and healthy.

- provides a heartbeat message every few seconds to the control plane.

- runs as an agent on each node in the cluster.

- acts as a conduit between the API server and the node.

- instantiates and executes Pods.

- watches API Server for work tasks.

- gets instructions from the control plane and reports back to it.
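
The heartbeat mechanism can be illustrated with a small check of the kind the control plane might run to notice nodes that have gone quiet. This is a sketch under stated assumptions: the function name `not_ready_nodes` is hypothetical, timestamps are plain seconds, and the 40-second grace period is an illustrative value rather than a statement about Kubernetes defaults.

```python
from typing import Dict, List


def not_ready_nodes(last_heartbeat: Dict[str, float], now: float,
                    grace_period_s: float = 40.0) -> List[str]:
    """Return the nodes whose last heartbeat is older than the grace period."""
    return sorted(name for name, seen_at in last_heartbeat.items()
                  if now - seen_at > grace_period_s)


# Last heartbeat time per node, in seconds (hypothetical values).
heartbeats = {"node-a": 100.0, "node-b": 55.0, "node-c": 98.0}
print(not_ready_nodes(heartbeats, now=105.0))  # ['node-b']
```

Here only `node-b` has been silent for longer than the grace period, so it is the only node flagged.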

kube-proxy

- kube-proxy is a networking component that routes traffic arriving at a node for a Service to the correct Pods.

- is a network proxy that runs on each node in a cluster.

- manages IP translation and routing.

- maintains network rules on nodes. These network rules allow network communication to Pods from inside or outside of the cluster.

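- relies on the cluster's Pod network, in which each Pod gets a unique IP address (assigned by the network plugin, not by kube-proxy itself).

- forwards traffic to Pod IPs; all containers in a Pod share that single IP because they share one network namespace.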

- facilitates Kubernetes networking services and load-balancing across all pods in a service.

- It deals with individual host sub-netting and ensures that the services are available to external parties.
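
Load-balancing across the Pods behind a Service can be modelled as a lookup table from a Service address to its backend Pod addresses, with one backend picked per connection. The sketch below is a user-space toy model only. In practice kube-proxy programs kernel-level rules rather than forwarding traffic itself, and the `ServiceTable` class, its methods, and the IP addresses are all hypothetical.

```python
import random
from typing import Dict, List, Optional


class ServiceTable:
    """Toy model of the Service-to-Pod mapping maintained on a node."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self._backends: Dict[str, List[str]] = {}
        self._rng = random.Random(seed)

    def set_endpoints(self, service_ip: str, pod_ips: List[str]) -> None:
        """Replace the backend list when the Service's endpoints change."""
        self._backends[service_ip] = list(pod_ips)

    def route(self, service_ip: str) -> Optional[str]:
        """Pick one backend Pod IP at random, or None if there is no backend."""
        backends = self._backends.get(service_ip)
        if not backends:
            return None
        return self._rng.choice(backends)


table = ServiceTable(seed=1)
table.set_endpoints("10.96.0.10", ["10.244.1.5", "10.244.2.7", "10.244.3.9"])
print(table.route("10.96.0.10"))   # one of the three Pod IPs
print(table.route("10.96.0.99"))   # None: no such Service
```

Random selection is only one possible policy. Round-robin or other strategies would fit the same table structure.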

Container runtime

- The container runtime is responsible for running containers (in Pods).

- Kubernetes supports any runtime that implements the Kubernetes Container Runtime Interface (CRI) specification.

- To run the containers, each worker node has a container runtime engine.

- It pulls images from a container image registry and starts and stops containers.

- Kubernetes supports several container runtimes: