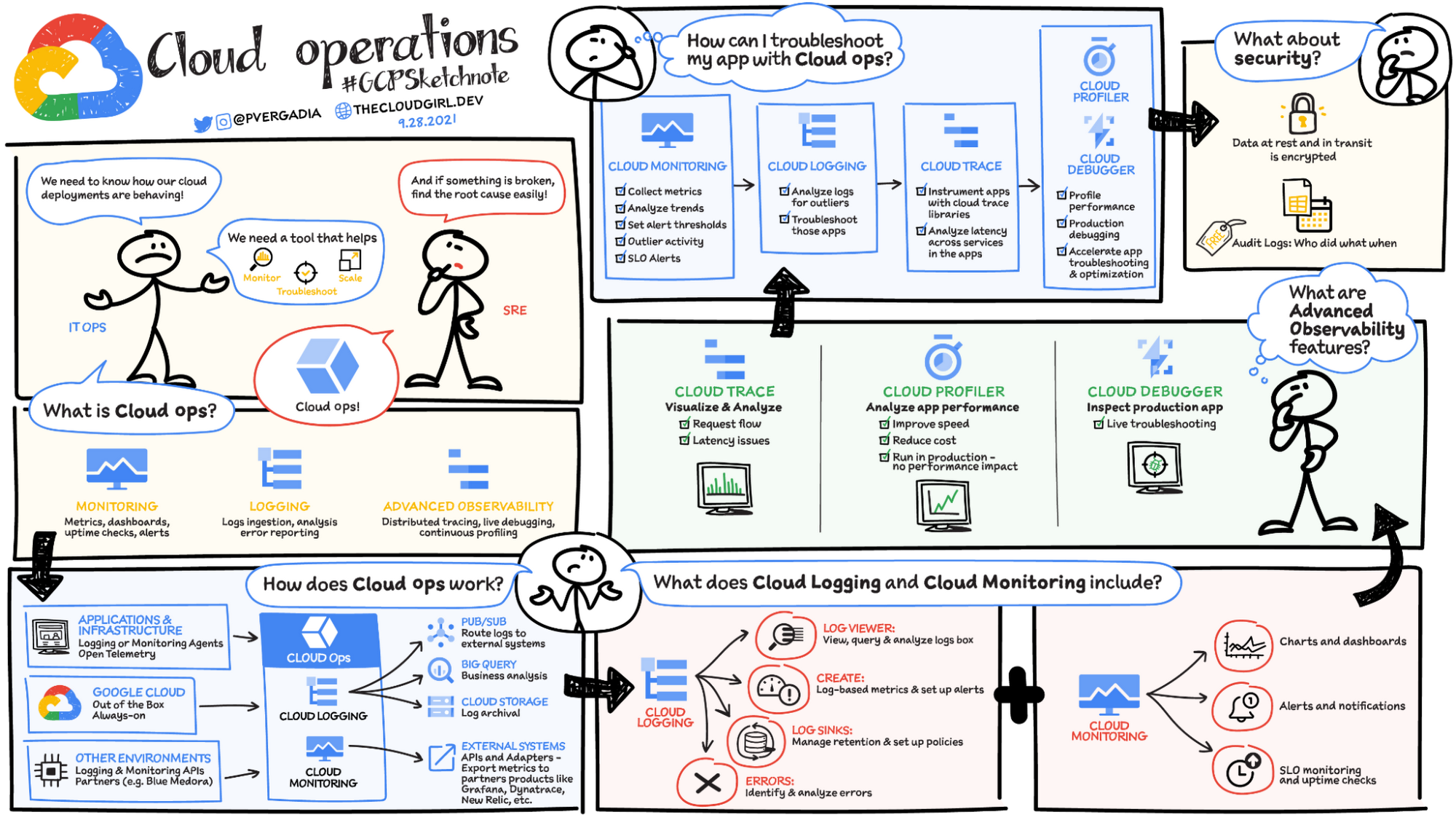

Google Cloud Operations

Google Cloud Operations provides integrated, managed monitoring, logging, and tracing services for applications and systems running on Google Cloud and beyond.

Cloud Monitoring

- Cloud Monitoring collects measurements of key aspects of your service and of the Google Cloud resources it uses.

- Cloud Monitoring provides tools to visualize and monitor this data.

- Cloud Monitoring helps you gain visibility into the performance, availability, and health of your applications and infrastructure.

- Cloud Monitoring collects metrics, events, and metadata from Google Cloud, AWS, hosted uptime probes, and application instrumentation.
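Cloud Monitoring queries can align raw metric points into fixed periods (for example with the `ALIGN_MEAN` aligner) before charting or alerting on them. The stdlib-only sketch below illustrates that idea locally. The helpers `align_mean` and `breaching_windows` are hypothetical and are not part of any Google client library.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def align_mean(points: Iterable[Tuple[float, float]], period_s: int) -> Dict[int, float]:
    """Bucket (timestamp_seconds, value) samples into fixed windows and average each one.

    Hypothetical helper that mimics a mean aligner; it is not the Cloud Monitoring API.
    """
    windows: Dict[int, List[float]] = defaultdict(list)
    for ts, value in points:
        window_start = int(ts // period_s) * period_s  # start of the window containing ts
        windows[window_start].append(value)
    return {start: mean(vals) for start, vals in sorted(windows.items())}


def breaching_windows(aligned: Dict[int, float], threshold: float) -> List[int]:
    """Hypothetical alert check: window starts whose aligned value exceeds the threshold."""
    return [start for start, value in aligned.items() if value > threshold]


if __name__ == "__main__":
    samples = [(0, 10.0), (30, 20.0), (65, 40.0), (90, 60.0), (130, 5.0)]
    aligned = align_mean(samples, period_s=60)
    print(aligned)                            # {0: 15.0, 60: 50.0, 120: 5.0}
    print(breaching_windows(aligned, 30.0))   # [60]
```

Aligning first means an alerting condition compares one value per period instead of reacting to every noisy raw sample.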

Cloud Logging

- Cloud Logging is a service for storing, viewing, and interacting with logs.

- Answers the question “Who did what, where, and when?” within your Google Cloud projects.

- Maintains tamper-proof audit logs for each project and organization.

- Log buckets are a regional resource, which means the infrastructure that stores, indexes, and searches the logs is located in a specific geographical location.
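To make “who did what, where, and when” concrete, the sketch below builds a Logging query-language filter over Admin Activity audit logs, mapping each question to an audit-log field (`principalEmail`, `methodName`, `resourceName`, `timestamp`). It is a minimal sketch: the helper `audit_log_filter`, the project ID `my-project`, and the email address are hypothetical, and the field paths should be checked against the current audit log documentation.

```python
from datetime import datetime, timezone
from typing import Optional


def audit_log_filter(project_id: str,
                     principal: Optional[str] = None,
                     method: Optional[str] = None,
                     resource: Optional[str] = None,
                     since: Optional[datetime] = None) -> str:
    """Build a filter string for Admin Activity audit logs (hypothetical helper).

    Each optional argument narrows one part of "who did what, where, and when".
    """
    clauses = [
        # Admin Activity audit log; the "/" in the log ID is URL-encoded as %2F.
        f'logName="projects/{project_id}/logs/cloudaudit.googleapis.com%2Factivity"'
    ]
    if principal:  # who
        clauses.append(f'protoPayload.authenticationInfo.principalEmail="{principal}"')
    if method:     # what
        clauses.append(f'protoPayload.methodName="{method}"')
    if resource:   # where
        clauses.append(f'protoPayload.resourceName="{resource}"')
    if since:      # when, as an RFC 3339 timestamp in UTC
        clauses.append(f'timestamp>="{since.astimezone(timezone.utc).isoformat()}"')
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(audit_log_filter("my-project",  # hypothetical project ID
                           principal="alice@example.com",
                           since=datetime(2024, 1, 1, tzinfo=timezone.utc)))
```

The resulting string can be pasted into Logs Explorer or passed as the filter argument to `gcloud logging read`.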

Error Reporting

- Error Reporting aggregates and displays errors produced in your running cloud services.

- Error Reporting provides a centralized error management interface that helps you find your application’s top or new errors so that you can fix them faster.
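The value of aggregation is that many individual error events collapse into a few groups that can be ranked (“top” errors) or flagged as never seen before (“new” errors). The sketch below illustrates that idea with a deliberately simple, hypothetical grouping key (exception type plus innermost stack frame); Error Reporting’s actual grouping logic is more sophisticated and is not reproduced here.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Set, Tuple

Signature = Tuple[str, str]


def error_signature(exc_type: str, stack: Sequence[str]) -> Signature:
    """Hypothetical grouping key: exception type plus the innermost stack frame."""
    return (exc_type, stack[-1] if stack else "<no stack>")


def summarize_errors(errors: Iterable[Tuple[str, Sequence[str]]],
                     known: Set[Signature]) -> Tuple[List[Tuple[Signature, int]], List[Signature]]:
    """Return (error groups ranked by count, groups not present in `known`)."""
    counts = Counter(error_signature(exc_type, stack) for exc_type, stack in errors)
    top = counts.most_common()                        # most frequent groups first
    new = [sig for sig in counts if sig not in known]  # first-seen groups
    return top, new


if __name__ == "__main__":
    events = [
        ("ValueError", ["main", "parse"]),
        ("ValueError", ["main", "parse"]),
        ("KeyError", ["main", "lookup"]),
    ]
    top, new = summarize_errors(events, known={("KeyError", "lookup")})
    print(top)
    print(new)
```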

Cloud Profiler

- Cloud Profiler continuously profiles CPU, heap, and other resources to help improve performance and reduce costs.

- Cloud Profiler is a continuous profiling tool designed for applications running on Google Cloud:

  - It’s a statistical, or sampling, profiler that has low overhead and is suitable for production environments.

  - It supports common languages and collects multiple profile types.

- Cloud Profiler consists of the profiling agent, which collects the data, and a console interface on Google Cloud, which lets you view and analyze the data collected by the agent.

- Cloud Profiler supports applications on Compute Engine, App Engine, and GKE, as well as applications running on-premises.
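A statistical (sampling) profiler keeps overhead low by not instrumenting every call: it periodically looks at what a thread is executing and counts the samples per function. The stdlib sketch below shows that technique in CPython; it is not how the Cloud Profiler agent is implemented. It relies on `sys._current_frames()`, which is CPython-specific and documented for specialized use only, and `hot_loop` is a hypothetical workload.

```python
import collections
import sys
import threading
import time


def sample_profile(target, interval_s: float = 0.005) -> collections.Counter:
    """Run target() on the calling thread while a background thread periodically
    records which function that thread is executing (a statistical profile)."""
    samples: collections.Counter = collections.Counter()
    target_thread = threading.get_ident()
    done = threading.Event()

    def sampler():
        while not done.is_set():
            frame = sys._current_frames().get(target_thread)  # CPython-specific
            if frame is not None:
                samples[frame.f_code.co_name] += 1  # attribute sample to innermost Python frame
            time.sleep(interval_s)

    thread = threading.Thread(target=sampler, daemon=True)
    thread.start()
    try:
        target()
    finally:
        done.set()
        thread.join()
    return samples


def hot_loop(seconds: float = 0.3) -> int:
    """Hypothetical CPU-bound workload used only for the demo."""
    deadline = time.perf_counter() + seconds
    n = 0
    while time.perf_counter() < deadline:
        n += 1
    return n


if __name__ == "__main__":
    for name, count in sample_profile(hot_loop).most_common(3):
        print(name, count)
```

Because only a few hundred stack peeks happen per second, cost stays roughly constant regardless of how many calls the application makes, which is why sampling suits production.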

Cloud Trace

- Cloud Trace is a distributed tracing system that collects latency data from your applications and displays it in the Google Cloud Console.

- Cloud Trace helps you understand how long your application takes to handle incoming requests from users or other applications, and how long it takes to complete operations, such as RPC calls, performed while handling those requests.

- Cloud Trace tracks how requests propagate through your application, so you can receive detailed, near real-time performance insights.

- Cloud Trace automatically analyzes all of your application’s traces to generate in-depth latency reports that surface performance degradations, and it can capture traces from VMs, containers, and App Engine apps.
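Requests can be followed across services because each hop forwards a trace context. Google’s legacy `X-Cloud-Trace-Context` header has the form `TRACE_ID/SPAN_ID;o=OPTIONS` (32 hex characters, a decimal span ID, and `o=1` when the request is traced); newer setups commonly use the W3C `traceparent` header instead. The sketch below parses that header and builds the one a service would forward downstream. The helper names are hypothetical, and the format details should be verified against current Cloud Trace documentation.

```python
import re
import secrets
from typing import NamedTuple, Optional

# TRACE_ID (32 hex chars) / SPAN_ID (decimal) ;o=OPTIONS (1 = traced)
_HEADER_RE = re.compile(r"^([0-9a-fA-F]{32})/(\d+)(?:;o=([01]))?$")


class TraceContext(NamedTuple):
    trace_id: str
    span_id: int
    sampled: bool


def parse_trace_header(value: str) -> Optional[TraceContext]:
    """Parse an X-Cloud-Trace-Context value; return None if it is malformed."""
    m = _HEADER_RE.match(value.strip())
    if m is None:
        return None
    trace_id, span_id, options = m.groups()
    return TraceContext(trace_id.lower(), int(span_id), options == "1")


def child_header(parent: TraceContext) -> str:
    """Header to forward downstream: same trace ID, a fresh ID for this service's span."""
    span_id = secrets.randbits(63) or 1  # non-zero ID that fits in an unsigned 64-bit integer
    return f"{parent.trace_id}/{span_id};o={1 if parent.sampled else 0}"


if __name__ == "__main__":
    incoming = parse_trace_header("105445aa7843bc8bf206b12000100000/1;o=1")
    print(incoming)
    print(child_header(incoming))
```

Keeping the trace ID constant is what lets the backend stitch spans from different services into one request timeline.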

Cloud Debugger

- Cloud Debugger helps you inspect the state of an application at any code location without stopping or slowing down the running app. Note that Cloud Debugger has since been deprecated and was shut down on May 31, 2023.

- Cloud Debugger makes it easier to view the application state without adding logging statements.

- Cloud Debugger adds less than 10 ms to request latency, and only while the application state is being captured. In most cases, users do not notice this.

- Cloud Debugger can be used with or without access to your app’s source code.

- Cloud Debugger supports Cloud Source Repositories, GitHub, Bitbucket, and GitLab as source code repositories. If your repository is not supported, you can upload the source files.

- Cloud Debugger supports collaboration: you can share a debug session by sending its Console URL.

- Cloud Debugger supports a range of IDEs.

Debug Snapshots

- Debug Snapshots capture local variables and the call stack at a specific line location in the app’s source code without stopping or slowing it down.

- You can specify conditions and locations at which a snapshot of the app’s data is captured.

- Debug Snapshots support canarying, in which the debugger agent tests the snapshot on a subset of instances.
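The snapshot idea (copy the locals and call stack the first time a given line runs with a condition true, then let execution continue) can be illustrated in plain Python with `sys.settrace`. This is only a conceptual sketch: `sys.settrace` adds noticeable overhead, whereas the Cloud Debugger agent was designed not to slow the app, and `take_snapshot`, `line_offset`, and the `checkout` function are hypothetical.

```python
import sys
import traceback
from typing import Any, Callable, Dict, Tuple


def take_snapshot(func: Callable, args: Tuple, line_offset: int,
                  condition: Callable[[Dict[str, Any]], bool] = lambda v: True):
    """Run func(*args) and, the first time the line `line_offset` lines below
    func's def line executes while condition(locals) is true, copy the locals
    and the call stack. Execution is never paused."""
    code = func.__code__
    target_line = code.co_firstlineno + line_offset
    snapshot: Dict[str, Any] = {}

    def local_tracer(frame, event, arg):
        if event == "line" and frame.f_lineno == target_line and not snapshot:
            local_vars = dict(frame.f_locals)  # copy so later mutations do not leak in
            if condition(local_vars):
                snapshot["locals"] = local_vars
                snapshot["stack"] = traceback.format_stack(frame)
        return local_tracer

    def global_tracer(frame, event, arg):
        # Only trace frames belonging to the target function.
        return local_tracer if frame.f_code is code else None

    previous = sys.gettrace()
    sys.settrace(global_tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore the previous tracer
    return result, snapshot


def checkout(prices):
    total = 0
    for price in prices:
        total += price
    return total  # line_offset=4 points here


if __name__ == "__main__":
    result, snap = take_snapshot(checkout, ([3, 4, 5],), line_offset=4,
                                 condition=lambda v: v["total"] > 10)
    print(result, snap.get("locals"))
```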

Debug Logpoints

- Debug Logpoints allow you to inject logging into running services without restarting or interfering with the normal function of the service.

- Debug Logpoints are useful for debugging production issues without having to add log statements and redeploy.

- Debug Logpoints remain active for 24 hours after creation, or until they are deleted or the service is redeployed.

- If a logpoint is placed on a line that receives a lot of traffic, Debugger throttles the logpoint to reduce its impact on the application.

- Debug Logpoints support canarying, in which the debugger agent tests the logpoints on a subset of instances.
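The three logpoint behaviors above (a 24-hour lifetime, throttling on hot lines, and canarying on a subset of instances) can be modeled in a few lines. The sketch below is hypothetical: the token-bucket throttle, its rate and burst values, and the hash-based canary selection are illustrative choices, not Cloud Debugger’s documented internals.

```python
import hashlib
import time
from typing import Callable, List, Sequence

LOGPOINT_TTL_S = 24 * 60 * 60  # logpoints stay active for 24 hours after creation


class Logpoint:
    """Hypothetical logpoint model: expires after 24 hours and uses a token
    bucket so a hot line cannot flood the logs."""

    def __init__(self, template: str, max_per_s: float = 5.0, burst: float = 5.0,
                 clock: Callable[[], float] = time.monotonic):
        self.template = template    # e.g. "cart total={total}"
        self.max_per_s = max_per_s  # refill rate (illustrative value)
        self.burst = burst          # bucket capacity (illustrative value)
        self._clock = clock
        self.created_at = clock()
        self._tokens = burst
        self._last = self.created_at
        self.emitted: List[str] = []

    def expired(self) -> bool:
        return self._clock() - self.created_at >= LOGPOINT_TTL_S

    def hit(self, **local_vars) -> bool:
        """Call when execution reaches the logpoint line; True if a message was logged."""
        if self.expired():
            return False
        now = self._clock()
        # Refill tokens for the time elapsed since the last hit, capped at the burst size.
        self._tokens = min(self.burst, self._tokens + (now - self._last) * self.max_per_s)
        self._last = now
        if self._tokens < 1:
            return False  # throttled: skip this log entry
        self._tokens -= 1
        self.emitted.append(self.template.format(**local_vars))
        return True


def canary_instances(instance_ids: Sequence[str], fraction: float = 0.1) -> List[str]:
    """Pick a small, deterministic subset of instances to try a logpoint on first."""
    ranked = sorted(instance_ids, key=lambda i: hashlib.sha256(i.encode()).hexdigest())
    return ranked[:max(1, int(len(ranked) * fraction))]


if __name__ == "__main__":
    lp = Logpoint("cart total={total}", max_per_s=1.0, burst=2.0)
    print([lp.hit(total=t) for t in (10, 20, 30)])  # third hit arrives too fast
    print(canary_instances([f"vm-{i}" for i in range(20)]))
```

Hashing instance IDs keeps the canary subset stable across calls, so the same instances are tried first each time.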