AWS Networking & Content Delivery Services Cheat Sheet

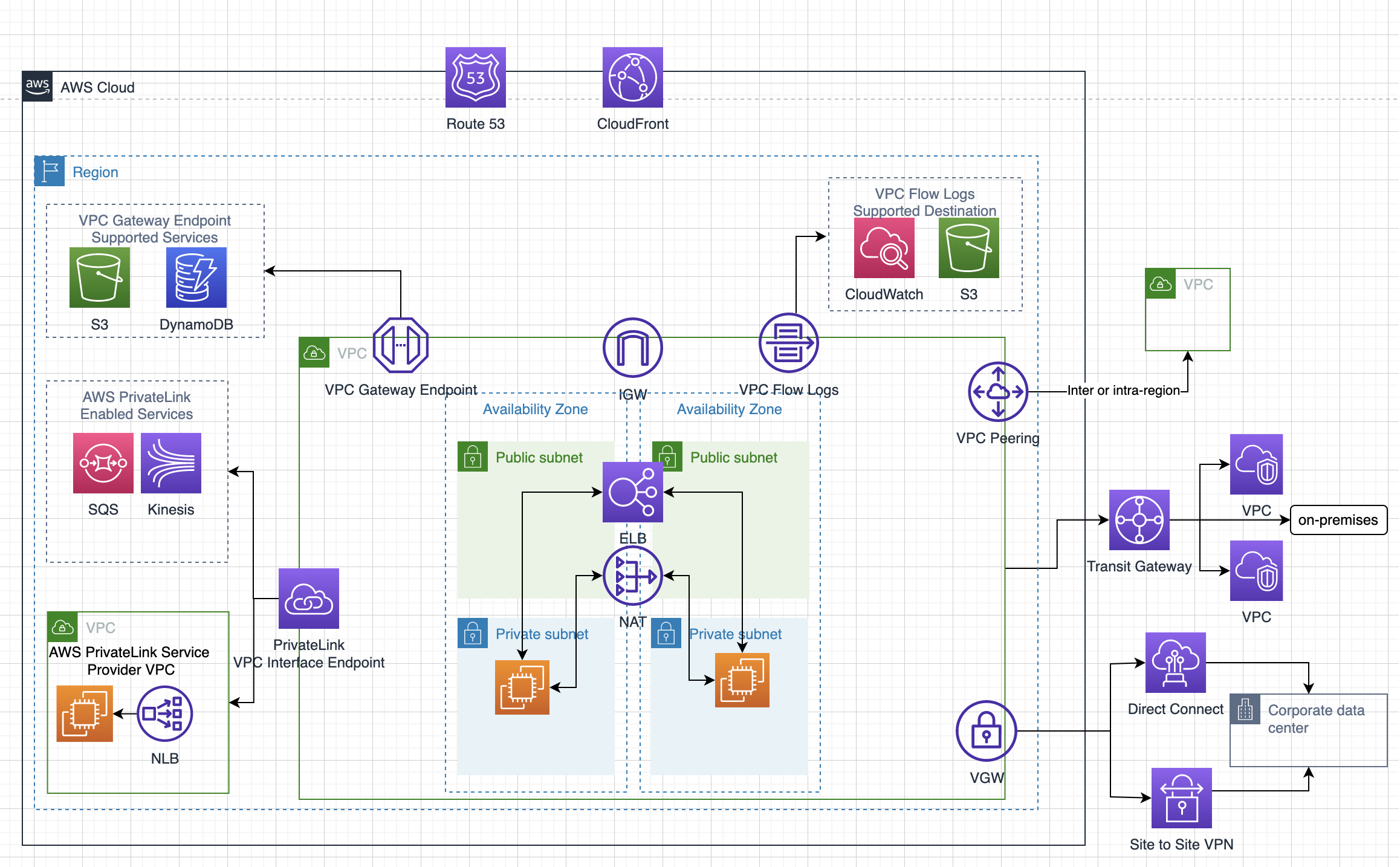

Virtual Private Cloud – VPC

- helps define a logically isolated, dedicated virtual network within AWS

- provides control of IP addressing using CIDR block from a minimum of /28 to a maximum of /16 block size

- supports IPv4 and IPv6 addressing

- can be extended after creation by associating secondary IPv4 CIDR blocks with the VPC

- Components

- Internet gateway (IGW) provides access to the Internet

- Virtual private gateway (VGW) provides access to the on-premises data center through VPN and Direct Connect connections

- A VPC can have only one IGW and one VGW attached

- Route tables determine network traffic routing from the subnet

- Ability to create a subnet with VPC CIDR block

- A Network Address Translation (NAT) server provides outbound Internet access for EC2 instances in private subnets

- Elastic IP addresses are static, persistent public IP addresses

- Instances launched in the VPC will have a Private IP address and can have a Public or an Elastic IP address associated with it

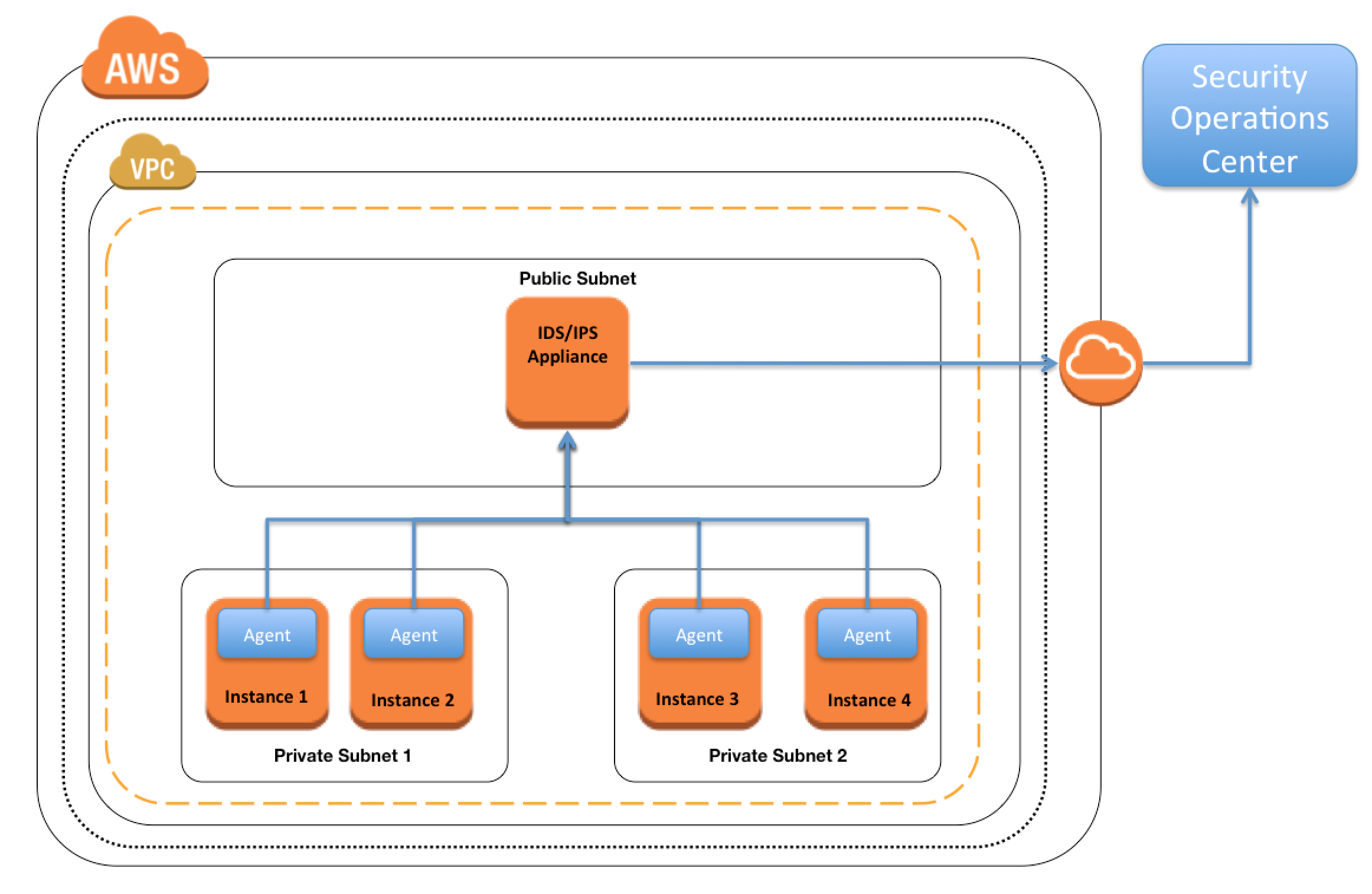

- Security Groups and NACLs help define security

- Flow logs – Capture information about the IP traffic going to and from network interfaces in your VPC

- Tenancy option for instances

- shared, by default, allows instances to be launched on shared tenancy

- dedicated allows instances to be launched on a dedicated hardware

- Route Tables

- defines rules, termed as routes, which determine where network traffic from the subnet would be routed

- Each VPC has a Main Route table and can have multiple custom route tables created

- Every route table contains a local route that enables communication within a VPC which cannot be modified or deleted

- Route priority is decided by matching the most specific route in the route table that matches the traffic
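The "most specific route wins" rule above is longest-prefix matching. A minimal sketch using Python's standard `ipaddress` module follows; the route targets (`igw-example`, `vgw-example`) and the helper name `select_route` are hypothetical placeholders, not AWS identifiers or APIs.

```python
import ipaddress


def select_route(routes, destination):
    """Return the target of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    matches = []
    for cidr, target in routes:
        network = ipaddress.ip_network(cidr)
        if dest in network:
            matches.append((network.prefixlen, target))
    if not matches:
        return None
    # The longest prefix length is the most specific match.
    return max(matches, key=lambda m: m[0])[1]


# Hypothetical route table: (destination CIDR, target).
routes = [
    ("10.0.0.0/16", "local"),
    ("172.16.0.0/12", "vgw-example"),
    ("0.0.0.0/0", "igw-example"),
]

print(select_route(routes, "10.0.1.25"))
print(select_route(routes, "172.20.1.1"))
print(select_route(routes, "8.8.8.8"))
```

Traffic to `10.0.1.25` matches both the local route and the default route, and the /16 local route is chosen because it is more specific than /0.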

- Subnets

- map to AZs and do not span across AZs

- have a CIDR range that is a portion of the whole VPC.

- CIDR ranges cannot overlap between subnets within the VPC.

- AWS reserves 5 IP addresses in each subnet – first 4 and last one

- Each subnet is associated with a route table which define its behavior

- Public subnets – inbound/outbound Internet connectivity via IGW

- Private subnets – outbound Internet connectivity via an NAT or VGW

- Protected subnets – no outbound connectivity and used for regulated workloads
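The subnet sizing rules above (five reserved addresses per subnet, subnets inside the VPC CIDR, no overlap) can be checked with the standard `ipaddress` module. This is a rough sketch; the function names and the example CIDR ranges are illustrative assumptions.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # first four addresses and the last one


def usable_addresses(cidr):
    """Number of addresses left for resources in a subnet."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def validate_subnets(vpc_cidr, subnet_cidrs):
    """Return a list of problems; an empty list means the layout is consistent."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = [ipaddress.ip_network(c) for c in subnet_cidrs]
    problems = []
    for subnet in subnets:
        if not subnet.subnet_of(vpc):
            problems.append(f"{subnet} is outside {vpc}")
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")
    return problems


print(usable_addresses("10.0.0.0/28"))  # 16 addresses minus 5 reserved
print(validate_subnets("10.0.0.0/16", ["10.0.1.0/24", "10.0.1.128/25"]))
```

The smallest allowed block, a /28, therefore leaves 11 usable addresses.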

- Elastic Network Interface (ENI)

- a default ENI, eth0, is attached to every instance and cannot be detached; one or more secondary ENIs (eth1-ethn) can be attached and detached

- has primary private, one or more secondary private, public, Elastic IP address, security groups, MAC address and source/destination check flag attributes associated

- An ENI in one subnet can be attached to an instance in the same or another subnet, in the same AZ and the same VPC

- Security group membership of an ENI can be changed

- an ENI with a pre-allocated MAC address can be used for applications with special licensing requirements

- Security Groups vs NACLs – Network Access Control Lists

- Stateful vs Stateless

- At instance level vs At subnet level

- Supports Allow rules only vs Supports both Allow and Deny rules

- Evaluated as a Whole vs Evaluated in defined Order
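The difference between "evaluated as a whole" and "evaluated in defined order" can be illustrated with a toy model. This is a simplified sketch that matches on destination port only and ignores protocol, CIDR, direction, and statefulness; the rule tuples are an assumed format for illustration, not an AWS data structure.

```python
def nacl_allows(rules, port):
    """NACL: rules are checked in ascending rule-number order; first match decides."""
    for _number, low, high, action in sorted(rules, key=lambda r: r[0]):
        if low <= port <= high:
            return action == "allow"
    return False  # nothing matched: traffic is denied


def security_group_allows(rules, port):
    """Security group: allow-only rules, considered together."""
    return any(low <= port <= high for low, high in rules)


# Hypothetical NACL: (rule number, from port, to port, action).
nacl_rules = [
    (200, 0, 65535, "allow"),
    (100, 22, 22, "deny"),
]

# Hypothetical security group: (from port, to port).
sg_rules = [(80, 80), (443, 443)]

print(nacl_allows(nacl_rules, 22))   # rule 100 is evaluated before rule 200
print(nacl_allows(nacl_rules, 443))
print(security_group_allows(sg_rules, 443))
print(security_group_allows(sg_rules, 22))
```

In the NACL, the lower-numbered deny rule for port 22 takes effect even though a broader allow rule exists, which is why rule ordering matters there and not in a security group.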

- Elastic IP

- is a static IP address designed for dynamic cloud computing.

- is associated with an AWS account, and not a particular instance

- can be remapped from one instance to another instance

- is charged when not in use, i.e. when it is not associated with any instance or the associated instance is in a stopped state

- NAT

- allows internet access to instances in the private subnets.

- performs the function of both address translation and port address translation (PAT)

- a NAT instance needs the source/destination check flag to be disabled, as it is not the actual source or destination of the traffic it forwards

- NAT gateway is an AWS managed NAT service that provides better availability, higher bandwidth, and requires less administrative effort

- NAT devices are not supported for IPv6 traffic

- NAT Gateway supports private NAT with fixed private IPs.

- Egress-Only Internet Gateways

- allows outbound communication over IPv6 from instances in the VPC to the Internet, and prevents the Internet from initiating an IPv6 connection with your instances

- supports IPv6 traffic only

- Shared VPCs

- allows multiple AWS accounts to create their application resources, such as EC2 instances, RDS databases, Redshift clusters, and AWS Lambda functions, into shared, centrally-managed VPCs

VPC Peering

- allows routing of traffic between the peer VPCs using private IP addresses with no IGW or VGW required.

- has no single point of failure or bandwidth bottleneck

- supports inter-region VPC peering

- Limitations

- IP space or CIDR blocks cannot overlap

- cannot be transitive

- supports a one-to-one relationship between two VPCs and has to be explicitly peered.

- does not support edge-to-edge routing.

- supports only one connection between any two VPCs

- Security groups from a peered VPC can be referenced; however, the peered VPC must be in the same region.
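Two of the peering limitations above, non-overlapping CIDR blocks and no transitive routing, can be expressed as simple checks. The sketch below is illustrative only; the VPC names, CIDR ranges, and function names are hypothetical.

```python
import ipaddress


def can_peer(cidr_a, cidr_b):
    """Peering requires that the two VPC CIDR blocks do not overlap."""
    return not ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


def can_route(peerings, source, destination):
    """Traffic flows only over a direct peering connection, never via a third VPC."""
    return frozenset((source, destination)) in peerings


# Hypothetical setup: A is peered with B, and B is peered with C.
peerings = {
    frozenset(("vpc-a", "vpc-b")),
    frozenset(("vpc-b", "vpc-c")),
}

print(can_peer("10.0.0.0/16", "10.1.0.0/16"))
print(can_peer("10.0.0.0/16", "10.0.128.0/17"))
print(can_route(peerings, "vpc-a", "vpc-b"))
print(can_route(peerings, "vpc-a", "vpc-c"))  # no direct peering, so no route through vpc-b
```

For A to reach C, an explicit A-to-C peering connection would have to be added.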

VPC Endpoints

- enables private connectivity from VPC to supported AWS services and VPC endpoint services powered by PrivateLink

- does not require a public IP address, access over the Internet, NAT device, a VPN connection, or Direct Connect

- traffic between VPC & AWS service does not leave the Amazon network

- are virtual devices.

- are horizontally scaled, redundant, and highly available VPC components that allow communication between instances in the VPC and services without imposing availability risks or bandwidth constraints on the network traffic.

- Gateway Endpoints

- is a gateway that is a target for a specified route in the route table, used for traffic destined to a supported AWS service.

- only S3 and DynamoDB are currently supported

- Interface Endpoints OR Private Links

- is an elastic network interface with a private IP address that serves as an entry point for traffic destined to a supported service

- supported services include AWS services, services hosted by other AWS customers and partners in their own VPCs (referred to as endpoint services), and supported AWS Marketplace partner services.

- Private Links

- provide fine-grained access control

- provides a point-to-point integration.

- supports overlapping CIDR blocks.

- supports transitive routing

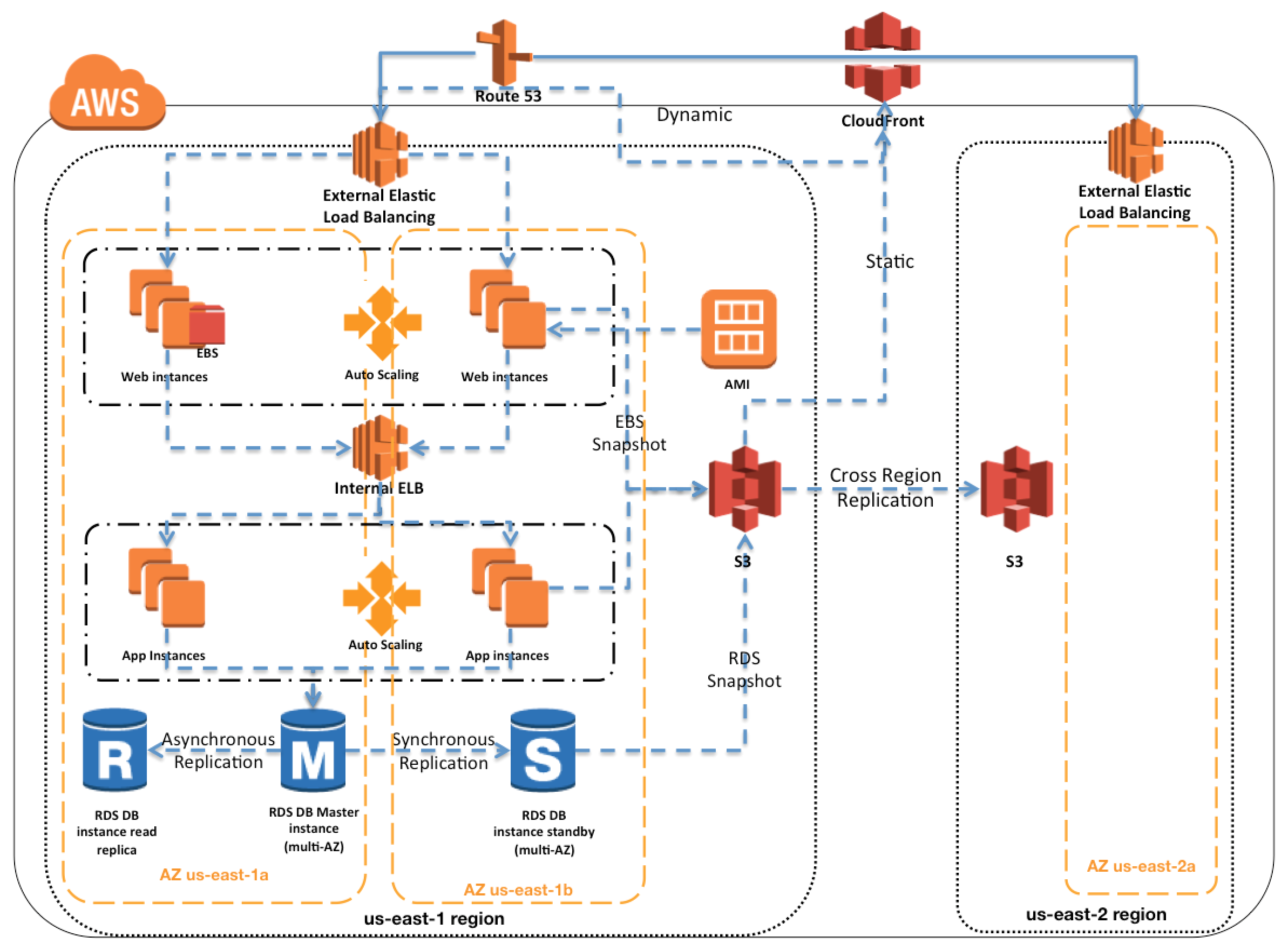

CloudFront

- provides low latency and high data transfer speeds for the distribution of static, dynamic web, or streaming content to web users.

- delivers the content through a worldwide network of data centers called Edge Locations or Points of Presence (PoPs)

- keeps persistent connections with the origin servers so that the files can be fetched from the origin servers as quickly as possible.

- dramatically reduces the number of network hops that users’ requests must pass through

- supports multiple origin server options, such as AWS-hosted services (e.g. S3, EC2, ELB) or an on-premises server, which store the original, definitive version of the objects

- a single distribution can have multiple origins, and the path pattern in each cache behavior determines which requests are routed to which origin

- Web distribution supports static, dynamic web content, on-demand using progressive download & HLS, and live streaming video content

- supports HTTPS using either

- dedicated IP address, which is expensive as a dedicated IP address is assigned to each CloudFront edge location

- Server Name Indication (SNI), which is free but is supported by modern browsers only, as the browser must send the domain name as part of the TLS handshake

- For E2E HTTPS connection,

- Viewers -> CloudFront needs either a certificate issued by CA or ACM

- CloudFront -> Origin needs a certificate issued by ACM for ELB and by CA for other origins

- Security

- Origin Access Identity (OAI) can be used to restrict the content from S3 origin to be accessible from CloudFront only

- supports Geo restriction (Geo-Blocking) to whitelist or blacklist countries that can access the content

- Signed URLs

- use to restrict access to individual files, e.g. an installation download for your application.

- use when users have a client, e.g. a custom HTTP client, that doesn’t support cookies

- Signed Cookies

- use to provide access to multiple restricted files, e.g. video part files in HLS format or all of the files in the subscribers’ area of a website.

- use when you don’t want to change the current URLs

- integrates with AWS WAF, a web application firewall that helps protect web applications from attacks by allowing rules configured based on IP addresses, HTTP headers, and custom URI strings

- supports GET, HEAD, OPTIONS, PUT, POST, PATCH, DELETE to get object & object headers, add, update, and delete objects

- only caches responses to GET and HEAD requests and, optionally, OPTIONS requests

- does not cache responses to PUT, POST, PATCH, DELETE request methods and these requests are proxied back to the origin

- object removal from the cache

- would be removed upon expiry (TTL) from the cache, by default 24 hrs

- can be invalidated explicitly, which has a cost associated; however, users might continue to see the old version until it expires from any other caches holding it

- objects can be invalidated only for Web distribution

- use versioning or change object name, to serve a different version

- supports adding or modifying custom headers before the request is sent to origin which can be used to

- validate if a user is accessing the content from CDN

- identify the CDN from which the request was forwarded, in case of multiple CloudFront distributions

- for viewers not supporting CORS to return the Access-Control-Allow-Origin header for every request

- supports Partial GET requests using range header to download objects in smaller units improving the efficiency of partial downloads and recovery from partially failed transfers

- supports compression to compress and serve compressed files when viewer requests include Accept-Encoding: gzip in the request header

- supports different price classes to include all regions, or only the least expensive regions and other regions without the most expensive regions

- supports access logs which contain detailed information about every user request for both web and RTMP distribution
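How a path pattern in a cache behavior selects an origin can be approximated with shell-style wildcards. This is a rough sketch under stated assumptions: behaviors are checked in the listed order, a default behavior catches everything else, and Python's `fnmatchcase` stands in for the pattern matcher (it also interprets `[...]`, which may not mirror CloudFront's own wildcard rules). The origin names are hypothetical.

```python
from fnmatch import fnmatchcase


def pick_origin(behaviors, default_origin, path):
    """Return the origin of the first behavior whose path pattern matches."""
    for pattern, origin in behaviors:
        if fnmatchcase(path, pattern):
            return origin
    return default_origin


# Hypothetical cache behaviors: (path pattern, origin name), in priority order.
behaviors = [
    ("/images/*", "s3-static-origin"),
    ("/api/*", "elb-api-origin"),
]

for request_path in ("/images/logo.png", "/api/v1/users", "/index.html"):
    print(request_path, "->", pick_origin(behaviors, "web-default-origin", request_path))
```

Requests that match no listed pattern, such as `/index.html` here, fall through to the default origin.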

AWS VPN

- AWS Site-to-Site VPN provides secure IPSec connections from on-premise computers or services to AWS over the Internet

- is cheap and quick to set up; however, it depends on the Internet speed

- delivers high availability by using two tunnels across multiple Availability Zones within the AWS global network

- VPN requires a Virtual Private Gateway (VGW) and a Customer Gateway (CGW) for communication

- VPN connection is terminated on VGW on AWS

- Only one VGW can be attached to a VPC at a time

- VGW supports both static and dynamic routing using Border Gateway Protocol (BGP)

- VGW supports AES-256 and SHA-2 for data encryption and integrity

- AWS Client VPN is a managed client-based VPN service that enables secure access to AWS resources and resources in the on-premises network.

- AWS VPN does not allow accessing the Internet through IGW or NAT Gateway, peered VPC resources, or VPC Gateway Endpoints from on-premises.

- AWS VPN allows accessing the Internet through a NAT Instance, and accessing VPC Interface Endpoints, from on-premises.

Direct Connect

- is a network service that uses a private dedicated network connection to connect to AWS services.

- helps reduce costs (long term), increases bandwidth, and provides a more consistent network experience than internet-based connections.

- supports Dedicated and Hosted connections

- Dedicated connection is made through a 1 Gbps, 10 Gbps, or 100 Gbps Ethernet port dedicated to a single customer.

- Hosted connections are sourced from an AWS Direct Connect Partner that has a network link between themselves and AWS.

- provides Virtual Interfaces

- Private VIF to access instances within a VPC via VGW

- Public VIF to access non VPC services

- requires time to set up, possibly months, and should not be considered as an option if a short turnaround time is needed

- does not provide redundancy by itself; use either a second Direct Connect connection or an IPSec VPN connection as a backup

- Virtual Private Gateway is on the AWS side and Customer Gateway is on the Customer side

- route propagation is enabled on VGW and not on CGW

- A link aggregation group (LAG) is a logical interface that uses the link aggregation control protocol (LACP) to aggregate multiple dedicated connections at a single AWS Direct Connect endpoint and treat them as a single, managed connection

- Direct Connect vs VPN IPSec

- Expensive to Setup and Takes time vs Cheap & Immediate

- Dedicated private connections vs Internet

- Reduced data transfer rate vs Internet data transfer cost

- Consistent performance vs Internet inherent variability

- Does not provide Redundancy vs Provides Redundancy

Route 53

- provides highly available and scalable DNS, Domain Registration Service, and health-checking web services

- Reliable and cost-effective way to route end users to Internet applications

- Supports multi-region and backup architectures for High availability. ELB is limited to region and does not support multi-region HA architecture.

- supports private Intranet facing DNS service

- internal resource record sets only work for requests originating from within the VPC and currently cannot extend to on-premise

- Global propagation of any changes made to the DNS records within ~ 1min

- supports Alias resource record sets, which are a Route 53 extension to DNS.

- It’s similar to a CNAME resource record set, but can be created both for the root domain (zone apex), e.g. example.com, and for subdomains, e.g. www.example.com.

- supports ELB load balancers, CloudFront distributions, Elastic Beanstalk environments, API Gateways, VPC interface endpoints, and S3 buckets that are configured as websites.

- CNAME resource record sets can be created only for subdomains and cannot be mapped to the zone apex record

- supports Private DNS to provide an authoritative DNS within the VPCs without exposing the DNS records (including the name of the resource and its IP addresses) to the Internet.

- Split-view (Split-horizon) DNS enables mapping the same domain publicly and privately. Requests are routed as per the origin.

- Routing policy

- Simple routing – simple round-robin policy

- Weighted routing – assign weights to resource records sets to specify the proportion for e.g. 80%:20%

- Latency based routing – helps improve global applications by sending requests to the location with the lowest latency; because it is based purely on latency, it cannot guarantee that users from the same geography are always served from the same location, e.g. for compliance reasons

- Geolocation routing – specify geographic locations by continent, country, or state (US only); depends on the accuracy of IP-to-location mapping

- Geoproximity routing policy – Use to route traffic based on the location of the resources and, optionally, shift traffic from resources in one location to resources in another.

- Multivalue answer routing policy – Use to respond to DNS queries with up to eight healthy records selected at random.

- Failover routing – failover to a backup site if the primary site fails and becomes unreachable

- Weighted, Latency and Geolocation can be used for Active-Active while Failover routing can be used for Active-Passive multi-region architecture
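For weighted routing, the expected share of traffic for each record is its weight divided by the sum of all weights. A minimal sketch follows, assuming a positive total weight; the record names are hypothetical and the function is an illustration, not a Route 53 API.

```python
def weighted_shares(weights):
    """Map each record name to its expected fraction of DNS responses."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("this sketch assumes a positive total weight")
    return {name: weight / total for name, weight in weights.items()}


# Hypothetical records mirroring the 80%:20% split mentioned above.
shares = weighted_shares({"blue": 80, "green": 20})
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
```

Only the ratio matters: weights of 4 and 1 give the same split as 80 and 20.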

- Traffic Flow is an easy-to-use and cost-effective global traffic management service. Traffic Flow supports versioning and helps create policies that route traffic based on the constraints they care most about, including latency, endpoint health, load, geoproximity, and geography.

- Route 53 Resolver is a regional DNS service that helps with hybrid DNS

- Inbound Endpoints are used to resolve DNS queries from an on-premises network to AWS

- Outbound Endpoints are used to resolve DNS queries from AWS to an on-premises network

AWS Global Accelerator

- is a networking service that helps you improve the availability and performance of the applications to global users.

- utilizes the Amazon global backbone network, improving the performance of the applications by lowering first-byte latency, and jitter, and increasing throughput as compared to the public internet.

- provides two static IP addresses serviced by independent network zones that provide a fixed entry point to the applications and eliminate the complexity of managing specific IP addresses for different AWS Regions and AZs.

- always routes user traffic to the optimal endpoint based on performance, reacting instantly to changes in application health, the user’s location, and configured policies

- improves performance for a wide range of applications over TCP or UDP by proxying packets at the edge to applications running in one or more AWS Regions.

- is a good fit for non-HTTP use cases, such as gaming (UDP), IoT (MQTT), or Voice over IP, as well as for HTTP use cases that specifically require static IP addresses or deterministic, fast regional failover.

- integrates with AWS Shield for DDoS protection

Transit Gateway – TGW

- is a highly available and scalable service to consolidate the AWS VPC routing configuration for a region with a hub-and-spoke architecture.

- acts as a Regional virtual router and is a network transit hub that can be used to interconnect VPCs and on-premises networks.

- traffic always stays on the global AWS backbone, data is automatically encrypted, and never traverses the public internet, thereby reducing threat vectors, such as common exploits and DDoS attacks.

- is a Regional resource and can connect VPCs within the same AWS Region.

- TGWs across the same or different regions can peer with each other.

- provides simpler VPC-to-VPC communication management than VPC Peering when there is a large number of VPCs.

- scales elastically based on the volume of network traffic.
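The management benefit over VPC Peering comes from simple arithmetic: because peering is one-to-one and non-transitive, fully connecting n VPCs needs n(n-1)/2 peering connections, whereas a hub-and-spoke design needs one attachment per VPC. The sketch below only does this counting; the function names are illustrative.

```python
def full_mesh_peerings(vpc_count):
    """Peering connections needed so every VPC can reach every other VPC."""
    return vpc_count * (vpc_count - 1) // 2


def transit_gateway_attachments(vpc_count):
    """Attachments needed when every VPC connects to a single hub."""
    return vpc_count


for n in (3, 10, 50):
    print(n, full_mesh_peerings(n), transit_gateway_attachments(n))
```

With 10 VPCs, a full mesh needs 45 peering connections compared with 10 attachments to the hub.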